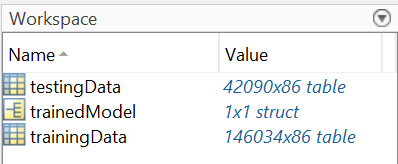

Variables (each 146034×1):

| Variable | Type | Min | Median | Max |
|---|---|---|---|---|
| `lat` | double | 27 | 42 | 49 |
| `lon` | double | 236 | 252 | 266 |
| `start_date` | datetime | 01-Jan-2016 00:00:00 | 01-Jul-2016 12:00:00 | 31-Dec-2016 00:00:00 |
| `cancm3_0_x` | double | -12.902 | 10.535 | 36.077 |
| `cancm4_0_x` | double | -13.276 | 12.512 | 35.795 |
| `ccsm3_0_x` | double | -11.75 | 10.477 | 32.974 |
| `ccsm4_0_x` | double | -13.264 | 12.315 | 34.311 |
| `cfsv2_0_x` | double | -11.175 | 11.34 | 35.749 |
| `gfdl-flor-a_0_x` | double | -12.85 | 11.831 | 37.416 |
| `gfdl-flor-b_0_x` | double | -13.52 | 11.837 | 37.34 |
| `gfdl_0_x` | double | -11.165 | 10.771 | 36.117 |
| `nasa_0_x` | double | -19.526 | 14.021 | 38.22 |
| `nmme0_mean_x` | double | -12.194 | 11.893 | 34.879 |
| `cancm3_x` | double | -12.969 | 9.9291 | 36.235 |
| `cancm4_x` | double | -12.483 | 12.194 | 38.378 |
| `ccsm3_x` | double | -13.033 | 10.368 | 33.42 |
| `ccsm4_x` | double | -14.28 | 12.254 | 34.957 |
| `cfsv2_x` | double | -14.683 | 10.897 | 35.795 |
| `gfdl_x` | double | -9.8741 | 10.476 | 35.95 |
| `gfdl-flor-a_x` | double | -13.021 | 11.15 | 37.834 |
| `gfdl-flor-b_x` | double | -12.557 | 11.117 | 37.192 |
| `nasa_x` | double | -21.764 | 13.721 | 38.154 |
| `nmme_mean_x` | double | -13.042 | 11.354 | 35.169 |
| `cancm3_y` | double | 0.075757 | 18.56 | 124.58 |
| `cancm4_y` | double | 0.02538 | 16.296 | 137.78 |
| `ccsm3_y` | double | 4.5927e-05 | 24.278 | 126.36 |
| `ccsm4_y` | double | 0.096667 | 24.455 | 204.37 |
| `cfsv2_y` | double | 0.074655 | 25.91 | 156.7 |
| `gfdl_y` | double | 0.0046441 | 20.49 | 133.88 |
| `gfdl-flor-a_y` | double | 0.0044707 | 20.438 | 195.32 |
| `gfdl-flor-b_y` | double | 0.0095625 | 20.443 | 187.15 |
| `nasa_y` | double | 1.9478e-05 | 17.98 | 164.94 |
| `nmme_mean_y` | double | 0.2073 | 21.494 | 132 |
| `cancm3_0_y` | double | 0.016023 | 19.365 | 139.94 |
| `cancm4_0_y` | double | 0.016112 | 17.354 | 160.04 |
| `ccsm3_0_y` | double | 0.00043188 | 21.729 | 144.19 |
| `ccsm4_0_y` | double | 0.02979 | 23.642 | 151.3 |
| `cfsv2_0_y` | double | 0.01827 | 25.095 | 176.15 |
| `gfdl-flor-a_0_y` | double | 0.0058198 | 17.634 | 184.7 |
| `gfdl-flor-b_0_y` | double | 0.0045824 | 16.937 | 194.19 |
| `gfdl_0_y` | double | 0.0030585 | 19.379 | 140.16 |
| `nasa_0_y` | double | 0.00051379 | 17.81 | 167.31 |
| `nmme0_mean_y` | double | 0.061258 | 20.697 | 140.1 |
| `cancm3_0_x_1` | double | 0.016023 | 19.436 | 139.94 |
| `cancm4_0_x_1` | double | 0.016112 | 17.261 | 160.04 |
| `ccsm3_0_x_1` | double | 0.00043188 | 21.75 | 144.19 |
| `ccsm4_0_x_1` | double | 0.02979 | 23.45 | 231.72 |
| `cfsv2_0_x_1` | double | 0.01827 | 25.096 | 176.15 |
| `gfdl-flor-a_0_x_1` | double | 0.0058198 | 17.617 | 217.6 |
| `gfdl-flor-b_0_x_1` | double | 0.0045824 | 16.915 | 195.06 |
| `gfdl_0_x_1` | double | 0.0030585 | 19.411 | 140.16 |
| `nasa_0_x_1` | double | 0.00051379 | 17.733 | 180.77 |
| `nmme0_mean_x_1` | double | 0.061258 | 20.67 | 140.1 |
| `tmp2m` | double | -21.031 | 12.742 | 37.239 |
| `cancm3_x_1` | double | 0.075757 | 18.649 | 124.58 |
| `cancm4_x_1` | double | 0.02538 | 16.588 | 116.86 |
| `ccsm3_x_1` | double | 4.5927e-05 | 25.242 | 134.15 |
| `ccsm4_x_1` | double | 0.21704 | 24.674 | 204.37 |
| `cfsv2_x_1` | double | 0.028539 | 26.282 | 154.39 |
| `gfdl_x_1` | double | 0.0046441 | 21.028 | 142.5 |
| `gfdl-flor-a_x_1` | double | 0.0044707 | 21.322 | 187.57 |
| `gfdl-flor-b_x_1` | double | 0.0095625 | 21.444 | 193.19 |
| `nasa_x_1` | double | 1.9478e-05 | 17.963 | 183.71 |
| `nmme_mean_x_1` | double | 0.24096 | 21.881 | 124.19 |
| `cancm3_y_1` | double | -11.839 | 10.067 | 36.235 |
| `cancm4_y_1` | double | -11.809 | 12.179 | 38.378 |
| `ccsm3_y_1` | double | -11.662 | 10.552 | 33.171 |
| `ccsm4_y_1` | double | -14.66 | 12.254 | 34.891 |
| `cfsv2_y_1` | double | -14.519 | 10.99 | 35.795 |
| `gfdl_y_1` | double | -10.906 | 10.555 | 35.95 |
| `gfdl-flor-a_y_1` | double | -12.995 | 11.24 | 37.834 |
| `gfdl-flor-b_y_1` | double | -12.899 | 11.255 | 37.192 |
| `nasa_y_1` | double | -21.459 | 13.768 | 38.154 |
| `nmme_mean_y_1` | double | -13.219 | 11.462 | 35.169 |
| `cancm3_0_y_1` | double | -12.902 | 10.475 | 36.077 |
| `cancm4_0_y_1` | double | -13.276 | 12.385 | 35.795 |
| `ccsm3_0_y_1` | double | -9.4298 | 10.452 | 32.974 |
| `ccsm4_0_y_1` | double | -12.54 | 12.237 | 34.311 |
| `cfsv2_0_y_1` | double | -10.862 | 11.315 | 35.749 |
| `gfdl-flor-a_0_y_1` | double | -12.85 | 11.831 | 37.416 |
| `gfdl-flor-b_0_y_1` | double | -13.52 | 11.842 | 37.34 |
| `gfdl_0_y_1` | double | -9.2018 | 10.658 | 36.117 |
| `nasa_0_y_1` | double | -19.526 | 14.002 | 38.22 |
| `nmme0_mean_y_1` | double | -12.194 | 11.861 | 34.879 |

