Overcoming 4 Key Challenges in Cobot Software Development

Close your eyes and picture a collaborative robot (cobot) in action. What do you see? For me, it’s a robot skillfully preparing and serving drinks, a sight that captivated me during a visit to a restaurant in Las Vegas.

The possibilities for cobots open up once you remove the limitations of traditional robots: repetitive tasks only, manual programming, and a lack of environmental awareness. Cobots emerged as an answer to these limitations, enabling customized workflows and working safely alongside humans in smart factories, hospitals, and restaurants. For example, Yaskawa Electric Corp. in Japan used its Motoman (MotoMINI) robot for a pick-and-place system enabled by voice-driven control, object detection, and path planning.

Developing cobot workflows that seamlessly integrate perception and motion is as hard as it sounds, which is one reason traditional robots still exist despite the advantages cobots offer for automation. In this blog post, we will explore 4 common challenges faced by cobot developers and provide insights on how to overcome them:

- Evaluating performance and operability of the designed model (plant/controller) in 3D simulation

- Designing autonomy algorithms for perception, planning and control and enabling AI for decision-making

- Processing, visualizing, and analyzing sensor data and designing sensor fusion algorithms

- Ensuring safety of humans working with and alongside cobots

Interested in learning more? Visit the Robot Manipulators Solutions page and register for our upcoming webinars.

Universal Robot enabled with MATLAB

1. Evaluating performance and operability of the designed model (plant/controller) in 3D simulation

3D simulations allow developers to test their models before deploying them in the real world. However, accurately capturing the dynamics of the robot, its environment, and the objects it interacts with can be complex.

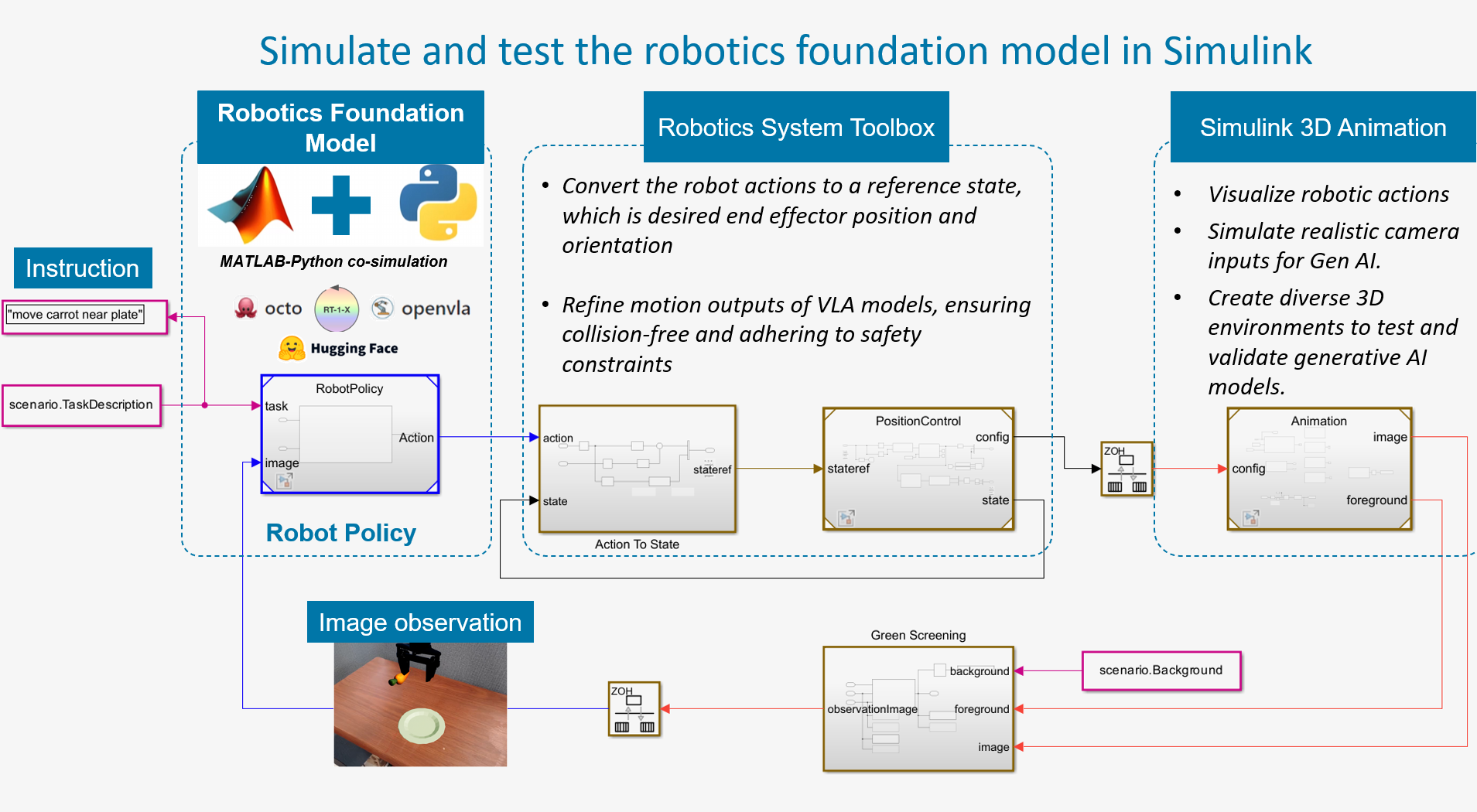

MATLAB and Robotics System Toolbox provide scalable, multi-fidelity simulation solutions by combining Model-Based Design tools. In addition, MATLAB and Simulink offer an integrated environment that interfaces with third-party simulators such as Gazebo and Unreal Engine. You can perform virtual testing before deployment by simulating different scenarios, such as a warehouse environment with sensor models.
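To make the idea of evaluating a plant/controller pair in simulation concrete, here is a minimal Python sketch. It is not toolbox code and has no 3D visualization; it assumes a single rotary joint with made-up inertia and damping values, a PD controller, and a simple fixed-step integrator. All names (`simulate_joint`, `overshoot`) and parameter values are hypothetical.

```python
def simulate_joint(kp, kd, target=1.0, inertia=0.5, damping=0.1,
                   dt=0.001, duration=3.0):
    """Simulate one rotary joint (the plant) under PD control (the controller).

    Uses semi-implicit Euler integration. Returns the joint angle at each step.
    The inertia and damping values are illustrative, not from a real robot.
    """
    angle, velocity = 0.0, 0.0
    history = []
    for _ in range(round(duration / dt)):
        # Controller: PD law on the angle error.
        torque = kp * (target - angle) - kd * velocity
        # Plant: inertia with viscous friction.
        accel = (torque - damping * velocity) / inertia
        velocity += accel * dt
        angle += velocity * dt
        history.append(angle)
    return history


def overshoot(history, target=1.0):
    """How far the response exceeded the target (0.0 if it never did)."""
    return max(0.0, max(history) - target)


if __name__ == "__main__":
    for kd in (1.0, 10.0):
        response = simulate_joint(kp=50.0, kd=kd)
        print(f"kd={kd}: final={response[-1]:.3f}, "
              f"overshoot={overshoot(response):.3f}")
```

Comparing the two gain settings in simulation shows the kind of question virtual testing answers before any hardware moves: a lightly damped controller swings well past the target, while a more heavily damped one settles without overshoot.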

2. Designing autonomy algorithms for perception, planning and control and enabling AI for decision-making

Designing perception algorithms with variable conditions

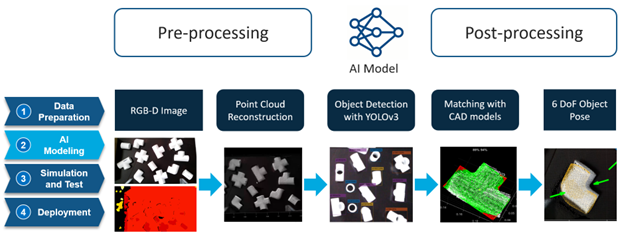

Perception algorithms enable robots to interpret their environment. However, designing perception algorithms that can handle variable conditions such as lighting, background, and scene layout can be challenging. Deep learning based solutions are commonly used to address this. By training perception algorithms on diverse datasets that cover a wide range of conditions, the algorithms can learn to generalize their understanding of the environment. Additionally, techniques like sensor fusion, where data from multiple sensors is combined, can make perception algorithms more robust and reliable.

MATLAB and Deep Learning Toolbox provide pretrained networks and interactive apps to create, modify, and train networks for robot perception. Once these models are trained and tested in simulation, they can be deployed to the hardware.

Designing motion planning solutions for safe robot motions

Once the robot knows its surroundings and has the information on where to move, motion planning algorithms define a safe robot motion from one configuration to another. Motion planning algorithms consider the dynamics of the robot, the environment, and any potential obstacles. Various path planning algorithms, such as RRT (Rapidly-exploring Random Tree) or A* (A-star), can be used to find feasible, efficient paths. Additionally, optimization techniques like trajectory optimization and model predictive control can further enhance the cobot’s performance.
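As a small illustration of the A* idea mentioned above, the following Python sketch searches a 2D occupancy grid. This is a deliberate simplification: a real manipulator plans in joint space with collision checking against 3D geometry, and the grid, the `astar` function, and the 4-connected move set here are all assumptions made for the example.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) and 1 (obstacle).

    Returns a list of (row, col) cells from start to goal, or None if the
    goal cannot be reached. Uses the Manhattan distance as the heuristic.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # (priority, cost, cell)
    came_from = {start: None}
    cost_so_far = {start: 0}

    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk the parent links back to the start.
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if cost > cost_so_far[current]:
            continue  # stale heap entry; a cheaper route was found later
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < cost_so_far.get((nr, nc), float("inf")):
                    cost_so_far[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = current
                    priority = new_cost + heuristic((nr, nc))
                    heapq.heappush(open_heap, (priority, new_cost, (nr, nc)))
    return None


# Hypothetical workspace: the only gap in the wall on row 1 is at column 2.
GRID = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]

if __name__ == "__main__":
    print(astar(GRID, (0, 0), (3, 0)))
```

A grid search like this returns the shortest path on the grid it is given. Sampling-based planners such as RRT trade that guarantee for the ability to scale to the higher-dimensional configuration spaces typical of robot arms.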

MATLAB, Robotics System Toolbox, and Navigation Toolbox provide a library of customizable motion planning algorithms. You can use optimization-based motion planners and trajectory optimization functions to plan robot motions.

Optimizing controls with respect to various path planning techniques

Once the motion planning system provides a set of robot configurations, control commands are generated to actually move the cobot to its desired configuration. Achieving optimal results requires fine-tuning control parameters and iteratively testing and refining the system. Techniques such as Model Predictive Control (MPC) also let you predict future states and account for various constraints. More advanced workflows include supervisory control and task scheduling as well as multi-agent control.

MATLAB provides state machines to model complex decision logic for robot control systems using state diagrams and flow charts. You can visualize the control logic step by step and examine robot behavior using a graphical editor and debugger.
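To give a feel for what decision logic modeled as a state machine looks like, here is a minimal Python sketch of a supervisory controller for a pick-and-place task. The states, event names, and transition table are hypothetical and chosen only for illustration; this is a plain-code stand-in for what you would draw as a state diagram, not output from any graphical tool.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    DETECT = auto()
    PICK = auto()
    PLACE = auto()
    SAFE_STOP = auto()


# (current state, event) -> next state. Pairs not listed leave the state as is.
TRANSITIONS = {
    (State.IDLE, "start"): State.DETECT,
    (State.DETECT, "object_found"): State.PICK,
    (State.DETECT, "no_object"): State.IDLE,
    (State.PICK, "grasped"): State.PLACE,
    (State.PICK, "grasp_failed"): State.DETECT,
    (State.PLACE, "released"): State.DETECT,
    (State.SAFE_STOP, "reset"): State.IDLE,
}


def step(state, event):
    """Return the next state for one event."""
    # A safety event overrides everything else, from any state.
    if event == "human_too_close":
        return State.SAFE_STOP
    return TRANSITIONS.get((state, event), state)


def run(events, state=State.IDLE):
    """Feed a sequence of events through the machine and record each state."""
    trace = [state]
    for event in events:
        state = step(state, event)
        trace.append(state)
    return trace


if __name__ == "__main__":
    for visited in run(["start", "object_found", "human_too_close", "reset"]):
        print(visited.name)
```

Keeping the transitions in a single table makes the logic easy to review and test event by event, which is the same benefit a state diagram with a debugger provides.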

3. Processing, visualizing, and analyzing sensor data and designing sensor fusion algorithms

Robot manipulator workflows often involve processing data from multiple sensors, such as vision and point cloud sensors. Designing effective sensor fusion algorithms is essential for integrating and interpreting data from these diverse sources. Techniques like Kalman filtering or particle filtering are used to fuse sensor data into a more complete picture of the environment, and combining the strengths of different sensors improves perception accuracy.

Developers often struggle to design sensor fusion algorithms because real-world data is scarce. Synthetic data from photorealistic simulations helps with training AI models. Once the models are designed, they can be retrained on a combination of the synthetic dataset and real-world data.

MATLAB helps address these challenges with tools for automated data labeling, out-of-the-box sensor fusion algorithms, and calibration of point cloud and camera sensors.
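As a rough illustration of the Kalman filtering idea mentioned in this section, the Python sketch below fuses two distance readings of the same static object, one from a noisier sensor and one from a more precise sensor. It implements only the scalar measurement-update step (no motion model or prediction step), and the sensor values and variances are invented for the example.

```python
def kalman_update(estimate, variance, measurement, meas_variance):
    """One scalar Kalman measurement update.

    The gain weights the new measurement by how uncertain the current
    estimate is relative to the sensor.
    """
    gain = variance / (variance + meas_variance)
    new_estimate = estimate + gain * (measurement - estimate)
    new_variance = (1.0 - gain) * variance
    return new_estimate, new_variance


def fuse(measurements, prior=(0.0, 1e6)):
    """Fuse (value, variance) readings of one static quantity in sequence.

    The default prior has a very large variance, meaning "no prior knowledge".
    """
    estimate, variance = prior
    for value, meas_variance in measurements:
        estimate, variance = kalman_update(estimate, variance,
                                           value, meas_variance)
    return estimate, variance


if __name__ == "__main__":
    # Hypothetical readings in metres: (value, variance).
    camera_depth = (1.02, 0.04)   # noisier
    lidar_range = (0.98, 0.01)    # more precise
    estimate, variance = fuse([camera_depth, lidar_range])
    print(f"fused distance: {estimate:.3f} m, variance: {variance:.4f}")
```

The fused estimate sits closer to the more precise sensor, and its variance is smaller than that of either sensor alone, which is the sense in which combining sensors improves accuracy.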

4. Ensuring safety of humans working with and alongside cobots

Safety is a paramount concern when developing cobot workflows, especially in environments where humans work alongside robots. Developers must implement safety protocols, such as collision detection and avoidance, to prevent accidents and injuries. Collaborative robots equipped with force/torque sensors and compliant control strategies can detect and respond to unexpected forces, ensuring safe interactions with humans. Additionally, risk assessments and safety certifications can provide guidelines and standards for developing and deploying safe robot manipulator workflows.
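The force-based contact detection described above can be sketched in a few lines of Python. This assumes the controller can compare a measured force against the force it expects from its own model. The function names and the threshold values are hypothetical and not taken from any safety standard, and a real cobot relies on certified safety functions rather than application code like this.

```python
def safety_action(measured_force_n, expected_force_n,
                  slow_threshold_n=15.0, stop_threshold_n=40.0):
    """Classify one force sample (newtons) as 'continue', 'slow', or 'stop'.

    The residual is the part of the measured force the model did not expect,
    which is treated here as a sign of unplanned contact.
    """
    residual = abs(measured_force_n - expected_force_n)
    if residual >= stop_threshold_n:
        return "stop"
    if residual >= slow_threshold_n:
        return "slow"
    return "continue"


def monitor(samples, **thresholds):
    """Process (measured, expected) samples until the first 'stop'.

    The stop is latched: samples after it are ignored until someone
    restarts the monitor.
    """
    actions = []
    for measured, expected in samples:
        action = safety_action(measured, expected, **thresholds)
        actions.append(action)
        if action == "stop":
            break
    return actions


if __name__ == "__main__":
    readings = [(5.0, 4.0), (25.0, 5.0), (60.0, 5.0), (5.0, 5.0)]
    print(monitor(readings))
```

Using a residual rather than the raw force lets the robot carry a payload or press on a surface as planned without triggering a false alarm, while still reacting to contact it did not plan for.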

MATLAB and Computer Vision Toolbox provide workflows that track objects and humans in video and image sequences. They also allow you to design a motion controller that keeps interaction forces safe during unexpected contact.

In conclusion, developing robot manipulator workflows that seamlessly integrate perception and motion involves overcoming several challenges. Software developers can create robust, efficient, and safe workflows by leveraging advanced simulation tools, adopting data-driven approaches, and testing AI and autonomy algorithms.

Since this blog post has barely scratched the surface of the solutions to these challenges, we have planned follow-up activities for you to learn more. You can start by visiting the Robot Manipulators Solutions page and registering for our upcoming webinars.

