Physical AI: AI Beyond the Digital World

For the past decade, AI has driven digital transformation in areas ranging from industrial automation, such as visual inspection and predictive maintenance, to everyday life. AI powers the search results we see, the voice assistants and predictive text on our smartphones, and the smart thermostats and security systems in our homes. Physical AI takes artificial intelligence beyond the screen and beyond the digital domain.

Physical AI is the application of artificial intelligence to systems that operate and interact within the physical world. It represents the next wave of intelligent systems: systems that go beyond information processing and engage directly with the world around them. Physical AI isn’t just about smart algorithms. It’s about systems that can perceive, decide, and act in real time. These systems must operate under physical constraints, respond to dynamic environments, and, in many cases, interact directly with people.

In this blog post, I will explore physical AI and its applications, such as autonomous vehicles and robotic manipulators, and show you how to use MATLAB and Simulink to implement physical AI.

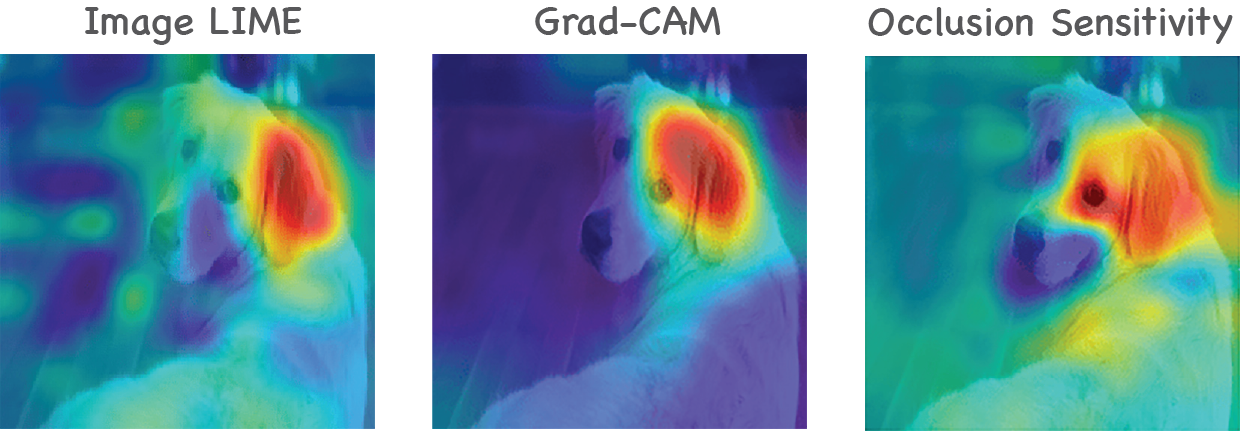

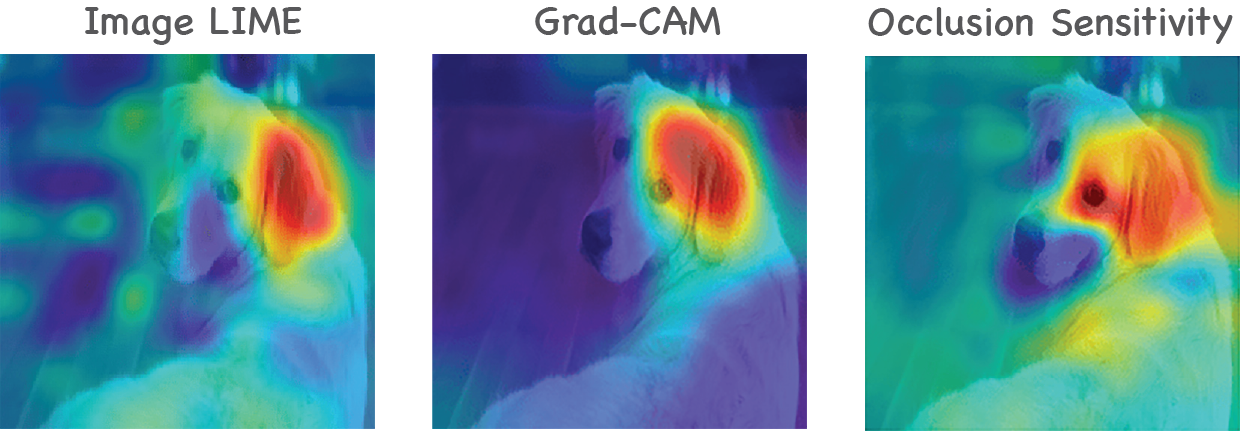

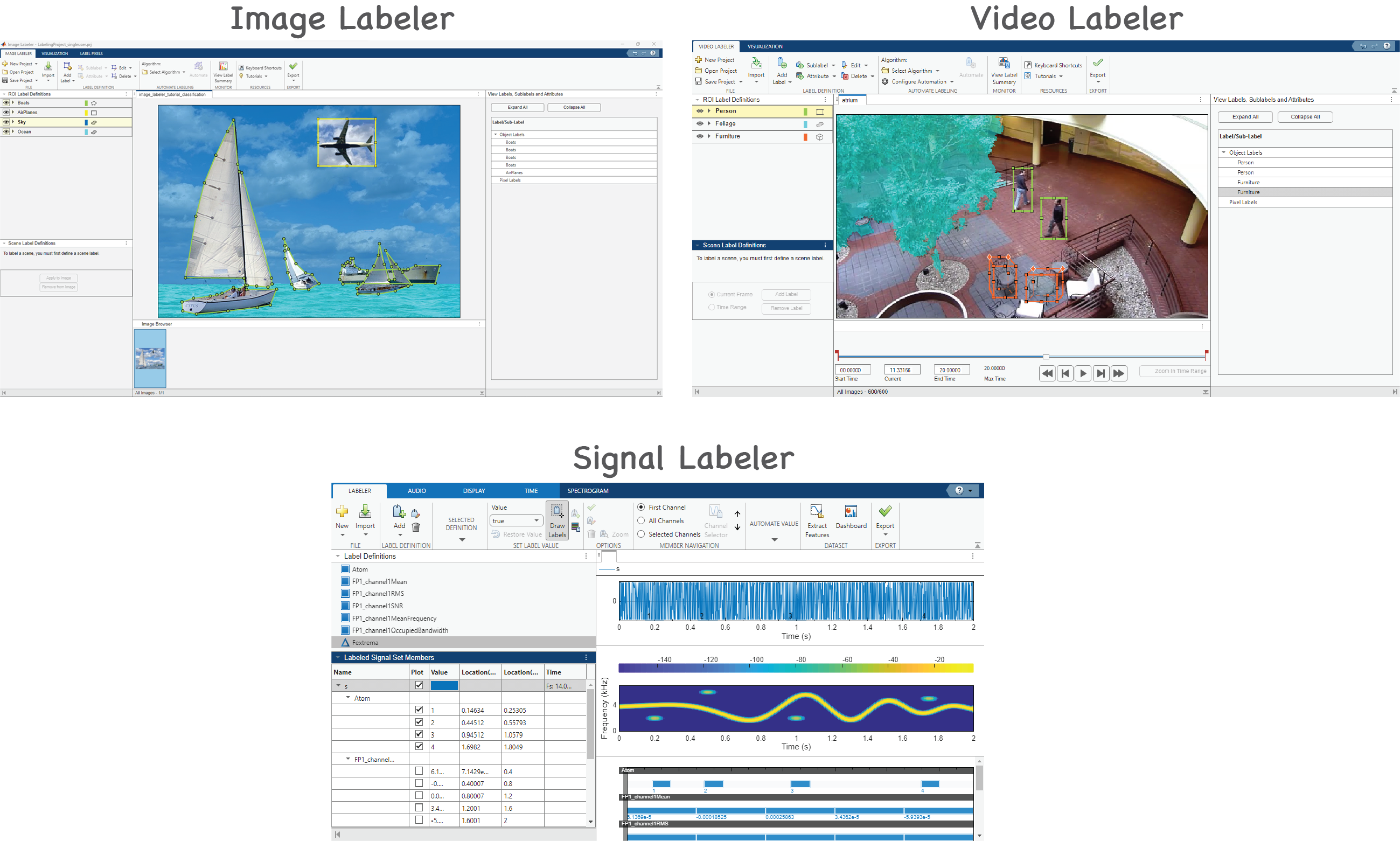

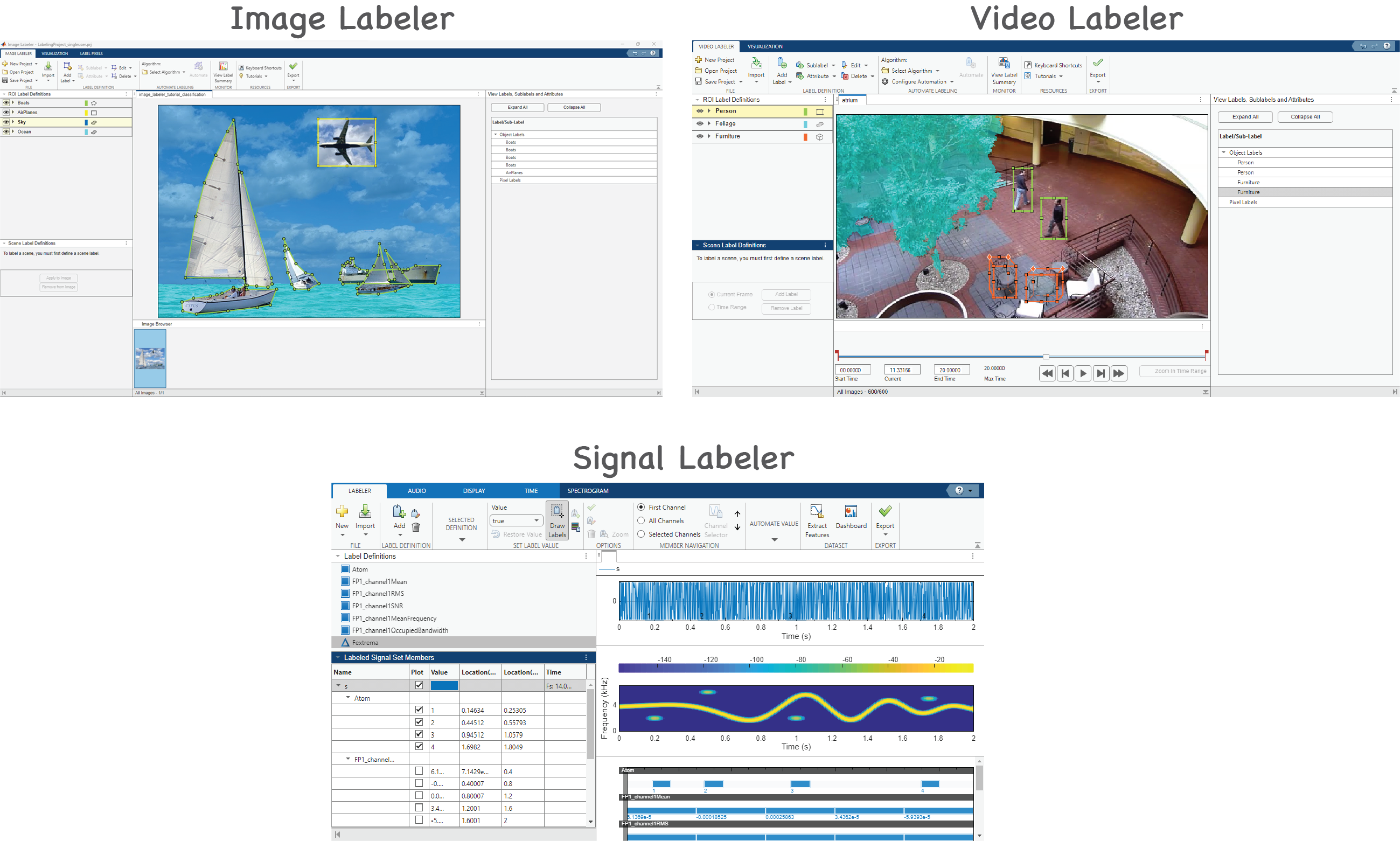

With MATLAB, engineers can ensure that the AI models that they train are suitable for a physical AI system.

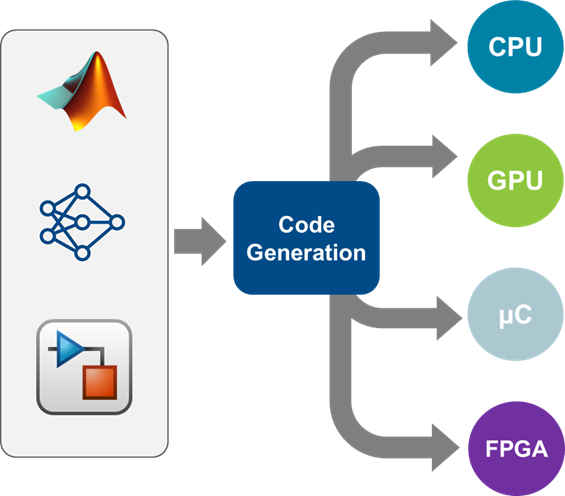

Why Is Physical AI Happening Now?

Intelligent machines that interact with the physical world have captivated the human imagination for over a century, with many examples in literature and popular movies. Recent advances in both AI and engineering have made physical AI not only possible, but also practical.

On the AI side, machine learning, deep learning, and computer vision have improved dramatically in accuracy and efficiency. Reinforcement learning has shown promise in developing behavior policies without explicit programming. Self-supervised learning, in which a model learns useful patterns and representations from unlabeled data, is unlocking new ways to train with less labeled data. These advances are enabling physical AI systems to continuously learn from experience, adapt to new environments, and make complex decisions in real time.

On the hardware front, the deployment of embedded AI applications has become increasingly efficient, even on resource-constrained edge devices. The evolution of sensors has been equally impressive: they are now smaller, more cost-effective, and more precise, which makes it easier to capture critical data. Together, these advancements are enabling physical AI systems to operate effectively in diverse environments, providing the necessary foundation for real-time data processing and decision making.

Figure: Design, simulation, test, and deployment of embedded AI applications

Simulation has also matured. Engineers can now create high-fidelity digital twins of physical systems and environments, which makes it possible to test AI models within systems before deployment to hardware. The ability to simulate, test, and iterate minimizes risk and cost and makes it feasible to build and refine physical AI systems.

Finally, there’s market demand. Industries from manufacturing and healthcare to transportation and agriculture are looking for intelligent systems that can operate in the real world: systems that can handle complexity, adapt on the fly, and function autonomously or collaboratively with humans.

What Makes Physical AI Different?

Unlike traditional AI applications, such as image recognition or sentiment analysis, physical AI involves a closed-loop system. The AI model not only makes predictions or decisions but also directly affects its environment through actuators, motors, and mechanical components. These actions, in turn, alter the subsequent set of inputs the system receives. Therefore, physical AI involves important design considerations:

- Real-time performance: Decisions often need to be made within milliseconds, leaving no time for cloud inference or high-latency computation.

- Robustness to noise and uncertainty: Sensor data can be imperfect. Physical AI systems must be able to reason through uncertainty and still perform reliably.

- Safety and reliability: Failures have real-world consequences. Physical AI systems must be tested extensively, especially when operating near or with humans. To learn more about the importance of verification and validation in AI, see this blog post.

- Explainability and interpretability: In regulated industries or safety-critical applications, engineers must be able to understand and explain the AI model’s decisions.

Figure: Explainable AI techniques can be used to understand image classification results

This is where traditional AI development approaches often fall short. Physical AI requires a systems-level perspective, where data science is integrated with signal processing, control theory, embedded systems, and rigorous simulation. And notably, physical AI requires tools that support cross-disciplinary workflows.
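To make the closed-loop idea from this section concrete, here is a minimal sketch in Python, used purely for illustration; it is not MATLAB or Simulink code. It assumes a toy one-dimensional plant, a Gaussian-noise sensor, an exponential moving-average filter, and a proportional rule standing in for the AI model. All names and constants (`run_closed_loop`, `alpha`, `gain`) are hypothetical choices for this example.

```python
import random


def run_closed_loop(setpoint=1.0, steps=200, noise_std=0.05, seed=0):
    """Simulate a sense -> decide -> act loop on a toy integrating plant."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    position = 0.0             # true plant state, hidden from the controller
    estimate = 0.0             # filtered estimate built from noisy readings
    alpha = 0.3                # smoothing factor of the moving-average filter
    gain = 0.5                 # proportional gain of the stand-in "policy"
    dt = 0.1                   # time step of the simulation
    history = []
    for _ in range(steps):
        # Sense: the sensor reports the true state plus Gaussian noise.
        measurement = position + rng.gauss(0.0, noise_std)
        # Filter: an exponential moving average damps the sensor noise.
        estimate = alpha * measurement + (1 - alpha) * estimate
        # Decide: a proportional rule stands in for the AI model.
        action = gain * (setpoint - estimate)
        # Act: the action moves the plant, which changes the next measurement.
        position += dt * action
        history.append(position)
    return history


if __name__ == "__main__":
    trajectory = run_closed_loop()
    print(f"final position: {trajectory[-1]:.3f}")
```

The point of the sketch is the feedback path: each action changes the state that the next noisy measurement is drawn from, so the decision logic and the sensing cannot be evaluated in isolation.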

Physical AI in Engineering Applications

Physical AI represents a powerful convergence: intelligence meets embodiment. As AI goes beyond the digital world, physical AI will increasingly shape how machines move, feel, and adapt to our environments. Physical AI is already transforming how engineers design and deploy systems in real-world settings. |

Engineers are using AI and reinforcement learning to train manipulators that adapt to new objects and tasks, such as grasping irregular shapes or assembling components in variable orientations. Simulation models built with Simulink and Simscape can help teams prototype these physical AI systems virtually before deploying to physical robots. |

|

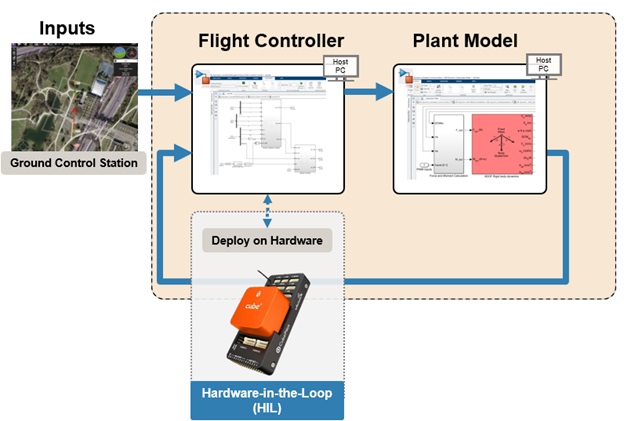

Engineers are using AI for perception and decision-making in autonomous vehicles, then combining these with model predictive control for trajectory planning and vehicle dynamics. Hardware-in-the-loop testing ensures the entire system performs reliably under real-time constraints. |

|

Engineers are building intelligent prosthetics and assistive devices that use biosignals (like EMG or EEG) to learn and adapt to the user’s motion and intent. AI models and predicts for noisy physiological signals, while control systems ensure safe actuation and feedback. |

|

Engineers are embedding AI in devices that respond to gestures, voice, or environmental context. Physical AI is integrating perception, control, and interaction in compact, responsive systems. |

Physical AI with MATLAB

MATLAB and Simulink provide a unified environment that bridges AI development with engineering design. This makes them uniquely suited for building physical AI systems that require a combination of algorithm design, simulation, control design, and deployment. By using MATLAB and Simulink, engineers can build reliable, testable, and scalable physical AI systems. To learn more, see MATLAB and Simulink for Artificial Intelligence.

Data Preprocessing

MATLAB provides extensive support for signal, visual, and text data. Using datastores, you can conveniently manage collections of data that are too large to fit in memory at one time. You can use low-code apps (such as the Data Cleaner app and the Preprocess Text Data Live Editor task) and built-in functions to improve data quality and to automatically label the ground truth.

Figure: Low-code apps for labeling the ground truth in image, video, and signal data
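The idea behind working with data that does not fit in memory can be sketched in a few lines. The Python example below is only a language-neutral illustration of chunked processing and does not reproduce the MATLAB datastore API; the function names `read_in_chunks` and `streaming_mean` are hypothetical.

```python
from typing import Iterable, Iterator, List


def read_in_chunks(samples: Iterable[float], chunk_size: int) -> Iterator[List[float]]:
    """Yield successive chunks so only one chunk is held in memory at a time."""
    chunk: List[float] = []
    for sample in samples:
        chunk.append(sample)
        if len(chunk) == chunk_size:
            yield chunk
            chunk = []
    if chunk:  # emit the final, possibly shorter, chunk
        yield chunk


def streaming_mean(samples: Iterable[float], chunk_size: int = 1000) -> float:
    """Compute the mean of a large stream without loading it all at once."""
    total, count = 0.0, 0
    for chunk in read_in_chunks(samples, chunk_size):
        total += sum(chunk)
        count += len(chunk)
    if count == 0:
        raise ValueError("no samples to average")
    return total / count
```

Because `samples` can be any iterable, the same pattern applies whether the values come from a file reader, a sensor log, or a generator.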

Robust AI Modeling

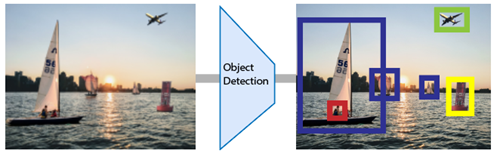

With MATLAB, engineers can design and train AI models using data collected from physical systems, such as images from a drone’s camera, audio signals from a wearable device, or time-series data from an industrial machine. To get started with AI model design and training for physical AI, check out these examples:| Data Type | Example |

Visual Data

Visual Data |

Get Started with Object Detection Using Deep Learning

Get Started with Object Detection Using Deep Learning |

Audio Data

Audio Data |

Train Deep Learning Network for Speech Command Recognition

Train Deep Learning Network for Speech Command Recognition |

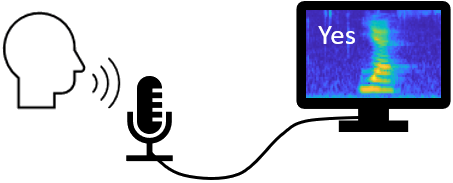

Time-Series Data

Time-Series Data |

Design Transformer Model for Time-Series Forecasting

Design Transformer Model for Time-Series Forecasting |

- Explainable and robust AI models – You can visualize activations, explain decisions, and verify the robustness of models.

- Resource-efficient AI models – You can compress models with quantization, projection, or pruning.

Related examples:

- Explore Network Predictions Using Deep Learning Visualization Techniques

- Verification of Neural Networks

- Compressing Neural Networks Using Network Projection
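As a rough illustration of what quantization means for a resource-constrained device, the sketch below maps floating-point weights to 8-bit integers with a single shared scale factor (symmetric linear quantization). It is written in Python for illustration only and is not how the MATLAB compression tools are implemented; `quantize_int8` and `dequantize` are hypothetical names.

```python
def quantize_int8(weights):
    """Symmetric linear quantization of floats to the int8 range [-127, 127]."""
    if not weights:
        return [], 1.0
    max_abs = max(abs(w) for w in weights)
    # One shared scale maps the largest magnitude onto 127.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integer codes."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    original = [0.5, -1.0, 0.25, 0.0]
    codes, scale = quantize_int8(original)
    restored = dequantize(codes, scale)
    worst = max(abs(a - b) for a, b in zip(original, restored))
    print(f"scale={scale:.6f}, worst-case error={worst:.6f}")
```

Each weight is stored in one byte instead of four or eight, at the cost of a rounding error of at most half a quantization step per weight. Whether that error is acceptable has to be checked against the accuracy the application needs.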

Simulation for Reducing Risk

One of the biggest advantages in physical AI development today is the ability to simulate the entire system before ever building a physical prototype. Once an AI model is trained, Simulink allows engineers to integrate it into a dynamic system model. System-wide simulation and testing in Simulink is an important step for physical AI. Engineers can simulate how the AI model interacts with physical components, such as motors, actuators, or environmental forces, and test how it responds under varying conditions. This simulation-first approach is critical in reducing risk, ensuring safety, and accelerating development.

Simulation also enables the testing of corner cases, that is, rare but critical scenarios that a system must handle safely. For example, engineers can simulate sensor failures, unexpected obstacles, or extreme operating conditions to validate how the AI and control systems respond. These scenarios might be too dangerous, expensive, or unpredictable to reproduce in the real world.

Figure: Lane and vehicle detection in Simulink using deep learning (see example)
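A corner-case test of the kind described above can be illustrated with a toy model. The Python sketch below is not a Simulink model; it assumes a hypothetical cart that should stop at 9.0 m in front of a wall at 10.0 m, a proportional speed controller, and a position sensor that drops out for a window of time steps. It compares a naive controller that keeps acting on the last reading with one that falls back to zero speed while the sensor is invalid.

```python
WALL_POSITION = 10.0  # hypothetical obstacle the cart must never reach


def simulate_peak_position(fail_start, fail_end, safe_fallback, steps=100, dt=0.1):
    """Return the farthest position reached when the sensor fails in [fail_start, fail_end)."""
    target = 9.0          # desired stopping point, 1 m short of the wall
    gain = 1.0            # proportional gain of the speed controller
    position = 0.0
    last_reading = 0.0
    peak = position
    for k in range(steps):
        sensor_ok = not (fail_start <= k < fail_end)
        if sensor_ok:
            last_reading = position               # fresh measurement
            speed = gain * (target - last_reading)
        elif safe_fallback:
            speed = 0.0                           # stop until the sensor recovers
        else:
            speed = gain * (target - last_reading)  # keep acting on stale data
        position += dt * speed
        peak = max(peak, position)
    return peak


if __name__ == "__main__":
    naive = simulate_peak_position(20, 40, safe_fallback=False)
    safe = simulate_peak_position(20, 40, safe_fallback=True)
    print(f"naive peak: {naive:.2f} m, fallback peak: {safe:.2f} m")
```

In this toy setup, the naive controller drives past the wall position during the dropout, while the fallback keeps the cart short of it. Exposing that kind of difference before any hardware exists is what a simulated corner case is for.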

Designing Control Algorithms

In many physical AI systems, control algorithms (such as PID controllers, state machines, or motion planners) must work alongside AI models. Simulink enables this co-design, allowing engineers to simulate and test traditional control systems with AI-driven components. Stateflow supports the design of complex decision logic, enabling engineers to model hybrid systems that blend AI with rule-based behavior.

Automating Deployment

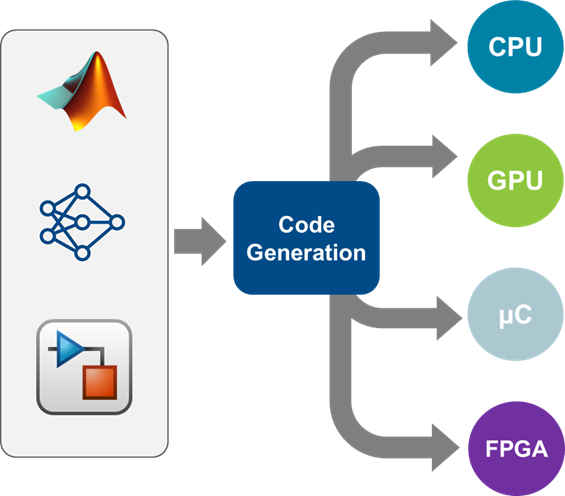

When it’s time to move from simulation to the physical world, MATLAB and Simulink support automatic code generation. Engineers can generate optimized C, C++, CUDA®, or HDL code to deploy entire embedded AI applications to CPUs, microcontrollers, GPUs, and FPGAs. Learn more about automated deployment for physical AI in MATLAB and Simulink for Embedded AI.

Figure: MATLAB and Simulink code generation tools

Final Thoughts

If you’re an engineer looking to bring AI into the physical world, now is the time to explore the possibilities. Physical AI is here, and it’s reshaping how we build the machines of tomorrow. MATLAB and Simulink are an excellent platform for engineers building physical AI systems. They offer the flexibility of AI model development, the rigor of control design, the realism of simulation, and the automation of deployment, all in one workflow and environment.
