Local LLMs with MATLAB

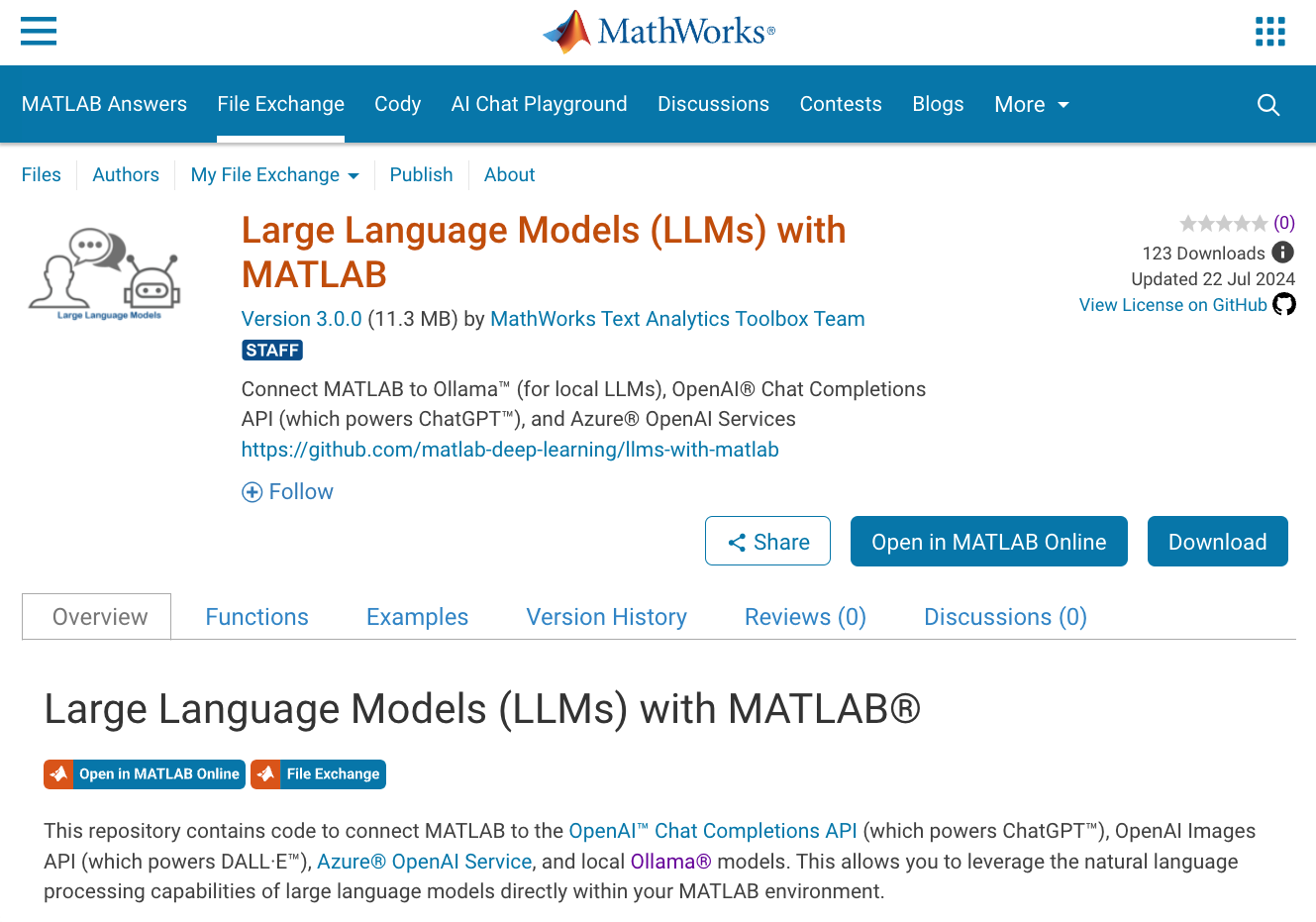

Local large language models (LLMs), such as llama, phi3, and mistral, are now available in the Large Language Models (LLMs) with MATLAB repository through Ollama™! This is such exciting news that I can’t think of a better introduction than sharing this development with you. Even if you don’t read any further (but I hope you do) because you are too eager to try out local LLMs with MATLAB, know that you can access the repository via these two options:

I am glad you decided to keep reading. In the previous blog post Large Language Models with MATLAB, I shared with you how to connect MATLAB to the OpenAI API. In this blog post, I am going to show you:


- How to access local LLMs, including llama3 and mixtral, by connecting MATLAB to a local Ollama server.

- How to use llama3 for retrieval-augmented generation (RAG) with the help of MATLAB NLP tools.

- Why RAG is so useful when you want to use your own data for natural language processing (NLP) tasks.

Set Up Ollama Server

First, go to https://ollama.com/ and follow the download instructions. To use local models with Ollama, you need to install and start an Ollama server, and then pull models into the server. For example, to pull llama3, go to your terminal and type:

```shell
ollama pull llama3
```

Some of the other supported LLMs are llama2, codellama, phi3, mistral, and gemma. To see all LLMs supported by the Ollama server, see Ollama models. To learn more about connecting to Ollama from MATLAB, see LLMs with MATLAB - Ollama.
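To confirm from MATLAB that the server is running and that llama3 has been pulled, you can query the server's REST API directly. The sketch below assumes the server listens on Ollama's default address, http://localhost:11434, and uses the `/api/tags` endpoint, which lists the locally available models. The variable names are illustrative, and this check is not part of the LLMs with MATLAB repository.

```matlab
% Ask a local Ollama server which models it has pulled.
% Assumption: the server runs at Ollama's default address and port.
endpoint = "http://localhost:11434/api/tags";
modelNames = strings(0, 1);
try
    reply = webread(endpoint, weboptions("Timeout", 5));
    models = reply.models;           % decoded from the JSON "models" array
    if isempty(models)
        names = {};
    elseif iscell(models)            % entries with differing fields decode as a cell array
        names = cellfun(@(m) m.name, models, "UniformOutput", false);
    else                             % uniform entries decode as a struct array
        names = {models.name};
    end
    modelNames = string(names(:));
catch err
    warning('Could not reach the Ollama server: %s', err.message);
end
disp(modelNames)
```

If the pull succeeded, llama3 should appear in `modelNames`, typically with a tag such as `llama3:latest`.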

Initialize Chat for RAG

RAG is a technique for enhancing the results achieved with an LLM by using your own data. The following figure shows the RAG workflow. Retrieving information from trusted sources can improve both the accuracy and the reliability of the generated responses. For example, the prompt fed to the LLM can be enhanced with more up-to-date or technical information.

Initialize the chatbot with the specified model (llama3) and instructions. The chatbot expects to receive a query from the user, which may or may not be enhanced with additional context. In other words, RAG may or may not be applied.

```matlab
system_prompt = "You are a helpful assistant. You might get a " + ...
    "context for each question, but only use the information " + ...
    "in the context if that makes sense to answer the question. ";
chat = ollamaChat("llama3",system_prompt);
```
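The system prompt tells the model that a context may or may not accompany each question. As a minimal sketch of what that means on the prompt side, the two hypothetical anonymous functions below, `plainPrompt` and `ragPrompt` (not part of the repository), build the two prompt formats used in this post: a question on its own, and a question preceded by retrieved text. The sample query and context strings are illustrative.

```matlab
% Hypothetical helpers that mirror the two prompt formats used in this post.
% Without RAG: only the question.
plainPrompt = @(query) "Answer the following question: " + query;
% With RAG: retrieved passages first, then the question on a new line.
ragPrompt = @(query, contextDocs) "Context:" + join(string(contextDocs), " ") + ...
    newline + plainPrompt(query);

demoQuery = "What is the most famous Verdi opera?";
demoContext = ["La Traviata premiered in Venice in 1853."; ...
               "It is based on La Dame aux Camélias."];

p1 = plainPrompt(demoQuery);
p2 = ragPrompt(demoQuery, demoContext);
disp(p1)
disp(p2)
```

Either string can be passed to `generate(chat, ...)`; the chat object stays the same, and only the prompt decides whether RAG is applied.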

Ask Simple Question

First, let’s check how the model performs when prompted with a general knowledge question. Define the prompt for the chatbot. Notice that the prompt does not include any context, which means that RAG is not applied.

```matlab
query_simple = "What is the most famous Verdi opera?";
prompt_simple = "Answer the following question: " + query_simple;
```

Define a function that wraps text, which you can use to make the generated text easier to read.

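```matlab
function wrappedText = wrapText(txt)
% Put each sentence of the generated text on its own line.
% splitSentences requires Text Analytics Toolbox. In a live script,
% local functions like this one must be placed at the end of the file.
wrappedText = splitSentences(txt);
wrappedText = join(wrappedText,newline);
end
```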
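Splitting on sentences keeps related text together, but `splitSentences` looks for sentence boundaries, so periods that do not end a sentence, such as those in numbered list markers or in names like `torch.jit.save()` and `.pt`, may also start a new line. If that happens, wrapping at a fixed line width is an alternative. The sketch below uses only base MATLAB string functions; the sample text, the 80-character width, and the variable names are illustrative assumptions, not part of the original example.

```matlab
% Wrap text at a fixed width instead of at sentence boundaries.
% Assumptions: 80 characters per line; words are separated by whitespace.
txt = "Save your PyTorch model using the torch.jit.save() function, " + ...
    "which converts your model into a binary file such as a .pt file. " + ...
    "Then open MATLAB and navigate to the folder that contains the file.";
maxWidth = 80;

words = split(strtrim(txt));         % column of words, split at whitespace
wrappedLines = strings(0, 1);
current = "";
for k = 1:numel(words)
    if current == ""
        candidate = words(k);
    else
        candidate = current + " " + words(k);
    end
    if current == "" || strlength(candidate) <= maxWidth
        current = candidate;         % first word on the line, or the word still fits
    else
        wrappedLines(end+1, 1) = current;  % line is full; start a new one
        current = words(k);
    end
end
if current ~= ""
    wrappedLines(end+1, 1) = current;
end
wrappedDemo = join(wrappedLines, newline);
disp(wrappedDemo)
```

Because the wrap only breaks at whitespace, identifiers such as `torch.jit.save()` always stay on one line.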

Generate a response.

```matlab
response_simple = generate(chat,prompt_simple);
wrapText(response_simple)
```

ans =

"That's an easy one!

The most famous Verdi opera is undoubtedly "La Traviata".

It was written in 1853 and is based on Alexandre Dumas' novel "La Dame aux Camélias".

It tells the tragic story of Violetta Valéry, a courtesan who falls in love with Alfredo Germont, but ultimately sacrifices her life to preserve his reputation.

The opera features some of Verdi's most beloved music, including the iconic duet "Ah, fors'è lui" and the show-stopping aria "Sempre libera"."

The generated response is coherent, quite helpful, and largely accurate, apart from a small slip: "Ah, fors'è lui" is an aria for Violetta, not a duet.

Ask Technical Question

Now, let’s see whether the chatbot performs as well when asked a technical question without the help of RAG. More specifically, I want to know how to import a deep learning model from PyTorch® into MATLAB. Define the prompt for the chatbot and generate a response. Wrap the text for easier reading.

```matlab
query_tech = "How to import a PyTorch model into MATLAB?";
prompt_tech = "Answer the following question: " + query_tech;
response_tech = generate(chat,prompt_tech);
wrapText(response_tech)
```

ans =

"To import a PyTorch model into MATLAB, you can use the `torch2matlab` tool provided by MathWorks.

This tool is specifically designed for converting PyTorch models into MATLAB's deep learning framework, known as Deep Learning Toolbox.

Here are the general steps to follow:

1. First, make sure you have the `torch2matlab` tool installed on your system.

You can download it from the MathWorks website.

2. Save your PyTorch model using the `torch.jit.save()` function, which will convert your model into a binary file (e.g., `.pt` file).

3. Open MATLAB and navigate to the directory where you saved the PyTorch model.

4. Run the following command in the MATLAB Command Window:

```matlab
model = torch2matlab('your_model_file.pt');
```

Replace `'your_model_file.pt'` with the actual name of your PyTorch model file (e.g., `mymodel.pt`).

5. The `torch2matlab` tool will convert your PyTorch model into a MATLAB-compatible format and store it in the `model` variable.

6. You can then use this converted model in your MATLAB code, just like you would with any other Deep Learning Toolbox model.

Note that the conversion process may not always be perfect, as the two frameworks have different architectures and representations of neural networks.

However, the `torch2matlab` tool should handle most common cases and allow you to import and use your PyTorch models in MATLAB."

Before you start looking into how to use torch2matlab, I have to inform you that such a tool does not exist. Even though the generated response contains some accurate elements, such as saving the PyTorch model to a .pt file, it is also clear that the model hallucinated, which is the most widely known pitfall of LLMs. The model didn’t have enough data to generate an informed response, but it generated one anyway.

Hallucinations might be more prevalent when querying on technical or domain-specific topics. For example, if you are an engineer who wants to use LLMs for daily tasks, feeding additional technical information to the model with RAG can yield much better results, as you will see later in this post.
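One inexpensive safeguard against this kind of hallucination is to check whether MATLAB can find the functions that a response mentions. The sketch below extracts backtick-quoted identifiers from a response with a regular expression and calls `exist` on each one. The sample text is illustrative; in practice you would pass `response_tech` instead.

```matlab
% Illustrative response text; in practice, use response_tech instead.
responseText = "Use the `torch2matlab` tool, or call `importNetworkFromPyTorch` " + ...
    "after saving the model with `torch.jit.save()`.";

% Extract identifiers written in backticks (a letter followed by letters,
% digits, or underscores), i.e., candidate MATLAB function names.
toks = regexp(char(responseText), '`([A-Za-z]\w*)`', 'tokens');
candidates = string(cellfun(@(c) c{1}, toks, "UniformOutput", false));

% exist returns 0 when MATLAB finds no file, function, or class with that name.
found = arrayfun(@(name) exist(char(name)) ~= 0, candidates);
for k = 1:numel(candidates)
    if found(k)
        fprintf("%s: found\n", candidates(k));
    else
        fprintf("%s: not found on the MATLAB path\n", candidates(k));
    end
end
```

A hit only tells you that the name exists, not that the response uses it correctly. A miss can also mean that a toolbox or support package is not installed; for example, `importNetworkFromPyTorch` requires the Deep Learning Toolbox Converter for PyTorch Models support package.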

Download and Preprocess Document

Luckily, I know just the right technical document to feed to the chatbot to enhance its accuracy: a previous blog post. Specify the URL of the blog post.

```matlab
url = "https://blogs.mathworks.com/deep-learning/2024/04/22/convert-deep-learning-models-between-pytorch-tensorflow-and-matlab/";
```

Define the local path where the post will be saved, then download the post from the URL and save it to that path.

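```matlab
% Create the data folder if it does not exist yet
localpath = "./data/";
if ~exist(localpath, 'dir')
    mkdir(localpath);
end

% Download the blog post as an HTML file
filename = "blog.html";
websave(localpath+filename,url);
```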

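Read the text from the downloaded file by first creating a FileDatastore object.

```matlab
% Datastore over all HTML files in the data folder; extractFileText
% (Text Analytics Toolbox) extracts the text from each file
fds = fileDatastore(localpath,"FileExtensions",".html","ReadFcn",@extractFileText);

% Read each file and stack its text into a string array
str = [];
while hasdata(fds)
    textData = read(fds);
    str = [str; textData];
end
```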

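Define a function for text preprocessing.

```matlab
function allDocs = preprocessDocuments(str)
% Join the text of all files, split it into paragraphs,
% and tokenize each paragraph into a tokenizedDocument
paragraphs = splitParagraphs(join(str));
allDocs = tokenizedDocument(paragraphs);
end
```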

Split the text data into paragraphs and tokenize them, which gives one tokenized document per paragraph.

```matlab
document = preprocessDocuments(str);
```
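The preprocessing relies on `splitParagraphs` and `tokenizedDocument` from Text Analytics Toolbox. To show roughly what the paragraph step does, here is a base-MATLAB approximation that treats one or more blank lines as a paragraph break and counts whitespace-separated words per paragraph. The sample text is made up, and the toolbox function's exact splitting rules may differ from this regular expression.

```matlab
% Made-up sample text with blank lines between paragraphs.
rawText = "First paragraph about PyTorch." + newline + newline + ...
    "Second paragraph about importNetworkFromPyTorch." + newline + ...
    "It continues on a second line." + newline + "   " + newline + ...
    "Third paragraph.";

% Split wherever a newline is followed by a whitespace-only line.
paras = regexp(char(rawText), '\n\s*\n', 'split');
paras = strtrim(string(paras));
paras = paras(strlength(paras) > 0);     % drop empty pieces

% Approximate word count per paragraph (whitespace-separated tokens).
wordCounts = arrayfun(@(p) numel(split(p)), paras);
disp(wordCounts)
```

A single line break inside a paragraph does not split it, so the second paragraph keeps both of its lines.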

Retrieve Document

In this section, I am going to show you an integral part of RAG: how to retrieve and filter the saved document based on the technical query. Tokenize the query and compute BM25 similarity scores between the query and each paragraph of the document.

```matlab
embQuery = bm25Similarity(document,tokenizedDocument(query_tech));
```

Sort the documents in descending order of similarity scores.

```matlab
[~, idx] = sort(embQuery,"descend");
limitWords = 1000;
selectedDocs = [];
totalWords = 0;
```

Iterate over the sorted document indices until the word limit is reached.

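```matlab
i = 1;
% Add paragraphs in order of relevance until the budget is used up.
% The check happens before adding, so the last paragraph can push the
% total past limitWords. tokenDetails also counts punctuation tokens.
while totalWords <= limitWords && i <= length(idx)
    totalWords = totalWords + size(document(idx(i)).tokenDetails,1);
    selectedDocs = [selectedDocs; joinWords(document(idx(i)))];
    i = i + 1;
end
```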
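To make the retrieval step less of a black box, the sketch below reimplements the same idea on a toy corpus using only base MATLAB: it scores each paragraph against the query with the standard Okapi BM25 formula, ranks the paragraphs, and applies a word budget. The corpus, the simple tokenizer, and the parameter values (k1 = 1.2, b = 0.75, and a smoothed IDF term) are illustrative assumptions. `bm25Similarity` uses its own tokenization and weighting options, so its scores are not expected to match these numbers exactly.

```matlab
% Toy corpus (made up for illustration): one string per paragraph.
toyDocs = [
    "Use importNetworkFromPyTorch to import a PyTorch model into MATLAB."
    "MATLAB can also export networks to TensorFlow."
    "Verdi wrote La Traviata in 1853."
    ];
toyQuery = "How to import a PyTorch model into MATLAB?";

% Simple tokenizer: replace punctuation with spaces, lowercase, split.
toyTokenize = @(s) split(lower(strtrim(regexprep(s, '[^\w\s]', ' '))));
docTokens = arrayfun(toyTokenize, toyDocs, "UniformOutput", false);
queryTerms = unique(toyTokenize(toyQuery));

% Okapi BM25 with common default parameters.
k1 = 1.2;                      % term-frequency saturation
b = 0.75;                      % document-length normalization
numDocs = numel(toyDocs);
docLen = cellfun(@numel, docTokens);
avgLen = mean(docLen);

toyScores = zeros(numDocs, 1);
for t = queryTerms'
    termFreq = cellfun(@(toks) sum(toks == t), docTokens);  % count in each doc
    docFreq = nnz(termFreq);                                 % docs with the term
    idf = log((numDocs - docFreq + 0.5) / (docFreq + 0.5) + 1);  % always > 0
    toyScores = toyScores + idf * termFreq * (k1 + 1) ./ ...
        (termFreq + k1 * (1 - b + b * docLen / avgLen));
end

% Rank paragraphs, then apply a word budget with the same rule as the loop:
% keep adding while the running total before a paragraph is within budget.
[~, toyIdx] = sort(toyScores, "descend");
toyLimit = 12;
toyCounts = docLen(toyIdx);
totalsBefore = [0; cumsum(toyCounts(1:end-1))];
toySelected = toyDocs(toyIdx(totalsBefore <= toyLimit));
disp(toySelected)
```

Here the budget is 12 words, yet two paragraphs with 16 words in total are kept: as in the loop above, the check happens before a paragraph is added, so the last selected paragraph can overshoot the limit. For a hard cap, keep only the paragraphs for which `cumsum(toyCounts) <= toyLimit`.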

Generate Response with RAG

Define the prompt for the chatbot with the added technical context, and generate a response.

```matlab
prompt_rag = "Context:" + join(selectedDocs, " ") ...
    + newline + "Answer the following question: " + query_tech;
response_rag = generate(chat, prompt_rag);
wrapText(response_rag)
```

ans =

"To import a PyTorch model into MATLAB, you can use the `importNetworkFromPyTorch` function.

This function requires the name of the PyTorch model file and the input sizes as name-value arguments.

For example:

net = importNetworkFromPyTorch("mnasnet1_0.pt", PyTorchInputSizes=[NaN, 3,224,224]);

This code imports a PyTorch model named "mnasnet1_0" from a file called "mnasnet1_0.pt" and specifies the input sizes as NaN, 3, 224, and 224.

The `PyTorchInputSizes` argument is used to automatically create and add the input layer for a batch of images."

The chatbot’s response is now accurate!

In this example, I used web content to enhance the accuracy of the generated response. You can replicate this RAG workflow to improve the responses to your queries with any one or more sources you choose, like technical reports, design specifications, or academic papers.
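Because the datastore in this example reads every file with a matching extension in one folder, using several sources mostly means placing more files there and reading them into one corpus. The sketch below shows that pattern with two small text files that it writes to a temporary folder, and it reads them with `fileread` so that it runs with base MATLAB only. For HTML, PDF, or Microsoft Word files, you would keep `@extractFileText` as the read function, as in the example above. The file names and contents are made up for illustration.

```matlab
% Write two small, made-up text sources into a fresh temporary folder.
srcFolder = tempname;
mkdir(srcFolder);
writelines("importNetworkFromPyTorch imports PyTorch models into MATLAB.", ...
    fullfile(srcFolder, "notes.txt"));
writelines("Design specification: the network accepts 224-by-224 RGB images.", ...
    fullfile(srcFolder, "spec.txt"));

% One datastore over all .txt files in the folder.
srcFds = fileDatastore(srcFolder, "FileExtensions", ".txt", "ReadFcn", @fileread);

% Read every file into one corpus, remembering which file each text came from.
srcFiles = string(srcFds.Files);
corpus = strings(numel(srcFiles), 1);
k = 0;
while hasdata(srcFds)
    k = k + 1;
    corpus(k) = strtrim(string(read(srcFds)));
end
sources = regexprep(srcFiles, '.*[\\/]', '');   % file name without the folder
disp(table(sources, corpus))
```

From here, `corpus` can be passed to the preprocessing function in place of `str`. If you also want to know which file each retrieved paragraph came from, split each element of `corpus` into paragraphs separately and carry the matching entry of `sources` along.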

Key Takeaways

- The Large Language Models (LLMs) with MATLAB repository has been updated with local LLMs through an Ollama server.

- Local LLMs are great for NLP tasks, such as RAG, and now you can use the most popular LLMs from MATLAB.

- Take advantage of MATLAB tools, and more specifically Text Analytics Toolbox functions, to extend LLM workflows with tasks such as retrieving, managing, and processing text.
