Buckets of Builds

Buckets. Sometimes it rains buckets. Other times we are challenged with ice buckets. When it comes to my code, tests, and development process, I want to put it all in buckets. One bucket for tests that are crucial to my iterative workflow. One bucket for tests that are less crucial on a change-by-change basis, but still important overall. A bucket for my performance tests and a bucket for my UI tests. It's buckets all the way down.

A long time ago we chatted a couple of times about how we can put some of our tests into different buckets and then select each bucket separately. This is great, but I never really completed the story with how this can work in your automation. After all, you are using a CI system to run your tests for you, right?

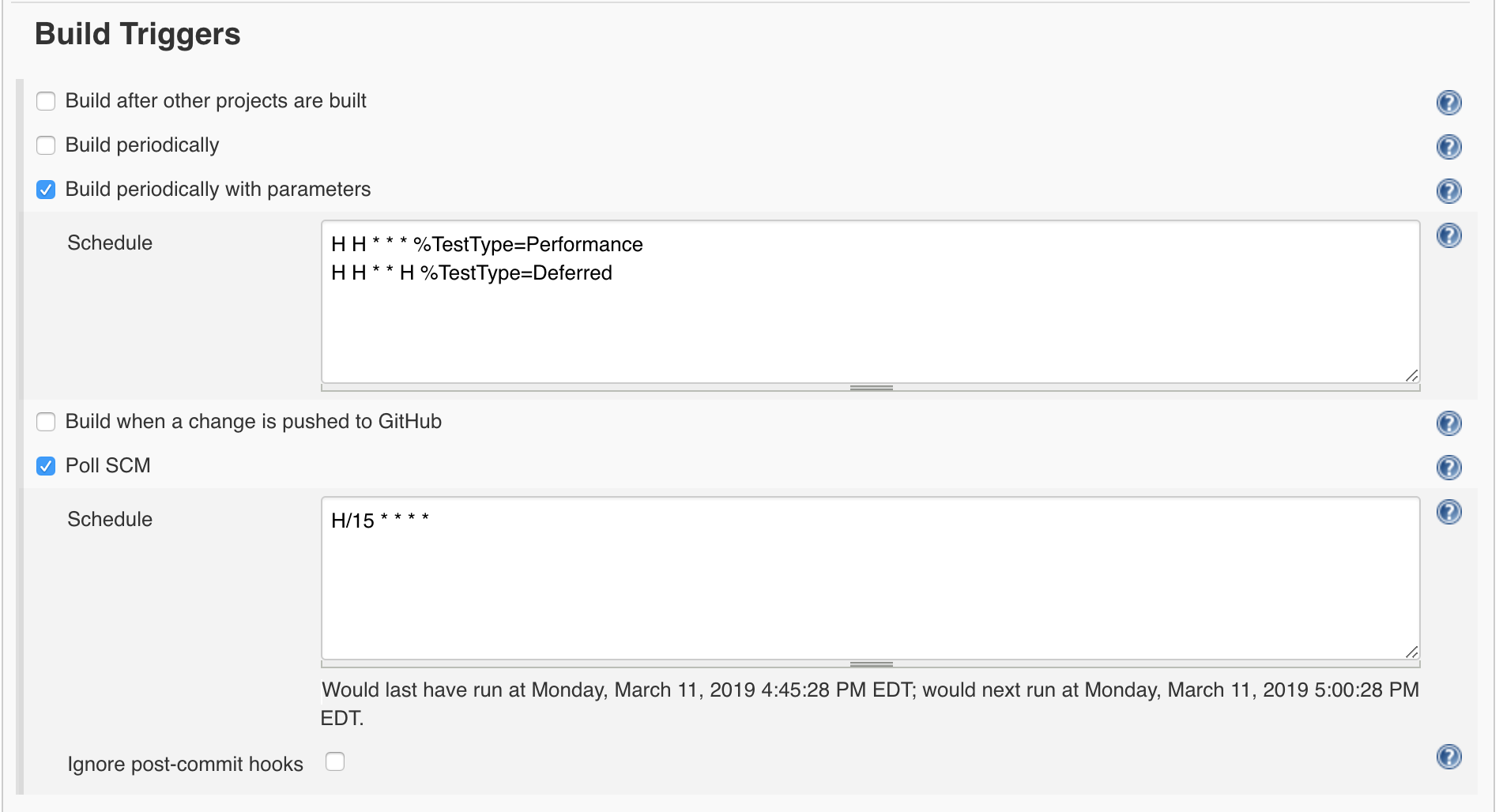

Well the trouble here is that I only want to run some of my tests on every commit, but then I'd like to defer others. For example, I could see myself running all of my fast unit tests on every change to my code, but then run the performance tests perhaps every night. I might also have a set of tests that are expensive and only suited to run periodically. Maybe they take a long time to run, operate on large data or some external resource, require manual verification, or even are a bit flaky. I still want to keep these tests in my arsenal, but I want to defer running them to a weekly or even monthly schedule.

In Jenkins, you can do this with a parameterized build quite easily. First, create a standard freestyle job.

Sitting right there at the top of the build configuration page, right under our collective noses, is the option to mark the build as parameterized. Check that box and we can add a parameter (the script later in this post reads it as TestType) whose values, or buckets as one might say, give us our own bona fide options to build against. For our case let's add a standard build for every commit, a build to run our performance tests, and a build to run our more expensive deferred tests:

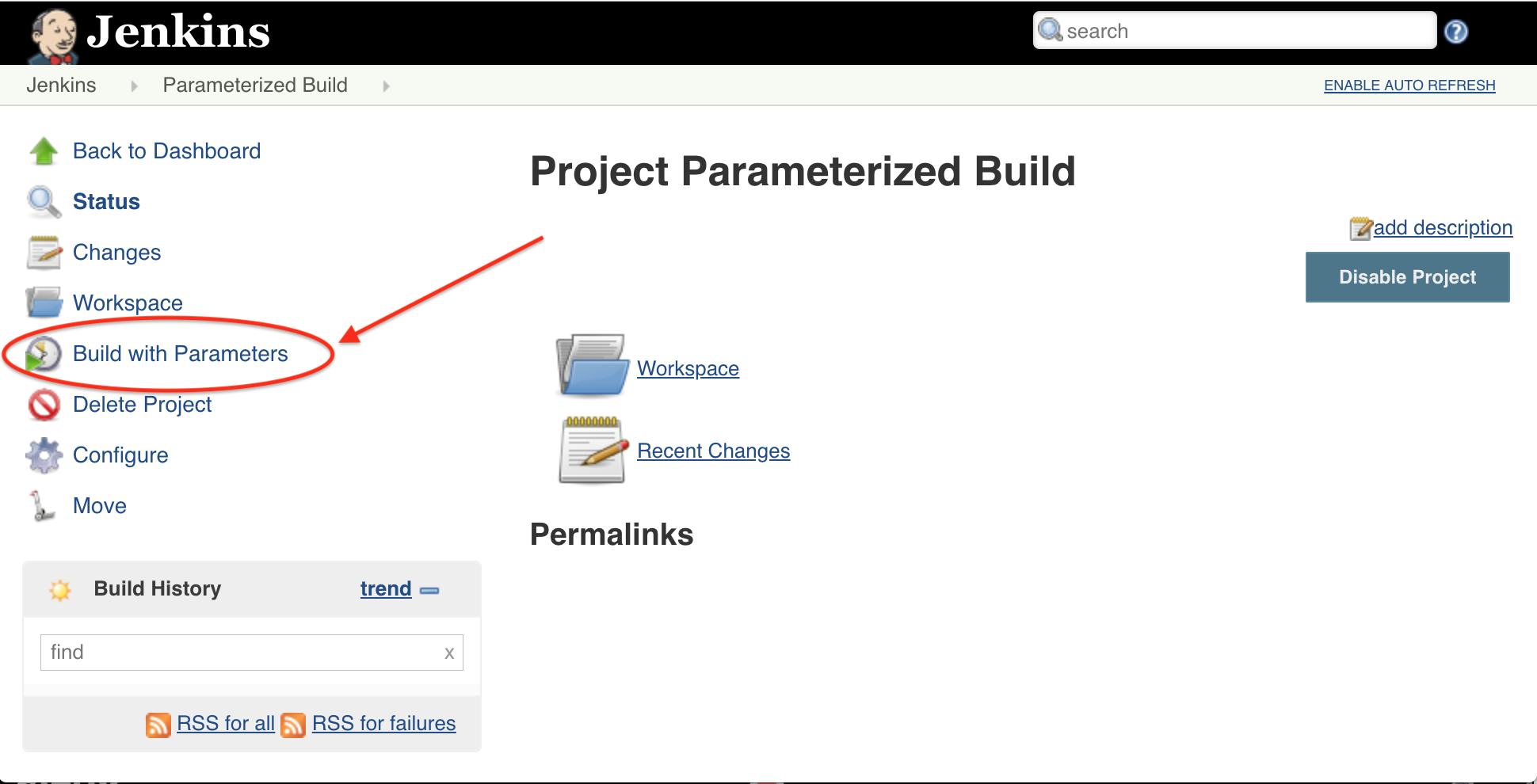

This now changes our project home page to include "Build with parameters". "Build" only is so last week.

Great, our project still runs our standard build against every commit, but if we want to inject a bit of flavor into a build we now have that option.

What is this really doing? How is the build different? Well it comes down to the environment. The parameters we have defined now become environment variables during the build so we can process them in our CI script. It might look something like this:

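    % The Jenkins build parameter arrives as an environment variable.
    testType = string(getenv("TestType"));
    if testType == "Performance"
        % Select performance tests by superclass and export the measurements.
        perfResults = runperf("IncludeSubfolders", true, "Superclass", "matlab.perftest.TestCase");
        assert(all([perfResults.Valid]), "Some performance tests were invalid!")
        convertToJMeterCSV(perfResults);
    else
        % Select the tests tagged with the requested bucket and fail the build on any failure.
        results = runtests("IncludeSubfolders", true, "Tag", testType);
        assert(~any([results.Failed]), join([testType, "Tests failed!"]));
    end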
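The script above assumes that the TestType environment variable is always set and always holds one of our bucket names. If you also run the same script outside of Jenkins, a small guard might be worth adding. The following is only a sketch: the function name `resolveTestType` is hypothetical, and the list of valid values simply mirrors the three buckets used in this post.

```matlab
function testType = resolveTestType()
% resolveTestType  Read the TestType build parameter with a fallback.
% Hypothetical helper, not part of MATLAB or Jenkins.

validTypes = ["Standard", "Performance", "Deferred"];

% getenv returns an empty value when the variable is not defined,
% which becomes the empty string "" after conversion.
testType = string(getenv("TestType"));
if testType == ""
    % For example, a local run outside of Jenkins: use the first bucket.
    testType = validTypes(1);
end

% Fail fast on a typo instead of quietly selecting zero tests.
assert(ismember(testType, validTypes), ...
    'Unknown TestType "%s".', testType);
end
```

With this in place, the first line of the CI script could become `testType = resolveTestType();` and the rest would stay the same.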

Now all we need to do is add the "Standard" and "Deferred" TestTags to the corresponding tests and we have test selection at our mercy! The default build, such as one we might schedule or run on every commit to source control, will use the first parameter value, in this case "Standard", so that fits nicely.
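As an illustration of what that tagging might look like, here is a minimal sketch of a test class with one method in each bucket. The class name `BucketExampleTest` and both test bodies are made up for this example; only the `TestTags` method attribute and the tag names come from the workflow described here.

```matlab
classdef BucketExampleTest < matlab.unittest.TestCase
    % Hypothetical test class showing one way to sort tests into buckets.

    methods (Test, TestTags = {'Standard'})
        function additionIsCommutative(testCase)
            % Fast check, cheap enough to run on every commit.
            testCase.verifyEqual(1 + 2, 2 + 1);
        end
    end

    methods (Test, TestTags = {'Deferred'})
        function largeSolveIsAccurate(testCase)
            % Stand-in for an expensive test that only the Deferred build selects.
            n = 2000;
            A = 2 * eye(n);
            x = A \ ones(n, 1);
            testCase.verifyEqual(x, 0.5 * ones(n, 1), "AbsTol", 1e-12);
        end
    end
end
```

Calling `runtests("BucketExampleTest", "Tag", "Standard")` would then run only the first method, while passing `"Deferred"` would run only the second.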

Note that we can process the performance tests differently, as we have done here. Also, we select these performance tests via their superclass, since we have derived all of them from matlab.perftest.TestCase. If we want, we can still run them as normal tests as well by simply adding the "Standard" TestTag to them. This would ensure that a change that breaks them is caught right away, rather than only when we go to gather performance data.
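To make that last point concrete, here is a sketch of a performance test that also carries the "Standard" tag. The class name `SortPerfTest` and the workload are hypothetical; the point is only that a class derived from matlab.perftest.TestCase can be picked up by superclass in the performance build and by tag in the standard build.

```matlab
classdef SortPerfTest < matlab.perftest.TestCase
    % Hypothetical performance test that also lives in the Standard bucket.

    methods (Test, TestTags = {'Standard'})
        function sortLargeVector(testCase)
            % Deterministic setup, kept outside the measured region.
            data = (1e5:-1:1)';

            testCase.startMeasuring();
            sorted = sort(data);
            testCase.stopMeasuring();

            % Functional check, so a breaking change also fails the
            % Standard build.
            testCase.verifyTrue(issorted(sorted));
        end
    end
end
```

In the standard build this runs once as an ordinary test through `runtests`, and in the performance build `runperf` selects it by superclass and collects timing measurements.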

There we have it. It's pretty easy to get going with a parameterized build, and combined with features like test tagging it can really open up some nice workflows. Have you used parameterized builds? Would love to hear your stories!
