Build and Deploy SLAM Workflows with MATLAB

Explore the essentials of SLAM and its role in robotics and autonomous systems. This blog post by our expert Jose Avendano Arbelaez provides a quick overview of SLAM technologies and their implementation in MATLAB. Watch the webinar video, “Build and Deploy SLAM Workflows with MATLAB,” to learn more!

———————–

The Quickest SLAM Intro

So… you have also heard about SLAM (Simultaneous Localization and Mapping) and how it is a growing technology for robots and autonomous systems. When drones, robots, or cars navigate a place they have never visited, they need to construct a map of the environment and locate themselves within it at the same time, and this is where SLAM comes to the rescue. The applications of SLAM in robotics, automated driving, and even aerial surveying are plentiful, and since MATLAB now has a pretty strong set of features to implement this technology, we thought it would be a good time to write the quickest introduction to SLAM: a primer for newcomers and a refresher for those building interest in implementing it. We will also be hosting a webinar on Building and Deploying SLAM Workflows in MATLAB on November 8th, so please sign up and we would be happy to answer some questions live 😊.

What is SLAM, and Why is it Important?

SLAM allows a robot or vehicle to build an accurate map of its surroundings while pinpointing its location within this map. This dual capability is crucial for safe navigation, path planning, and obstacle avoidance in dynamic environments. SLAM algorithms are typically classified by the types of sensors they use, including:

- LiDAR SLAM – Uses LiDAR (Light Detection and Ranging) distance sensors.

- Visual SLAM – Relies on camera images.

- Multi-Sensor SLAM – Combines various sensors such as cameras, LiDARs, IMUs (Inertial Measurement Units), and GPS to improve accuracy and robustness.

Fig. Example of Mapping using LiDAR SLAM

How Does SLAM Work?

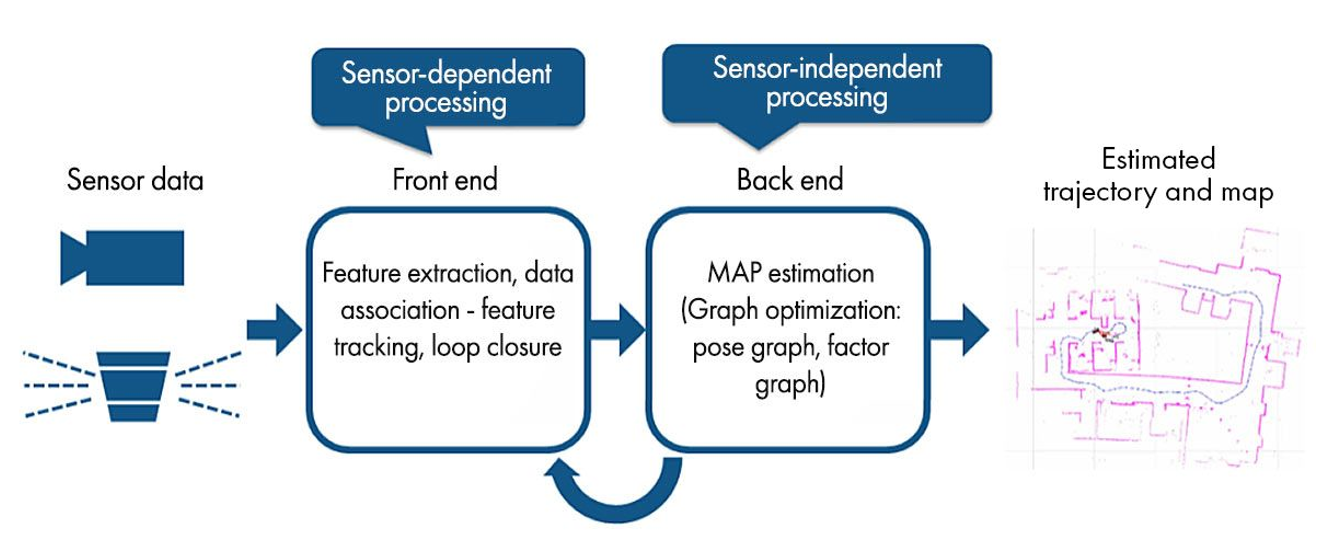

SLAM algorithms function by gathering raw sensor data and processing it through two primary stages:

- Front-End Processing: Extracts relevant features and creates initial estimates of the robot’s pose. This phase analyzes incoming sensor data, identifies key features, and determines the system’s movement from how those features shift between measurements over time.

- Back-End Optimization: Refines these initial estimates to reduce inaccuracies and correct for drift. A graph-based approach is often used here, where nodes represent poses and edges represent spatial relationships (relative motion constraints) between poses. Techniques like pose graph optimization and factor graphs refine these estimates, ultimately producing a consistent map and accurate localization.

- Loop Closures: Technically this is part of the SLAM back-end, but I thought it was worth highlighting separately. Although there are some great algorithms to reduce drift and error, sensors have tolerances that limit accuracy, so small errors still accumulate over time. By revisiting previously encountered parts of an environment, the system gets a chance to double-check its map and trajectory and apply corrections. This is sooo important in SLAM, and although most SLAM applications assume an unknown environment, if you have a chance to bake some loop closures into your application, you should weigh that option heavily. For example, if you are reconstructing an environment with a drone, you can easily fly back to a known location, and that will greatly benefit the SLAM optimization back-end.

Fig. SLAM Algorithm Stages
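To make the back-end and loop-closure ideas concrete, here is a minimal sketch in Python, used only as a neutral illustration language (this is not MATLAB's API). It assumes a robot moving along a single axis, so each pose is one number, and it uses hypothetical helper names (`solve_linear`, `optimize_pose_graph_1d`) and made-up odometry values. Each edge `(i, j, z, w)` says "pose j minus pose i was measured as z" with weight `w`, and the optimizer finds the poses that minimize the weighted sum of squared edge residuals.

```python
"""Minimal 1D pose graph optimization with a loop closure (illustrative sketch)."""


def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def optimize_pose_graph_1d(num_poses, edges):
    """Least-squares poses for edges (i, j, z, w) meaning x_j - x_i ~ z with weight w.

    Pose 0 is fixed at 0.0 to remove the gauge freedom (otherwise the whole
    map could slide along the axis without changing any residual).
    """
    h = [[0.0] * num_poses for _ in range(num_poses)]
    b = [0.0] * num_poses
    for i, j, z, w in edges:
        # Residual r = x_j - x_i - z has Jacobian -1 for x_i and +1 for x_j.
        h[i][i] += w
        h[j][j] += w
        h[i][j] -= w
        h[j][i] -= w
        b[i] -= w * z
        b[j] += w * z
    # Drop row/column 0 because x_0 = 0 is fixed and contributes nothing.
    reduced_h = [row[1:] for row in h[1:]]
    return [0.0] + solve_linear(reduced_h, b[1:])


# The robot drives +1, +1, -1, -1 m and ends where it started, but the
# (hypothetical) odometry over-reads forward and under-reads backward motion.
odometry = [1.1, 1.1, -0.9, -0.9]
edges = [(k, k + 1, u, 1.0) for k, u in enumerate(odometry)]

dead_reckoning = [0.0]
for u in odometry:
    dead_reckoning.append(dead_reckoning[-1] + u)

# Loop closure: pose 4 is recognized as the start location, so x_4 - x_0 ~ 0.
edges.append((0, 4, 0.0, 1.0))
optimized = optimize_pose_graph_1d(5, edges)

print("dead reckoning:", [round(p, 3) for p in dead_reckoning])
print("optimized:     ", [round(p, 3) for p in optimized])
```

Without the loop closure edge, the dead-reckoned end pose sits 0.4 m away from the start. With it, and with every edge weighted equally, the least-squares solution spreads that 0.4 m inconsistency evenly over the five edges in the loop (0.08 m each), so the end pose moves to 0.08 m. Real pose graphs use 2D/3D poses with rotations and are solved iteratively, but the structure (nodes, relative-measurement edges, one fixed pose) is the same.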

Types of SLAM Algorithms

LiDAR SLAM

LiDAR-based SLAM is particularly effective in applications where 3D mapping is essential, such as indoor robot navigation or creating dense, high-resolution maps for autonomous vehicles. But 2D LiDAR SLAM is also very useful and less computationally intensive.

Implementation Workflow:

- Data Acquisition: Read point cloud data in formats like LAS, PCD, and ROS bag files, or stream live LiDAR data from sensors.

- Data Processing: Clean and filter the data, for example down-sampling it to reduce computational load, segmenting the ground plane, removing outliers, and de-noising.

- Point Cloud Registration: Aligns successive point clouds into a unified coordinate system using registration algorithms like ICP (Iterative Closest Point), NDT (Normal Distributions Transform), and LOAM (LiDAR Odometry and Mapping). The transformations between scans give the motion of the system.

- Graph Optimization: Minimizes trajectory errors and refines map data. Loop closure detection can further refine the map by correcting for drift when the robot revisits a known location. A commonly implemented optimization method is pose graph optimization.
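As an illustration of the registration step, the following is a minimal 2D point-to-point ICP sketch in plain Python. It is not how MATLAB's own registration functions are implemented; it assumes noise-free synthetic points, uses brute-force nearest-neighbour matching, and solves each alignment step in closed form. The function names and the tiny "scan" are hypothetical.

```python
import math


def best_rigid_transform_2d(src, dst):
    """Least-squares rotation + translation mapping paired points src[i] -> dst[i]."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    # In 2D the optimal angle follows from the summed dot and cross products of the
    # centered pairs (the 2D special case of the SVD-based Kabsch solution).
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay, bx, by = px - sx, py - sy, qx - dx, qy - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def transform(theta, tx, ty, points):
    """Apply a 2D rigid transform to a list of (x, y) points."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def icp_2d(source, target, iterations=20):
    """Point-to-point ICP; returns (theta, tx, ty) that maps source onto target."""
    theta_total, tx_total, ty_total = 0.0, 0.0, 0.0
    current = list(source)
    for _ in range(iterations):
        # 1) Data association: nearest target point for each current source point.
        matches = [min(target, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
                   for p in current]
        # 2) Closed-form rigid transform for these matches, applied to the source.
        theta, tx, ty = best_rigid_transform_2d(current, matches)
        current = transform(theta, tx, ty, current)
        # 3) Compose the increment with the running estimate: T_total <- T_inc * T_total.
        c, s = math.cos(theta), math.sin(theta)
        tx_total, ty_total = c * tx_total - s * ty_total + tx, s * tx_total + c * ty_total + ty
        theta_total += theta
    return theta_total, tx_total, ty_total


# Hypothetical "scan": a few well-separated points seen by the sensor.
target = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (1.0, 5.0), (-2.0, 2.0)]
true_theta, true_tx, true_ty = 0.05, 0.1, -0.05
# Build the previous scan by applying the inverse of the true motion to the target.
c0, s0 = math.cos(true_theta), math.sin(true_theta)
source = [(c0 * (x - true_tx) + s0 * (y - true_ty), -s0 * (x - true_tx) + c0 * (y - true_ty))
          for x, y in target]

theta, tx, ty = icp_2d(source, target)
print(f"estimated motion: theta={theta:.4f} rad, t=({tx:.4f}, {ty:.4f}) m")
```

Because the true motion is small compared to the spacing between points, the first round of nearest-neighbour matches is already correct, and the recovered transform matches the one used to build the source scan (0.05 rad, (0.1, -0.05) m). With real scans, correspondences are often wrong at first, which is why ICP iterates and why a reasonable initial guess (for example, from odometry) matters.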

Visual SLAM (vSLAM)

Visual SLAM uses cameras to perform SLAM. It’s widely used in autonomous driving and UAVs, and it is also gaining adoption in robotics whenever real-time visual data is available.

Fig. Dense map reconstruction using stereo vSLAM

vSLAM Workflow:

- Data Acquisition: Reading images from cameras and deriving camera parameters through calibration.

- Data Processing: Resizing, filtering or masking images to facilitate feature detection.

- Tracking and Mapping: The main difference from LiDAR SLAM is that, instead of using point cloud registration to derive motion, vSLAM uses computer vision algorithms: it identifies key features in an image, tracks them across frames, and uses the known camera parameters (intrinsics) to geometrically derive the camera poses.

- Graph Optimization: Minimizes trajectory errors, refines map data, and processes loop closures. In the case of vSLAM, both pose graphs and factor graphs are popular ways to optimize the estimation process.
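The "known camera parameters (intrinsics)" step rests on the pinhole camera model. This hedged Python sketch projects a 3D landmark into a camera given intrinsics and a camera pose, and computes the reprojection error, the pixel-distance residual that vSLAM back-ends typically minimize. The intrinsic values, the landmark, and the helper names are illustrative assumptions, and lens distortion is ignored.

```python
import math
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole intrinsics in pixels (as estimated by camera calibration)."""
    fx: float
    fy: float
    cx: float
    cy: float


def project(k, rotation, translation, point_world):
    """Project a world point to pixel (u, v).

    rotation (3x3) and translation (3,) map world coordinates into the camera
    frame, p_cam = R @ p_world + t, with the camera looking along +Z.
    """
    x, y, z = (sum(rotation[r][c] * point_world[c] for c in range(3)) + translation[r]
               for r in range(3))
    if z <= 0.0:
        raise ValueError("point is behind the camera")
    return k.fx * x / z + k.cx, k.fy * y / z + k.cy


def reprojection_error(k, rotation, translation, point_world, observed_uv):
    """Pixel distance between where the landmark should appear and where it was seen."""
    u, v = project(k, rotation, translation, point_world)
    return math.hypot(u - observed_uv[0], v - observed_uv[1])


k = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)  # hypothetical calibration
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
landmark = (1.0, 0.5, 4.0)

# Frame 0: camera at the world origin.
uv0 = project(k, identity, (0.0, 0.0, 0.0), landmark)
# Frame 1: camera moved 0.2 m along +X, so the world-to-camera translation is -0.2 m.
uv1 = project(k, identity, (-0.2, 0.0, 0.0), landmark)

# Score two pose hypotheses for frame 1 against the observed pixel uv1.
err_wrong = reprojection_error(k, identity, (0.0, 0.0, 0.0), landmark, uv1)
err_right = reprojection_error(k, identity, (-0.2, 0.0, 0.0), landmark, uv1)
print(uv0, uv1, err_wrong, err_right)
```

Moving the camera 0.2 m to the right shifts the landmark 25 px to the left in the image (u goes from 445 to 420). If the estimator wrongly assumed the camera had not moved, that 25 px reprojection error is the signal the optimizer would use to correct the pose.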

vSLAM Variants

- Stereo vSLAM: Stereo vision uses two cameras a fixed distance apart and estimates depth from the disparity between the two images, which makes it well suited to recovering depth. It’s often applied in autonomous driving to map complex environments like parking lots, where loop closures optimize the trajectory and improve overall accuracy.

- Fisheye Cameras: Fisheye lenses provide wider fields of view and more coverage, but they add an extra data processing step: the images must be undistorted before they can be used in most common vSLAM software stacks.

- RGB-D vSLAM: Can help derive environment scale (absolute dimensions) with a single camera, since each color image comes with an additional calibrated depth frame.
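The scale argument for stereo and RGB-D comes down to two small formulas: for a rectified stereo pair, depth is Z = f·B/d (focal length in pixels times baseline in meters, divided by disparity in pixels), and with a depth image each pixel can be back-projected to a metric 3D point through the intrinsics. A single camera without depth has neither, which is why it cannot recover absolute scale from images alone. The Python sketch below assumes rectified, undistorted images (fisheye images would first need the undistortion step described above), and the function names and numbers are made up for illustration.

```python
def stereo_depth(disparity_px, focal_px, baseline_m):
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0.0:
        raise ValueError("disparity must be positive for a point in front of both cameras")
    return focal_px * baseline_m / disparity_px


def back_project(u, v, depth_m, fx, fy, cx, cy):
    """Pixel + metric depth (e.g., from an RGB-D depth image) -> 3D point in the camera frame."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return x, y, depth_m


# Hypothetical rig: 500 px focal length, 0.12 m baseline, feature disparity of 15 px.
z = stereo_depth(disparity_px=15.0, focal_px=500.0, baseline_m=0.12)
# The same depth, as an RGB-D sensor might report it, turns the pixel into a metric point.
point = back_project(445.0, 302.5, z, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(z, point)
```

A disparity of 15 px with a 0.12 m baseline and a 500 px focal length gives 4 m of depth; halving the disparity would double the depth, which is also why stereo depth gets noisier for distant points.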

Multi-Sensor SLAM

For applications requiring robust navigation in various conditions, multi-sensor SLAM can combine data from diverse sensors. For instance, an autonomous drone might use a combination of a camera and an IMU to accurately track its position in 3D space. An emerging technology for optimizing maps with multiple sensors is the factor graph. As mentioned before, factor graphs are already used in many vSLAM variants, and reusing a factor-graph stack will save a lot of development work.

Visual-Inertial SLAM (VI-SLAM): By integrating camera data with IMU readings, VI-SLAM improves localization accuracy by recovering environment scale and helping estimate rapid movements. Factor graphs, which use nodes (variables such as poses) and factors (measurement constraints between them) to model positional constraints, are commonly used to integrate data from multiple sensors. Factor graphs allow merging many different data types, like GPS, IMU, cameras, wheel encoders, and more, giving you many more alternatives to consider when deciding whether to invest in a custom SLAM algorithm.

Fig. Animation of Drone Trajectory Estimation Using VI-SLAM
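Here is a hedged sketch of the factor-graph idea in its simplest linear form: 1D poses, relative factors from wheel odometry, and absolute factors from GPS, each weighted by its information (1/σ²) and solved jointly by weighted least squares. It is written in plain Python rather than with the MATLAB factor-graph tools, the function names are hypothetical, and the sensor readings and noise levels are invented for illustration. Real VI-SLAM factor graphs also include IMU factors and 3D rotations and are solved with nonlinear optimizers.

```python
def solve_spd(a, b):
    """Gaussian elimination without pivoting; adequate for the SPD normal equations here."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy
    for col in range(n):
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def optimize_factor_graph_1d(num_poses, odometry_factors, gps_factors):
    """Weighted least squares over 1D poses.

    odometry_factors: (i, j, measured x_j - x_i, sigma) -> relative (binary) factors
    gps_factors:      (i, measured x_i, sigma)          -> absolute (unary) factors
    Each factor is weighted by its information 1 / sigma**2.
    """
    h = [[0.0] * num_poses for _ in range(num_poses)]
    b = [0.0] * num_poses
    for i, j, z, sigma in odometry_factors:
        w = 1.0 / sigma ** 2
        h[i][i] += w
        h[j][j] += w
        h[i][j] -= w
        h[j][i] -= w
        b[i] -= w * z
        b[j] += w * z
    for i, z, sigma in gps_factors:
        # A unary factor ties the graph to a global frame, so no pose needs fixing.
        w = 1.0 / sigma ** 2
        h[i][i] += w
        b[i] += w * z
    return solve_spd(h, b)


# Hypothetical data: the true poses are 0, 1, 2, 3, 4 m, but each odometry
# step reads 1.1 m (sigma 0.1 m); GPS fixes at the ends have sigma 0.2 m.
odometry = [(k, k + 1, 1.1, 0.1) for k in range(4)]
gps = [(0, 0.0, 0.2), (4, 4.0, 0.2)]
fused = optimize_factor_graph_1d(5, odometry, gps)
print([round(p, 3) for p in fused])
```

Odometry alone (1.1 m per step) would put the last pose at 4.4 m. With the two GPS factors, the optimizer shortens each odometry step to about 1.067 m and shifts the start slightly negative, so the end pose lands near 4.13 m. How far it moves depends on the σ values: tighter GPS factors pull harder, and tighter odometry factors resist more.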

Verification and Deployment

Testing and deploying SLAM algorithms requires diligent verification of the developed algorithm, and preparing the algorithm to run on embedded hardware can take time. Here are some things to consider when you are done exploring a SLAM implementation:

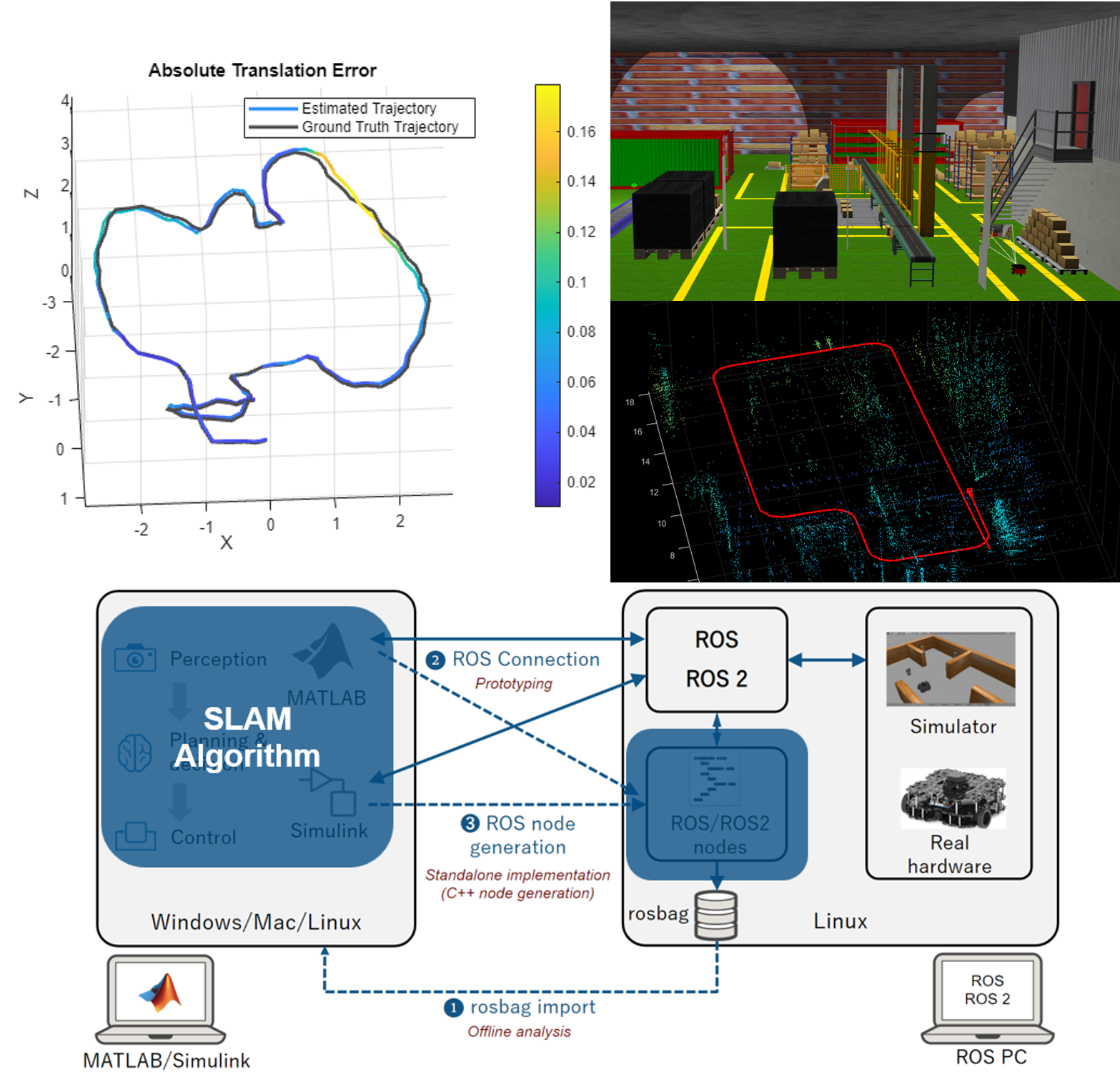

- Compare Trajectories: SLAM-generated paths should be compared with ground truth to evaluate absolute and relative errors. This helps you confirm that the errors are acceptable for your application.

- Simulate: Simulators like Gazebo allow testing algorithms in virtual environments. Being able to create different scenarios or generate synthetic data can give you many more testing datasets to validate your SLAM algorithm. As an example, a robot operating in a simulated warehouse can help test how a SLAM algorithm responds to complex or even dynamic obstacles.

- Make code reusable: The final step of deploying a SLAM algorithm is modifying it to run on whichever hardware your robot or platform uses. This can range from adjusting your algorithm implementation or creating wrappers for your development stack to rewriting the code in a different language altogether. If you are using MATLAB/Simulink, you can use automatic code generation to generate C/C++ libraries, executables, or even CUDA code for GPUs.

- Deploy to ROS (Robot Operating System): A lot of SLAM developers will want to package their algorithm as a ROS node. That means adapting the code from a development/testing stack into a production layout that interfaces with a ROS system. If you are working in MATLAB, I would suggest investing in getting familiar with ROS Toolbox; with the appropriate setup, it can generate packaged ROS nodes from MATLAB code and Simulink models.

Fig. SLAM Validation and Deployment Options
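For the trajectory comparison step, two common metrics are absolute trajectory error (ATE), the RMSE of position differences against ground truth, and relative pose error (RPE), which compares motion over short windows and so isolates local drift. The Python sketch below is simplified: it uses 2D positions only (no rotation error), assumes the two trajectories are already time-synchronized and expressed in the same frame (full evaluations usually align them first), and the function names and trajectories are invented.

```python
import math


def ate_rmse(estimated, ground_truth):
    """Absolute trajectory error: RMSE of per-timestamp position differences.

    Assumes both trajectories are time-synchronized and already in the same frame.
    """
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and of equal length")
    sq = [(ex - gx) ** 2 + (ey - gy) ** 2
          for (ex, ey), (gx, gy) in zip(estimated, ground_truth)]
    return math.sqrt(sum(sq) / len(sq))


def rpe_rmse(estimated, ground_truth, delta=1):
    """Simplified relative pose error: RMSE of the difference between estimated and
    true displacements over `delta` steps (translation only, no rotation term)."""
    if len(estimated) != len(ground_truth) or len(estimated) <= delta:
        raise ValueError("need equal-length trajectories longer than delta")
    sq = []
    for k in range(len(estimated) - delta):
        edx = estimated[k + delta][0] - estimated[k][0]
        edy = estimated[k + delta][1] - estimated[k][1]
        gdx = ground_truth[k + delta][0] - ground_truth[k][0]
        gdy = ground_truth[k + delta][1] - ground_truth[k][1]
        sq.append((edx - gdx) ** 2 + (edy - gdy) ** 2)
    return math.sqrt(sum(sq) / len(sq))


ground_truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
# Hypothetical estimate that over-reads forward motion by 10% at every step.
estimated = [(0.0, 0.0), (1.1, 0.0), (2.2, 0.0), (3.3, 0.0)]

ate = ate_rmse(estimated, ground_truth)
rpe = rpe_rmse(estimated, ground_truth)
print(f"ATE = {ate:.3f} m, RPE = {rpe:.3f} m")
```

With a constant 10% over-estimate of forward motion, the RPE stays at 0.1 m per step while the ATE (about 0.187 m here) keeps growing as the trajectory gets longer, which is the typical signature of drift.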

Choosing a SLAM Algorithm

Developing a custom algorithm for something like SLAM is definitely a long-term investment, which is why it is important to consider what you actually need (derive formal requirements) before choosing a SLAM approach to explore. The best SLAM approach will depend on sensor availability on the target platform and on application requirements (resolution, payload capacity, cost, compute capacity, etc.). Although LiDAR is ideal for detailed 3D mapping, it may be overkill or too expensive for simpler tasks that cameras can handle. Multi-sensor SLAM suits applications needing high accuracy across diverse environments, such as drones navigating both indoors and outdoors, and it can also add accuracy to both LiDAR and visual SLAM without much cost increase. This page in MATLAB’s documentation is a good start to dive into considering what type of SLAM might be best for your application.

Conclusion

SLAM algorithms can seem like a very complicated development investment for an autonomous system, but they are essential for vehicles and robots traveling in unknown environments, and they can greatly enhance your perception stack if you have the right sensors and compute to implement them. Reusable algorithms, like the ones available in MATLAB for LiDAR SLAM, visual SLAM, and factor-graph-based multi-sensor SLAM, enable prototyping custom SLAM implementations with much lower effort than before. Of course, I left much unsaid about SLAM in this quick write-up, but I hope you found it useful! And if you have questions or suggestions for follow-up content, please make sure to comment.
