20 years of supercomputing with MATLAB

While prepping for the SC24 supercomputing conference with the rest of the MathWorks crew, I idly wondered when Parallel Computing Toolbox was first released. Jos Martin, one of the original authors of the toolbox, glanced at his watch before saying, "Oh wow! Actually, 20 years yesterday! The first version of Distributed Computing Toolbox, which later evolved into the products we now call Parallel Computing Toolbox and MATLAB Parallel Server, was released on November 7th 2004."

"Someone should tell the story!" I insisted and, as it turns out, volunteered. So, Jos sat down and started to tell me how things were back in the day.

1995: Cleve Moler writes "Why there isn't a parallel MATLAB"

All good stories of how a thing was done start with the story of why the thing shouldn't be done, and this one is no different. Back in 1995, a parallel version of MATLAB didn't make much sense, and Cleve was moved to write about exactly why in an article he tells us is the most widely cited piece he's ever written: Why there isn't a parallel MATLAB.

That article considered what we now call distributed arrays: matrices and arrays that are split across multiple independent computers. The computer technology of 1995 just wasn't good enough to build a performant parallel MATLAB that worked in any way recognizable as MATLAB; the results were simply too slow. In addition, barely anyone actually had a decent parallel computer, so it didn't make good business sense to put much effort into it.

2004: Distributed Computing Toolbox debuts at SC04, Pittsburgh

Things were rather different in 2004. MathWorks had customers that were demanding a parallel MATLAB and so a team formed to build one. This is where Jos' story began:

Jos: We released it on November 7th 2004 and it was pretty basic!

Me: I guess parfor and not much else, right?

Jos: parfor?! Oh, you sweet summer child! parfor wouldn't be out until R2007b. Version 1.0 supported independent jobs using the MATLAB Job Scheduler and nothing else. We didn't have spmd, and we didn't have communicating jobs or MPI. We didn't support other schedulers. We didn't have much, but it did provide the first building blocks for the tools that allowed people to write parallel algorithms in MATLAB without MPI, and that was what was needed at the time.

Jos: No, that came later. We had the precursor to createJob and createTask. What you could do was say "Run this over there, and run that over at this other place". You could do this with multiple clusters, like you can do with parcluster today. So, a customer could have a local MATLAB program that would send tasks to other machines. Those machines could be running Windows or Linux and you could use all of them at the same time.

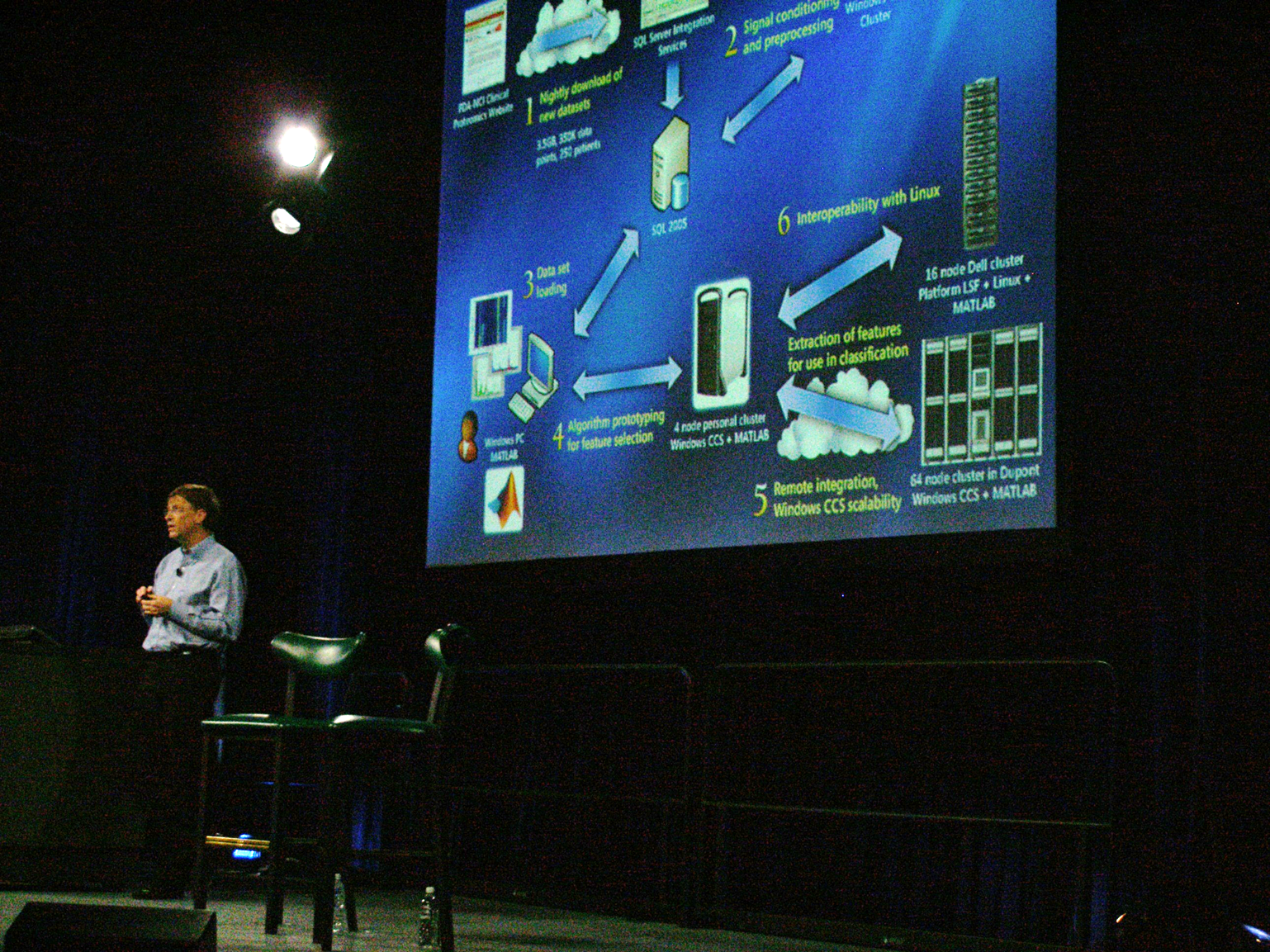

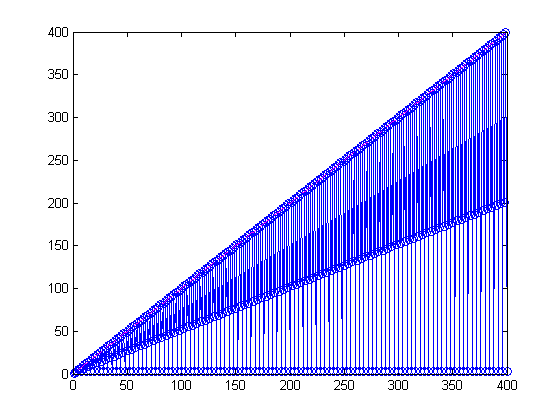

It was basic, but it worked, and even then it was very useful to some of our users. This is what the demo looked like at the MathWorks booth at SC04.
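Version 1.0's job and task functions were the precursors of today's createJob and createTask. As a rough sketch of an independent job written with the current functions (not the 2004 API), assuming Parallel Computing Toolbox is installed and your default cluster profile points at a cluster you can submit to, such as local workers; the sum(1:k) tasks are toy placeholders:

```matlab
% Sketch of an independent job using today's API (not the original 2004 functions).
% Assumes the default cluster profile is usable, e.g. local "Processes" workers.
c = parcluster();              % cluster object for the default profile
job = createJob(c);            % independent job: tasks do not communicate

% "Run this over there": each task evaluates sum(1:k) on whichever worker picks it up
for k = 1:3
    createTask(job, @sum, 1, {1:k});   % function, number of outputs, input arguments
end

submit(job);                   % hand the job to the scheduler
wait(job);                     % block until every task has finished
out = fetchOutputs(job);       % cell array, one row per task
delete(job);                   % clean up the job data stored by the cluster

disp(cell2mat(out))            % column vector of the three task results
```

Swapping parcluster() for parcluster with a different profile name is how the same code targets another cluster, which is the modern form of sending work "over at this other place".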

2005: Version 2.0 and Bill Gates at the SC05 conference

Version 2.0 added support for communicating jobs via MATLAB Job Scheduler, and we also started supporting third-party schedulers, though only for non-communicating jobs back then. One of the third-party schedulers we supported was CCS (Windows Compute Cluster Server), which later became Windows HPC Server.

In the picture below, Bill Gates is on the SC05 stage in Seattle, running MATLAB on a Windows cluster. A short while later, Kyril Faenov, director of the High Performance Computing product unit in the Windows Server Group, came on stage to run a demo. The live demo distributed an algorithm that analyzed proteomics data on a local multinode Windows cluster and then transferred the job to a 64-node Linux cluster based in Washington.

Jos was in the front row, sat next to Cleve, watching rather nervously. He'd been there for three weeks beforehand, making sure that everything worked. I can only imagine what was going through his mind at that moment. Think about it: code you had written was being used in a live demo at the largest event in your industry, as part of a keynote by Bill Gates, with the co-founder of your company sitting next to you. If it were me, the stress of it all would have given me a heart attack moments before the demo started.

Kyril pressed the go button, the CPU core load shot up and results started coming back. Jos breathed a sigh of relief: the live demo had worked, and it all looked pretty cool!

Jos went backstage after the keynote to thank the team who were babysitting the clusters.

Jos: "Guys! We made it. It was awesome! Thank you."

Them: "Only just! 20 seconds after the demo finished, we lost the InfiniBand connection and everything crashed."

Back then, the MathWorks booth at SC looked like the picture below. This was in the days when we still had a rack of real machines on-premises to run the demos. Cleve tells us that the rack itself was bought from Home Depot!

2007: Local workers, parfor and Cleve's follow-up article

For the first few years, everything in parallel MATLAB was only HPC in the sense that you ran parallel workers on other machines. There was no concept of having a local pool so you couldn't run multiple workers on the same machine.

This was long before my time at MathWorks, but looking at the history, it makes sense. The first dual-core Intel and AMD chips were released in 2005, so it would be a while before your average MATLAB user felt the pain of not being able to run multiple workers on the same machine.

Local workers really changed the game, though. You could prototype your parallel code on your local dual-core machine and then run it without modification on an HPC cluster, simply by scaling up the number of workers.

2007 was also the year that parfor arrived, and it was just as significant. Usually the first part of Parallel Computing Toolbox that users learn, parfor is the easy way to parallelize loops in MATLAB. It has been developed a lot since that first version and has all sorts of tricks up its sleeve that few people know about. I'll do a deep dive on it at some point next year.
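For anyone who hasn't met parfor yet, here is a minimal sketch showing the two most common kinds of loop variable: a sliced output, where each iteration writes its own element, and a reduction, where results are combined across iterations. The loop body is a toy placeholder; iterations must be independent of each other. Depending on your parallel preferences, MATLAB may open a pool automatically, and without Parallel Computing Toolbox the loop simply runs serially.

```matlab
% Minimal parfor sketch: iterations are independent, so they can run on any worker
n = 100;
squares = zeros(1, n);   % sliced output: each iteration writes only squares(i)
total = 0;               % reduction variable: partial sums are combined across workers

parfor i = 1:n
    squares(i) = i^2;    % toy per-iteration work
    total = total + i;   % reduction; the final value does not depend on iteration order
end

disp(total)
```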

Cleve also wrote a follow-up to his 1995 article. Called Parallel MATLAB: Multiple processors and multiple cores, it gave an overview of parallel computing in MATLAB at that time.

2008: MathWorks jointly won the SC08 HPC Challenge class 2 award

The HPC Challenge is a benchmark suite whose performance awards have obvious winners: the best results. There is also a class 2 award that is a little more subjective, as it goes to the most "elegant" implementation of four or more of the HPC Challenge benchmarks. This award was weighted 50% on performance and 50% on code elegance, clarity, and size.

At SC08, the MATLAB team jointly won the class 2 award. We shared the prize with Chapel and X10, who also turned in great submissions. One of the examples in today's MATLAB documentation is based on this work and lets you run the challenge in MATLAB today.

2010: GPU computing in MATLAB debuted at SC10 and NVIDIA GTC

These days, MATLAB has over 1,000 functions that support execution on NVIDIA GPUs, but the first version, released as part of R2010b, was rather more limited. Two glaring omissions were the subsref and subsasgn functions, which meant that indexing into gpuArrays was not supported. Imagine trying to do anything useful in MATLAB without ever indexing anything!

Even so, gpuArray worked with 123 functions, and it was a big enough release that Jensen Huang highlighted it at NVIDIA's 2010 GPU Technology Conference (GTC).

GPU computing was also the highlight of MathWorks' presence at SC10. The booth was starting to look a little more like it does today.

2013: parfeval gets added to the parallel language

parfeval is a function in Parallel Computing Toolbox that doesn't get enough coverage. It allows you to request asynchronous execution of a function on a worker. This gives the programmer a way to write more expressive code than is possible with parfor, while being easier to use than low-level functions such as spmdSend, spmdReceive and friends.

In later years, additional support functions, such as afterEach and afterAll, were added to the language to support workflows based on parfeval.

If the only parallel function you know in MATLAB is parfor, I suggest that you take a closer look at how parfeval might be able to help you.
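As a minimal sketch of the parfeval pattern, the code below submits four toy computations without waiting for them, then collects each result as it completes using fetchNext. It assumes Parallel Computing Toolbox is installed; gcp returns the current pool and, if your preferences allow, starts one when none is running. The sum(1:m) workload is only a placeholder.

```matlab
% Minimal parfeval sketch: submit work asynchronously, collect results as they finish
pool = gcp();                          % current pool (may be created automatically)
futures(1:4) = parallel.FevalFuture;   % preallocate an array of futures

for k = 1:4
    % Each call returns immediately; the worker computes sum(1:k*1000) with 1 output
    futures(k) = parfeval(pool, @(m) sum(1:m), 1, k*1000);
end

results = zeros(1, 4);
for j = 1:4
    % fetchNext blocks until any unread future finishes and returns its index
    [idx, value] = fetchNext(futures);
    results(idx) = value;              % store by index: completion order may differ
end

disp(results)
```

The client stays free between submission and collection, which is what makes parfeval useful for things like updating a UI or plotting partial results while work continues in the background.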

2015: Cleve's trip report of SC15

Cleve wrote a great report of his time at SC15 on his blog. One of the demos at the MathWorks booth was a Raspberry Pi, running code generated by MATLAB, doing live edge detection on a video feed.

2016: Big data and tall arrays

2016 saw the introduction of tall arrays. These are what you use when your data has so many rows that it won't fit into memory. One difference between tall arrays and in-memory arrays is that tall arrays typically remain unevaluated until you request that calculations be performed, so-called lazy evaluation.
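A small sketch of lazy evaluation with tall arrays. Purely so the example is self-contained, the tall array is built from an in-memory vector; in real use you would normally create it from a datastore over files that don't fit in memory. The calls to mean, max and min only record the operations, and gather triggers the actual computation.

```matlab
% Lazy evaluation sketch: the tall array wraps an in-memory vector only for illustration
t = tall((1:1e6)');

m = mean(t);            % deferred: records the operation, nothing is computed yet
s = max(t) - min(t);    % still deferred

% gather evaluates both deferred results in a single call and returns ordinary arrays
[m, s] = gather(m, s);

fprintf('mean = %.1f, range = %d\n', m, s);
```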

Tall arrays have been discussed a couple of times on The MATLAB Blog:

- MATLAB’s High Performance Computing Data Types

- Working efficiently with data: Parquet files and the Needle in a Haystack problem

2017: parsim, bringing Simulink to the HPC party

R2017a introduced parsim, which relieved users of all the hassle of trying to call sim from within parfor, something that was very hard to get right. If you want to run many Simulink simulations in parallel, this is the function for you. There are several illuminating examples in the documentation.

2020: Thread pools were introduced

Until R2020a, local worker pools in MATLAB were always process-based. R2020a introduced thread-based pools, which allow for reduced memory usage, faster start-up time and lower data-transfer costs.

Today, as of R2024b, process pools are still the default because only an (ever-growing) subset of MATLAB functions work on thread pools. However, you can easily open a thread pool instead by calling

```matlab
parpool("Threads")
```

before you run any parallel code.

This is what I almost always do when working on a single machine these days. I only switch back to process pools when I am prototyping for multi-node work or on the rare occasions when I'm using functionality that's not yet supported by thread pools. If you only ever use a single node of an HPC system, I highly recommend that you give this a try.

2023: Quantum computing, MATLAB Parallel Server on Kubernetes

Quantum computing isn't part of Parallel Computing Toolbox; it's available via a downloadable support package. I'm only including it here because I wanted to be part of the story: last year, at SC23, one of the things I did was hang out at the AWS booth presenting the Quantum Support Package and showing how it provides access to real quantum computers in the cloud via Amazon Braket and IBM.

2023 was also when it became possible to deploy MATLAB Parallel Server and MATLAB Job Scheduler on a Kubernetes cluster using a Helm chart. See https://uk.mathworks.com/help/matlab-parallel-server/run-matlab-parallel-server-on-kubernetes.html for details. This was the booth last year.

2024: MathWorks at SC24 in Atlanta

Parallel computing in MATLAB has come a long way in the 20 years since 2004, and I've only covered some of the highlights here. I know I've left out some of the favorite features of MathWorkers and users alike, but SC24 is coming up fast and I need to get this article published.

Come and join us at the MathWorks booth #3414: play with the demos, chat with me, Jos and other members of the MathWorks team about parallel computing in MATLAB, or just share memories of SCs gone by. Oh, and get some swag, of course.
