Scene Classification Using Deep Learning

This is a post from Oge Marques, PhD, Professor of Engineering and Computer Science at FAU and, of course, a familiar name on this blog thanks to his post on image augmentation. He's back to talk about scene classification, with great code for you to try. You can also follow him on Twitter (@ProfessorOge).

Automatic scene classification (sometimes referred to as scene recognition, or scene analysis) is a longstanding research problem in computer vision, which consists of assigning a label such as 'beach', 'bedroom', or simply 'indoor' or 'outdoor' to an image presented as input, based on the image's overall contents.

In this blog post, I will show you how to design and implement a computer vision solution that uses a deep neural network to classify an image of a scene into its category (bathroom, kitchen, attic, or bedroom for indoor scenes; hayfield, beach, playground, or forest for outdoor scenes), as illustrated in Fig. 1.

Fig. 1: Examples of images from the MIT Places dataset [1] with their corresponding categories.

First, let me guide you through the basics of scene recognition by humans and the history of scene recognition using computer vision.


Fig. 6: Confusion matrix for the scene classification solution using a pretrained model, Places365GoogLeNet, and best practices in transfer learning.

Upon inspecting some of the misclassified images, you can see that they result from a combination of incorrect labels, ambiguous scenes, and "non-iconic" images [8] (Fig. 7).

Fig. 7: Examples of classification errors from a retrained Places365GoogLeNet, due to (left to right, respectively): incorrect labels, ambiguous scenes (a bedroom in the attic), and "non-iconic" images.


Scene recognition by humans

For the sake of this discussion, let's use a working definition of a scene as "a view of a real-world environment that contains multiple surfaces and objects, organized in a meaningful way" [2]. Humans are capable of recognizing and classifying scenes in a tenth of a second or less, thanks to our ability to capture the gist of the scene, even though this usually means missing many of its details [3]. For example, we can quickly tell an image of a bathroom from one of a bedroom, but would be dumbfounded if asked (after the image is no longer visible) about specifics of the scene (for example, how many nightstands or sinks did you see?).

Scene recognition in computer vision, before and after deep learning

Prior to deep learning, early efforts included the design and implementation of a computational model of holistic scene recognition based on a very low-dimensional representation of the scene, known as its Spatial Envelope [3]. The researchers behind that work have also given us important datasets (such as Places365 [1]), which have been crucial to the success of deep learning in scene recognition research. The training set of Places365-Standard has ~1.8 million images from 365 scene categories, with up to 5,000 images per category. The use of deep learning, particularly Convolutional Neural Networks (CNNs), for scene classification has received great attention from the computer vision community [4]. Several baseline CNNs pretrained on the Places365-Standard dataset are available at https://github.com/CSAILVision/places365.

Scene recognition using deep learning in MATLAB

Next, I want to show how to implement a scene classification solution using a subset of the MIT Places dataset [1] and a pretrained model, Places365GoogLeNet [5, 6]. To maximize the learning experience, we will build, train, and evaluate different CNNs and compare the results. In "Part 1", we will build a simple CNN from scratch, train it, and evaluate it. In "Part 2", we will use a pretrained model, Places365GoogLeNet, "as is". In "Part 3", we will follow a transfer learning approach that demonstrates some of the latest features and best practices for image classification using transfer learning in MATLAB. Finally, in "Part 4", we will employ image data augmentation techniques to see whether they lead to improved results.

Data Preparation

- We build an ImageDatastore consisting of eight folders (corresponding to the eight categories: 'attic', 'bathroom', 'beach', 'bedroom', 'forest', 'hayfield', 'kitchen', and 'playground') with 1000 images each.

- We split the data into training (70%) and validation (30%) sets.

- We create an augmentedImageDatastore to handle image resizing, specifying the training images and the size of output images, which must be compatible with the size expected by the input layer of the neural network. This is more elegant and efficient than running batch image resizing (and saving the resized images back to disk).

imds = imageDatastore(fullfile('MITPlaces'), ...
    'IncludeSubfolders',true,'FileExtensions','.jpg','LabelSource','foldernames');

% Count the number of images per label and save the number of classes
labelCount = countEachLabel(imds);
numClasses = height(labelCount);

% Create training and validation sets
[imdsTraining, imdsValidation] = splitEachLabel(imds, 0.7);

% Use an augmented image datastore to handle the resizing: the original
% images are 256-by-256, but the input layer of the CNNs used in this
% example expects them to be 224-by-224
inputSize = [224,224,3];
augimdsTraining = augmentedImageDatastore(inputSize(1:2),imdsTraining);
augimdsValidation = augmentedImageDatastore(inputSize(1:2),imdsValidation);
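To see what on-the-fly resizing means in practice, here is a minimal sketch that does not need the MIT Places files. It assumes Deep Learning Toolbox is installed and uses four random 256-by-256 RGB images held in memory (hypothetical data, for illustration only); augmentedImageDatastore also accepts an H-by-W-by-C-by-N numeric array plus categorical labels, and read returns a table whose input column holds the resized images.

```matlab
% Requires Deep Learning Toolbox. Random in-memory images stand in for the
% 256-by-256 MIT Places images (hypothetical data, for illustration only).
X = rand(256, 256, 3, 4);                        % H-by-W-by-C-by-N array
Y = categorical({'attic'; 'beach'; 'attic'; 'beach'});
outputSize = [224 224];                          % matches the CNN input layer

% Resizing happens as batches are read; nothing is written back to disk
augds = augmentedImageDatastore(outputSize, X, Y);
batch = read(augds);                             % table with 'input' and 'response'
disp(size(batch.input{1}))                       % should be 224 224 3
```

With the real data, the same check can be run on augimdsTraining; calling reset(augimdsTraining) afterwards rewinds the datastore before training.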

Model development – Part 1 (Building and training a CNN from scratch)

We build a simple CNN from scratch (Fig. 3), specify its training options, train it, and evaluate it.

% Define layers
layers = [
    imageInputLayer([224 224 3])

    convolution2dLayer(3,16,'Padding',1)
    batchNormalizationLayer
    reluLayer
    maxPooling2dLayer(2,'Stride',2)

    convolution2dLayer(3,32,'Padding',1)
    batchNormalizationLayer
    reluLayer
    maxPooling2dLayer(2,'Stride',2)

    convolution2dLayer(3,64,'Padding',1)
    batchNormalizationLayer
    reluLayer

    fullyConnectedLayer(numClasses)   % one output per scene category (8)
    softmaxLayer
    classificationLayer];
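A back-of-the-envelope sketch of this architecture (our own arithmetic, not from the original post) helps explain its behavior. It applies the usual output-size formula for convolution and pooling, assumes the default convolution stride of 1, and counts two learnable parameters (offset and scale) per batch-normalization channel. Under these assumptions, almost all learnable parameters sit in the final fully connected layer, which is one plausible contributor to the overfitting discussed below.

```matlab
% Output size of a convolution or pooling layer along one spatial dimension
outSize = @(n, k, pad, stride) floor((n + 2*pad - k)/stride) + 1;

n = 224;                  % imageInputLayer([224 224 3])
n = outSize(n, 3, 1, 1);  % convolution2dLayer(3,16,'Padding',1) -> 224
n = outSize(n, 2, 0, 2);  % maxPooling2dLayer(2,'Stride',2)      -> 112
n = outSize(n, 3, 1, 1);  % convolution2dLayer(3,32,'Padding',1) -> 112
n = outSize(n, 2, 0, 2);  % maxPooling2dLayer(2,'Stride',2)      -> 56
n = outSize(n, 3, 1, 1);  % convolution2dLayer(3,64,'Padding',1) -> 56

% Learnable parameters: weights + biases (conv), offset + scale (batch norm)
convParams = (3*3*3*16 + 16) + (3*3*16*32 + 32) + (3*3*32*64 + 64);
bnParams   = 2*(16 + 32 + 64);
fcParams   = (n*n*64)*8 + 8;      % fullyConnectedLayer(8) on a 56x56x64 input
total      = convParams + bnParams + fcParams;
fcShare    = fcParams/total;
fprintf('FC layer holds %.1f%% of %d learnable parameters\n', 100*fcShare, total);
```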

% Specify training options
options = trainingOptions('sgdm', ...
    'MaxEpochs',30, ...
    'ValidationData',augimdsValidation, ...
    'ValidationFrequency',50, ...
    'InitialLearnRate',0.0003, ...
    'Verbose',false, ...
    'Plots','training-progress');
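Note that ValidationFrequency is measured in iterations (mini-batches), not epochs. The sketch below works out what the options above imply, assuming the 70/30 split yields 8 x 700 = 5,600 training images and that the default MiniBatchSize of 128 applies (the post does not set it). trainNetwork discards the incomplete final mini-batch of each epoch, hence the floor.

```matlab
% Assumptions: 8 classes x 700 training images, default MiniBatchSize of 128
numTrain       = 8*700;
miniBatchSize  = 128;
maxEpochs      = 30;   % 'MaxEpochs' above
validationFreq = 50;   % 'ValidationFrequency' above, in iterations

itersPerEpoch = floor(numTrain/miniBatchSize);   % incomplete last batch dropped
totalIters    = itersPerEpoch*maxEpochs;
epochsBetweenValidations = validationFreq/itersPerEpoch;

fprintf('%d iterations/epoch, %d in total, validation every %.2f epochs\n', ...
        itersPerEpoch, totalIters, epochsBetweenValidations);
```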

% Train network
baselineCNN = trainNetwork(augimdsTraining,layers,options);

% Classify and compute accuracy
predictedLabels = classify(baselineCNN,augimdsValidation);
valLabels = imdsValidation.Labels;
baselineCNNAccuracy = sum(predictedLabels == valLabels)/numel(valLabels);
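The accuracy line compares two categorical arrays element by element and averages the matches. The small sketch below reproduces that on hypothetical labels (trueLabels and predLabels are illustrative stand-ins for valLabels and predictedLabels) and adds one extra breakdown not in the original post: per-class accuracy, which shows which categories drag the overall number down.

```matlab
% Hypothetical labels sharing one category set
classNames = {'attic', 'beach', 'bedroom', 'forest', 'kitchen'};
trueLabels = categorical({'beach'; 'beach'; 'forest'; 'kitchen'; 'attic'}, classNames);
predLabels = categorical({'beach'; 'forest'; 'forest'; 'kitchen'; 'bedroom'}, classNames);

% Overall accuracy, written two equivalent ways
acc1 = sum(predLabels == trueLabels)/numel(trueLabels);
acc2 = mean(predLabels == trueLabels);

% Per-class accuracy: fraction of each true class predicted correctly
classes  = unique(trueLabels);            % only the classes actually present
perClass = zeros(numel(classes), 1);
for k = 1:numel(classes)
    isClass     = trueLabels == classes(k);
    perClass(k) = mean(predLabels(isClass) == trueLabels(isClass));
end
disp(table(classes, perClass))
```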

Fig. 3: A baseline CNN used in "Part 1".


Fig. 4: Learning curves for baseline CNN. Notice the telltale signs of overfitting: accuracy and loss keep improving for training data but have already flattened out for the validation dataset.

Unsurprisingly, the network's accuracy is modest (~60%) and it suffers from overfitting (Fig. 4).


Model development – Part 2 (Using a pretrained model, Places365GoogLeNet, "as is")

We use a pretrained model, Places365GoogLeNet, "as is". Since the model was trained as a 365-class classifier, its performance will be suboptimal (validation accuracy ~53%), in part due to cases in which the model predicted a related or more specific category with greater confidence than any of the 8 categories selected for this exercise (Fig. 5).

% Load pretrained Places365GoogLeNet. This requires the Deep Learning Toolbox
% Model for Places365-GoogLeNet Network support package [6]; see
% https://www.mathworks.com/help/deeplearning/ref/googlenet.html for instructions.
places365Net = googlenet('Weights','places365');

% Classify and compute accuracy
YPred = classify(places365Net,augimdsValidation);
YValidation = imdsValidation.Labels;
places365NetAccuracy = sum(YPred == YValidation)/numel(YValidation);
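One way to check how much of the low "as is" accuracy comes from predictions outside the eight target categories is to count them directly. The sketch below uses hypothetical stand-ins yTrue and yPred (the Places365-style label names are illustrative); with the real data, substitute YValidation and YPred from above. Comparing label names as strings sidesteps the fact that the two categorical arrays have different category sets.

```matlab
% Hypothetical stand-ins: ground truth uses the target classes, predictions
% come from a much larger vocabulary (label names are illustrative)
yTrue = categorical({'bedroom'; 'attic'; 'beach'; 'kitchen'});
yPred = categorical({'hotel_room'; 'attic'; 'coast'; 'kitchen'});

% A prediction outside the target label set can never count as correct
targetClasses = categories(yTrue);
outsideTarget = ~ismember(cellstr(yPred), targetClasses);
fprintf('%.0f%% of predictions fall outside the target classes\n', ...
        100*mean(outsideTarget));

% Same accuracy metric as above, comparing label names as strings
accuracy = mean(string(yPred) == string(yTrue));
```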

Fig. 5: Using a pre-trained CNN "as is": examples of classification errors resulting from predicting similar (or more specific) classes.


Model development – Part 3 (Transfer Learning)

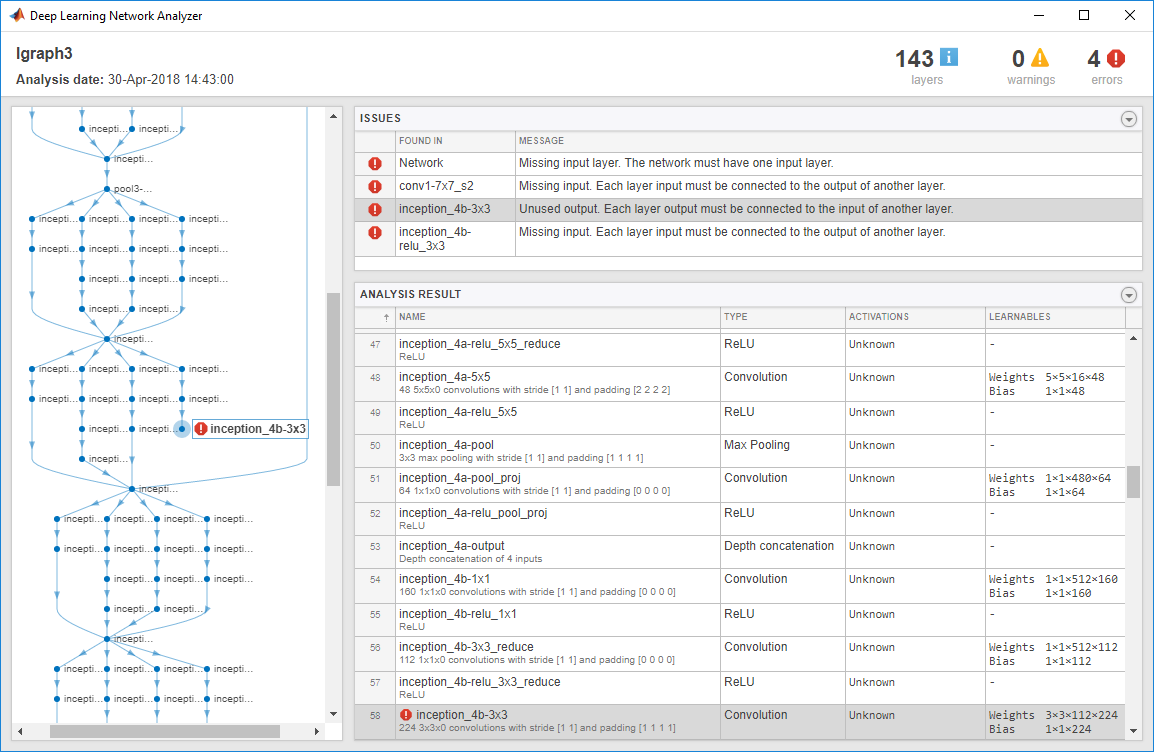

We will now follow a principled transfer learning approach. We start by locating the last learnable layer and the classification layer using layerGraph and findLayersToReplace (a supporting function that ships with the example in [7], not a built-in toolbox function).

lgraph = layerGraph(places365Net);
[learnableLayer,classLayer] = findLayersToReplace(lgraph);
[learnableLayer,classLayer]

Next, following the example in [7], we replace them with equivalent layers suited to our eight classes, updating the network's graph with two calls to replaceLayer. We also freeze the initial layers (i.e., set the learning rates in those layers to zero) using freezeWeights. As [7] points out, freezing the weights of the initial layers can significantly speed up network training and, since our new dataset is small, can also prevent those layers from overfitting to the new dataset.

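% Replace the last learnable layer with a new fully connected layer sized for
% our classes; the learn rate factors of 10 make it learn faster than the
% transferred layers
newLayer = fullyConnectedLayer(numClasses, ...
    'Name','new_fc', ...
    'WeightLearnRateFactor',10, ...
    'BiasLearnRateFactor',10);
lgraph = replaceLayer(lgraph,learnableLayer.Name,newLayer);

% Replace the classification layer (its classes are set during training)
newClassLayer = classificationLayer('Name','new_classoutput');
lgraph = replaceLayer(lgraph,classLayer.Name,newClassLayer);

% Freeze initial layers. freezeWeights and createLgraphUsingConnections are
% supporting functions from the example in [7].
layers = lgraph.Layers;
connections = lgraph.Connections;
layers(1:10) = freezeWeights(layers(1:10));
lgraph = createLgraphUsingConnections(layers,connections);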
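Because freezeWeights is a helper from [7] rather than a toolbox function, here is a minimal sketch of what freezing amounts to. It is not the helper from [7]; it assumes, as the description above says, that freezing means setting every learn rate factor of a layer to zero. A small hypothetical layer array stands in for lgraph.Layers, and Deep Learning Toolbox is required. Each layer's effective learning rate is the global learning rate multiplied by its factor, so frozen layers stop updating while the new fully connected layer learns 10 times faster.

```matlab
% Requires Deep Learning Toolbox. Hypothetical layer array used only to
% demonstrate freezing; it is not the Places365GoogLeNet graph.
demoLayers = [
    imageInputLayer([28 28 1])
    convolution2dLayer(3, 8, 'Padding', 1)
    batchNormalizationLayer
    reluLayer
    fullyConnectedLayer(4, 'WeightLearnRateFactor', 10, 'BiasLearnRateFactor', 10)
    softmaxLayer
    classificationLayer];

numFrozen = 4;   % freeze everything before the new fully connected layer
for i = 1:numFrozen
    props = properties(demoLayers(i));
    for p = 1:numel(props)
        % Learnable built-in layers expose properties such as
        % WeightLearnRateFactor, BiasLearnRateFactor, OffsetLearnRateFactor
        if endsWith(props{p}, 'LearnRateFactor')
            demoLayers(i).(props{p}) = 0;
        end
    end
end

% Effective learning rate = global learning rate x layer factor
globalLR = 3e-4;   % assumed: the same InitialLearnRate as in Part 1
fprintf('conv weights: %g, new FC weights: %g\n', ...
        globalLR*demoLayers(2).WeightLearnRateFactor, ...
        globalLR*demoLayers(5).WeightLearnRateFactor);
```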

We then train the network and evaluate the classification accuracy on the validation set: ~95%. The resulting confusion matrix (Fig. 6) gives us additional insight into which categories the model misclassifies most frequently: in this case, bathroom scenes classified as kitchen (18 instances) and bedroom scenes labeled as attic (12 cases).
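The counts quoted above come from a confusion matrix, which is simple to build from two label arrays. Here is a minimal sketch on hypothetical labels (actual and predicted are illustrative stand-ins for the validation labels and predictions) using base MATLAB only; for a plotted chart like Fig. 6, recent releases also offer confusionchart(trueLabels, predictedLabels).

```matlab
% Hypothetical labels sharing one category set
classes   = {'attic', 'bathroom', 'bedroom', 'kitchen'};
actual    = categorical({'bathroom'; 'bathroom'; 'bedroom'; 'bedroom'; 'kitchen'; 'attic'; 'bedroom'}, classes);
predicted = categorical({'kitchen';  'bathroom'; 'attic';   'attic';   'kitchen'; 'attic'; 'bedroom'}, classes);

% Rows = true class, columns = predicted class (category order = classes)
k  = numel(classes);
cm = accumarray([double(actual), double(predicted)], 1, [k k]);

% Most frequent confusion: largest off-diagonal entry
offDiag = cm - diag(diag(cm));
[count, idx] = max(offDiag(:));
[r, c] = ind2sub(size(cm), idx);
fprintf('Most frequent error: %s classified as %s (%d cases)\n', ...
        classes{r}, classes{c}, count);
```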


Model development – Part 4 (Data Augmentation)
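The augmented training datastore used in this part can be sketched as follows. This is a minimal sketch assuming Deep Learning Toolbox; the post only says images may be flipped left-right, translated, and scaled, so the specific ranges below are assumptions, and random in-memory images stand in for imdsTraining. With the real data, the datastore would be augmentedImageDatastore(inputSize(1:2), imdsTraining, 'DataAugmentation', augmenter).

```matlab
% Requires Deep Learning Toolbox. The ranges below are assumptions.
augmenter = imageDataAugmenter( ...
    'RandXReflection', true, ...          % random left-right flip
    'RandXTranslation', [-30 30], ...     % horizontal shift, in pixels
    'RandYTranslation', [-30 30], ...     % vertical shift, in pixels
    'RandXScale', [0.9 1.1], ...
    'RandYScale', [0.9 1.1]);

% Hypothetical in-memory images stand in for imdsTraining
X = rand(256, 256, 3, 4);
Y = categorical({'forest'; 'beach'; 'forest'; 'beach'});

% Resizing and random augmentation are both applied as batches are read
augds = augmentedImageDatastore([224 224], X, Y, 'DataAugmentation', augmenter);
batch = read(augds);
disp(size(batch.input{1}))
```

Because a fresh random transformation is drawn each time a batch is read, the network sees a slightly different version of every image in each epoch, without any extra images being stored.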

We employ data augmentation (covered in detail in my previous post [9]), specifying another augmentedImageDatastore (which uses images that may be randomly flipped left-right, translated, and scaled) as the data source for the trainNetwork function. The resulting classification accuracy and confusion matrix turn out to be almost identical to those obtained without data augmentation. This shouldn't come as a surprise: our analysis of the classification errors (such as the ones displayed in Fig. 7) suggests that the reasons our model's predictions are occasionally incorrect (wrong labels, ambiguous scenes, and "non-iconic" images) are not mitigated by showing the model additional variations (scaled, flipped, translated) of each image during training. This reinforces Andrew Ng's advice to invest time in human error analysis, tabulating the reasons behind the mistakes of a machine learning solution before deciding how best to improve it [10].

The complete code and images are available at the MATLAB File Exchange [11]. You can adapt it to use different pretrained CNNs, datasets, and/or model parameters and hyperparameters. If you do, drop us a note in the comments section telling us what you did and how well it worked.

To summarize, this blog post has shown how to use MATLAB and deep neural networks to perform scene classification on images from a publicly available dataset. The references below point to materials where you can learn more.

References

- [1] B. Zhou, A. Lapedriza, A. Khosla, A. Oliva, and A. Torralba, "Places: A 10 million Image Database for Scene Recognition", IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017. http://places2.csail.mit.edu/

- [2] A. Oliva, "Visual Scene Perception", http://olivalab.mit.edu/Papers/VisualScenePerception-EncycloPerception-Sage-Oliva2009.pdf

- [3] A. Oliva and A. Torralba, "Modeling the shape of the scene: a holistic representation of the spatial envelope", International Journal of Computer Vision, Vol. 42(3): 145-175, 2001. Paper, dataset, and MATLAB code available at: http://people.csail.mit.edu/torralba/code/spatialenvelope/

- [4] B. Zhou, A. Lapedriza, J. Xiao, A. Torralba, and A. Oliva, "Learning Deep Features for Scene Recognition using Places Database", NIPS 2014.

- [5] MathWorks. "googlenet: Pretrained GoogLeNet convolutional neural network". https://www.mathworks.com/help/deeplearning/ref/googlenet.html

- [6] MathWorks. Pretrained Places365GoogLeNet convolutional neural network (code) https://www.mathworks.com/matlabcentral/fileexchange/70987-deep-learning-toolboxtm-model-for-places365-googlenet-network

- [7] MathWorks. "Train Deep Learning Network to Classify New Images". https://www.mathworks.com/help/deeplearning/examples/train-deep-learning-network-to-classify-new-images.html

- [8] T.-Y. Lin et al., "Microsoft COCO: Common objects in context", European Conference on Computer Vision (ECCV), Springer, Cham, 2014.

- [9] O. Marques, "Data Augmentation for Image Classification Applications Using Deep Learning", https://blogs.mathworks.com/deep-learning/2019/08/22/data-augmentation-for-image-classification-applications-using-deep-learning/

- [10] A. Ng, "Machine Learning Yearning" https://www.deeplearning.ai/machine-learning-yearning/

- [11] Oge Marques (2019). Scene Classification Using Deep Learning (https://www.mathworks.com/matlabcentral/fileexchange/73333-scene-classification-using-deep-learning), MATLAB Central File Exchange.
