Accelerate Edge AI with the Hexagon Hardware Support Package

The following blog post is from Reed Axman, Strategic Partner Manager at MathWorks.

Deploying AI models to edge devices enables real-time data processing and decision-making without relying on constant cloud connectivity. Edge AI and embedded AI reduce latency and improve responsiveness for critical applications. Bringing AI models closer to the point of use enhances privacy and security by keeping sensitive data local, minimizing the risk of data breaches during transmission. Additionally, this approach reduces bandwidth usage and operational costs, making intelligent features more accessible in resource-constrained or remote environments.

However, Edge AI comes with inherent challenges, such as limited compute and power budgets and strict latency requirements. Neural processing units (NPUs) can help address these challenges by delivering fast, efficient neural network processing on-device. The Hexagon Hardware Support Package (HSP) from MathWorks supports the Qualcomm® Hexagon™ NPU, enabling integration and deployment of AI models.

This blog post covers what an NPU is, NPU applications and benefits, and how to install and set up the HSP. We'll also highlight the tools from Qualcomm integrated with the HSP and the provided optimizations.

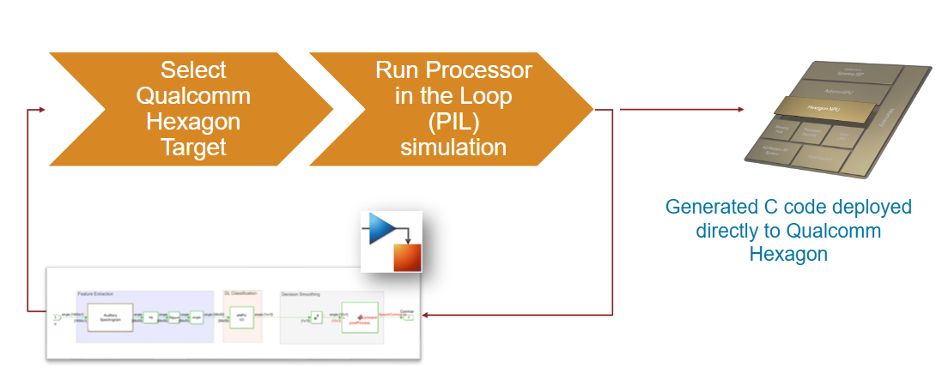

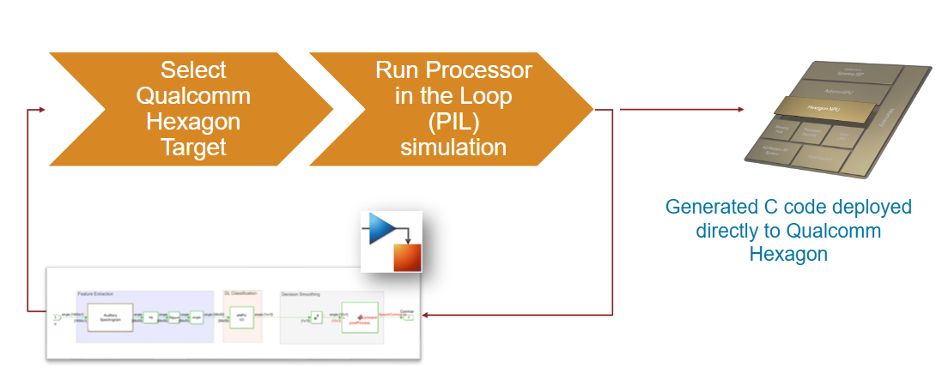

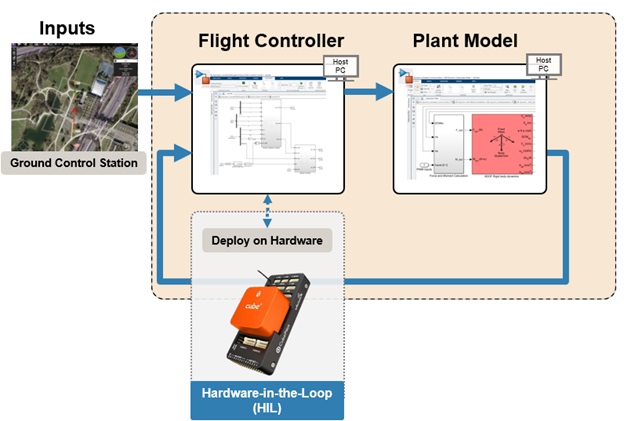

Figure 1: High-level workflow for deploying AI to the Hexagon NPU

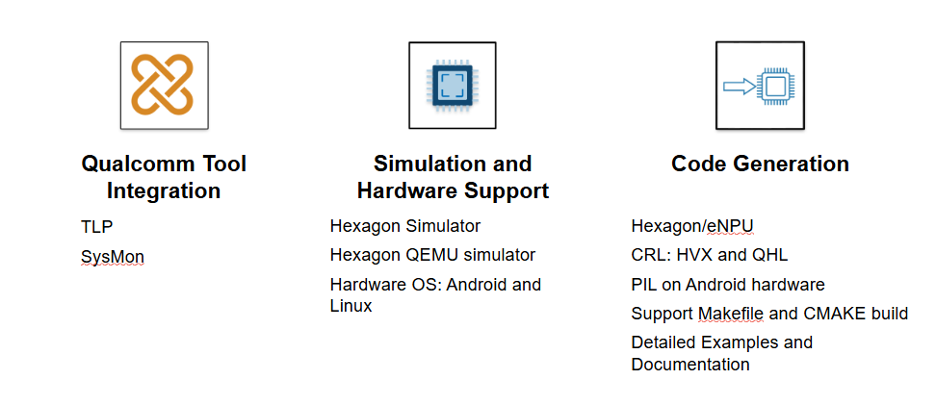

Figure 2: Tools integrated with the Qualcomm Hexagon Hardware Support Package

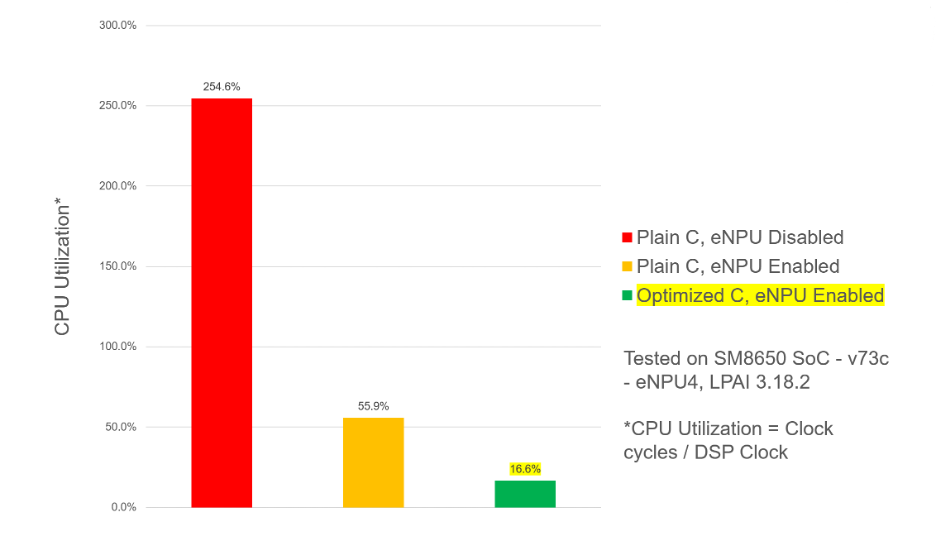

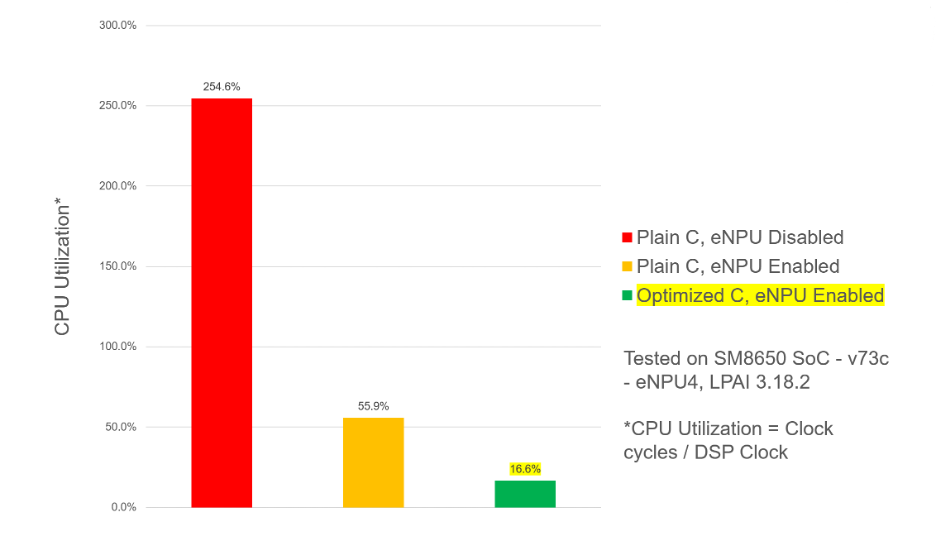

Figure 3: Optimizations provided significantly reduce resource utilization for algorithms deployed to Qualcomm Hexagon

Introduction to NPUs

What Is an NPU?

A Neural Processing Unit (NPU) is a hardware component designed to accelerate AI and machine learning tasks. It is part of a system-on-chip (SoC) architecture and is optimized for deep learning inference at the edge. More specifically, NPUs are optimized for highly parallel computational tasks such as matrix multiplications and convolutions, which are fundamental operations in deep neural networks.

Why Use an NPU?
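
To make the workload concrete: a convolution can be rewritten as a matrix multiplication, which is the kind of highly parallel multiply-accumulate pattern an NPU is optimized for. The sketch below is a plain-Python illustration only. It is not how the Hexagon NPU or the HSP implements convolution, and the function names (`conv2d_direct`, `im2col`, `conv2d_as_matmul`) are hypothetical names chosen for this example.

```python
def conv2d_direct(image, kernel):
    """Valid 2D cross-correlation (the 'convolution' used in deep learning)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def im2col(image, kh, kw):
    """Unroll every kh-by-kw patch of the image into one row of a matrix."""
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [image[r + i][c + j] for i in range(kh) for j in range(kw)]
        for r in range(out_h) for c in range(out_w)
    ]


def matvec(matrix, vector):
    """Matrix-vector product: one independent dot product per row."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def conv2d_as_matmul(image, kernel):
    """Same result as conv2d_direct, expressed as a single matrix product."""
    kh, kw = len(kernel), len(kernel[0])
    out_w = len(image[0]) - kw + 1
    patches = im2col(image, kh, kw)                   # (out_h*out_w) x (kh*kw)
    flat_kernel = [w for row in kernel for w in row]  # kh*kw weights
    flat_out = matvec(patches, flat_kernel)
    # Fold the flat result back into rows of the output feature map.
    return [flat_out[i:i + out_w] for i in range(0, len(flat_out), out_w)]


image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]
kernel = [[1, 0],
          [0, -1]]

# Both formulations produce the same feature map.
assert conv2d_direct(image, kernel) == conv2d_as_matmul(image, kernel)
```

Every row of the patch matrix can be processed independently of the others, which is why this formulation suits hardware that performs many multiply-accumulate operations at once.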

Using an NPU offers several advantages. NPUs handle AI workloads efficiently, providing faster inference times and lower latency. They consume less power than general-purpose processors, making them ideal for battery-powered devices. Additionally, NPUs can handle various AI models, from simple neural networks to complex deep learning architectures, offering scalability for different applications.

Hexagon NPU and Its Applications

The Qualcomm Hexagon NPU is built to mimic the layers and operations found in popular neural network models, including activation functions, convolutions, fully connected layers, and transformers. It is used in smart speakers to enhance voice command recognition, in smart cameras to improve image processing and object detection, in healthcare devices for real-time patient monitoring and diagnostics, and in automotive systems to support advanced driver assistance systems (ADAS) and autonomous driving.

NPU Hardware Support Package

Easy Installation and Setup of the HSP

The Hexagon Hardware Support Package is user-friendly and easy to install. Here’s how to get started:

- Download HSP: Visit mathworks.com/qualcomm or use the Add-On Explorer in MATLAB and download the Hexagon Hardware Support Package.

- Install HSP: Follow the instructions in the three-step installation wizard.

- Set Up Your Environment: Configure your hardware settings to target the Hexagon hardware or simulator for deployment.

- Deploy Your Model: Use MATLAB and Simulink to design, simulate, and deploy your AI models to the Hexagon NPU.

Figure 1 summarizes this workflow at a high level.

Integrated Tools from Qualcomm

The Hexagon Hardware Support Package integrates several tools from Qualcomm (Figure 2), including the Hexagon Simulator for testing and validating models in a simulated environment, the transaction layer package (TLP), and sysMon.

Optimization Benefits

The HSP leverages Qualcomm's code libraries to offer optimized performance for math and DSP operations, including scalar and vector code optimizations. The ability to take advantage of the NPU for AI acceleration also provides significant performance benefits. As Figure 3 shows, these optimizations significantly reduce resource utilization for algorithms deployed to Qualcomm Hexagon.
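
To illustrate the difference between scalar and vector code, here is a conceptual Python sketch of a dot product written both ways. It does not use or represent Qualcomm's libraries or the code the HSP generates. The lane count `LANES` and both function names are assumptions made for illustration, and Python still executes the inner lane loop sequentially, whereas vector hardware would process all lanes in one instruction.

```python
LANES = 4  # hypothetical vector width, chosen only for illustration


def dot_scalar(a, b):
    """Scalar form: one multiply-accumulate per loop iteration."""
    total = 0
    for x, y in zip(a, b):
        total += x * y
    return total


def dot_vectorized(a, b, lanes=LANES):
    """Vector form: each outer iteration models one multi-lane instruction."""
    acc = [0] * lanes                      # one partial sum per lane
    full = len(a) - len(a) % lanes         # portion divisible by the lane count
    for start in range(0, full, lanes):
        # On vector hardware these per-lane updates happen simultaneously.
        for lane in range(lanes):
            acc[lane] += a[start + lane] * b[start + lane]
    total = sum(acc)                       # horizontal reduction across lanes
    for i in range(full, len(a)):          # scalar tail for leftover elements
        total += a[i] * b[i]
    return total


a = list(range(10))
b = list(range(10, 20))

# Both forms compute the same result; only the number of steps differs.
assert dot_scalar(a, b) == dot_vectorized(a, b)
```

With 10 elements and 4 lanes, the vector form needs two multi-lane steps plus a two-element scalar tail, compared with ten scalar steps.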

Final Thoughts

The Hexagon Hardware Support Package from MathWorks is a tool for generating optimized code and simplifying deployment to the Qualcomm Hexagon NPU. With easy installation, integrated tools, and optimized performance, it enables developers to accelerate their AI development and bring applications to the edge. Explore further resources from MathWorks and Qualcomm to enhance your AI workflow and take your embedded AI applications to production.
