
I want to take a minute to highlight one of the apps in Deep Learning Toolbox: Deep Network Designer.

This app is useful for more than just building a network from scratch, and in R2019a it can also generate MATLAB code that creates the network programmatically! I want to walk through a few common uses for this app (and perhaps some not-so-common ones as well!).


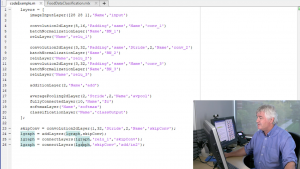

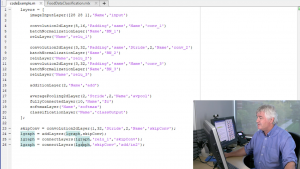

1. Building a network from scratch

Clearly this is one of the top benefits of Deep Network Designer, and there is a great introductory video on this topic. Click on the image to watch the short video.
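For comparison, here is a minimal sketch of defining a small network from scratch in code. It is similar in spirit to, but not copied from, the code the app can generate. The 28-by-28 grayscale input, the 10 classes, the layer sizes, and the layer names are all illustrative assumptions.

```matlab
% Minimal sketch: a small image-classification network defined as a layer array.
% Assumed (illustrative only): 28x28 grayscale inputs and 10 output classes.
layers = [
    imageInputLayer([28 28 1], 'Name', 'input')
    convolution2dLayer(3, 16, 'Padding', 'same', 'Name', 'conv_1')
    batchNormalizationLayer('Name', 'bn_1')
    reluLayer('Name', 'relu_1')
    maxPooling2dLayer(2, 'Stride', 2, 'Name', 'pool_1')
    fullyConnectedLayer(10, 'Name', 'fc')
    softmaxLayer('Name', 'softmax')
    classificationLayer('Name', 'output')];

% Optional: inspect the architecture interactively
% analyzeNetwork(layers)
```

Building the same stack by dragging layers in the app and comparing it with this array is a quick way to get comfortable with both workflows.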

2. Importing a pretrained network and modifying it

Another popular use for Deep Network Designer (DND) is to import a pretrained network and modify it. Another great intro video is here:


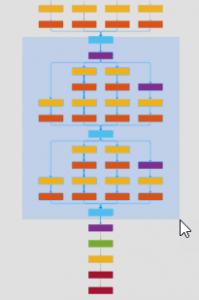

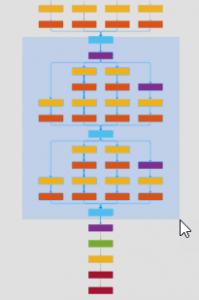

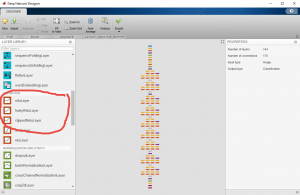
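If you prefer to script the same kind of change, the sketch below replaces the final layers of GoogLeNet for a new classification task. It assumes the GoogLeNet support package is installed and that the last layers are named 'loss3-classifier' and 'output' (verify the names in the app or with `analyzeNetwork(net)`). The class count, the new layer names, and the learn-rate factors are hypothetical choices.

```matlab
% Minimal sketch: adapt GoogLeNet to a new classification task in code.
% Assumes the GoogLeNet support package is installed and that the final
% fully connected and output layers are 'loss3-classifier' and 'output'.
net = googlenet;
lgraph = layerGraph(net);          % editable graph of the pretrained network

numClasses = 5;                    % hypothetical number of classes in the new data

% Replace the final fully connected layer so it outputs numClasses scores;
% larger learn-rate factors let the new layer learn faster than the rest.
newFc = fullyConnectedLayer(numClasses, 'Name', 'new_fc', ...
    'WeightLearnRateFactor', 10, 'BiasLearnRateFactor', 10);
lgraph = replaceLayer(lgraph, 'loss3-classifier', newFc);

% Replace the classification output layer; its classes are set during training
lgraph = replaceLayer(lgraph, 'output', classificationLayer('Name', 'new_output'));
```

The app does the equivalent edit visually: drag in a new layer, drop it onto the old one, and reconnect.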

3. Visualizing and describing network architectures

Riddle me this: what type of network is this?

```matlab
net = googlenet;   % requires the GoogLeNet support package
net.Layers         % list the layers in the Command Window
```

Series? DAG?

How about when I show you this portion of the network in the app?


You can quickly see that this is definitely not a series network. (It's a DAG, or directed acyclic graph, network!)

The app lets you quickly visualize the network and see which layers are connected to each other. This is helpful whether you want a deeper understanding of the network or simply a high-level overview of its structure.

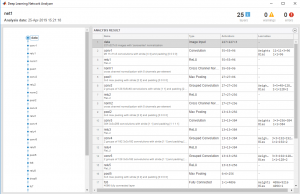

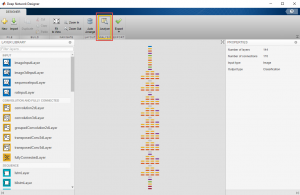

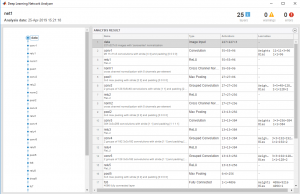
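You can also answer the riddle from the command line. The sketch below assumes the GoogLeNet support package is installed; it checks the network's class and then uses the `Connections` table to find layers that receive more than one input, which is where parallel branches merge. The port-suffix handling (`/in1`, `/in2`, ...) reflects how multi-input destinations are listed in that table.

```matlab
% Minimal sketch: check programmatically whether a network is series or DAG.
% Assumes the GoogLeNet support package is installed.
net = googlenet;
disp(class(net))   % a DAG network is a DAGNetwork object, not a SeriesNetwork

% Connections lists every edge as Source -> Destination. Inputs of multi-input
% layers carry a port suffix such as '/in2', so strip it before counting.
dest = regexprep(net.Connections.Destination, '/in\d+$', '');
[layerNames, ~, idx] = unique(dest);
fanIn = accumarray(idx, 1);        % number of incoming connections per layer

% Layers with more than one incoming connection are where branches merge
mergeLayers = layerNames(fanIn > 1);
fprintf('%d layers merge multiple branches, for example %s\n', ...
    numel(mergeLayers), mergeLayers{1});
```

A series network has no such merge points, so this list would be empty for one.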

4. What went wrong?

Though it is not technically part of Deep Network Designer*, the app has a button that launches the Network Analyzer. Occasionally I get a question such as "Why is my network erroring out?", and I always start the investigation with the analyzer. It checks the network for problems before you start training, so you can catch them without the not-so-attractive red error message in the Command Window.

*You can also open the Network Analyzer programmatically:

```matlab
analyzeNetwork(net);
```

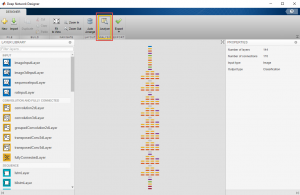

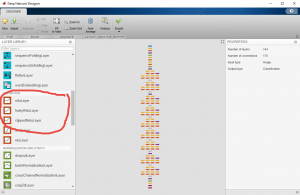
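To see the analyzer catch a problem before training, here is a minimal sketch with a deliberately broken layer array. The bug is a hypothetical one chosen for illustration: the 8-by-8 pooling window is larger than the 4-by-4 feature map that reaches it. The exact wording of the analyzer's message depends on the release, so none is reproduced here.

```matlab
% Minimal sketch: a deliberately broken layer array to inspect with analyzeNetwork.
% Hypothetical bug: the pooling window (8x8) is larger than its 4x4 input.
layers = [
    imageInputLayer([4 4 1], 'Name', 'input')
    convolution2dLayer(3, 8, 'Padding', 'same', 'Name', 'conv')   % output stays 4x4
    reluLayer('Name', 'relu')
    maxPooling2dLayer(8, 'Name', 'pool')      % bug: pool size exceeds input size
    fullyConnectedLayer(2, 'Name', 'fc')
    softmaxLayer('Name', 'softmax')
    classificationLayer('Name', 'output')];

% Opens the Network Analyzer, which should flag the pooling layer
% without any training being attempted.
analyzeNetwork(layers)
```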

5. Learning more about deep learning

This isn't an official benefit of the app, but I'll mention it because it has helped me. Before I started using the app, I wouldn't have been able to tell you anything about leaky ReLU layers, since I didn't know they existed!


By browsing all the different layers listed on the left**, I can pinpoint new layers I may want to learn about, then go to the documentation to learn more! For example, a leaky ReLU layer multiplies any input value less than zero by a fixed scalar, whereas a regular ReLU layer sets anything less than zero to zero.

**If you are looking for every layer available in MATLAB, here is a link to the list of all layers in the documentation.
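The difference between the two activations is easiest to see on a few numbers. This minimal sketch uses a scale of 0.01, an assumed value that also happens to be the default `Scale` of `leakyReluLayer`; the sample inputs are arbitrary. Only the last line needs Deep Learning Toolbox.

```matlab
% Minimal sketch: compare ReLU and leaky ReLU on a few sample values.
% The scale 0.01 is an assumption (it matches leakyReluLayer's default Scale).
x = [-2 -0.5 0 1 3];
scale = 0.01;

reluOut  = max(x, 0);                      % negatives become 0
leakyOut = max(x, 0) + scale * min(x, 0);  % negatives are multiplied by scale

disp(reluOut)    % values: 0, 0, 0, 1, 3
disp(leakyOut)   % values: -0.02, -0.005, 0, 1, 3

% The corresponding Deep Learning Toolbox layer
layer = leakyReluLayer(scale, 'Name', 'leaky_relu');
```

Positive inputs pass through unchanged in both cases; only the treatment of negative inputs differs.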

Hopefully you feel compelled to try the app right now. As always, you can get a free trial of all our deep learning tools to try the latest functionality: Link to Trial


Do you like this app, or like another app better? Let me know in the comments below.
