Explainable AI

Early in June, I was fortunate to be invited to the MathWorks Research Summit for a deep learning discussion on “Explainable AI,” led by Heather Gorr (https://github.com/hgorr), with a diverse group of researchers, practitioners, and industry experts. I would like to recap some of the discussions and provide a place to keep the conversation going with a larger audience.

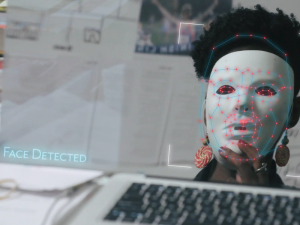

Heather began with a great overview and a definition of Explainable AI to set the tone of the conversation: “You want to understand why AI came to a certain decision, which can have far-reaching applications, from credit scores to autonomous driving.”
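As a concrete illustration of understanding “why AI came to a certain decision,” here is a minimal sketch of one simple explanation technique, occlusion-style perturbation: replace one input feature at a time with a baseline value and measure how much the prediction moves. The model, feature names, weights, and baseline are all hypothetical, chosen to echo the credit-score example; the technique is not drawn from the discussion itself.

```python
import math

def predict(features, weights, bias):
    """Toy logistic model returning a probability (hypothetical stand-in
    for a real credit-scoring model)."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))

def occlusion_attribution(features, weights, bias, baseline=0.0):
    """For each feature, replace it with `baseline`, re-run the model, and
    report how much the prediction drops. Positive values mean the feature
    pushed the score up; negative values mean it pulled the score down."""
    full = predict(features, weights, bias)
    attributions = []
    for i in range(len(features)):
        occluded = list(features)
        occluded[i] = baseline
        attributions.append(full - predict(occluded, weights, bias))
    return full, attributions

# Hypothetical applicant and made-up weights, for illustration only.
names = ["income", "debt_ratio", "late_payments"]
weights = [1.5, -2.0, -0.8]
bias = 0.2
applicant = [1.2, 0.5, 2.0]

prob, attr = occlusion_attribution(applicant, weights, bias)
print(f"approval probability: {prob:.3f}")
# List features from most to least influential.
for name, a in sorted(zip(names, attr), key=lambda pair: -abs(pair[1])):
    print(f"{name:>14}: {a:+.3f}")
```

With these made-up numbers, `late_payments` has the largest effect, pulling the probability down the most. The baseline is a design choice: occluding with 0.0 assumes zero is a meaningful neutral value, which is often not true for raw, unscaled features.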

What followed from the panel and audience was a series of questions, thoughts, and themes:

What does explainable mean?

Explainability may have many meanings. By definition, an “explanation” has to do with reasoning and making that reasoning explicit. But there are other ways to think about this term:

- Explainable could mean interpretable.

  - In fact, interpretability may be even more important than explainability: if a device gives an explanation, can we interpret it in the context of what we are trying to achieve? For example, a mathematical formula or a decision in symbolic form assumes the user knows what those formulas and symbols mean.

- Another key term is abstraction: how much of an explanation do we need?

  - If, for example, you’re using a neural network, do you need to understand what’s happening at the output of each node? Of each layer? At the classification layer?

  - Who is your audience: a manager, an engineer, or an end user? If you are an engineer justifying how to spend company budget, you need to explain your reasoning at a level a manager can follow. A conversation between two scientists is very different from one between a scientist and someone from an unrelated field.

- Explainability is about needing a "model" to verify what you develop. To work together and maintain trust, the human needs a "model" of what the computer is doing, the same way the computer needs a "model" of what the human is doing.

  - Models need understanding too

    - The challenge is that people don’t understand the system, and the system doesn’t understand the people.

    - We need models that the system can use to understand the human and explain things to them. We also need models in the human’s head of what the system does.

    - An example is a service robot navigating a space under constraints such as safety (not running into people), battery life, and a planned path. When something goes wrong, the robot could explain its Markov model, but that means nothing to an end user walking by.

    - Instead of a Markov model, you may want the computer to give you human-readable output. This can come at a cost: it may slow the system down, or make it more expensive to build because the output has to be presented in a UI.

  - Ask questions: you may want to ask the system certain questions to verify its results:

    - “What are you trying to do? What is your goal?”

    - “Why did you make this decision?”

    - “What were reasonable alternatives, and why were they rejected?”

- Risk vs. confidence: if I’m confident in the results, how likely am I to want to see the explanation? We weigh risk against confidence in everyday life. With mapping software such as Google Maps, for example, we don’t necessarily know why the algorithm routes people one way or another. Book recommendations are another low-risk prediction: what’s the worst that happens if the recommender system is wrong?

  - If a neural network works 100% of the time with 100% confidence, do we really care about explainability?

- Discussions about explainability will vary immensely from industry to industry.

  - Finance, aerospace, and autonomous driving will all have different requirements.

  - Safety is more important than explainability. Does one lead to the other?

- Concerning higher education: if we do not address explainability in AI, we will end up educating PhD students who only know how to train neural networks blindly, without any idea why they work (or why they don’t).

- In certain applications, especially safety-critical ones, part of the validation process will be people trying to break the system.

  - This can include test data such as fake inputs that are known to confuse a system into giving incorrect results.

  - Testing networks: what if a model is presented with something completely foreign that was not in the original dataset? How will the system respond?

- How does a system “unlearn” wrong decisions? As humans, we can say, “That decision no longer works for me; my inherent decision making isn’t working.”

  - Unlearning is a hard problem for both humans and machines. How can you make your AI unlearn something if you don’t know why or how it learned it in the first place?

  - One area where a network may have an advantage over a human is muscle memory. For example, American pedestrians instinctively learn which way to look first before crossing the street, a habit that can work against them in countries where traffic drives on the other side of the road. Networks do not have this “muscle memory” and can be trained to learn the rules for a particular region of the world.

- Can we use adversarial networks to keep a model from learning certain aspects of an image?

- Networks should be well defined for a task and for what they expect to encounter. If we keep our networks transparent (both the training data and the network architecture) and scoped to a well-defined problem, this may help eliminate errors.
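Test inputs crafted to confuse a system, as described in the discussion, are commonly generated with adversarial-example techniques. Below is a minimal sketch of one well-known technique, the fast gradient sign method (FGSM), applied to a toy logistic classifier rather than a deep network. The weights, input, and perturbation size `epsilon` are hypothetical; for a real network the input gradient would come from the framework’s automatic differentiation instead of the closed-form expression used here.

```python
import math

def predict(x, weights, bias):
    """Toy logistic classifier: probability that x belongs to class 1."""
    z = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    """Return 1, 0, or -1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def fgsm(x, label, weights, bias, epsilon):
    """Fast gradient sign method: nudge every input feature by `epsilon`
    in the direction that increases the model's loss.

    For logistic regression with binary cross-entropy loss, the gradient of
    the loss with respect to input feature i is (p - label) * w_i."""
    p = predict(x, weights, bias)
    grad = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical model and input, for illustration only.
weights = [2.0, -3.0, 1.0]
bias = 0.0
x = [0.5, 0.1, 0.2]  # correctly classified as class 1
label = 1

x_adv = fgsm(x, label, weights, bias, epsilon=0.3)
print(f"clean input:     p(class 1) = {predict(x, weights, bias):.3f}")
print(f"perturbed input: p(class 1) = {predict(x_adv, weights, bias):.3f}")
```

With these numbers, shifting each feature by only 0.3 moves the predicted probability of class 1 from about 0.71 to about 0.29, flipping the decision. Checking how easily a small, targeted change flips a prediction is one way to probe whether a model’s confidence deserves trust.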
