I’m pleased to be writing about a new exhibition at the Science Museum, London, featuring MATLAB for deep learning as applied to autonomous vehicles. The exhibition, “Driverless: Who is in Control?” (https://www.sciencemuseum.org.uk/see-and-do/driverless-who-is-in-control), explores the presence of AI systems in our everyday lives, how much control we’re willing to transfer to them, and how they might shape our behavior and society. It features multiple exhibits that let visitors experience the AI technology involved in creating autonomous vehicles, including cars, drones, and boats. The exhibition covers not only current technology but also past and future AI innovations.

MathWorks is the principal sponsor of the exhibition and is thrilled to be involved with this project to educate and inspire visitors about autonomous technology and deep learning applications.

The Exhibition

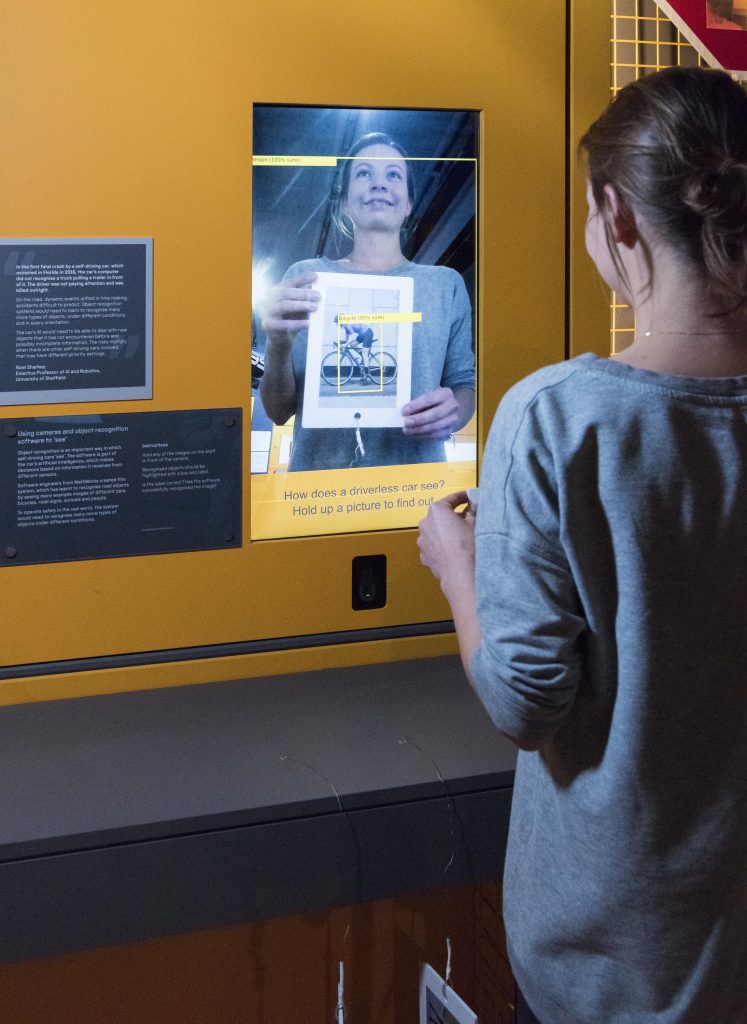

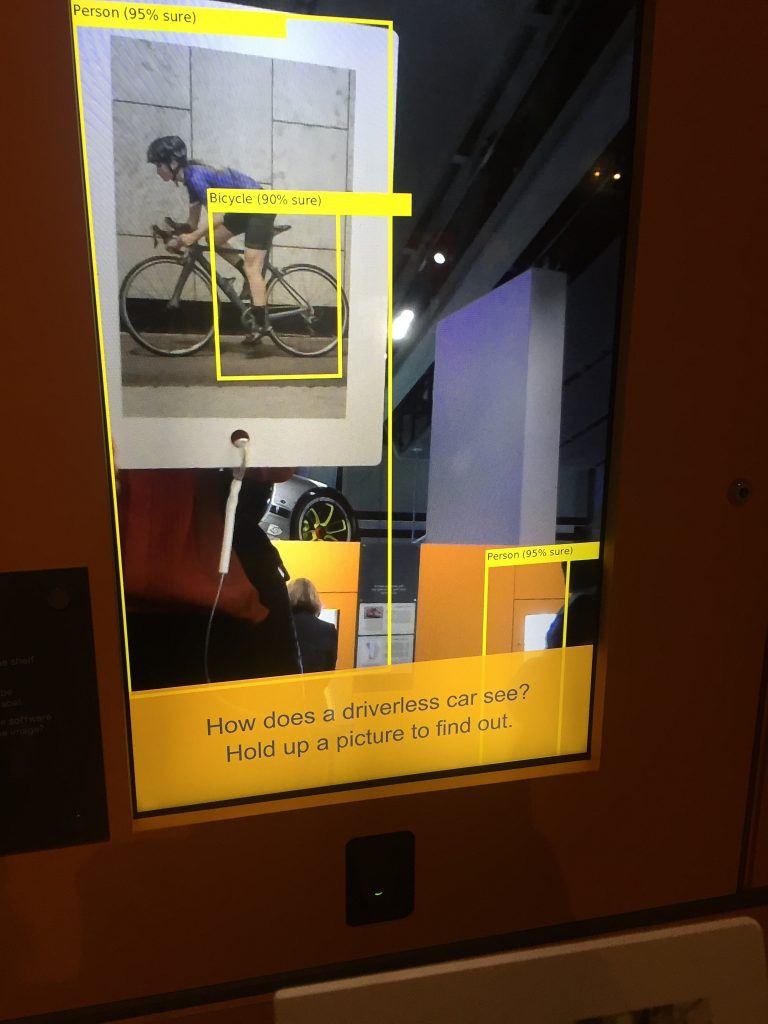

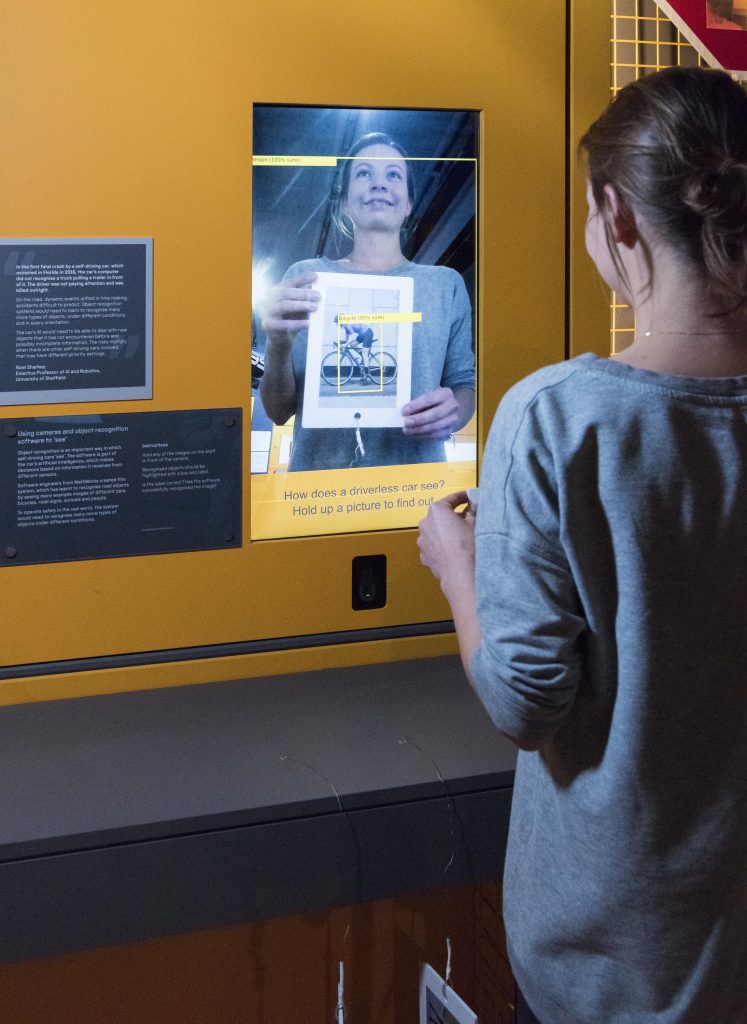

MathWorks' interactive exhibit, developed with MATLAB, focuses on the perception system of an autonomous vehicle: using deep learning to detect objects as they appear. A visitor can walk up to the station, show the camera a picture (sample images are provided at the venue), and see what the system identifies and how confident the algorithm is in its prediction.

Showing object detection with MATLAB. Courtesy Science Museum Group

The Code

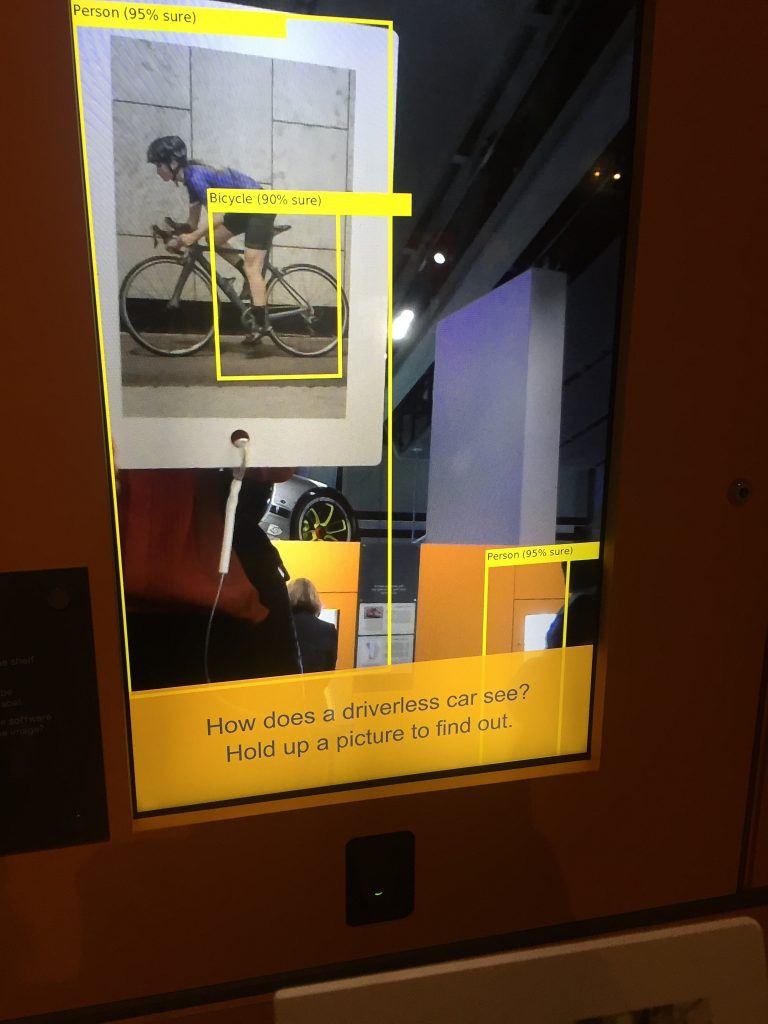

The exhibit is written in MATLAB, of course. The team used a YOLO v2 object detector trained to identify over 20 kinds of objects, including bicycles, cars, and motorcycles, along with a few other categories such as dogs, horses and sheep! Visitors at the museum can hold up objects to be identified, or even use their own images from their mobile device.

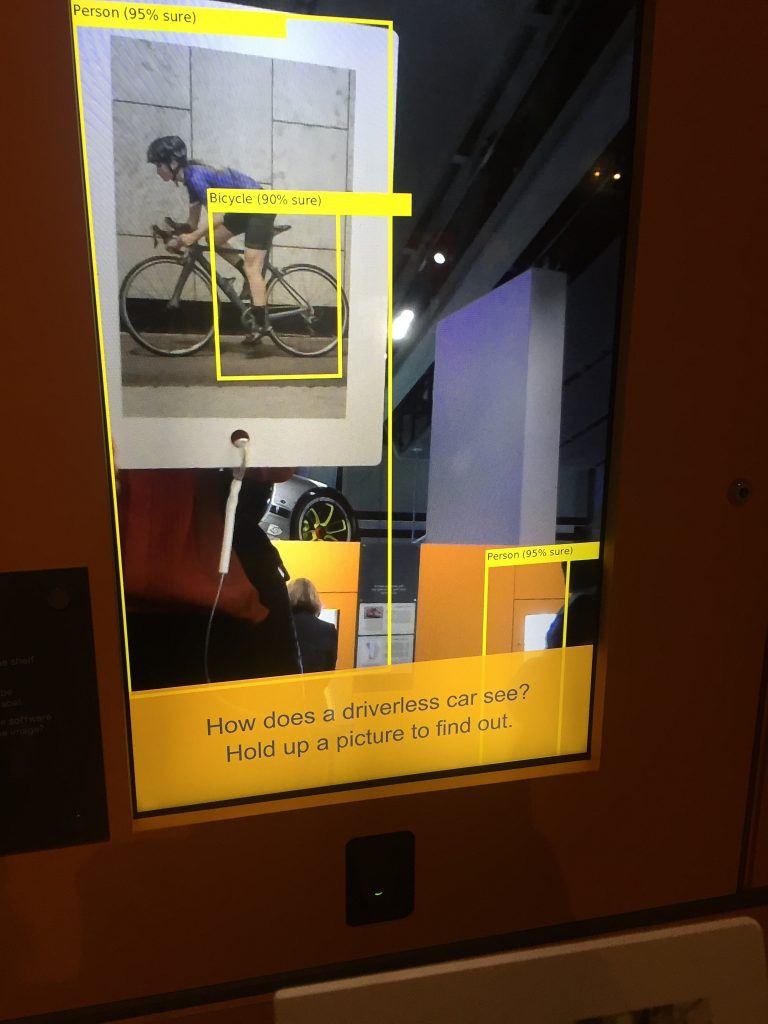

Close up of Object Detection with MATLAB. Courtesy Science Museum Group

Here is a sample of code used to predict objects and visualize bounding boxes:

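```matlab
I = snapshot(camera);                    % grab a frame from the camera
[boxes, scores] = detect(detector, I);   % run the YOLO v2 detector on the frame
I = insertShape(I, 'rectangle', boxes);  % draw a rectangle for each detection
imshow(I);                               % display the annotated frame
```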

Finally, here is the algorithm running:

Showing Object Detection in action: courtesy of Science Museum Group

Demo Constraints and User Considerations

In addition to meeting the algorithmic constraints and verifying the accuracy of the object detection network, the team also had to consider the conditions in which this demo would be shown:

- The algorithm must run 8 hours per day, including automatically booting the demo when the museum opens every morning.

- There were interesting considerations having to do with usability:

  - The average height of the visitors: Both children and adults would want to interact with the display simultaneously, so the team decided to orient the monitors vertically to accommodate a wide range of heights.

  - How do you describe the prediction’s confidence to a person not familiar with deep learning techniques? With help from the Science Museum’s staff, the team decided not to use the word “confidence.” Instead, the word “sure” (as shown in the images above) follows the percentage. “99% sure” is a phrase a non-technical audience easily understands.
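The “99% sure” wording can be illustrated with a few lines of MATLAB. This is a minimal sketch, not the exhibit's actual code: the variable names `labels`, `scores`, and `captions` and the example values are assumptions made for illustration.

```matlab
% Hypothetical example: turn detector labels and scores into
% visitor-friendly captions such as "dog: 99% sure".
labels = ["dog"; "bicycle"];   % example class names (assumed values)
scores = [0.991; 0.874];       % example confidence scores in [0, 1]

captions = strings(numel(scores), 1);
for k = 1:numel(scores)
    % Round the score to a whole percentage and append the word "sure".
    captions(k) = sprintf("%s: %d%% sure", labels(k), round(100 * scores(k)));
end
disp(captions)
```

In a live demo, the labels and scores would presumably come from the detector's `detect` call, and captions like these could be drawn next to each box with a function such as `insertObjectAnnotation`.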

Q&A with MATLAB Team

1. How would a vision system such as the one you are demonstrating be incorporated into a driverless car?

A vision system like this would be one of the ways in which a driverless car detects its surroundings. The car will use information from a variety of sources (cameras, sensors, GPS, online communications) to gather information about what is happening around it and make decisions about what to do next. For example, the car might use GPS to determine it needs to turn left, and use the vision system to determine when it is safe to do so.

2. What's next?

There are many active areas of research for autonomous driving. One of the ideas here is that we use our hands, feet and eyes to drive a car, and the further autonomous technology progresses, the fewer of these we need. It turns out that hands, feet and eyes are still considered important in semi-autonomous cars, like some vehicles on the road today, but less important in fully autonomous cars, which have yet to be fully built. (Here is a paper for more information.)

3. Were there any specific features in Deep Learning Toolbox that you found useful for this project?

We used the YOLO v2 object detector network in Computer Vision Toolbox to create the vision system. The YOLO detector is very fast at object detection because of the way it divides the vision region into a grid of anchor boxes. The network looks for a single object in each anchor box. If it finds one with a good enough confidence, the size and shape of the anchor box are a good first estimate for the bounding box of the object. The final output bounding box is refined from the anchor box based on where the center of the object is and how much of the object is within that anchor box. There are a few different sizes and aspect ratios of anchor boxes, depending on the size and shape of the objects your network is trained to detect.
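To make the refinement step concrete, here is a minimal sketch of how one anchor box could be turned into a bounding box. It follows the box parameterization from the published YOLO v2 formulation (sigmoid offsets for the center, exponential scaling for the size); the toolbox's internal implementation may differ, and every number below (grid size, image size, anchor shape, raw outputs) is an assumed example value rather than anything taken from the exhibit.

```matlab
% Sketch of YOLO v2 box decoding for one grid cell and one anchor box.
% All values are made-up examples, not outputs of the exhibit's network.
sigmoid = @(x) 1 ./ (1 + exp(-x));

gridSize  = 13;                    % assumed 13-by-13 grid over the image
imageSize = 416;                   % assumed square input image, in pixels
cellSize  = imageSize / gridSize;  % pixels per grid cell (32 here)

cx = 6;  cy = 4;                   % zero-based column and row of the grid cell
anchor = [3.0 2.0];                % anchor [width height], in grid-cell units
t = [0 0 0 0];                     % raw network outputs [tx ty tw th]

% Center: the sigmoid keeps the predicted center inside its own grid cell.
bx = (cx + sigmoid(t(1))) * cellSize;
by = (cy + sigmoid(t(2))) * cellSize;

% Size: the anchor's width and height are scaled by an exponential factor.
bw = anchor(1) * exp(t(3)) * cellSize;
bh = anchor(2) * exp(t(4)) * cellSize;

% Convert center/size to [x y width height] measured from the top-left corner.
box = [bx - bw/2, by - bh/2, bw, bh];
disp(box)
```

With all raw outputs at zero, the box is simply the anchor centered in its grid cell; nonzero outputs shift the center within the cell and stretch or shrink the anchor.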

4. Any thoughts on the limitations of deep learning for autonomous vehicles?

Thought #1: To be a safe and reliable technology, a fully autonomous driving system needs to work in many different situations and environments, such as different terrains, different times of day, and different traffic conditions. This requires a large amount of data covering all possible scenarios, because an autonomous driving system can’t reliably infer what to do in a totally new situation in the same way a human would be able to. We need to help the system by training it with data from lots and lots of real-world situations, so that it can make the right decisions. To do this, the data needs to be good quality, properly labelled and balanced to prevent biases against certain situations or populations. The quality of the data directly impacts the quality of the driving system.

There are also lots of ethical issues involved in training a system that could potentially be dangerous to other vehicles and pedestrians. When an accident happens, there may be conflicting actions that a vehicle could take, and the autonomous driving system will have to weigh up the options in accordance with some moral principles that need to be coded into the system. Deciding on those principles will need a lot of thought and debate.

As the technology progresses, vehicles reach higher levels of autonomy, but each level brings its own concerns, especially the levels where the driver still needs to be in the loop.

The handover, the point where the car gives control to the driver or vice versa, is a critical transition that needs to be smooth and safe until we have fully autonomous cars. Reasons for a handover can be comfort, car sensor degradation or simply reaching the limits of the car’s capabilities. People have thought of ways of facilitating this, using either ambient car displays or dialogue systems.

You can read these related articles on this topic:

5. Do you feel mostly positive, negative, or neutral towards autonomous technology?

I don’t currently drive, so I’m looking forward to the point when autonomous cars are on the road! At the moment, I do have some concerns about safety, but I think that with good technology, good data, and good cooperation between the creators of driving systems, we’ll be able to develop safe autonomous driving systems. In some cases, these could be safer than humans, as they won’t get distracted or tired, and will have faster reaction times. I think there’s still a lot of work and discussion needed to get to that point, but from here it looks very promising.

Thanks to the team for taking the time to speak with me about this interesting project. The exhibition opened June 12th and will run until October 2020. If you’re in London, you should check it out!

Have a question for the team? Leave a comment below!

