Explainable AI (XAI): Are we there yet?

This is the second post in a 3-post series on Explainable AI (XAI). The first post highlighted examples and offered practical advice on how and when to use XAI techniques for computer vision tasks. In this post, we expand the discussion by offering advice on some of the limitations and challenges of XAI that you might encounter along the path to XAI adoption.

At this point, you might have heard about Explainable AI and want to explore XAI techniques in your work. But along the way you might also have wondered: Just how well do XAI techniques work? And what should you do if the results of XAI aren’t as compelling as you’d like? Below, we explore some of the questions surrounding XAI with a healthy dose of skepticism.

Are expectations too high?

XAI, like many subfields of AI, can be subject to some of the hype, fallacies, and misplaced hopes associated with AI at large, including the potential for unrealistic expectations. Terms such as explainable and explanation risk falling under the fallacy of matching AI developments with human abilities (the 2021 paper Why AI is Harder Than We Think brilliantly describes four similar fallacies in AI assumptions). This fallacy leads to the unrealistic expectation that we are approaching a point in time where AI solutions will not only achieve great feats and eventually surpass human intelligence, but will also be able to explain how and why they did what they did, thereby increasing the level of trust in AI decisions. Once we become aware of this fallacy, it is legitimate to ask ourselves: How much can we realistically expect from XAI? In this post we will discuss whether our expectations for XAI (and the value XAI techniques might add to our work) are too high and focus on answering three main questions:

- Can XAI become a proxy for trust(worthiness)?

- What limitations of XAI techniques should we be aware of?

- Can consistency across XAI techniques improve user experience?

1. Can XAI become a proxy for trust(worthiness)?

It is natural to associate an AI solution’s “ability to explain itself” with the degree of trust humans place in that solution. However, should explainability translate to trustworthiness? XAI, despite its usefulness, falls short of ensuring trust in AI for at least three reasons:

A. The premise that AI algorithms must be able to explain their decisions to humans can be problematic. Humans cannot always explain their own decision-making process, and even when they do, their explanations might not be reliable or consistent.

B. Having complete trust in AI involves trusting not only the model, but also the training data, the team that created the model, and the entire software ecosystem. To ensure consistency between models and their outcomes, best practices and conventions for data standards, workflow standards, and technical subject-matter expertise must be followed.

C. Trust develops over time, but in an age when early adopters want models as fast as they can be produced, models are sometimes released to the public early, with the expectation that they will be continuously updated and improved in later releases, which might not happen. In many applications, adopting AI models that stand the test of time by consistently delivering reliable performance and correct results might be good enough, even without any XAI capabilities.

2. What limitations of XAI techniques should we be aware of?

In the fields of image analysis and computer vision, a common interface for showing the results of XAI techniques consists of overlaying the “explanation” (usually in the form of a heatmap or saliency map) on top of the image. This can help determine which areas of the image the model deemed most relevant in its decision-making process. It can also help diagnose blunders in which a deep learning model produces seemingly correct results while, in reality, looking in the wrong place. A classic example is the husky vs. wolf image classifier that turned out to be a snow detector (Figure 1).

A. XAI techniques can sometimes produce similar explanations for correct and incorrect decisions.

For example, in the previous post in this series, we showed that the heatmaps produced by the gradCAM function provided similarly convincing explanations (in this case, a focus on the dog’s head) both when the prediction was correct (Figure 2, top) and when it was incorrect (Figure 2, bottom, where a Labrador retriever was mistakenly identified as a beagle).

B. Even when XAI techniques show that a model is not looking in the right place, it is not necessarily easy to know how to fix the underlying problem.

In simpler cases, such as the husky vs. wolf classification mentioned earlier, a quick visual inspection of model errors could have helped identify the spurious correlation between “presence of snow” and “images of wolves.” There is no guarantee that the same process would work for other (larger or more complex) tasks.

C. Results are model- and task-dependent.

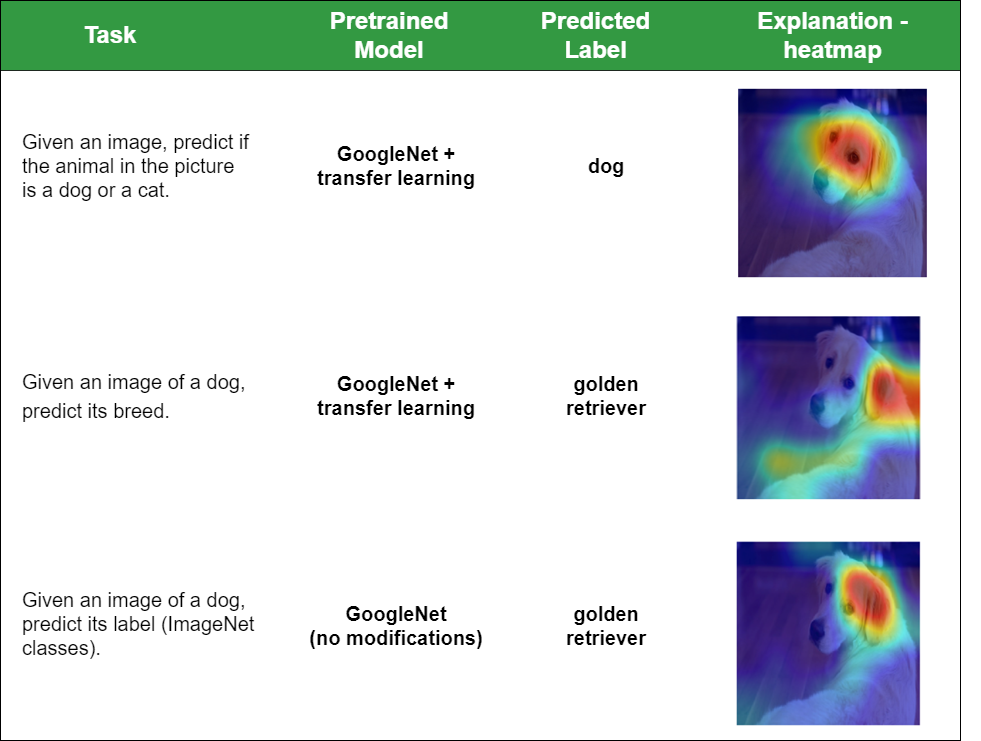

In our previous post in this series, we also showed that the heatmaps produced by the gradCAM function in MATLAB provided different visual explanations for the same image (Figure 3), depending on the pretrained model and task. Figure 4 shows those two examples and adds a third, in which the same network (GoogLeNet) was used without modification. A quick visual inspection of Figure 4 is enough to spot significant differences among the three heatmaps.

Figure 3: Test image for different image classification tasks and models (shown in Figure 4).

Figure 4: Using gradCAM on the same test image (Figure 3), but for different image classification tasks and models.
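Part of the reason these results are model- and task-dependent is visible in how Grad-CAM is computed: the map is built from one layer’s activations and from the gradients of one class score with respect to those activations. The NumPy sketch below shows that core computation in the standard Grad-CAM formulation; it is not MATLAB’s gradCAM implementation. It assumes you have already extracted a convolutional layer’s feature maps and the matching gradients. The tiny arrays and the two “tasks” are made up for illustration, and the usual upsampling of the map to image size is omitted. Keeping the feature maps fixed and changing only the gradients, as a different classification head or task would, changes the resulting map, and a Pearson correlation gives a rough number for how much.

```python
import numpy as np


def grad_cam(feature_maps: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Compute a Grad-CAM map from one layer's activations and gradients.

    feature_maps: shape (K, H, W), activations A^k of a convolutional layer.
    gradients:    shape (K, H, W), gradient of the class score w.r.t. A^k.
    Returns an (H, W) map scaled to [0, 1].
    """
    # Channel importance weights: global-average-pool the gradients over H and W.
    weights = gradients.mean(axis=(1, 2))  # shape (K,)
    # Weighted sum of the feature maps; ReLU keeps only positive evidence.
    cam = np.maximum(np.tensordot(weights, feature_maps, axes=1), 0.0)
    # Scale to [0, 1] for display, guarding against an all-zero map.
    peak = cam.max()
    return cam / peak if peak > 0 else cam


def pearson(map_a: np.ndarray, map_b: np.ndarray) -> float:
    """Pearson correlation between two same-sized maps (1.0 = same spatial pattern)."""
    a = map_a.ravel() - map_a.mean()
    b = map_b.ravel() - map_b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else float("nan")


# Made-up 2-channel, 2x2 feature maps shared by two hypothetical classifiers.
features = np.array([
    [[1.0, 0.0], [0.0, 0.0]],  # channel 0 fires top-left
    [[0.0, 0.0], [0.0, 1.0]],  # channel 1 fires bottom-right
])
# Hypothetical gradients from two different class scores (e.g. two task heads).
grads_task_a = np.ones((2, 2, 2))                             # both channels help
grads_task_b = np.stack([-np.ones((2, 2)), np.ones((2, 2))])  # channel 0 hurts

cam_a = grad_cam(features, grads_task_a)
cam_b = grad_cam(features, grads_task_b)
r = pearson(cam_a, cam_b)
print(round(r, 3))  # 0.577
```

Both maps come from identical features, yet task B’s map drops the top-left region entirely. A low correlation is only a rough signal of disagreement; it says nothing about which map, if either, is the better explanation.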

3. Can consistency across XAI techniques improve user experience?

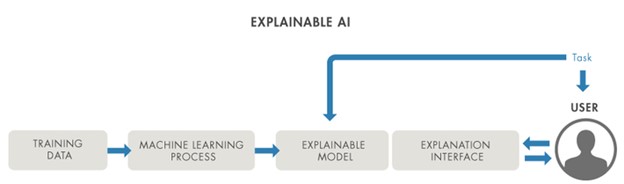

In order to understand the why and how behind an AI model’s decisions and gain better insight into its successes and failures, an explainable model should be capable of explaining itself to a human user through some type of explanation interface (Figure 5). Ideally, this interface should be rich, interactive, intuitive, and appropriate for the user and task.

Figure 5: Usability (UI/UX) aspects of XAI: an explainable model requires a suitable interface.

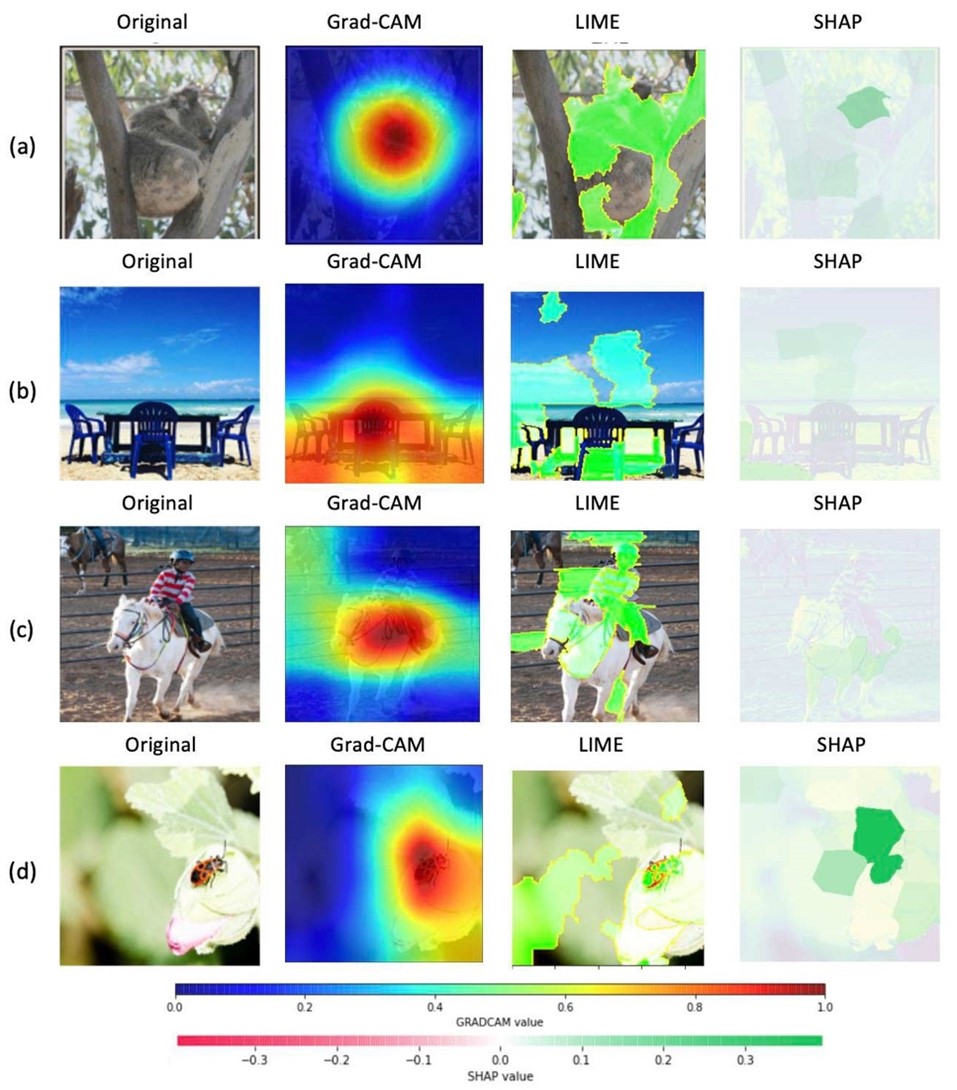

In visual AI tasks, comparing the results produced by two or more methods can be problematic, since the most commonly used post-hoc XAI methods use significantly different explanation interfaces (that is, visualization schemes) in their implementation (Figure 6):

- CAM (Class Activation Maps), including Grad-CAM, and occlusion sensitivity use a heatmap to correlate hot colors with salient/relevant portions of the image.

- LIME (Local Interpretable Model-Agnostic Explanations) generates superpixels, which are typically shown as highlighted pixels outlined in different pseudo-colors.

- SHAP (SHapley Additive exPlanations) values are used to separate pixels into those that increase and those that decrease the probability of a class being predicted.
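SHAP builds on Shapley values from cooperative game theory: each region’s attribution is its weighted average marginal contribution to the model’s output over all subsets of the other regions, so it can be negative (the region pushes the prediction down) as well as positive. The sketch below computes exact Shapley values by brute force for a hypothetical scoring function over four image regions (superpixels), assuming that masking a region simply removes its contribution to the score. It is not the shap library’s API; practical SHAP explainers approximate these values because exact enumeration grows exponentially with the number of regions.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, n_players):
    """Exact Shapley values by enumerating every coalition (only feasible for small n)."""
    phi = [0.0] * n_players
    for i in range(n_players):
        others = [j for j in range(n_players) if j != i]
        for size in range(len(others) + 1):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S without player i.
            weight = factorial(size) * factorial(n_players - size - 1) / factorial(n_players)
            for subset in combinations(others, size):
                coalition = frozenset(subset)
                # Marginal contribution of player i when added to this coalition.
                phi[i] += weight * (value_fn(coalition | {i}) - value_fn(coalition))
    return phi


def toy_score(visible):
    """Hypothetical class score given the set of visible regions (the rest are masked).

    Region 3 is deliberately ignored by this scorer.
    """
    score = 0.1                    # baseline score with everything masked
    score += 0.4 * (0 in visible)  # region 0, e.g. the animal's head: supports the class
    score += 0.2 * (1 in visible)  # region 1, e.g. the ears: supports the class
    score -= 0.3 * (2 in visible)  # region 2, e.g. snowy background: suppresses it
    if {0, 1} <= visible:          # head and ears together add extra evidence
        score += 0.2
    return score


phi = shapley_values(toy_score, 4)
print([round(v, 3) for v in phi])  # [0.5, 0.3, -0.3, 0.0]
```

The interaction bonus is split evenly between regions 0 and 1, region 2 receives a negative attribution, and the attributions sum to the difference between the fully visible and fully masked scores (0.6 - 0.1 = 0.5). That signed, additive output is a different kind of object from a nonnegative Grad-CAM heatmap, which is one reason the two are hard to compare directly.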

Figure 6: Three different XAI methods (Grad-CAM, LIME, and SHAP) for four different images. Notice how the structure (including border definition), choice of colors (and their meaning), ranges of values and, consequently, meaning of highlighted areas vary significantly between XAI methods. [Source: https://arxiv.org/abs/2006.11371]
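One partial, presentation-level mitigation, which we offer as an assumption rather than a fix for the underlying differences, is to rescale each method’s output to a common numeric range before showing results side by side: unsigned heatmaps such as Grad-CAM or occlusion sensitivity to [0, 1], and signed attributions such as SHAP values to [-1, 1] with zero kept at zero. The NumPy sketch below shows both rescalings on made-up arrays; the function names are hypothetical.

```python
import numpy as np


def to_unit_range(saliency: np.ndarray) -> np.ndarray:
    """Min-max scale an unsigned heatmap (e.g. Grad-CAM, occlusion) to [0, 1]."""
    lo, hi = float(saliency.min()), float(saliency.max())
    if hi == lo:
        return np.zeros(saliency.shape)  # flat map: no region stands out
    return (saliency - lo) / (hi - lo)


def to_signed_unit_range(attributions: np.ndarray) -> np.ndarray:
    """Scale signed attributions (e.g. SHAP values) to [-1, 1], keeping 0 at 0."""
    peak = float(np.abs(attributions).max())
    if peak == 0:
        return np.zeros(attributions.shape)
    return attributions / peak


# Made-up outputs from two methods for the same 2x2 image.
heatmap = np.array([[2.0, 4.0], [6.0, 10.0]])       # unsigned, arbitrary units
shap_like = np.array([[0.02, -0.05], [0.0, 0.01]])  # signed attributions

unit = to_unit_range(heatmap)
signed = to_signed_unit_range(shap_like)
print(unit)
print(signed)
```

Rescaling makes the color scales comparable, but it does not make the maps mean the same thing: a value of 1 in a Grad-CAM map and a value of 1 in a rescaled SHAP map still answer different questions. Pairing unsigned maps with a sequential colormap and signed maps with a diverging colormap centered at zero can at least keep the sign visible.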

Takeaway

In this blog post, we offered words of caution and discussed some limitations of existing XAI methods. Despite the downsides, there are still many reasons to be optimistic about the potential of XAI, as we will share in the next (and final) post in this series.
