How and When to Use Explainable AI Techniques

This post is from Oge Marques, PhD and Professor of Engineering and Computer Science at FAU.

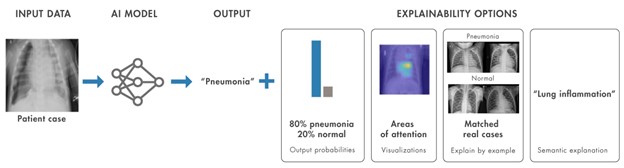

Despite impressive achievements, deep learning (DL) models are inherently opaque and lack the ability to explain their predictions, decisions, and actions. To circumvent such limitations, often referred to as "the black-box" problem, a new field within artificial intelligence (AI) has emerged: XAI (eXplainable Artificial Intelligence). This is a vast field that includes a wide range of tools and techniques.

In this blog post, we focus on selected XAI techniques for computer vision tasks and demonstrate how they can be successfully used to enhance your work. Along the way, we provide practical advice on how and when you can apply these techniques judiciously.

First things first

XAI is a vast field of research, whose boundaries are being redrawn as the field advances and there is greater clarity on what XAI can (and cannot) do. At this point, even basic aspects of this emerging area, such as terminology (e.g., explainability vs. interpretability), scope, philosophy, and usefulness, are being actively discussed.

Essentially, XAI aims at providing contemporary AI models the ability to explain their predictions, decisions, and actions. This can be achieved primarily in two different ways:

- By designing models that are inherently interpretable, i.e., whose architecture allows the extraction of key insights into how decisions were made and values were computed. One of the most popular examples in this category is the decision tree for classification tasks. By its very nature, a decision tree exposes the features it selected as most important, the associated thresholds, and the path taken to arrive at a prediction (Fig. 1).

- By producing explanations "after the fact" (hence the Latin expression post-hoc), which has become quite popular in computer vision and image analysis tasks, as we will see next.
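To make the idea of an inherently interpretable model concrete, here is a minimal Python sketch of a hand-built decision tree that returns both a prediction and the sequence of tests that led to it. The feature names, thresholds, and labels are hypothetical and chosen only for illustration; nothing here is learned from data or taken from the toolbox.

```python
# Toy, hand-built decision tree. Features and thresholds are hypothetical,
# chosen only to illustrate how a tree exposes its own reasoning.
TREE = {
    "feature": "ear_length_cm", "threshold": 6.0,
    "left": {"label": "cat"},            # taken when ear_length_cm <= 6.0
    "right": {                           # taken when ear_length_cm > 6.0
        "feature": "weight_kg", "threshold": 4.0,
        "left": {"label": "cat"},
        "right": {"label": "dog"},
    },
}


def predict_with_path(tree, sample):
    """Return (label, path); path lists every test applied on the way down."""
    path = []
    node = tree
    while "label" not in node:
        name, thr = node["feature"], node["threshold"]
        value = sample[name]
        if value <= thr:
            path.append(f"{name} = {value} <= {thr}")
            node = node["left"]
        else:
            path.append(f"{name} = {value} > {thr}")
            node = node["right"]
    return node["label"], path


label, path = predict_with_path(TREE, {"ear_length_cm": 9.5, "weight_kg": 12.0})
print(label)
for step in path:
    print("  because", step)
```

The explanation is simply the path itself: no extra method is needed, which is what distinguishes this family from the post-hoc techniques discussed in the rest of this post.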

Use your judgment

Before taking the time needed to apply XAI methods to your computer vision solution, we advise you to answer four fundamental questions:

- What are your needs and motivation? Your reasons for using XAI can vary widely. Typical uses of XAI include: verification and validation of the code used to build your model; comparison of results among competing models for the same task; compliance with regulatory requirements; and sheer curiosity, just to mention a few.

- What are the characteristics of the task at hand? Different tasks might benefit more than others from the additional insights provided by XAI, as we'll see in the examples below.

- Which datasets are being used to train, validate, and test the model? The size, nature, and statistical properties of your dataset (for example, how imbalanced it is) will determine to a great extent whether XAI will have an impact on your work.

- Which tools are available to support your work? XAI tools typically consist of a (complex) algorithm that computes the parameters (such as weights and gradients in deep neural networks) used to infer a model's decisions, and a user interface (UI) to communicate these decisions to users. Both are essential, but in practice the UI is the weakest link since its job is to ensure a satisfactory user experience by conveying the XAI method's findings in a way that is clear to the user while hiding the complexity of the underlying calculations.

Examples

We have selected three examples that showcase different ways by which you can use XAI techniques to help you enhance your work. As we explore these examples together you should get a better sense of how and when to use XAI in the following computer vision tasks, selected for their usefulness and popularity:

(1) Image classification

(2) Semantic segmentation

(3) Anomaly detection in visual inspection.

For each example/task, we advise you to consider a checklist:

1. Did the use of post-hoc XAI add value in this case? Why (not)?

2. Are the results meaningful?

3. Are the results (un)expected?

4. What could I do differently?

The answers to these questions can determine how much value the XAI heatmap actually adds to your solution (besides satisfying your curiosity).

Example 1: Image classification

Image classification using pretrained convolutional neural networks (CNNs) has become a straightforward task that can be accomplished with fewer than 10 lines of code. Essentially, an image classification model predicts the label (name or category) that best describes the contents of a given image.

Given a test image and a predicted label, post-hoc XAI methods can be used to answer the question:

Which parts of the image were deemed most important by the model?

We can use different XAI methods (such as gradCAM, occlusionSensitivity, and imageLIME, all of which are available as part of Deep Learning Toolbox) to produce results as colormaps overlaid on the actual images (see example here). This is fine and might help quench our curiosity. We can claim that post-hoc XAI techniques can help "explain" or enhance the image classification results, despite the differences in results and visualization methods.
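To give a feel for what one of these methods does under the hood, the following Python sketch illustrates the general idea behind occlusion sensitivity: mask one patch of the image at a time and record how much the class score drops. It is a simplified illustration, not the toolbox implementation; `toy_score` is a hypothetical stand-in for a real classifier, and the patch size and fill value are arbitrary assumptions.

```python
def toy_score(image):
    """Hypothetical stand-in for a classifier's score for one class:
    the mean brightness of the central 2x2 region of a 4x4 image."""
    return (image[1][1] + image[1][2] + image[2][1] + image[2][2]) / 4.0


def occlusion_map(image, score_fn, patch=2, fill=0.0):
    """Slide a patch over the image, mask it, and record the score drop."""
    h, w = len(image), len(image[0])
    base = score_fn(image)
    heat = [[0.0] * w for _ in range(h)]
    counts = [[0] * w for _ in range(h)]
    for top in range(h - patch + 1):
        for left in range(w - patch + 1):
            masked = [row[:] for row in image]  # copy, then occlude one patch
            for r in range(top, top + patch):
                for c in range(left, left + patch):
                    masked[r][c] = fill
            drop = base - score_fn(masked)
            for r in range(top, top + patch):
                for c in range(left, left + patch):
                    heat[r][c] += drop
                    counts[r][c] += 1
    # Average the drops over all patches that covered each pixel.
    return [[heat[r][c] / counts[r][c] for c in range(w)] for r in range(h)]


image = [[1.0] * 4 for _ in range(4)]
heat = occlusion_map(image, toy_score)
for row in heat:
    print([round(v, 3) for v in row])
```

In this toy setup the central pixels receive the highest values because masking them changes the score the most, which is the behavior a heatmap overlay is meant to reveal.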

Figure 2 shows representative results using gradCAM for two different tasks (with associated datasets) and test images: dogs vs. cats classifier and dog breed classifier. In both cases, we use a transfer learning approach starting from a pretrained GoogLeNet.

Let's analyze the results, one row at a time.

- The first two rows refer to the "dogs vs. cats" task. Both results are correct and the Grad-CAM heatmaps provide some reassurance that the classifier was "looking at the right portion of the image" in both cases. Great!

Things get significantly more interesting in the second task.

- The first case shows a beagle being correctly classified and a convincing Grad-CAM heatmap. So far, so good.

- The second case, however, brings a surprising aspect: even though the dog was correctly identified as a golden retriever, the associated Grad-CAM heatmap is significantly different from the one we saw earlier (in the "dogs vs. cats" case). This would be fine, except that in the breed classifier case, the heatmap suggests that the network gave very little importance to the eye region, which is somewhat unexpected.

- The third case illustrates one of the reasons why post-hoc XAI methods are often criticized: they provide similar "explanations" (in this case, focus on the head area of the dog) even when the model's prediction is incorrect (in this case, a Labrador retriever was mistakenly identified as a beagle).

Using our checklist, we can possibly agree that the XAI results are helpful in highlighting which regions of the test image were considered most important by the underlying image classifier. We have already seen, however, that post-hoc XAI is not a panacea, as illustrated by the last two cases in Figure 2.

One thing we could do differently, of course, would be to use other post-hoc XAI techniques, which would essentially consist of modifying one line of code. You can use this example code (link) as a starting point for your own experiments.

Example 2: Semantic segmentation

Semantic segmentation is the process through which a neural network classifies every pixel in an image as belonging to one of the semantic classes of objects present in a scene. The results can be visualized by pseudo-coloring each semantic class with a different color, which provides clear and precise (pixel-level) feedback about the quality of the segmentation results to the user (Fig. 3).

Figure 3 – Example of semantic segmentation results using an arbitrary convention for pseudo-coloring pixels belonging to each semantic region.
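The pseudo-coloring step can be sketched in a few lines of Python: pick the highest-scoring class for every pixel, then look up that class in a color table. The class names, colors, and score values below are hypothetical; any consistent convention works equally well.

```python
# Hypothetical class names and RGB colors; the convention is arbitrary.
CLASS_NAMES = ["sky", "road", "car"]
PALETTE = {"sky": (70, 130, 180), "road": (128, 64, 128), "car": (0, 0, 142)}


def label_map(scores):
    """scores[r][c] holds per-class scores; return the top class index per pixel."""
    return [[max(range(len(px)), key=px.__getitem__) for px in row]
            for row in scores]


def colorize(labels):
    """Map each class index to its RGB color."""
    return [[PALETTE[CLASS_NAMES[k]] for k in row] for row in labels]


# A made-up 2x2 "image" with three class scores per pixel.
scores = [
    [[0.8, 0.1, 0.1], [0.7, 0.2, 0.1]],
    [[0.1, 0.6, 0.3], [0.1, 0.2, 0.7]],
]
labels = label_map(scores)
colors = colorize(labels)
print(labels)
print(colors)
```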

Just as we did for the image classification task, we can use Grad-CAM to see which regions of the image are important for the pixel classification decisions for each semantic category (Fig. 4).

Figure 4 – Example of results using Grad-CAM for semantic image segmentation.
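For readers curious about what a Grad-CAM map actually computes, here is a minimal Python sketch of its core combination step: each feature-map channel is weighted by the spatial average of its gradient, the weighted channels are summed, and negative values are clipped. The activations and gradients are made-up numbers; in a real network they would come from a chosen convolutional layer and from differentiating a scalar score (for segmentation, that score is commonly the class score summed over pixels, which is an assumption here rather than a description of the toolbox function).

```python
def grad_cam(activations, gradients):
    """activations, gradients: lists of K feature maps, each H x W (nested lists)."""
    k = len(activations)
    h, w = len(activations[0]), len(activations[0][0])
    # One weight per channel: the spatial average of that channel's gradient.
    weights = [sum(sum(row) for row in gradients[i]) / (h * w) for i in range(k)]
    cam = [[0.0] * w for _ in range(h)]
    for i in range(k):
        for r in range(h):
            for c in range(w):
                cam[r][c] += weights[i] * activations[i][r][c]
    # ReLU: keep only regions that push the score up.
    return [[max(0.0, v) for v in row] for row in cam]


# Made-up 2-channel, 2x2 example.
activations = [
    [[1.0, 0.0], [0.0, 0.0]],      # channel 0 fires at the top-left
    [[0.0, 0.0], [0.0, 1.0]],      # channel 1 fires at the bottom-right
]
gradients = [
    [[0.5, 0.5], [0.5, 0.5]],      # channel 0 raises the score
    [[-0.5, -0.5], [-0.5, -0.5]],  # channel 1 lowers the score
]
cam = grad_cam(activations, gradients)
print(cam)
```

Only the top-left location survives, because it is the only place where a channel that increases the score is active. The resulting low-resolution map is what gets upsampled and overlaid on the image as a heatmap.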

Looking at our checklist, we could argue that, contrary to the image classification scenario and despite its ease of use, the additional information provided by the Grad-CAM heatmap did not significantly increase our understanding of the solution nor did it add value to our overall solution. It did, however, help us confirm that some aspects of the underlying network (e.g., feature extraction in the early layers) worked as expected, therefore confirming the hypothesis that this network architecture is indeed suitable for the task.

Example 3: Anomaly detection in visual inspection

In this final example, we show deep learning techniques that can perform anomaly detection in visual inspection tasks and produce visualization results to explain their decisions.

The resulting heatmaps can draw attention to the anomaly and provide instantaneous verification that the model worked for this case. It is worth mentioning that each anomalous instance is potentially different from any other and that these anomalies are often hard for a human quality assurance inspector to detect.
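One simple way to turn such a heatmap into a decision plus a pointer to where to look is to threshold it, as in the Python sketch below. The heatmap values and the threshold are hypothetical; in practice the threshold would be calibrated on held-out data, and the anomaly detection models used in the examples that follow may derive their scores differently.

```python
def summarize_heatmap(heatmap, threshold):
    """Return (is_anomalous, hot_pixels) for a 2-D anomaly heatmap."""
    hot = [(r, c)
           for r, row in enumerate(heatmap)
           for c, v in enumerate(row)
           if v > threshold]
    return bool(hot), hot


THRESHOLD = 0.5  # hypothetical value, not taken from any real model

# Made-up 3x3 heatmaps for an image with a defect and one without.
defective = [[0.1, 0.2, 0.1], [0.1, 0.9, 0.8], [0.0, 0.1, 0.7]]
normal = [[0.1, 0.2, 0.1], [0.0, 0.1, 0.2], [0.1, 0.0, 0.1]]

print(summarize_heatmap(defective, THRESHOLD))
print(summarize_heatmap(normal, THRESHOLD))
```

The list of "hot" pixel coordinates is what allows an inspector to check quickly whether the model flagged the right region, rather than only receiving a yes/no answer.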

There are numerous variations of the problem in different settings and industries (manufacturing, automotive, medical imaging, to name a few). Here are two examples:

1. Detecting undesirable cracks in concrete.

This example uses the Concrete Crack Images for Classification dataset, which contains 20,000 images divided into two classes: Negative images, i.e., without noticeable cracks in the road, and Positive images, i.e., with the specific anomaly (in this case, cracks). Fig. 5 shows results for images without and with cracks.

Looking at the results from the perspective of our checklist, there should be no discussion about how much value is added by the additional layer of explanation/visualization. The areas highlighted as "hot" in the true positive result are meaningful and help drive attention to the portion of the image that contains the cracks, whereas the lack of "hot" areas in the true negative result gives us the peace of mind to know that there is nothing to worry about (i.e., no undesirable crack) in that image.

Figure 5 - Example of results: anomaly detection in visual inspection task (concrete cracks).

2. Detecting defective pills.

This example uses the pillQC dataset, which contains images from three classes: normal images without defects, images with chip defects in the pills, and images with dirt contamination. Fig. 6 shows heatmap results for normal and defective images. Once again, evaluating the results against our checklist, we should agree that there is compelling evidence of the usefulness of XAI and visualization techniques in this context.

[Figure panels: anomaly heatmap for defective pill image; anomaly heatmap for normal image]

Figure 6 - Example of results: anomaly detection in visual inspection task (defective pills).

Key takeaways

In this blog post we have shown how post-hoc XAI techniques can be used to visualize which parts of an image were deemed most important for three different classes of computer vision applications.

These techniques might be useful beyond the explanation of correct decisions, since they also help us identify blunders, i.e., cases where the model learned the wrong aspects of the images. We have seen that the usefulness and added value of these XAI methods can vary between one case and the next, depending on the nature of the task and associated dataset and the need for explanations, among many other aspects.

Going back to the title of this blog post, we have shown that it is much easier to answer the "how" question (thanks to several post-hoc XAI visualization techniques in MATLAB) than the "when" question (for which we hope to have provided additional insights and a helpful checklist).

Please keep in mind that XAI is much more than colormaps produced by post-hoc techniques such as those illustrated in this blog post, and that these techniques and their associated colormaps can be problematic, something we will discuss in the next post in this series.

In the meantime, you might want to check out this blog post (and companion code) on "Explainable AI for Medical Images" and try out the UI-based UNPIC (understanding network predictions for image classification) MATLAB app.
