Large Language Models with MATLAB

How to connect MATLAB to the OpenAI™ API to boost your NLP tasks.

Have you heard of ChatGPT™, generative AI, and large language models (LLMs)? This is a rhetorical question at this point. But did you know you can combine these transformative technologies with MATLAB? In addition to the MATLAB AI Chat Playground (learn more by reading this blog post), you can now connect MATLAB to the OpenAI™ Chat Completions API (which powers ChatGPT). In this blog post, we discuss the technology behind LLMs and how to connect MATLAB to the OpenAI API. We also show you how to perform natural language processing (NLP) tasks, such as sentiment analysis and building a chatbot, by taking advantage of LLMs and tools from Text Analytics Toolbox.

What are LLMs?

Large language models (LLMs) are based on transformer models (a special case of deep learning models). Transformers are designed to track relationships in sequential data. They rely on a self-attention mechanism to capture global dependencies between input and output. LLMs have revolutionized NLP because they can capture complex relationships between words and nuances present in human language. Well-known transformer models include BERT and GPT models, both of which you can use with MATLAB. If you want to use a pretrained BERT model included with MATLAB, you can use the bert function. In this blog post, we focus on GPT models.

LLMs Repository

The code you need to access and interact with LLMs using MATLAB is in the LLMs repository. By using the code in the repository, you can interface with the ChatGPT API from your MATLAB environment. Supported models include gpt-3.5-turbo and gpt-4.

Set Up

To interface with the ChatGPT API, you must obtain an OpenAI API key. To learn more about how to obtain the API key and the charges for using the OpenAI API, see OpenAI API. It’s good practice to save the API key in a file in your current folder, so that you have it handy.

Animated Figure: Save the OpenAI API key in your current folder.
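
One possible way to keep the key in a file is a `.env` file that you load at the start of a session. The sketch below is only an illustration under a few assumptions: it needs MATLAB R2023a or later (for `loadenv`), and it writes a throwaway file with a made-up key under a hypothetical variable name (`OPENAI_API_KEY_DEMO`) so that it runs without touching a real key.

```matlab
% Create a throwaway .env-style file for demonstration only.
% The variable name and the key value are hypothetical placeholders.
envFile = fullfile(tempdir, "demo.env");
writelines("OPENAI_API_KEY_DEMO=sk-demo-not-a-real-key", envFile);

% Load every KEY=VALUE pair from the file into the process environment.
loadenv(envFile);

% Read the value back; this is what you would later pass as ApiKey=my_key.
my_key = getenv("OPENAI_API_KEY_DEMO");

% Remove the demonstration file again.
delete(envFile);
```

In practice, you would skip the `writelines` and `delete` calls, keep a `.env` file containing your own key in your current folder, and point `loadenv` at that file instead.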

Getting Started

To initialize the OpenAI Chat object and get started with using LLMs with MATLAB, type just one line of code.

    chat = openAIChat(systemPrompt,ApiKey=my_key);

In the following sections of this blog post, I will show you how to specify the system prompt for different use cases and how to enhance the functionality of the OpenAI Chat object with optional name-value arguments.

Getting Started in MATLAB Online

You might want to work with LLMs in MATLAB Online. GitHub repositories with MATLAB code have an “Open in MATLAB Online” button. By clicking on the button, the repository opens directly in MATLAB Online. Watch the following video to see how to open and get started with the LLMs repository in MATLAB Online in less than 30 seconds.

Use Cases

In this section, I present use cases for LLMs with MATLAB and link to relevant examples. The use cases include sentiment analysis, building a chatbot, and retrieval-augmented generation. You can use tools from Text Analytics Toolbox to preprocess, analyze, and ‘meaningfully’ display text. We mention a few of these functions here, but check the linked examples to learn more.

Sentiment Analysis

Let’s start with a simple example of how to perform sentiment analysis. Sentiment analysis deals with the classification of opinions or emotions in text. The emotional tone of the text can be classified as positive, negative, or neutral.

Figure: Creating a sentiment analysis classifier.

Specify the system prompt. The system prompt tells the assistant how to behave, in this case, as a sentiment analyzer. It also provides the system with simple examples of how to perform sentiment analysis.

    systemPrompt = "You are a sentiment analyser. You will look at a sentence and output"+...
        " a single word that classifies that sentence as either 'positive' or 'negative'."+...
        "Examples: \n"+...
        "The project was a complete failure. \n"+...
        "negative \n\n"+...
        "The team successfully completed the project ahead of schedule. \n"+...
        "positive \n\n"+...
        "His attitude was terribly discouraging to the team. \n"+...
        "negative \n\n";

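A side note on the prompt above: MATLAB does not interpret escape sequences inside string literals, so each `\n` reaches the model as a literal backslash followed by the letter n rather than as a line break. The model usually copes with that, but if you prefer real line breaks, one option is to assemble the prompt with `newline`. The following is a minimal sketch of that idea, not the code used in the repository example; the variable names (`examples`, `shots`, `systemPromptNL`) are my own.

```matlab
% Few-shot examples as sentence/label pairs (same sentences as in the prompt above).
examples = [ ...
    "The project was a complete failure.", "negative"; ...
    "The team successfully completed the project ahead of schedule.", "positive"; ...
    "His attitude was terribly discouraging to the team.", "negative"];

instructions = "You are a sentiment analyser. You will look at a sentence and output" + ...
    " a single word that classifies that sentence as either 'positive' or 'negative'.";

% Put each sentence and its label on separate lines.
shots = examples(:,1) + newline + examples(:,2);

% Separate the examples with a blank line. Note the [newline newline]:
% adding two char values with + would do numeric addition instead.
systemPromptNL = instructions + newline + "Examples:" + newline + ...
    join(shots, [newline newline]);

disp(systemPromptNL)
```

You could pass `systemPromptNL` to `openAIChat` in place of `systemPrompt`. Whether the classification results differ between the two versions is something you would need to check for your own data.
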
Initialize the OpenAI Chat object by passing a system prompt.

    chat = openAIChat(systemPrompt,ApiKey=my_key);

Generate a response by passing a new sentence for classification.

    text = generate(chat,"The team is feeling very motivated.")

    text = "positive"

The text is correctly classified as having a positive sentiment.

Build Chatbot

A chatbot is software that simulates human conversation. In simple words, the user types a query and the chatbot generates a response in natural human language.

Figure: Building a chatbot.

Chatbots started as template-based. Have you tried querying a template-based chatbot? Well, I have, and almost every chat ended with me frantically typing “talk to human”. By following the Example: Build ChatBot in the LLMs repository, I was able to build a helpful chatbot in minutes.

The first two steps in building a chatbot are to (1) create an instance of openAIChat to perform the chat and (2) use the openAIMessages function to store the conversation history.

    chat = openAIChat("You are a helpful assistant. You reply in a very concise way, keeping answers "+...
        "limited to short sentences.",ModelName=modelName,ApiKey=my_key);
    messages = openAIMessages;
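
To give an idea of what the remaining lines do, here is a rough sketch of a single conversation turn. It assumes that the LLMs repository is on the MATLAB path, that `chat` and `messages` were created as shown above, and that the version of the repository you are using provides `addUserMessage` and `addResponseMessage` for the message history (names may differ in other releases, so check the repository documentation). The query text is a made-up example.

```matlab
% Hypothetical user query for illustration.
query = "What are the best places to visit in the Yucatan Peninsula?";

% 1. Append the user's query to the conversation history.
messages = addUserMessage(messages, query);

% 2. Send the whole history, not only the latest query, to the model.
[text, response] = generate(chat, messages);
disp(text)

% 3. Append the model's reply so that the next turn can refer back to it.
messages = addResponseMessage(messages, response);
```

Because every call to `generate` receives the accumulated history, follow-up questions can rely on earlier context. Repeating these three steps in a loop gives you the core of a chatbot.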

After a few more lines of code, I built a chatbot that helped me plan my Mexico vacation. In addition to the example code, I used other MATLAB functions (e.g., extractBetween) to format the chatbot responses. The following figure shows my brief (but helpful) chat with the chatbot. Notice that the chatbot retains information from previous queries. I don’t have to repeat “Yucatan Peninsula” in my questions.

Figure: User queries and chatbot responses for planning a Mexico vacation.

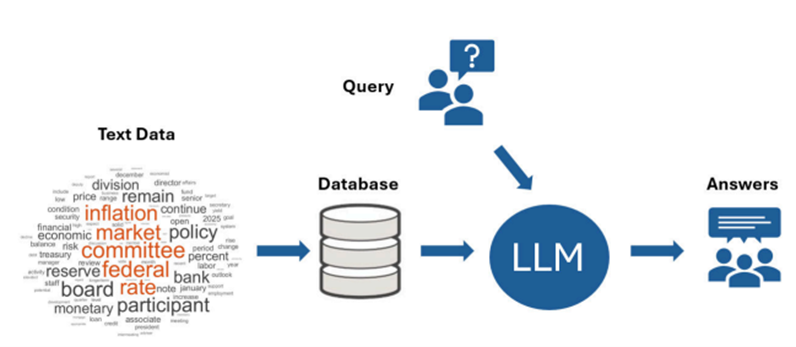

Retrieval Augmented Generation

Retrieval-augmented generation (RAG) is a technique for enhancing the results achieved by an LLM. Both accuracy and reliability can be improved by retrieving information from external sources. For example, the prompt fed to the LLM can be enhanced with more up-to-date or technical information.

Figure: Workflow for retrieval-augmented generation (RAG).

The Example: Retrieval-Augmented Generation shows how to retrieve information from technical reports on power systems to enhance ChatGPT for technical queries. I am not going to show all the example details here, but I will highlight key steps.

- Use MATLAB tools (e.g., websave and fileDatastore) for retrieving and managing online documents.

- Use Text Analytics Toolbox functions (e.g., splitParagraphs, tokenizedDocument, and bm25Similarity) for preparing the text from the retrieved documents.

- When the retrieved text is ready for the task, initialize the chatbot with the specified context and API key.

    chat = openAIChat("You are a helpful assistant. You will get a " + ...
        "context for each question, but only use the information " + ...
        "in the context if that makes sense to answer the question. " + ...
        "Let's think step-by-step, explaining how you reached the answer.",ApiKey=my_key);

- Define the query. Then, retrieve and filter the relevant documents based on the query.

    query = "What technical criteria can be used to streamline new approvals for grid-friendly DPV?";
    selectedDocs = retrieveAndFilterRelevantDocs(allDocs,query);

- Define the prompt for the chatbot and generate a response.

    prompt = "Context:" ...
        + join(selectedDocs, " ") + newline +"Answer the following question: "+ query;
    response = generate(chat,prompt);

Wrap the text for easier visualization.

    wrapText(response)

ans =

"The technical criteria that can be used to streamline new approvals for grid-friendly DPV can include prudent screening criteria for systems that meet certain specifications. These criteria can be based on factors such as DPV capacity penetration relative to minimum feeder daytime load. Additionally, hosting capacity calculations can be used to estimate the point where DPV would induce technical impacts on system operations. These screening criteria are commonly used in countries like India and the United States."

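The retrieveAndFilterRelevantDocs helper used in the steps above is defined in the example and is not reproduced in this post. To give a feel for the retrieval step, here is a minimal sketch of ranking paragraphs against a query with bm25Similarity from Text Analytics Toolbox. This is my own simplified illustration, not the example's implementation: the three paragraphs are invented stand-ins for text extracted from the reports, and `topK` is an arbitrary choice.

```matlab
% Hypothetical mini-corpus standing in for paragraphs extracted from reports.
paragraphs = [
    "Hosting capacity calculations estimate when DPV induces technical impacts on a feeder."
    "Screening criteria can streamline approvals for grid-friendly DPV systems."
    "The report was published after a series of stakeholder workshops."];
query = "What technical criteria can be used to streamline new approvals for grid-friendly DPV?";

% Tokenize paragraphs and query the same way (lowercase, no punctuation).
docTokens   = tokenizedDocument(erasePunctuation(lower(paragraphs)));
queryTokens = tokenizedDocument(erasePunctuation(lower(query)));

% scores(i) is the BM25 similarity between paragraph i and the query.
scores = bm25Similarity(docTokens, queryTokens);

% Keep the topK highest-scoring paragraphs as context for the prompt.
topK = 2;
[~, order] = sort(scores, "descend");
selectedParagraphs = paragraphs(order(1:topK));

% Assemble the prompt in the same shape as in the steps above.
prompt = "Context:" + join(selectedParagraphs, " ") + newline + ...
    "Answer the following question: " + query;
```

A real pipeline would also need to limit the amount of selected text so that the prompt fits within the context window of the model.
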
Other Use Cases

The use cases presented above are just a sample of what you can achieve with LLMs. Other notable use cases (with examples in the LLMs repository) include text summarization and function calling. You can also use LLMs for many other NLP tasks like machine translation. What will you use the MATLAB LLMs repository for? Leave comments below and links to your GitHub repository.

Text summarization is automatically creating a short, accurate, and legible summary of a longer text document. In the Example: Text Summarization, you can see how to incrementally summarize a large text by breaking it into smaller chunks and summarizing each chunk step by step.

Function calling is a powerful tool that allows you to combine the NLP capabilities of LLMs with any functions that you define. But remember that ChatGPT can hallucinate function names, so avoid executing arbitrary generated functions and only allow the execution of functions that you have defined. For an example of how to use function calling for automatically analyzing scientific papers from the arXiv API, see Function Calling with LLMs.

Key Takeaways

- There is a new GitHub repository that enables you to use GPT models with MATLAB for natural language processing tasks. Find the repository here.

- The repository includes example code and use cases that achieve many tasks like sentiment analysis and building a chatbot.

- Take advantage of MATLAB tools, and more specifically Text Analytics Toolbox functions, to enhance the LLM functionality, such as retrieving, managing, and preparing text.
