Highlights from the Edge AI Foundation’s Austin 2025 Conference

The following blog post is from Jack Ferrari, Edge AI Product Manager, Joe Sanford, Senior Application Engineer, and Reed Axman, Strategic Partner Manager.

We recently attended the Edge AI Foundation’s conference in Austin, Texas, and are excited to share our highlights in this blog post. The event brought together a diverse group of academics, researchers, business leaders, and engineers, all focused on bringing AI models out of the lab and into the real world on edge devices. Over three engaging days, the MathWorks team connected with fellow attendees and gained valuable insights from a range of speakers.

As a proud Edge AI sponsor, MathWorks ran a demo booth featuring two live demonstrations and delivered a hands-on workshop showing how to develop AI models for motor control using MATLAB and Simulink. It was a fantastic chance to learn from, share with, and contribute to the growing edge AI community.

Figure 1: The MathWorks booth at Edge AI Foundation’s conference 2025

Demos at the MathWorks Booth

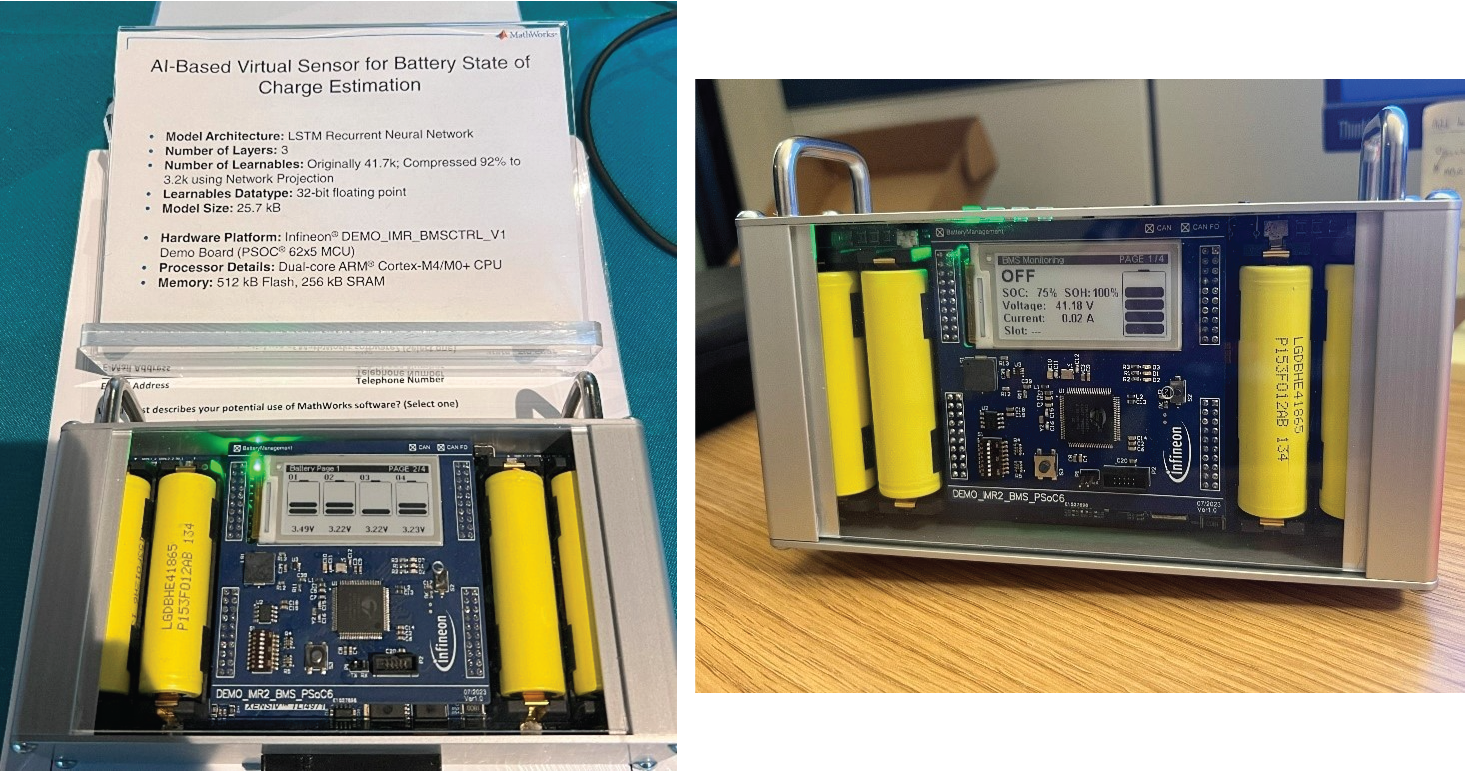

We presented two interactive demos showcasing how MATLAB and Simulink can be used to accelerate the process of developing and deploying AI models to edge devices.

Battery State of Charge Virtual Sensor

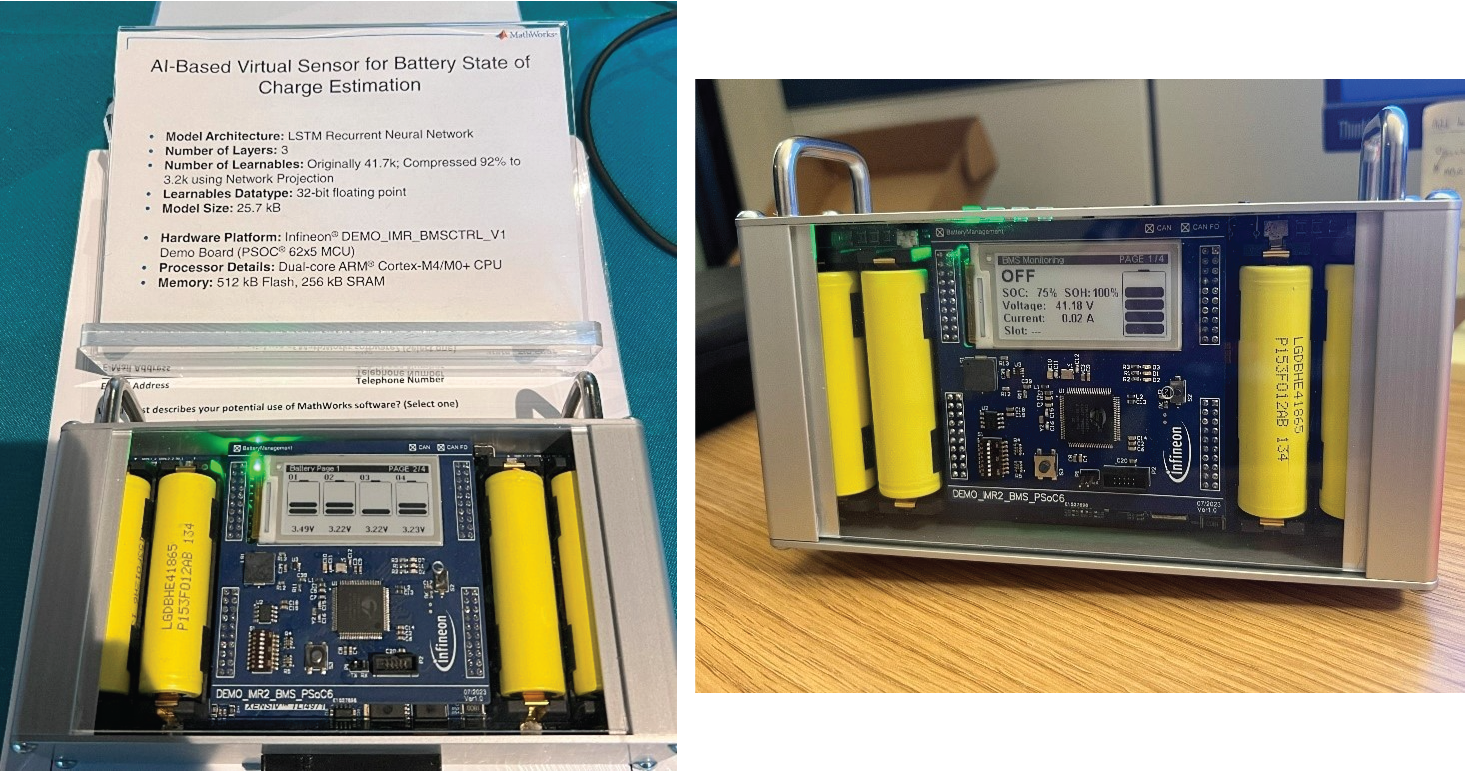

This demo showed an AI-based virtual sensor predicting, in real time, the state of charge (SoC) of a lithium-ion battery from voltage, current, and temperature measurements. Estimating a battery’s SoC is crucial for many battery-powered systems, yet SoC is often difficult or impossible to measure directly. Data-driven models such as neural networks can instead make accurate predictions from related measurements. In this demo, a simple LSTM model was trained on a real-world dataset using Deep Learning Toolbox and prepared for deployment to a resource-constrained embedded device using the neural network projection compression technique. With network projection, the number of learnable parameters in the LSTM model was reduced by 92%, from 41.7k to 3.2k, with no loss in accuracy on a test dataset. After compression, the model parameters occupied 25.7 kB. Finally, standalone C code was generated from the LSTM model using MATLAB Coder and integrated into the demo kit’s existing battery management system (BMS) firmware. The hardware platform used for the demo is based on the BMS controller used in the Infineon Mobile Robot.

Figure 2: Battery SoC virtual sensor demo
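For readers who want to sanity-check figures like these, the following Python sketch reproduces the arithmetic. The reported totals (41.7k, 3.2k, 25.7 kB) come from the demo description above; the layer sizes in `lstm_learnables` and `fully_connected_learnables` (three inputs, 100 hidden units, one output) are assumptions chosen for illustration, not the confirmed architecture of the demo model.

```python
def lstm_learnables(num_inputs, num_hidden):
    """Learnables in a standard LSTM layer: four gates, each with
    input weights, recurrent weights, and a bias vector."""
    return 4 * num_hidden * (num_inputs + num_hidden + 1)


def fully_connected_learnables(num_inputs, num_outputs):
    """Weights plus biases of a dense layer."""
    return num_outputs * (num_inputs + 1)


def relative_reduction(before, after):
    """Fraction of parameters removed by compression."""
    return 1.0 - after / before


# Figures reported in the post (rounded as published).
reported_before = 41_700
reported_after = 3_200
reported_bytes = 25_700

# Hypothetical architecture: voltage, current, temperature -> 100 hidden units -> SoC.
assumed_total = lstm_learnables(3, 100) + fully_connected_learnables(100, 1)

print(f"assumed uncompressed learnables: {assumed_total}")            # 41701
print(f"reduction: {relative_reduction(reported_before, reported_after):.1%}")  # 92.3%
print(f"bytes per parameter: {reported_bytes / reported_after:.1f}")  # 8.0
```

Under these assumptions the uncompressed count lands close to the reported 41.7k, and the compressed size works out to roughly 8 bytes per parameter, which would be consistent with double-precision storage. Both observations are inferences from the published numbers rather than stated details of the demo.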

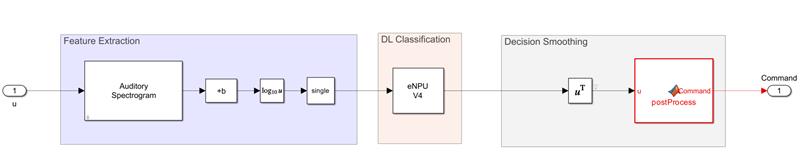

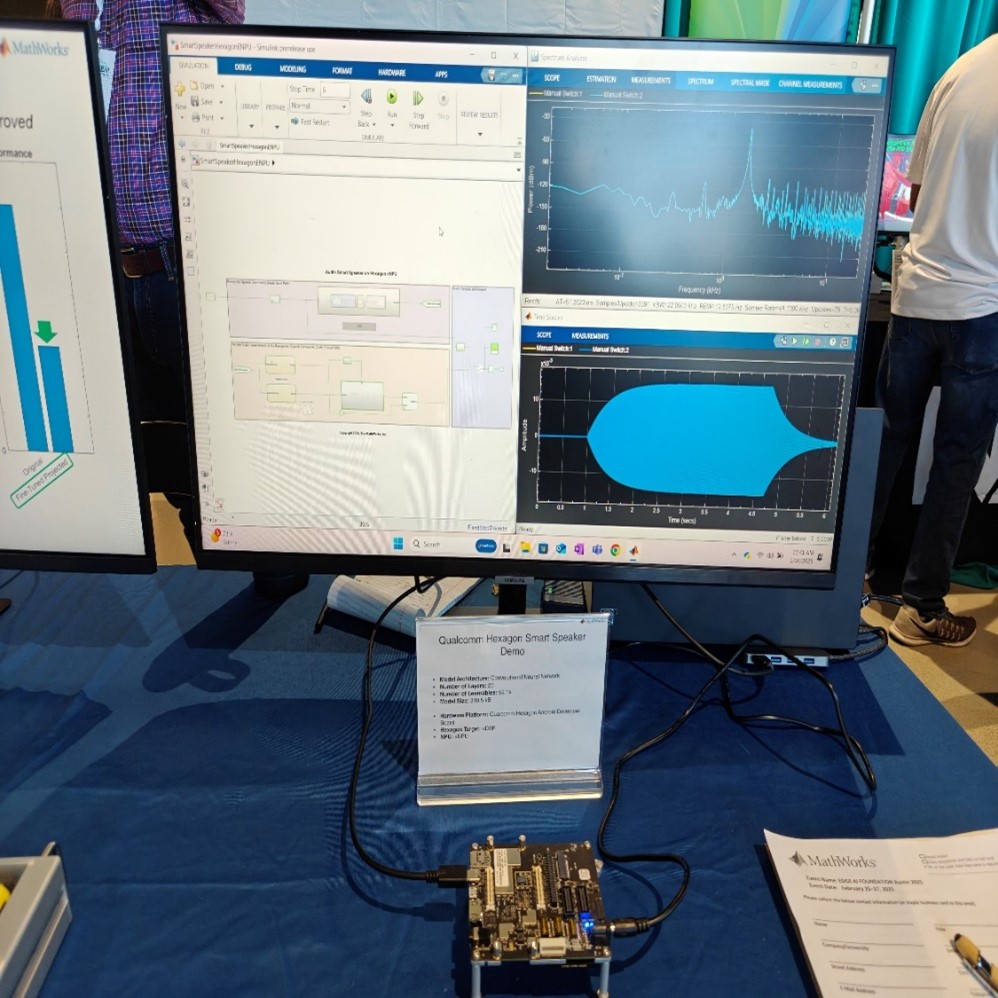

Wake Word Detection for Smart Speaker

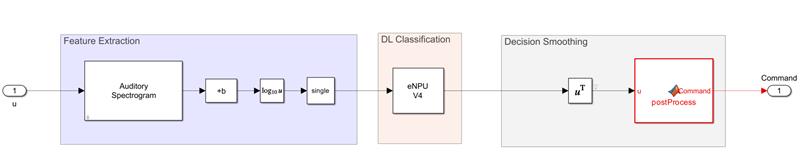

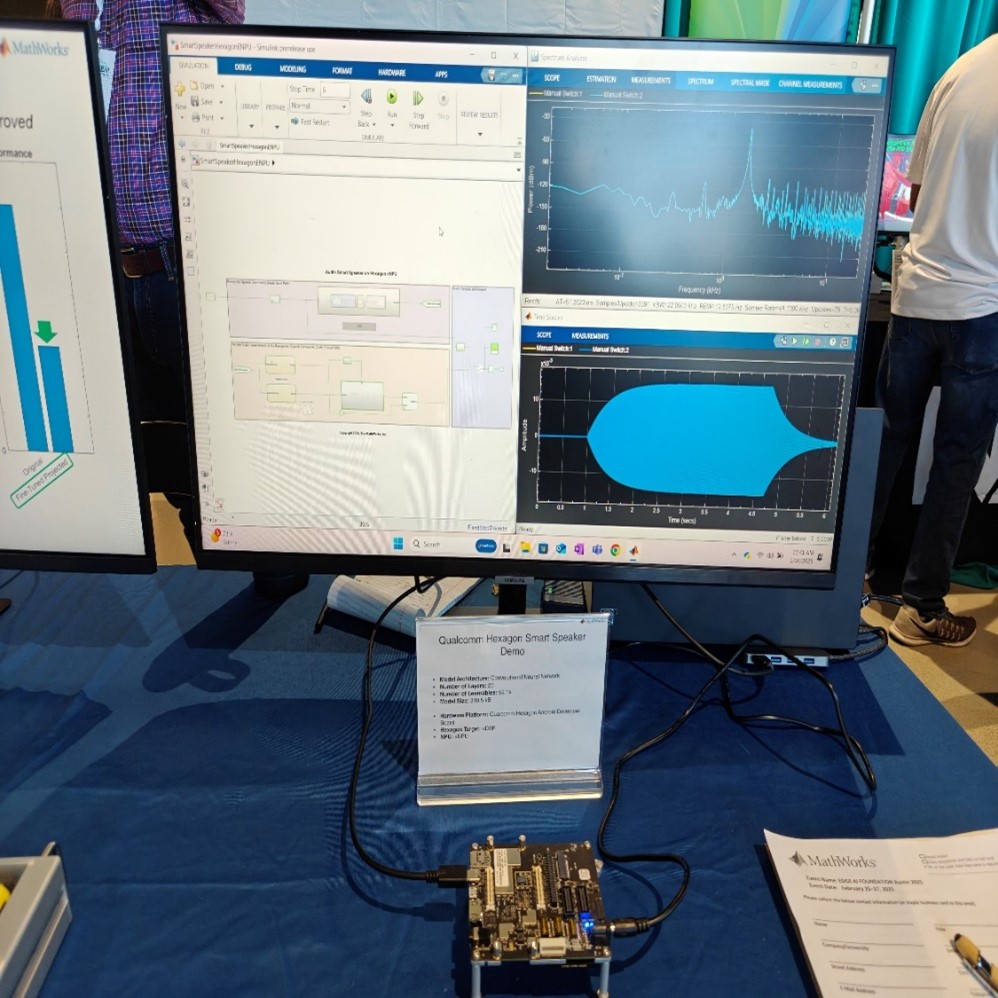

This demo showed the deployment of a convolutional neural network (CNN) for speech command recognition on a Qualcomm Hexagon processor using Simulink and the Embedded Coder Support Package for Qualcomm Hexagon Processors. The application specifically targeted wake word detection for smart speakers. The demo highlighted the advantages of optimized code generation for the Hexagon and underscored how this hardware support package simplifies the process of getting started with Qualcomm hardware. Attendees were excited by the opportunity to use Qualcomm’s hardware without needing expertise in its software tools, and to get started with deploying AI models to the eNPU in under 5 minutes.

Figure 3: Simulink model used in smart speaker deployment demo

Figure 4: Wake word detection demo deployed to Qualcomm Hexagon eNPU

In addition to these hands-on demos, we conducted impromptu demonstrations of tools like the Deep Network Designer app, the Analyze for Compression tool, Diagnostic Feature Designer, the Classification Learner app, Experiment Manager, and the Signal and Image Labeler apps.

Figure 5: Engagement at the MathWorks booth

Joint Workshop Delivered with STMicroelectronics

Our workshop, titled Rev it up: Deploy Tiny Neural Network to Boost Embedded Field-Oriented Controls of Electrical Drives, gave attendees the chance to design, optimize, and benchmark tiny neural networks to improve upon the classic Proportional-Integral (PI) controllers used in motor control applications. Participants generated synthetic training data from Simulink simulations, trained and compressed a range of deep learning models in Deep Learning Toolbox, and evaluated the runtime performance of the models on the remote board farm accessible from the ST Edge AI Developer Cloud. We’re very grateful to the Edge AI Foundation for the opportunity to host this workshop and to Dr. Danilo Pau from STMicroelectronics for presenting alongside us. It was great to hear both praise and suggestions for improvement from those who participated. Many were impressed that they could complete an end-to-end workflow, from generating training data all the way through to deployment and benchmarking on STM32 hardware, within the 90-minute event. To learn more about this workshop, see the Workshop Abstract and Workshop Repository.

Figure 6: Workshop on Rev it up: Deploy Tiny Neural Network to Boost Embedded Field-Oriented Controls of Electrical Drives
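For context on the baseline that the tiny neural networks aim to improve upon, here is a minimal Python sketch of a discrete-time PI controller driving a simple first-order plant. It is a generic textbook illustration, not the workshop’s Simulink model: the `PIController` class, the `simulate` helper, the gains, the voltage limits, and the resistance and inductance values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PIController:
    kp: float        # proportional gain (hypothetical value in the demo below)
    ki: float        # integral gain (hypothetical value in the demo below)
    dt: float        # sample time in seconds
    out_min: float   # lower actuator limit
    out_max: float   # upper actuator limit
    integral: float = 0.0

    def step(self, reference, measurement):
        error = reference - measurement
        candidate = self.integral + self.ki * error * self.dt
        unclamped = self.kp * error + candidate
        output = min(max(unclamped, self.out_min), self.out_max)
        # Simple anti-windup: only accumulate the integral while unsaturated.
        if output == unclamped:
            self.integral = candidate
        return output


def simulate(controller, reference, steps, resistance=1.0, inductance=0.01):
    """Forward-Euler simulation of an R-L current loop: L di/dt = v - R i."""
    current = 0.0
    for _ in range(steps):
        voltage = controller.step(reference, current)
        current += controller.dt * (voltage - resistance * current) / inductance
    return current


pi = PIController(kp=5.0, ki=500.0, dt=1e-4, out_min=-24.0, out_max=24.0)
final_current = simulate(pi, reference=2.0, steps=1000)
print(f"current after 0.1 s: {final_current:.3f} A")
```

With these illustrative values the simulated current settles close to the 2 A reference within 0.1 s. A learned controller of the kind explored in the workshop would replace or augment the `step` computation while keeping the same inputs and outputs.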

Key Takeaways and Industry Trends

The conference was rich in insights on the fast-moving world of edge AI. We’re particularly excited about the following trends that caught our attention during the three days in Austin:

- Growing Interest in Physical AI and Robotics: There's a noticeable surge in enthusiasm for integrating AI into robots and other autonomous, engineered systems that interact with physical environments. As these systems become more complex, ensuring their reliability and effectiveness through comprehensive design and testing processes becomes crucial. The ability to rapidly prototype and rigorously test these systems will be key to their successful deployment and safe operation.

- Expansion of Dedicated Neural Processing Units (NPUs): Once confined to PCs and smartphones, NPUs are continuing to make their way into more microcontrollers and IoT devices. This shift shows potential for enhanced power efficiency and performance compared to traditional CPUs, marking a significant evolution in data processing at the edge. If you're a MATLAB user interested in deploying AI models to NPUs, we'd love to hear from you! Your insights and experiences could provide valuable perspectives as we continue to explore support for additional NPU targets.

- Advancements in Neural Network Compression Algorithms: New compression techniques are significantly reducing the size and computational demands of AI models and making it feasible to deploy increasingly complex models to edge devices. At the conference, we were particularly impressed by the SplitQuant method presented by Nota AI. We’re also very partial to the neural network projection method in MATLAB!
