Large language models like ChatGPT or Claude can write MATLAB code, but they cannot sense your hardware, read sensor data, understand your hardware constraints, or validate solutions against real-world performance. You are stuck in an isolated conversation, full of brilliant suggestions but disconnected from reality.

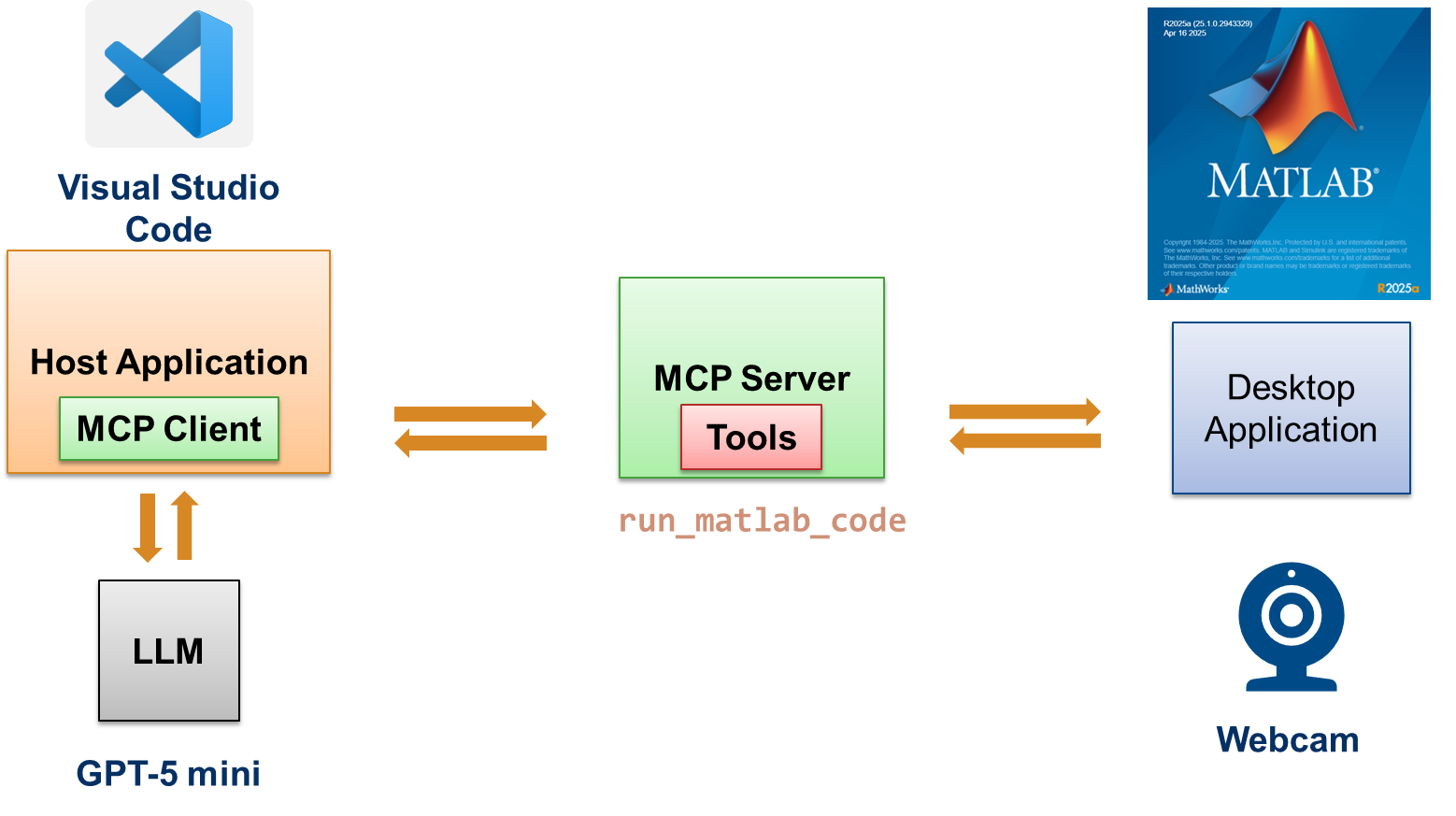

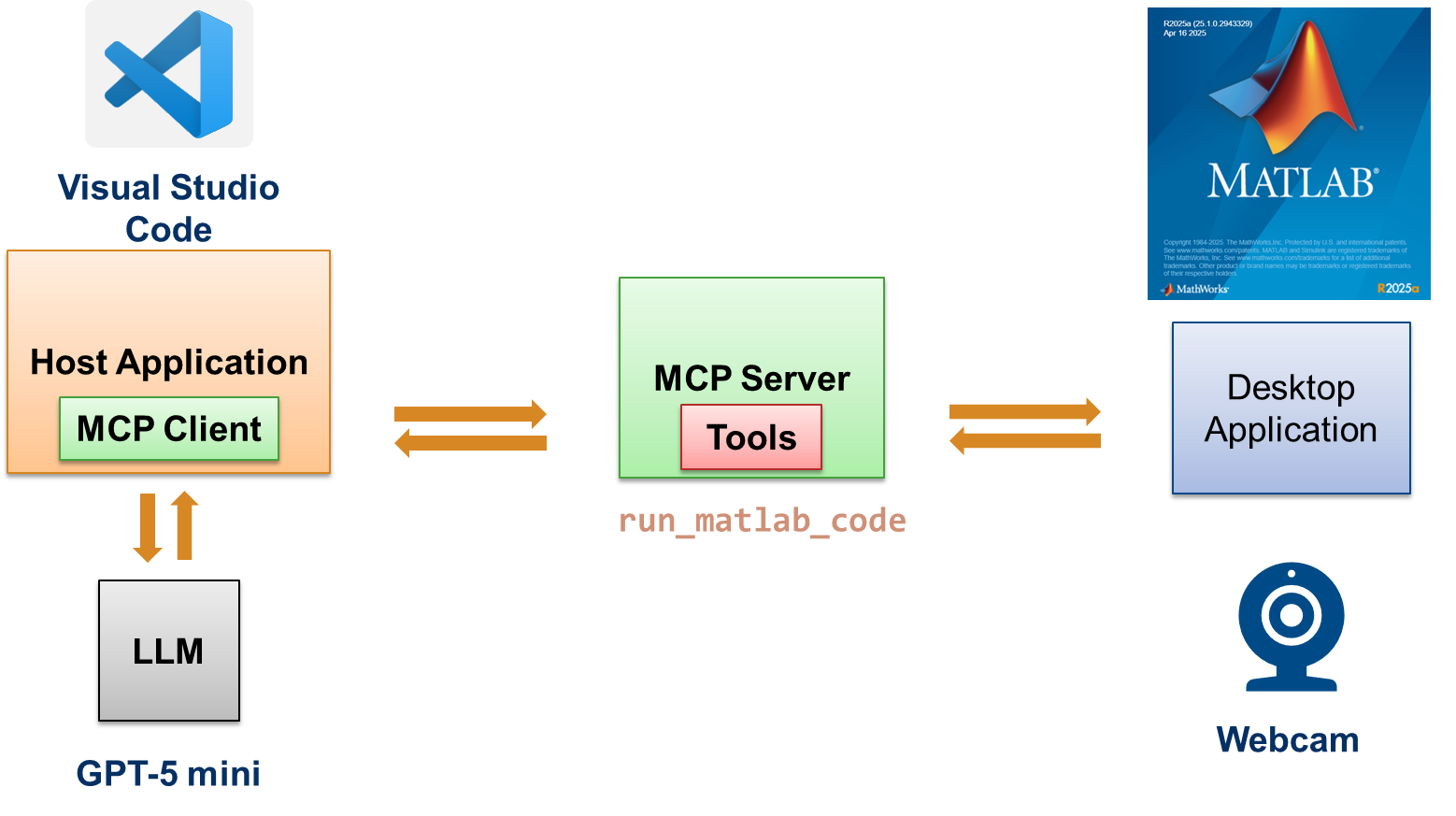

The MATLAB MCP Core Server changes this fundamental limitation by making your hardware and data part of the AI's reasoning process.

Webcam Face Detection in VS Code

Let me show you what hardware-integrated AI coding looks like in practice using GitHub Copilot in VS Code.

Say you want to capture an image from your webcam using Image Acquisition Toolbox and detect faces using Computer Vision Toolbox in MATLAB. In a traditional workflow, you would search documentation, write code, test it manually, debug errors, and iterate.

In VS Code with Copilot enabled and the MATLAB MCP Core Server configured, you could simply ask:

"Create and run MATLAB code that gets a snapshot from my Logitech Camera (not my integrated camera) using the Image Acquisition Toolbox functions, run face detection on the snapshot and circle the face in blue."

Copilot does not just write code; it uses the MCP server to execute that code directly in your MATLAB environment, where it accesses your actual webcam hardware through the Image Acquisition Toolbox. Going from a prompt to a working result took just 2.5 minutes!
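For a sense of what the generated code might look like, here is a minimal sketch written by hand, not the actual output from Copilot. It assumes a Windows machine (the `winvideo` adaptor), a camera whose device name contains "Logitech", and installed Image Acquisition Toolbox and Computer Vision Toolbox. The circles are drawn with `viscircles` from Image Processing Toolbox, which is one of several ways to do it.

```matlab
% Sketch only: assumes Windows ('winvideo' adaptor) and a camera whose
% device name contains "Logitech". Both are assumptions; adjust as needed.
adaptor = 'winvideo';
info = imaqhwinfo(adaptor);                  % list cameras seen by this adaptor
names = {info.DeviceInfo.DeviceName};
idx = find(contains(names, 'Logitech', 'IgnoreCase', true), 1);
assert(~isempty(idx), 'No camera with "Logitech" in its name was found.');

% Open the selected camera and grab one frame
vid = videoinput(adaptor, info.DeviceInfo(idx).DeviceID);
vid.ReturnedColorSpace = 'rgb';              % some default formats return YCbCr
img = getsnapshot(vid);
delete(vid);                                 % release the camera
clear vid

% Detect faces; the default cascade model targets frontal faces
detector = vision.CascadeObjectDetector();
bboxes = detector(img);                      % one [x y width height] row per face

% Show the snapshot and circle each detected face in blue
imshow(img);
if ~isempty(bboxes)
    centers = bboxes(:, 1:2) + bboxes(:, 3:4) / 2;   % center of each box
    radii = max(bboxes(:, 3:4), [], 2) / 2;          % half the larger box side
    viscircles(centers, radii, 'Color', 'b');
end
```

Selecting the camera by name rather than by a fixed device ID mirrors the "not my integrated camera" part of the prompt, since device IDs can differ from one machine to the next.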

The Real Problem with Current AI Workflows

Here is what engineers experience today: An AI chat suggests an algorithm for signal processing. You copy it and run it elsewhere. It crashes because calibration data from your specific sensor does not match the example assumptions. You describe the error back to the AI. It suggests a fix. Repeat ten times. By the time you have debugged the code, you have lost momentum and the AI's context feels stale.

But there is a deeper issue. Engineers don't just write code; they run it against real hardware, watch it fail in interesting ways, and use those failures to refine their understanding. A traditional LLM cannot do that. It cannot access your Arduino data stream and update the GPIO pins. It cannot connect to your oscilloscope, configure trigger levels, and iterate based on real measurements. The MATLAB Test and Measurement products, together with the MATLAB MCP Core Server, enable these workflows.
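To make the Arduino case concrete, here is a rough sketch of the kind of read-and-react loop an AI assistant could run once it has access to MATLAB. It assumes the MATLAB Support Package for Arduino Hardware is installed. The pin names, threshold, and sample count are hypothetical values chosen for illustration.

```matlab
% Sketch only: assumes the MATLAB Support Package for Arduino Hardware,
% a sensor on analog pin A0, and an LED on digital pin D13 (hypothetical wiring).
a = arduino();                        % connect to the first board MATLAB finds
thresholdVolts = 2.5;                 % hypothetical trip level

for k = 1:20
    v = readVoltage(a, 'A0');         % measure the real sensor voltage
    % Drive the output pin high when the reading exceeds the threshold
    writeDigitalPin(a, 'D13', double(v > thresholdVolts));
    fprintf('Sample %d: %.2f V\n', k, v);
    pause(0.5);
end

clear a                               % release the connection to the board
```

Because the loop prints real measurements, the assistant can read them back and adjust the threshold or the logic based on what the hardware actually does.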

Conclusion

The MATLAB MCP Core Server represents a fundamental shift in AI-assisted development for hardware. Rather than AI being separate from your hardware systems, it becomes an integrated participant in the development process.

For engineers building IoT systems, sensor networks, embedded controls, and hardware-connected applications, this is the missing link that makes AI-assisted development truly practical. Feedback loops with actual hardware and rapid iteration cycles transform how quickly you can prototype and deploy.

The future of engineering with AI is not about better code suggestions. It is about integrated intelligence that sees what your hardware sees, runs what you build, measures what happens, and iterates alongside you.
