Deep Learning in Action – part 2

Hello Everyone! It's Johanna, and Steve has allowed me to take over the blog from time to time to talk about deep learning.

I'm back for another episode of:

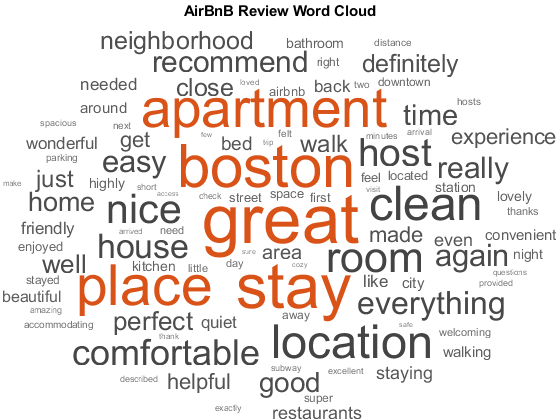

Demo: Sentiment Analysis Imagine typing in a term and instantly getting a sense of how that term is perceived? That's what we're going to do today. What better place to start when talking about sentiment, than Twitter? Twitter is filled with positively and negatively charged statements, and companies are always looking for insight into how their company is perceived without reading every tweet. Sentiment analysis can have many practical applications, such as branding, political campaigning, and advertising. In this example we'll analyze Twitter data to see whether the sentiment surrounding a specific term or phrase is generally positive or negative. Machine learning was (and still is) commonly used for sentiment analysis. It is often used to analyze individual words, whereas deep learning can be applied to complete sentences, greatly increasing its accuracy. Here is an app that Heather built to quickly show sentiment analysis in MATLAB. It ties into live Twitter data, shows a word cloud of the popular words associated with a term, and the overall sentiment score:

Before we get into the demo, I have two shameless plugs:

Training Data The original dataset that was used contains 1.6 million pre-classified tweets. This subset contains 100,000 tweets. The original dataset can be found at http://thinknook.com/twitter-sentiment-analysis-training-corpus-dataset-2012-09-22/ Here is a sampling of training tweets:

How were these classified as positive and/or negative? Great question! After all, we could have an argument/debate on whether “Back to work!” could also be a positive tweet.

In fact, even in the link to the training data, the author suggests:

Test the Model

Once we've trained the model, it can be used to see how well it predicts on new data.

Test the Model

Once we've trained the model, it can be used to see how well it predicts on new data.

I spoke with Heather about the results of this model: There are lots of ways to interpret the results, and you can spend lots of time improving these models. For example, these are the results of very generic Tweets, and you could make them more application specific if you wanted to bias the data towards those results.

Also, using the Stanford word embedding increases the model accuracy to 75%.

Before you judge the results, it’s often helpful to try this out on data that you want the model to be successful.

We can take a few sample tweets, or make our own! These are the few that I decided to try.

I spoke with Heather about the results of this model: There are lots of ways to interpret the results, and you can spend lots of time improving these models. For example, these are the results of very generic Tweets, and you could make them more application specific if you wanted to bias the data towards those results.

Also, using the Stanford word embedding increases the model accuracy to 75%.

Before you judge the results, it’s often helpful to try this out on data that you want the model to be successful.

We can take a few sample tweets, or make our own! These are the few that I decided to try.

Q&A with Heather

1. When choosing training data, did you pick random samples of all Tweets, or did you capture a certain category of tweets, for example political, scientific, celebrities, etc.?

2. Is this code available?

3. The calculateScore function, is that something you created? How do you determine the score?

4. Why text analytics?

5. Can you really predict stocks with this information?

Thanks to Heather for the demo, and taking the time to walk me through it! I hope you enjoyed it as well. Anything else you'd like to ask Heather? What type of demo would you like to see next? Leave a comment below!

“Deep Learning in Action: Cool projects created at MathWorks

This aims to give you insight into what we’re working on at MathWorks: I’ll show some demos, and give you access to the code and maybe even post a video or two. Today’s demo is called "Sentiment Analysis" and it’s the second article in a series of posts, including:- 3D Point Cloud Segmentation using CNNs

- GPU Coder

- Age Detection

- Pictionary

Demo: Sentiment Analysis Imagine typing in a term and instantly getting a sense of how that term is perceived? That's what we're going to do today. What better place to start when talking about sentiment, than Twitter? Twitter is filled with positively and negatively charged statements, and companies are always looking for insight into how their company is perceived without reading every tweet. Sentiment analysis can have many practical applications, such as branding, political campaigning, and advertising. In this example we'll analyze Twitter data to see whether the sentiment surrounding a specific term or phrase is generally positive or negative. Machine learning was (and still is) commonly used for sentiment analysis. It is often used to analyze individual words, whereas deep learning can be applied to complete sentences, greatly increasing its accuracy. Here is an app that Heather built to quickly show sentiment analysis in MATLAB. It ties into live Twitter data, shows a word cloud of the popular words associated with a term, and the overall sentiment score:

Before we get into the demo, I have two shameless plugs:

- If you are doing any sort of text analysis, you should really check out the new Text Analytics Toolbox. I’m not a text analytics expert, and I take for granted all the processing that needs to happen to turn natural language into something a computer can understand. In this example, there are functions to take all the hard work out of processing text, something that will save you hundreds of hours.

- Secondly, I just discovered that if you want to plug into live Twitter feed data, we have a toolbox for that too! This is the Datafeed Toolbox. It lets you access live feeds like Twitter, and real-time market data from leading financial data providers. Any day traders out there? Might be worth considering this toolbox!

Training Data The original dataset that was used contains 1.6 million pre-classified tweets. This subset contains 100,000 tweets. The original dataset can be found at http://thinknook.com/twitter-sentiment-analysis-training-corpus-dataset-2012-09-22/ Here is a sampling of training tweets:

| Tweet | Sentiment |

| "I LOVE @Health4UandPets u guys r the best!! " | Positive |

| "@nicolerichie: your picture is very sweet " | Positive |

| "Dancing around the room in Pjs, jamming to my ipod. Getting dizzy. Well twitter, you asked! " | Positive |

| "Back to work! " | Negative |

| "tired but can't sleep " | Negative |

| "Just has the worst presentation ever! " | Negative |

| "So it snowed last night. Not enough to call in for a snow day at work though. " | Negative |

…10% of sentiment classification by humans can be debated…The categories of training data can be determined through manual labeling, using emojis to label sentiment, using a machine learning or deep learning model to get determine sentiment, or a combination of these. I'd recommend verifying that you agree with the dataset categories depending on what you want the outcome to be. For example: move "Back to work!" into the positive category if you think this is a positive statement; its up to you to determine how your model is going to respond. Data Prep We first clean the data by removing punctuation and URLs:

% Preprocess tweets tweets = lower(tweets); tweets = eraseURLs(tweets); tweets = removeHashtags(tweets); tweets = erasePunctuation(tweets); t = tokenizedDocument(tweets);We can also remove “stop words” such as “the”, “and”, which do not add helpful information that will help the algorithm learn.

% Edit stop words list to take out words that could carry important meaning % for sentiment of the tweet newStopWords = stopWords; notStopWords = ["are", "aren't", "arent", "can", "can't", "cant", ... "cannot", "could", "couldn't", "did", "didn't", "didnt", "do", "does",... "doesn't", "doesnt", "don't", "dont", "is", "isn't", "isnt", "no", "not",... "was", "wasn't", "wasnt", "with", "without", "won't", "would", "wouldn't"]; newStopWords(ismember(newStopWords,notStopWords)) = []; t = removeWords(t,newStopWords); t = removeWords(t,{'rt','retweet','amp','http','https',... 'stock','stocks','inc'}); t = removeShortWords(t,1);And then perform “word embedding” which has been explained to me as turning words into vectors to be used as training. More officially, it can be used to create a word vector based on unsupervised learning of word co-occurrences in text. Word embedding are typically unsupervised models, which can be trained in MATLAB, or there are several pre-trained word vector models with varying sizes of vocabulary and dimensions from a number of sources like Wikipedia and Twitter. Another shameless plug for text analytics toolbox, which makes this step very simple.

embeddingDimension = 100; embeddingEpochs = 50; emb = trainWordEmbedding(tweetsTrainDocuments, ... 'Dimension',embeddingDimension, ... 'NumEpochs',embeddingEpochs, ... 'Verbose',0)We can then set up the structure of the network. In this example we'll use a long short-term memory ( LSTM) network, a recurrent neural network (RNN) that can learn dependencies over time. At a high level, LSTMs are good for classifying sequence and time-series data. For text analytics, this means that an LSTM will take into account not only the words in a sentence, but the structure and combination of words, as well. The network itself is very simple:

layers = [ sequenceInputLayer(inputSize)

lstmLayer(outputSize,'OutputMode','last')

fullyConnectedLayer(numClasses)

softmaxLayer

classificationLayer ]

When run on a GPU, it trains very quickly, taking just 6 minutes for 30 epochs (complete passes through the data).

Here is our ever-famous training plot. Just in case you haven’t tried this, in training options: set 'Plots','training-progress'

[YPred,scores] = classify(net,XTest); accuracy = sum(YPred == YTest)/numel(YPred)accuracy = 0.6606

heatmap(table(YPred,YTest,'VariableNames',{'Predicted','Actual'}),...

'Predicted','Actual');

I spoke with Heather about the results of this model: There are lots of ways to interpret the results, and you can spend lots of time improving these models. For example, these are the results of very generic Tweets, and you could make them more application specific if you wanted to bias the data towards those results.

Also, using the Stanford word embedding increases the model accuracy to 75%.

Before you judge the results, it’s often helpful to try this out on data that you want the model to be successful.

We can take a few sample tweets, or make our own! These are the few that I decided to try.

I spoke with Heather about the results of this model: There are lots of ways to interpret the results, and you can spend lots of time improving these models. For example, these are the results of very generic Tweets, and you could make them more application specific if you wanted to bias the data towards those results.

Also, using the Stanford word embedding increases the model accuracy to 75%.

Before you judge the results, it’s often helpful to try this out on data that you want the model to be successful.

We can take a few sample tweets, or make our own! These are the few that I decided to try.

tw = ["I'm really sad today. I was sad yesterday too"

"This is super awesome! The best!"

"Everyone should be buying this!"

"Everything better with bacon"

"There is no more bacon."];

s = preprocessTweets(tw);

C = doc2sequence(emb,s);

[pred,score] = classify(net,C)

| pred = 5x1 categorical array |

| neutral |

| positive |

| positive |

| positive |

| negative |

| score = 5×3 single matrix |

| 0.4123 | 0.5021 | 0.0856 |

| 0.0206 | 0.0370 | 0.9424 |

| 0.2423 | 0.0526 | 0.7052 |

| 0.1280 | 0.0180 | 0.8540 |

| 0.9696 | 0.0278 | 0.0027 |

totalScore = calculateScore(score)

| totalScore = 5×1 single column vector |

| -0.0043 |

| 0.9259 |

| 0.8949 |

| 0.9641 |

| 0.9444 |

| If I'm working with finance data, I pick stock price discussions to make sure we're getting the right content and context. But it's also good practice to include some generic text as well, since it's good to have your model have samples of "normal" language too. It's just like any other example of deep learning - if you're really intent on identify cats and dogs in pictures, make sure you have lots of pictures of cats and dogs. You may also want to throw in some pictures of images that could easily confuse the model too, so that it learns those differences. |

| I will have the demo on FileExchange (in about a week or two), but the live Twitter portion has been disabled, since you'll need the Datafeed toolbox and your own Twitter credentials for that to work. If you're serious about that part, you can look at the demos in Datafeed toolbox which will walk you through those steps. |

| I made it up! There's lots of more sophisticated ways of doing score, but since it brings back the probabilities of the 3 classes, I set neutral to zero, and normalized the positive and negative score to create a final score. |

| Text analytics is a really interesting and rich research area. There's still new research coming out, and it's up and coming. Preprocessing text is really different and offers a completely different set of challenges than images. Numbers are more predictable, and in some ways easier. How you preprocess the text can have a huge impact on the results of the model. |

| Tweets really do track with finance data, and can give you insight into certain stocks. Bloomberg provides social sentiment analytics, and has written a few articles about the topic. So you could just use this score, but you have no control over the model and the data. Plus we already mentioned that preprocessing can have a huge effect on the outcome of the score. There's a lot more insight if you are actually doing it yourself. |

- 类别:

- Deep Learning

评论

要发表评论,请点击 此处 登录到您的 MathWorks 帐户或创建一个新帐户。