Embedded AI Integration with MATLAB and Simulink

Embedded AI, the integration of artificial intelligence with embedded systems, enables devices to process data and make decisions locally. Running AI on the device can improve efficiency, reduce latency, and improve the user experience. This blog post describes what Embedded AI is, highlights the benefits of using MATLAB and Simulink for Embedded AI, and provides an overview of a recent webinar on Embedded AI.

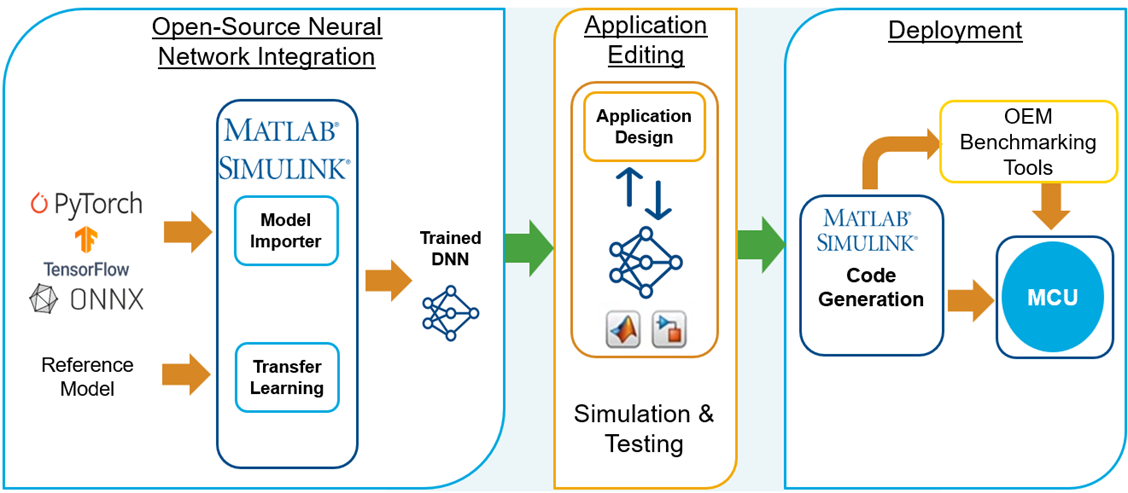

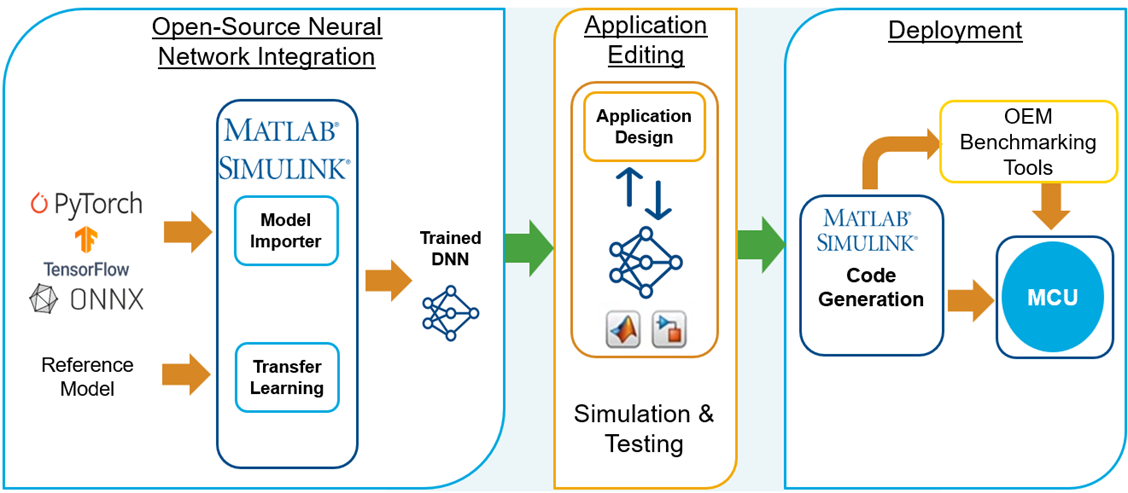

Figure: The complete embedded AI workflow, as presented and explained in the recent webinar.

Embedded AI is the integration of artificial intelligence (AI) capabilities directly into embedded systems. Embedded AI covers both signal and vision applications. For image and video data, Embedded AI is also known as Embedded Vision.

An embedded system is a combination of computer hardware and software designed to perform one or more dedicated functions within a larger system. Embedded systems are found in a wide range of applications, from automotive and aerospace to consumer electronics and industrial automation. They typically have real-time computing constraints and are optimized for specific tasks, often with limited resources such as memory and processing power.

Figure: A virtual sensor designed with AI is an example of an Embedded AI system. A virtual sensor for estimating battery State-of-Charge (SOC) is shown.

Figure: W-shaped development process. Credit: EASA, Daedalean

Key Characteristics

When talking about Embedded AI, keep in mind three key characteristics:

- Integration with Hardware - AI algorithms are integrated into the device hardware, often requiring optimization to run efficiently on limited resources.

- Dedicated Functions - The AI capabilities are tailored to specific applications, such as voice recognition in smart speakers or image processing in cameras.

- Limited Resources - Embedded systems typically have constraints on processing power, memory, and energy consumption.

Embedded AI vs Edge AI vs TinyML

The terms Embedded AI, Edge AI, and TinyML refer to closely related but distinct concepts within artificial intelligence, each with its own focus and application domains. All three involve real-time or near-real-time processing. The key attribute of Embedded AI is a resource-constrained target, such as an MCU (microcontroller unit), that typically cannot host the libraries and runtimes that AI frameworks depend on. Embedded AI therefore requires embedded code (C/C++ or HDL). Edge AI includes Embedded AI but also covers running AI models on industrial computers, which have far more resources than embedded systems. TinyML is a subarea of Embedded AI that brings AI to the smallest MCUs and sensors, and therefore operates under the most stringent limitations.

Figure: Edge AI system for visual inspection on an industrial computer (see blog post)
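The comparison above notes that a resource-constrained target usually needs plain embedded code rather than an AI framework at run time. As a rough, hand-written illustration (not code generated by MATLAB Coder or any other MathWorks tool), the C sketch below shows what such code tends to look like for a single fully connected layer with ReLU activation. The layer sizes, the weight values, and the function name `dense_relu` are hypothetical placeholders.

```c
#include <stddef.h>

/* Hypothetical layer sizes, chosen only for illustration. */
#define N_IN  3
#define N_OUT 2

/* Placeholder weights and biases. In practice these come from a trained
   model; the values here are made up and do not represent any real network. */
static const float W[N_OUT][N_IN] = {
    { 0.5f, -1.0f,  0.25f },
    { 1.5f,  0.0f, -0.5f  }
};
static const float B[N_OUT] = { 0.1f, -0.2f };

/* Fully connected layer followed by ReLU: y = max(0, W x + b).
   All sizes are compile-time constants, the parameters live in read-only
   static storage, and there is no heap allocation or library call. */
void dense_relu(const float x[N_IN], float y[N_OUT])
{
    for (size_t i = 0; i < N_OUT; ++i) {
        float acc = B[i];
        for (size_t j = 0; j < N_IN; ++j) {
            acc += W[i][j] * x[j];
        }
        y[i] = (acc > 0.0f) ? acc : 0.0f; /* ReLU activation */
    }
}
```

For example, calling `dense_relu` with `x = {2.0f, 0.5f, 1.0f}` yields approximately `{0.85f, 2.3f}`. Fixing all sizes at compile time lets the toolchain account for every byte of memory statically, which matters on an MCU that may have no operating system or heap.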

Why MATLAB and Simulink for Embedded AI?

You can use MATLAB and Simulink to design, simulate, test, verify, and deploy Embedded AI algorithms that enhance the performance and functionality of complex engineering systems. MATLAB and Simulink provide a complete and versatile set of tools for designing complex AI-empowered embedded systems, for applications such as enhancing a quality inspection robot with deep learning or developing a control system that uses virtual sensors for temperature estimation.

Figure: Quality inspection robot empowered by AI

Key Benefits

Some of the key benefits of using MATLAB and Simulink tools for Embedded AI are:

- Simulation and Testing of AI-driven systems in Simulink. You can integrate trained machine learning models into Simulink systems using the provided Simulink blocks, evaluate model performance within the system, and perform processor-in-the-loop (PIL) or hardware-in-the-loop (HIL) testing. To learn more, see Integrating AI into System-Level Design and AI with Model-Based Design.

- Integration between MATLAB and Python-based deep learning frameworks. You can import pretrained deep learning models from PyTorch®, TensorFlow™, and ONNX™ into MATLAB. You can also export deep neural networks from MATLAB to TensorFlow and ONNX. To learn more, check out Convert Deep Learning Models between PyTorch, TensorFlow, and MATLAB.

Figure: Conversion of deep learning models between MATLAB, PyTorch, TensorFlow, and ONNX

- Deployment to Resource-Constrained Targets. You can generate plain C/C++ source code (with no dependency on a runtime or interpreter) for CPUs and microcontrollers, CUDA code for NVIDIA® GPUs, and Verilog and VHDL® code for AMD and Intel® FPGAs and SoCs. You can also compress models to reduce their computational cost by pruning, projection, or quantization.

Figure: Automatic code generation from MATLAB and Simulink enables rapid prototyping and deployment of AI applications on embedded devices

- Verification and Validation for AI. You can verify and validate AI models, which is especially important in safety-critical industries such as automotive, aerospace, and medical devices (to learn more, see the blog post series Verification and Validation for AI). You can also apply explainable AI techniques to machine learning and deep learning models, and verify the robustness of deep neural networks with Deep Learning Toolbox Verification Library.
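The deployment benefit listed above mentions quantization as one way to reduce a model's computational cost. A minimal sketch of generic per-tensor affine int8 quantization is shown below in C, assuming a scale and zero point chosen from the observed min/max range of the values. This is a textbook scheme chosen for illustration; it is not necessarily how MATLAB's quantization tools work, and `QuantParams`, `choose_params`, `quantize`, and `dequantize` are hypothetical names.

```c
#include <stddef.h>
#include <stdint.h>

/* Per-tensor affine quantization parameters (a generic scheme assumed here). */
typedef struct {
    float   scale;      /* real-valued step between adjacent int8 levels */
    int32_t zero_point; /* int8 code that represents the real value 0.0 */
} QuantParams;

/* Round half away from zero without depending on libm. */
static long round_to_long(float v)
{
    return (long)(v >= 0.0f ? v + 0.5f : v - 0.5f);
}

/* Pick scale and zero point so that the observed range of x, widened to
   include 0.0, maps onto the int8 range [-128, 127]. */
QuantParams choose_params(const float *x, size_t n)
{
    float lo = 0.0f, hi = 0.0f;
    for (size_t i = 0; i < n; ++i) {
        if (x[i] < lo) lo = x[i];
        if (x[i] > hi) hi = x[i];
    }
    QuantParams p;
    p.scale = (hi > lo) ? (hi - lo) / 255.0f : 1.0f;
    p.zero_point = (int32_t)round_to_long(-128.0f - lo / p.scale);
    return p;
}

/* float -> int8, saturating at the ends of the int8 range. */
int8_t quantize(float x, QuantParams p)
{
    long q = round_to_long(x / p.scale) + p.zero_point;
    if (q < -128) q = -128;
    if (q > 127) q = 127;
    return (int8_t)q;
}

/* int8 -> approximate float. */
float dequantize(int8_t q, QuantParams p)
{
    return (float)((int32_t)q - p.zero_point) * p.scale;
}
```

Storing each weight as an `int8_t` instead of a 32-bit `float` cuts weight storage by a factor of four. In this scheme, values inside the calibrated range come back from `dequantize` within about `scale / 2` of the original, and 0.0 is represented exactly because the zero point is an integer code.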

Discover More

Check out these customer stories featuring Embedded AI systems built with MATLAB and Simulink:

- Coca-Cola Develops Virtual Pressure Sensor with Machine Learning to Improve Beverage Dispenser Diagnostics

- Mercedes-Benz Simulates Hardware Sensors with Deep Neural Networks

- Miba AG Creates Deep Learning System for Enhanced Quality Inspection

Webinar Highlights

- Engineers can incorporate AI into their Simulink models with confidence, ensuring that the AI models function as intended when deployed in their products.

- Testing and verifying the robustness of AI models within Simulink systems is simplified, enabling direct and efficient validation of model performance.

- Generation of deployment-ready code is automated, so engineers can move rapidly from model to deployment on MCUs without manually translating the model into code.
