We’ve Got You Covered

Coverage. It's all good. Health care coverage is good. Cell phone coverage is good. Even cloud coverage can provide a nice break from the intensity of the sun's rays sometimes. It should come as no surprise, then, that good code coverage also gives us that warm fuzzy feeling, like a hot drink on a cold day.

...and often for good reason. Code coverage can show us areas of our code that are not exercised at all, and it can be very helpful, in a good-friend-who-can-be-brutally-honest-with-you sort of way. It can point out where we really need to add more testing. Once we take our good friend's advice and add better tests, we are all the better for it.

A quick word of caution is in order. We should all remember that code coverage does not so much show which code is covered as which code is not covered. Seeing that a piece of code is covered says nothing about how it was covered. It does not tell you whether a test specifically targeted that code or merely ran it incidentally, and it does not tell you whether anything verified that the code produced the correct result. The takeaway: use coverage to find areas of the code that are not tested. Don't fall into the trap of concluding that covered code is bug-free, or even that it is sufficiently tested.
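To make the caveat concrete, here is a small, hypothetical function-based test file. The names coverageCaveatTest and clampToUnit are invented for illustration. Both tests execute every line of clampToUnit, so line coverage reports them identically, yet only the second one checks anything. In a real project, clampToUnit would live in the source folder that the coverage plugin measures. It is a local function here only to keep the sketch in one file.

```matlab
function tests = coverageCaveatTest
% Hypothetical function-based test file illustrating the coverage caveat:
% both tests execute every line of clampToUnit, but only one verifies results.
tests = functiontests(localfunctions);
end

function testRunsCodeWithoutChecking(testCase) %#ok<INUSD>
% Exercises every branch (full line coverage) but verifies nothing.
clampToUnit(-5);
clampToUnit(5);
clampToUnit(0.5);
end

function testChecksResults(testCase)
% Covers exactly the same lines, but now each branch's result is verified.
testCase.verifyEqual(clampToUnit(-5), 0);
testCase.verifyEqual(clampToUnit(5), 1);
testCase.verifyEqual(clampToUnit(0.5), 0.5);
end

function y = clampToUnit(x)
% Hypothetical function under test: clamps a scalar x into [0, 1].
if x < 0
    y = 0;
elseif x > 1
    y = 1;
else
    y = x;
end
end
```

Suppose any branch of clampToUnit returned the wrong value. testRunsCodeWithoutChecking would still pass, and the coverage report for clampToUnit would look exactly the same.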

That said, it is a great tool if you have the right mindset about it, and when combined with testing and continuous integration it can help us ensure our testing is up to snuff.

Good news! This just got a lot easier. We have had the CodeCoveragePlugin for the unit test framework for some time, but the report it produced was designed more for interactive MATLAB sessions than for CI system workflows. Now, as of R2017b, we have the option to produce coverage in the Cobertura XML format and benefit from tools like the Cobertura plugin for Jenkins.
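Because Cobertura output is plain XML, it can also be consumed outside Jenkins. Here is a minimal sketch, which the setup in this post does not require: a hypothetical helper, readCoberturaLineRate, that pulls the overall line-rate attribute from the root coverage element of a file such as coverage.xml. It assumes the standard Cobertura layout, where line-rate is a fraction between 0 and 1. To stay dependency-free, it uses a simple regular-expression match rather than a full XML parse.

```matlab
function rate = readCoberturaLineRate(coverageFile)
% Hypothetical helper (not part of the MATLAB unit test framework).
% Returns the overall line coverage, as a fraction from 0 to 1, stored in
% the line-rate attribute of the root <coverage> element of a Cobertura
% XML file. Returns NaN if no such attribute is found.
txt = fileread(coverageFile);

% Match the opening <coverage ...> tag and capture the line-rate value.
tok = regexp(txt, '<coverage\s[^>]*?line-rate="([^"]*)"', 'tokens', 'once');

if isempty(tok)
    rate = NaN;
else
    rate = str2double(tok{1});
end
end
```

In a CI script, for example, one could compare the returned rate against a team-chosen threshold and call exit(1) when it falls short.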

Let's continue with our (matrix'd) build from last time, but add some coverage.

```matlab
import('matlab.unittest.plugins.CodeCoveragePlugin');
import('matlab.unittest.plugins.codecoverage.CoberturaFormat');

coverageFile = fullfile(resultsDir, 'coverage.xml');
runner.addPlugin(CodeCoveragePlugin.forFolder(src, ...
    'Producing', CoberturaFormat(coverageFile)));
```

Easy as pie. Now let's see what it looks like in our CI build:

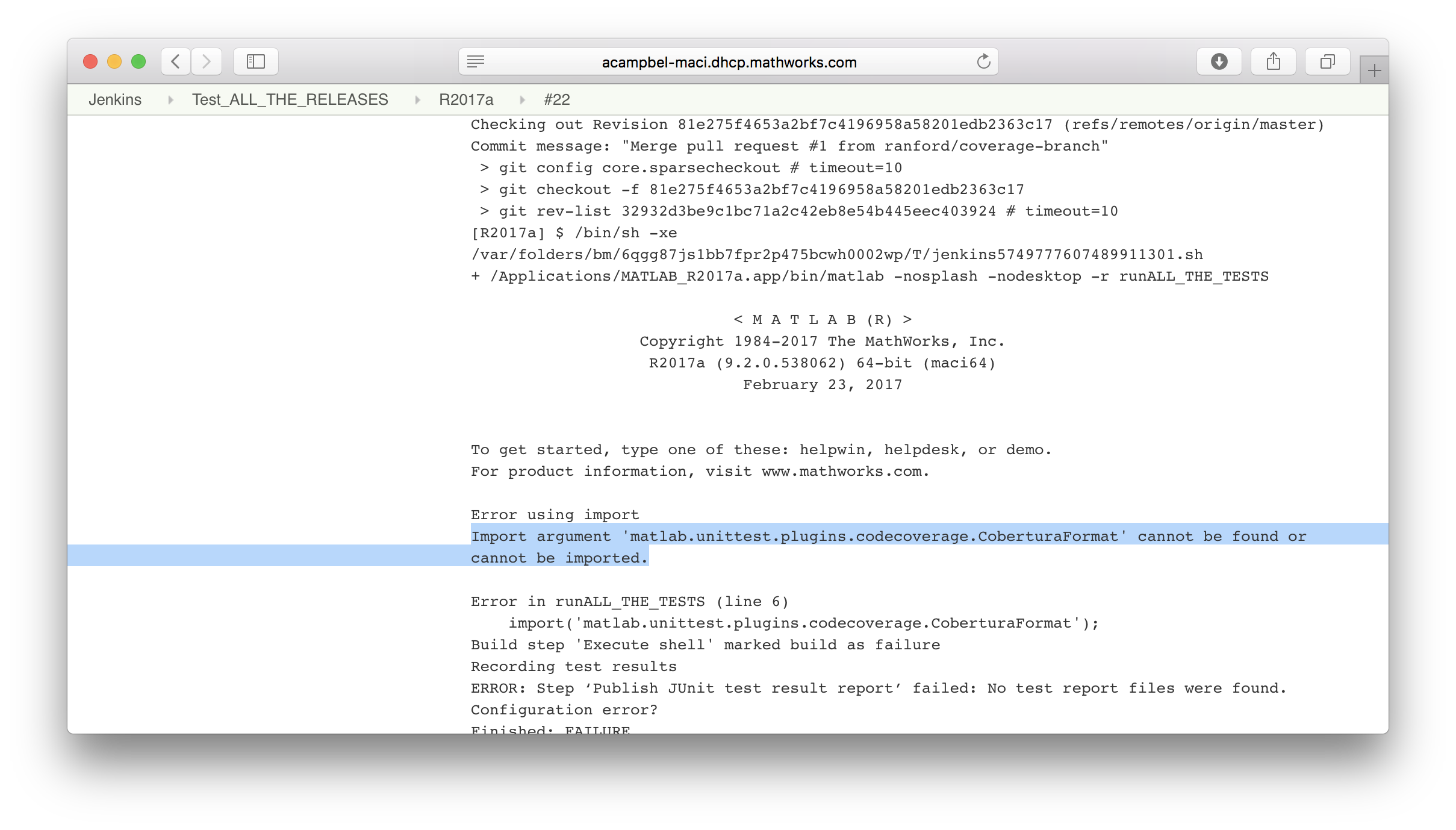

Oops! I forgot that the other releases we are building against don't have the plugin:

No biggie; we just need to wrap the code that adds the plugin to the TestRunner in a version check. Let's put it into a function:

```matlab
function addCoberturaCoverageIfPossible(runner, src, coverageFile)
% Cobertura-format coverage is only available in R2017b (MATLAB 9.3) and later,
% so skip it silently in earlier releases.
if ~verLessThan('matlab', '9.3')
    import('matlab.unittest.plugins.CodeCoveragePlugin');
    import('matlab.unittest.plugins.codecoverage.CoberturaFormat');
    runner.addPlugin(CodeCoveragePlugin.forFolder(src, ...
        'Producing', CoberturaFormat(coverageFile)));
end
end
```

...and then we can just call that function from our test-running script:

```matlab
try
    import('matlab.unittest.TestRunner');
    import('matlab.unittest.plugins.XMLPlugin');
    import('matlab.unittest.plugins.ToFile');

    ws = getenv('WORKSPACE');

    src = fullfile(ws, 'source');
    addpath(src);

    tests = fullfile(ws, 'tests');
    suite = testsuite(tests);

    % Create and configure the runner
    runner = TestRunner.withTextOutput('Verbosity', 3);

    % Add the JUnit-style XML results plugin
    resultsDir = fullfile(ws, 'testresults');
    mkdir(resultsDir);

    resultsFile = fullfile(resultsDir, 'testResults.xml');
    runner.addPlugin(XMLPlugin.producingJUnitFormat(resultsFile));

    % Add Cobertura coverage in releases that support it
    coverageFile = fullfile(resultsDir, 'coverage.xml');
    addCoberturaCoverageIfPossible(runner, src, coverageFile);

    results = runner.run(suite)
catch e
    disp(getReport(e, 'extended'));
    exit(1);
end
quit('force');
```
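A version number is only a proxy for the feature we actually need. As an alternative sketch, a hypothetical hasCoberturaSupport function could ask directly whether the CoberturaFormat class exists. It does this with meta.class.fromName, which returns an empty result when a class cannot be found. Its result could then stand in for ~verLessThan('matlab','9.3') inside addCoberturaCoverageIfPossible.

```matlab
function tf = hasCoberturaSupport()
% Hypothetical feature check: true if this MATLAB release provides the
% Cobertura coverage format class, false otherwise.
% meta.class.fromName returns an empty array when the class is not found.
mc = meta.class.fromName('matlab.unittest.plugins.codecoverage.CoberturaFormat');
tf = ~isempty(mc);
end
```

Feature detection states the real requirement directly, while the version check is arguably easier to read at a glance. Either works for the matrix build described here.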

Also, the MATLAB code is only half of the equation. We also need to ensure that the Jenkins Cobertura plugin is installed and that the build is configured to track coverage. Here we simply add it as a post-build action and configure it to look in our results folder for files named coverage.xml.

Note that since we are running tests across multiple releases, and the earlier releases don't support Cobertura output, we also need to tell the Jenkins Cobertura plugin not to fail the build when no coverage files are present. Otherwise, the builds for all the earlier releases will fail because no Cobertura XML file will be found.

OK, now we are in business. The build now passes across all releases:

And when we navigate to the R2017b build, we can see the coverage report:

Digging in deeper we can then see where we need to add a test:

The build now shows very clearly that we forgot to add a negative test. Let's remedy that! Here is the test I want to write:

```matlab
function testInvalidInput(testCase)
% Test to ensure we fail gracefully with bogus input
testCase.verifyError(@() simulateSystem('bunk'), ...
    'simulateSystem:InvalidDesign:ShouldBeStruct');
end
```

However, looking again at our source, I notice something horrible: the code is not testable! As a reminder, here is the code for the simulator:

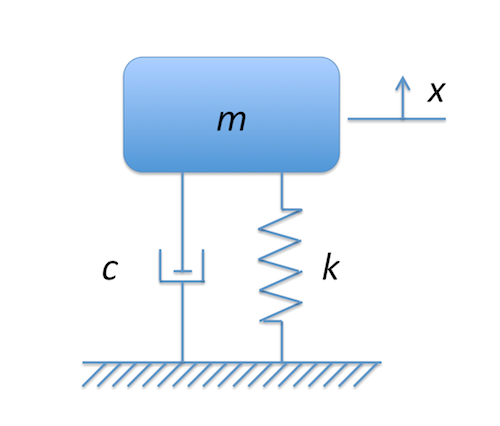

```matlab
function [x, t] = simulateSystem
springMassDamperDesign; % Create design variable.

if ~isstruct(design) || ~all(isfield(design, {'c','k'}))
    error('simulateSystem:InvalidDesign:ShouldBeStruct', ...
        'The design should be a structure with fields "c" and "k"');
end

% Design variables
c = design.c;
k = design.k;

% Constant variables
z0 = [-0.1; 0]; % Initial Position and Velocity
m = 1500;       % Mass

odefun = @(t,z) [0 1; -k/m -c/m]*z;
[t, z] = ode45(odefun, [0, 3], z0);

% The first column is the position (displacement from equilibrium)
x = z(:, 1);
```

...and for the design:

```matlab
m = 1500; % Need to know the mass to determine critical damping
design.k = 5e6; % Spring Constant
design.c = 2*m*sqrt(design.k/m); % Damping Coefficient to be critically damped
clear m;
```
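For reference, the numbers in the design come from the standard mass-spring-damper model. The odefun line in simulateSystem writes that model in first-order state-space form, with state z = [x; dx/dt]. Critical damping corresponds to a damping ratio of one:

$$
\begin{aligned}
m\ddot{x} + c\dot{x} + kx &= 0,
\qquad
z = \begin{bmatrix} x \\ \dot{x} \end{bmatrix},
\qquad
\dot{z} = \begin{bmatrix} 0 & 1 \\ -k/m & -c/m \end{bmatrix} z \\
\zeta = \frac{c}{2\sqrt{km}} = 1
\;&\Rightarrow\;
c = 2\sqrt{km} = 2m\sqrt{k/m} \\
k = 5\times 10^{6},\; m = 1500
\;&\Rightarrow\;
c = 2\sqrt{7.5\times 10^{9}} \approx 1.73\times 10^{5}
\end{aligned}
$$

That last value is what design.c evaluates to.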

Yikes! There is no way to get a bad value into the code in order to test it, so as it stands, the error-handling code is dead. We could just remove the error condition, since we know the script produces a design in the right format. However, that would miss the point. The whole reason we separated the design from the simulation script is so that we could tweak the design and explore. We really want the simulateSystem function to be reusable across many different designs, so we should parameterize it properly. Then the software is much more testable, which is synonymous with flexible. To do this, all we need to do is turn the design script into a function and pass the design into the simulation function as an input:

```matlab
% simulateSystem.m
function [x, t] = simulateSystem(design)
if ~isstruct(design) || ~all(isfield(design, {'c','k'}))
    error('simulateSystem:InvalidDesign:ShouldBeStruct', ...
        'The design should be a structure with fields "c" and "k"');
end

% Design variables
c = design.c;
k = design.k;

% Constant variables
z0 = [-0.1; 0]; % Initial Position and Velocity
m = 1500;       % Mass

odefun = @(t,z) [0 1; -k/m -c/m]*z;
[t, z] = ode45(odefun, [0, 3], z0);

% The first column is the position (displacement from equilibrium)
x = z(:, 1);
```

```matlab
% springMassDamperDesign.m
function design = springMassDamperDesign
m = 1500; % Need to know the mass to determine critical damping
design.k = 5e6; % Spring Constant
design.c = 2*m*sqrt(design.k/m); % Damping Coefficient to be critically damped
```
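With the design passed in as an argument, exploring alternatives no longer requires editing a script. As an illustration, a hypothetical sweepDampingRatio helper could rescale the baseline damping coefficient to several damping ratios and record max(position) for each. That is the same overshoot measure testOvershoot uses. The helper assumes the simulateSystem and springMassDamperDesign functions are on the path. It hard-codes m = 1500 to match the mass used inside simulateSystem.

```matlab
function overshoots = sweepDampingRatio(zetas)
% Hypothetical helper: simulate the baseline design at several damping
% ratios and return max(position) for each, mirroring testOvershoot.
% Assumes simulateSystem.m and springMassDamperDesign.m are on the path.
m = 1500;                                    % must match the mass inside simulateSystem
baseline = springMassDamperDesign;
overshoots = zeros(size(zetas));
for i = 1:numel(zetas)
    design = baseline;
    design.c = 2*zetas(i)*sqrt(design.k*m);  % zetas(i) = 1 reproduces baseline.c
    x = simulateSystem(design);
    overshoots(i) = max(x);
end
end
```

For example, sweepDampingRatio([0.5 1 2]) returns one overshoot value per ratio. Ratios below one give an underdamped response that overshoots zero, while the critically damped baseline does not.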

Make a few test updates:

```matlab
function tests = designTest
tests = functiontests(localfunctions);
end

function testSettlingTime(testCase)
% Test that the system settles to within 0.001 of zero under 2 seconds.
[position, time] = simulateSystem(springMassDamperDesign);

positionAfterSettling = position(time > 2);

% For this example, verify every position value after the settling time.
verifyLessThan(testCase, abs(positionAfterSettling), 0.001);
end

function testOvershoot(testCase)
% Test to ensure that overshoot is less than 0.01
[position, ~] = simulateSystem(springMassDamperDesign);
overshoot = max(position);
verifyLessThan(testCase, overshoot, 0.01);
end

function testInvalidInput(testCase)
% Test to ensure we fail gracefully with bogus input
testCase.verifyError(@() simulateSystem('bunk'), ...
    'simulateSystem:InvalidDesign:ShouldBeStruct');
end
```
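Coverage has a blind spot here, too. The 'bunk' input fails the ~isstruct(design) check. Because || short-circuits, the isfield half of the condition never runs, yet line coverage still reports the error line as covered. A hypothetical companion test file could close that gap with a structure that is missing the 'k' field. The name designInputTest is made up for this sketch, and the file assumes simulateSystem.m is on the path.

```matlab
function tests = designInputTest
% Hypothetical companion test file for simulateSystem input validation.
tests = functiontests(localfunctions);
end

function testDesignMissingField(testCase)
% A struct without the 'k' field passes the ~isstruct(design) check,
% so this input exercises the isfield half of the validation condition.
badDesign = struct('c', 1);
testCase.verifyError(@() simulateSystem(badDesign), ...
    'simulateSystem:InvalidDesign:ShouldBeStruct');
end
```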

...and now we should be in business. Let's check the build and the resulting coverage:

Ah, that's nice. Let's call it a day.

...but not before you check out Steve's new blog on deep learning! The man is amazing. He's been a world-class blogger on image processing for years, and now he's adding deep learning to his repertoire. He has even promised that he will keep the image processing blog going. What a guy! I'm looking forward to it. Be sure to catch up; he already has a couple of posts here and here.
