Last year's Valentine post was an attempt to link a few Valentine's MATLAB animations with good practice in research software engineering. This year, I used Claude Code along with MATLAB's MCP server...

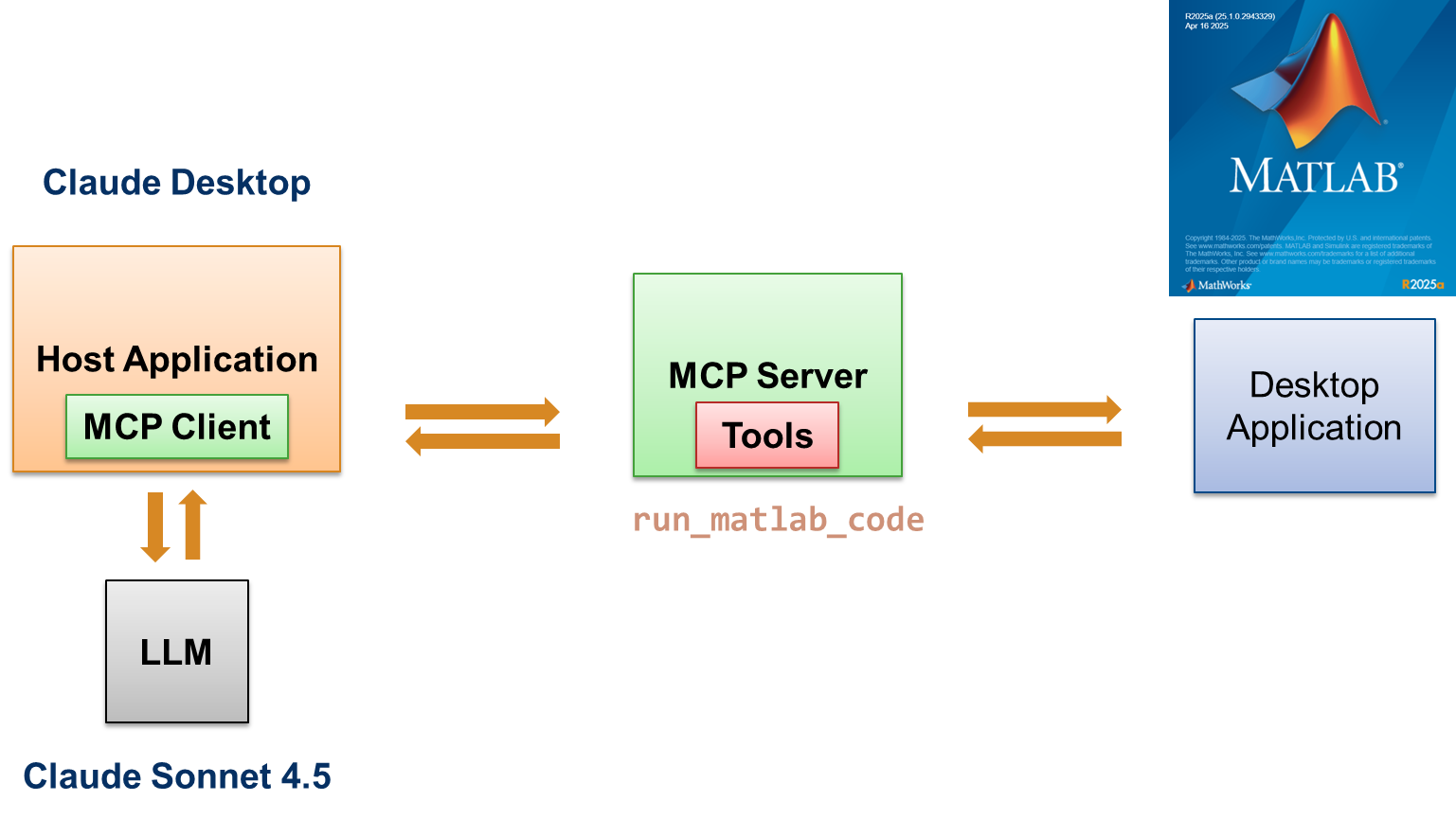

Bio: This blog post is co-authored by Toshi Takeuchi, Community Advocate active in online communities. Toshi has held marketing roles at MathWorks over the last 19 years. MathWorks released MATLAB MCP...

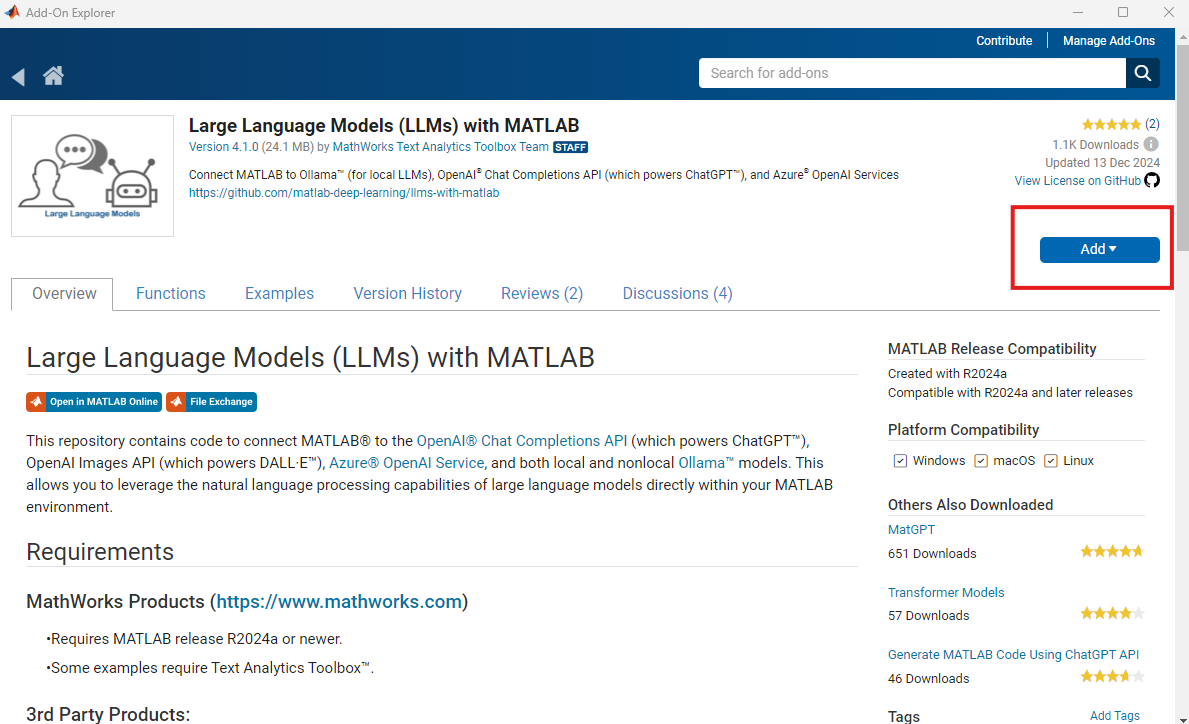

When experimenting with AI, I often like to use local large language models (LLMs), i.e., models that I run on my own hardware. I've written about how to do this with MATLAB a couple of times over the...

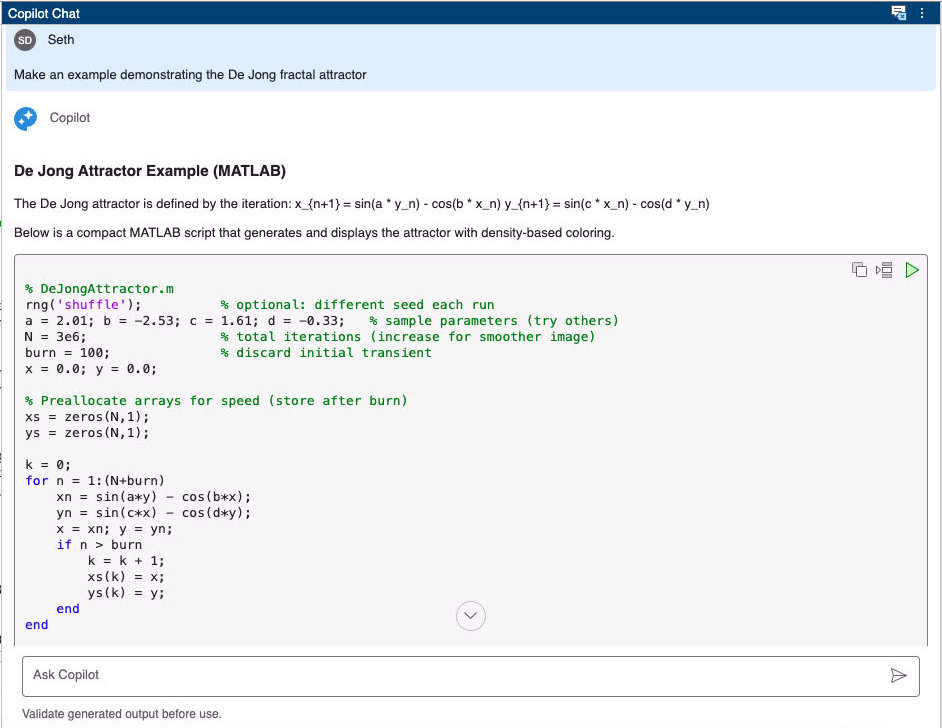

Bio: This blog post is co-authored by Seth DeLand, product manager for MATLAB Copilot. Seth has held various product management roles at MathWorks over the last 14 years, including products for...

Over at the Artificial Intelligence blog, my colleagues Yann Debray and Akshay Paul have announced the release of the MATLAB MCP Core Server, MathWorks' first MCP Server. Introduced last November by...

At their heart, LLMs are just text generation machines that predict the next word based on patterns learned from huge amounts of data. If you want them to do something more than this, such as perform...

Here at MathWorks, we are all very proud of the quality of our documentation. We put a huge amount of effort into it, and it clearly shows! Most of our users rate MATLAB's documentation very highly...