Quantization issues when testing image processing code

Today I have for you an insider's view of a subtle aspect of testing image processing software (such as the Image Processing Toolbox!).

I've written several times in this blog about testing software. Years ago I wrote about the testing framework I put on the File Exchange, and more recently (12-Mar-2013) I've promoted the new testing framework added to MATLAB a few releases ago.

In an article I wrote for the IEEE/AIP magazine Computing in Science and Engineering a few years ago, I described the difficulties of comparing floating-point values when writing tests. Because floating-point arithmetic operations are subject to round-off error and other numerical issues, you generally have to use a tolerance when checking an output value for correctness. Sometimes it might be appropriate to use an absolute tolerance:

$$|a - b| \leq T$$

And sometimes it might be more appropriate to use a relative tolerance:

$$ \frac{|a - b|}{\max(|a|,|b|)} \leq T $$
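As a minimal sketch of these two checks (written in Python rather than MATLAB, with hypothetical function names and tolerance values picked only for illustration), the comparisons might look like this:

```python
def within_abs_tol(a, b, tol):
    """Absolute tolerance check: |a - b| <= tol."""
    return abs(a - b) <= tol


def within_rel_tol(a, b, tol):
    """Relative tolerance check: |a - b| / max(|a|, |b|) <= tol."""
    scale = max(abs(a), abs(b))
    if scale == 0.0:
        # Both values are zero, so they are equal; avoid dividing by zero.
        return True
    return abs(a - b) / scale <= tol


# 0.1 + 0.2 is not exactly 0.3 in double precision, so an exact comparison
# fails while a tolerance-based comparison passes.
print("exact:", 0.1 + 0.2 == 0.3)
print("absolute:", within_abs_tol(0.1 + 0.2, 0.3, 1e-12))

# For large magnitudes the same absolute tolerance is far too strict,
# while the relative tolerance still passes.
big_a = 1e20
big_b = 1e20 * (1 + 1e-15)
print("absolute (large values):", within_abs_tol(big_a, big_b, 1e-12))
print("relative (large values):", within_rel_tol(big_a, big_b, 1e-12))
```

Which form is appropriate depends on the scale of the values being compared; the zero-scale branch is one possible convention, not the only one.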

But for testing image processing code, this isn't the whole story, as I was reminded a year ago by a question from Greg Wilson. Greg, the force behind Software Carpentry, was asked by a scientist in a class about how to test image processing code, such as a "simple" edge detector. Greg and I had an email conversation about this, which Greg then summarized on the Software Carpentry blog.

This was my initial response:

Whenever there is a floating-point computation that is then quantized to produce an output image, comparing actual versus expected can be tricky. I had to learn to deal with this early in my MathWorks software developer days. Two common scenarios in which this occurs:

- Rounding a floating-point computation to produce an integer-valued output image

- Thresholding a floating-point computation to produce a binary image (such as many edge detection methods)
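Both scenarios can be reproduced with a few lines of ordinary double-precision arithmetic. The sketch below is only an illustration in Python, not image processing code: the helper `round_half_up` and the specific numbers are assumptions chosen so that the same mathematical value is computed in two different summation orders.

```python
import math


def round_half_up(x):
    """Round a non-negative float to the nearest integer, ties going up."""
    lower = math.floor(x)
    return lower + 1 if x - lower >= 0.5 else lower


# The same three terms summed in two orders. Mathematically both sums are 0.6,
# but in double precision they differ in the last bit.
sum_left = (0.1 + 0.2) + 0.3
sum_right = 0.1 + (0.2 + 0.3)

# Thresholding: a value that should sit exactly on the threshold lands on
# different sides of it depending on the summation order.
threshold = 0.6
binary_left = sum_left > threshold
binary_right = sum_right > threshold

# Rounding: 3.0 - 2.5 * 0.6 should be exactly 1.5, which rounds up to 2.
# One of the two sums lands a tiny bit below 1.5 and rounds down instead.
rounded_left = round_half_up(3.0 - 2.5 * sum_left)
rounded_right = round_half_up(3.0 - 2.5 * sum_right)

print("sums equal:", sum_left == sum_right)
print("thresholded:", binary_left, binary_right)
print("rounded:", rounded_left, rounded_right)
```

The two orderings stand in for anything that changes the order of floating-point operations, such as a different compiler or a different split of work across threads.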

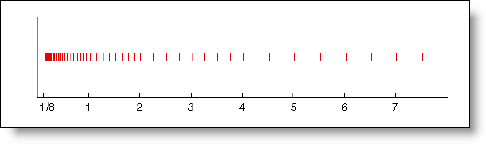

The problem is that floating-point round-off differences can turn a value that should be exactly "int + 0.5", or exactly equal to the threshold, into a value that's a tiny bit below. For testing, this means that the actual and expected images are exactly the same...except for a small number of pixels that are off by one. In a situation like this, the actual image can change because you changed the compiler's optimization flags, used a different compiler, used a different processor, used a multithreaded algorithm with dynamic allocation of work to the different threads, etc. So to compare actual against expected, I wrote a test assertion function that passes if the actual is the same as the expected except for a small percentage of pixels that are allowed to be different by 1.
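An assertion of that kind could be sketched as follows. This is not the original MATLAB function; it is a hypothetical Python version that assumes images are stored as lists of rows of integer (or boolean) pixel values, and the name `assert_images_nearly_equal` and the default `max_fraction` are illustrative choices.

```python
def assert_images_nearly_equal(actual, expected, max_fraction=0.01):
    """Pass if the images match except for a small fraction of off-by-one pixels.

    Any pixel that differs by more than 1 fails immediately. Otherwise the
    check fails only if the fraction of off-by-one pixels exceeds max_fraction.
    """
    same_shape = len(actual) == len(expected) and all(
        len(row_a) == len(row_e) for row_a, row_e in zip(actual, expected)
    )
    if not same_shape:
        raise AssertionError("images have different sizes")

    total = 0
    off_by_one = 0
    for r, (row_a, row_e) in enumerate(zip(actual, expected)):
        for c, (a, e) in enumerate(zip(row_a, row_e)):
            total += 1
            diff = abs(int(a) - int(e))
            if diff > 1:
                raise AssertionError(f"pixel ({r}, {c}) differs by {diff}")
            off_by_one += diff  # diff is 0 or 1 at this point

    if total and off_by_one / total > max_fraction:
        raise AssertionError(
            f"{off_by_one} of {total} pixels are off by one "
            f"(allowed fraction: {max_fraction})"
        )


# Usage: a 10-by-10 image with a single off-by-one pixel (1% of the pixels).
expected = [[100] * 10 for _ in range(10)]
actual = [row[:] for row in expected]
actual[3][4] = 101
assert_images_nearly_equal(actual, expected, max_fraction=0.01)
print("images match within tolerance")
```

Failing outright on any difference larger than 1 keeps the check from masking a genuine bug as a quantization effect.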

Greg immediately asked the obvious follow-up question: What is a "small percentage"?

There isn't a general rule. With filtering, for example, some choices of filter coefficients could lead to a lot of "int + 0.5" values; other coefficients might result in few or none. I start with either an exact equality test or a floating-point tolerance test, depending on the computation. If there are some off-by-one values, I spot-check them to verify whether they are caused by a floating-point round-off plus quantization issue. If it all looks good, then I set the tolerance based on what's happening in that particular test case and move on. If you tied me down and forced me to pick a typical number, I'd say 1%.
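The spot-check step can be partly automated when the unquantized floating-point values are still available. The sketch below assumes exactly that, and also assumes the quantization is rounding to the nearest integer. The function name `off_by_one_report` and the closeness threshold `eps` are hypothetical choices for illustration, not part of any MATLAB tool.

```python
import math


def off_by_one_report(unquantized, actual, expected, eps=1e-9):
    """Summarize mismatched pixels to help choose a per-test tolerance.

    A mismatch counts as "explained" if it is off by exactly one and the
    unquantized value lies within eps of an "int + 0.5" rounding tie.
    """
    total = 0
    explained = []
    unexplained = []
    for r, rows in enumerate(zip(unquantized, actual, expected)):
        for c, (u, a, e) in enumerate(zip(*rows)):
            total += 1
            if a == e:
                continue
            # Distance from the fractional part of u to the 0.5 tie point.
            dist_to_tie = abs((u - math.floor(u)) - 0.5)
            if abs(a - e) == 1 and dist_to_tie <= eps:
                explained.append((r, c))
            else:
                unexplained.append((r, c))
    mismatches = len(explained) + len(unexplained)
    return {
        "fraction_mismatched": mismatches / total if total else 0.0,
        "explained": explained,
        "unexplained": unexplained,
    }


# Usage: one pixel should have been exactly 1.5 but came out one bit lower.
unquantized = [[1.5 - 2.0 ** -52, 2.25], [3.0, 4.75]]
actual = [[1, 2], [3, 5]]
expected = [[2, 2], [3, 5]]
report = off_by_one_report(unquantized, actual, expected)
print(report)
```

If the `unexplained` list is empty, the observed `fraction_mismatched` is a reasonable starting point for that test's tolerance; anything in `unexplained` deserves a closer look before loosening the test.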

PS. Greg gets some credit for indirectly influencing the testing tools in MATLAB. He's the one who prodded me to turn my toy testing project into something slightly more than a toy and make it available to MATLAB users. The existence and popularity of that File Exchange contribution then had a positive influence on the decision of the MathWorks Test Tools Team to create a full-blown testing framework for MATLAB. Thanks, Greg!
