Create a Simple DAG Network


Today I want to show the basic tools needed to build your own DAG (directed acyclic graph) network for deep learning. I'm going to build this network and train it on our digits dataset.

As the first step, I'll create the main branch, which follows the left path shown above. The layerGraph function saves a bunch of connection steps: it creates a layer graph from a simple array of layers, connecting each layer to the next one in order.

layers = [
    imageInputLayer([28 28 1],'Name','input')
    convolution2dLayer(5,16,'Padding','same','Name','conv_1')
    batchNormalizationLayer('Name','BN_1')
    reluLayer('Name','relu_1')
    convolution2dLayer(3,32,'Padding','same','Stride',2,'Name','conv_2')
    batchNormalizationLayer('Name','BN_2')
    reluLayer('Name','relu_2')
    convolution2dLayer(3,32,'Padding','same','Name','conv_3')
    batchNormalizationLayer('Name','BN_3')
    reluLayer('Name','relu_3')
    additionLayer(2,'Name','add')
    averagePooling2dLayer(2,'Stride',2,'Name','avpool')
    fullyConnectedLayer(10,'Name','fc')
    softmaxLayer('Name','softmax')
    classificationLayer('Name','classOutput')];

Connect the layers using layerGraph.

lgraph = layerGraph(layers)

lgraph =
  LayerGraph with properties:
         Layers: [15×1 nnet.cnn.layer.Layer]
    Connections: [14×2 table]
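To see what layerGraph is saving, here is a rough sketch of the manual route for a short three-layer chain, using addLayers and connectLayers. The layer names 'in', 'conv', and 'relu' are placeholders I made up for illustration, and starting from an empty layerGraph is just one way to do this by hand.

```matlab
% Manual construction of a three-layer chain (requires Deep Learning Toolbox).
% The layer names are hypothetical and used only for this sketch.
lg = layerGraph();   % start from an empty layer graph
lg = addLayers(lg, imageInputLayer([28 28 1],'Name','in'));
lg = addLayers(lg, convolution2dLayer(5,16,'Padding','same','Name','conv'));
lg = addLayers(lg, reluLayer('Name','relu'));

% Each link in the chain needs its own connectLayers call.
lg = connectLayers(lg,'in','conv');
lg = connectLayers(lg,'conv','relu');

disp(lg.Connections)   % one row per connection
```

A chain of N layers needs N-1 connections, which is why the 15-layer array above produces a 14-row Connections table.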

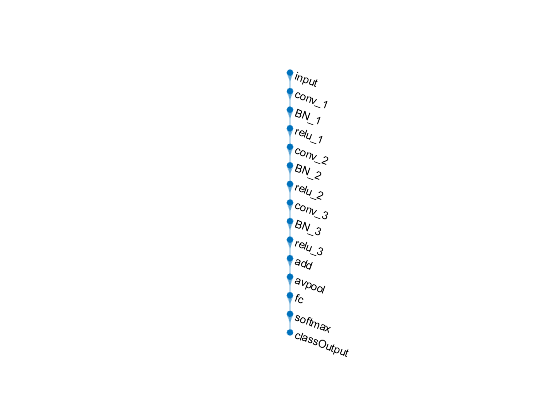

The plot function knows how to visualize a DAG network.

plot(lgraph)

axis off

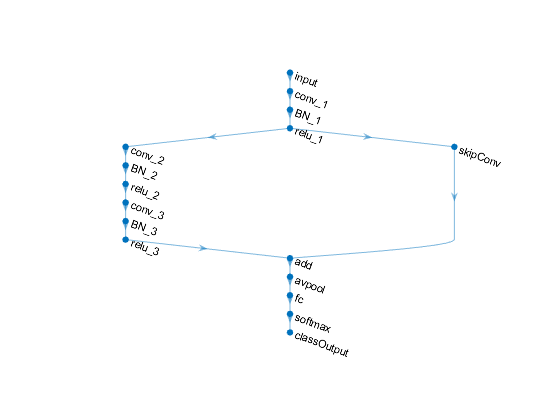

Next, I need to create the single layer that will be on the other branch. It is a 1-by-1 convolutional layer. Its parameters (32 filters and a stride of 2) are chosen so that its output has the same size as the activations of the 'relu_3' layer, which is what the addition layer requires. Once the layer is created, addLayers adds it to lgraph.

skip_conv_layer = convolution2dLayer(1,32,'Stride',2,'Name','skipConv');
lgraph = addLayers(lgraph,skip_conv_layer);
plot(lgraph)
axis off

You can see the new layer there, but it's looking a little lonely off to the side. I need to connect it to the other layers using connectLayers.

lgraph = connectLayers(lgraph,'relu_1','skipConv');
lgraph = connectLayers(lgraph,'skipConv','add/in2');
plot(lgraph);
axis off
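The addition only works if both branches arrive at 'add' with the same activation size, so it is worth checking the arithmetic. This is a back-of-the-envelope sketch that needs no toolbox. It assumes the usual convolution size rules: with 'Padding','same' the spatial size is ceil(inputSize/stride), and with no padding (which I am assuming is the default used by skipConv) it is floor((inputSize - filterSize)/stride) + 1. Batch normalization and ReLU layers do not change the size.

```matlab
% Spatial size along each branch, starting from the 28-by-28 input.
inputSize = 28;

% Main branch: all three convolutions use 'Padding','same'.
conv1Size = ceil(inputSize/1);   % conv_1, stride 1
conv2Size = ceil(conv1Size/2);   % conv_2, stride 2
conv3Size = ceil(conv2Size/1);   % conv_3, stride 1

% Skip branch: 1-by-1 filter, stride 2, no padding (assumed default),
% applied to the output of relu_1, which has the same size as conv_1.
filterSize = 1;
skipSize = floor((conv1Size - filterSize)/2) + 1;

% Channel count equals the number of filters in the last convolution.
mainBranch = [conv3Size conv3Size 32]
skipBranch = [skipSize skipSize 32]

assert(isequal(mainBranch, skipBranch), 'Branch sizes do not match')
```

Both branches come out at 14-by-14-by-32, so the two inputs to the addition layer line up.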

Now that I've constructed the network, here are the steps for training it using our digits dataset.

[trainImages,trainLabels] = digitTrain4DArrayData;
[valImages,valLabels] = digitTest4DArrayData;

Specify training options and train the network.

options = trainingOptions('sgdm',...
    'MaxEpochs',6,...
    'Shuffle','every-epoch',...
    'ValidationData',{valImages,valLabels},...
    'ValidationFrequency',20,...
    'VerboseFrequency',20);
net = trainNetwork(trainImages,trainLabels,lgraph,options)

Training on single GPU.
Initializing image normalization.
|=======================================================================================================================|
|     Epoch    |   Iteration  | Time Elapsed |  Mini-batch  |  Validation  |  Mini-batch  |  Validation  | Base Learning|
|              |              |  (seconds)   |     Loss     |     Loss     |   Accuracy   |   Accuracy   |     Rate     |
|=======================================================================================================================|
|            1 |            1 |         0.03 |       2.3317 |       2.2729 |       11.72% |       14.38% |       0.0100 |
|            1 |           20 |         0.81 |       0.9775 |       0.9119 |       71.88% |       72.24% |       0.0100 |
|            2 |           40 |         1.61 |       0.3783 |       0.4352 |       92.19% |       88.86% |       0.0100 |
|            2 |           60 |         2.38 |       0.3752 |       0.3111 |       89.06% |       92.06% |       0.0100 |
|            3 |           80 |         3.21 |       0.1935 |       0.1945 |       96.88% |       96.18% |       0.0100 |
|            3 |          100 |         4.04 |       0.1777 |       0.1454 |       97.66% |       97.32% |       0.0100 |
|            4 |          120 |         4.89 |       0.0662 |       0.0956 |      100.00% |       98.50% |       0.0100 |
|            4 |          140 |         5.77 |       0.0764 |       0.0694 |       99.22% |       99.30% |       0.0100 |
|            5 |          160 |         6.58 |       0.0466 |       0.0540 |      100.00% |       99.52% |       0.0100 |
|            5 |          180 |         7.36 |       0.0459 |       0.0459 |       99.22% |       99.60% |       0.0100 |
|            6 |          200 |         8.12 |       0.0276 |       0.0390 |      100.00% |       99.56% |       0.0100 |
|            6 |          220 |         9.01 |       0.0242 |       0.0354 |      100.00% |       99.62% |       0.0100 |
|            6 |          234 |         9.84 |       0.0160 |              |      100.00% |              |       0.0100 |
|=======================================================================================================================|

net =
  DAGNetwork with properties:
         Layers: [16×1 nnet.cnn.layer.Layer]
    Connections: [16×2 table]
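As a side note, the iteration counts in the training log can be reproduced by hand. The sketch below rests on two assumptions of mine that are not stated above: that the digits training set holds 5000 images, and that trainingOptions uses a mini-batch size of 128 when none is specified. It also assumes that a final partial mini-batch in each epoch is dropped.

```matlab
% Reproduce the iteration count seen in the training log.
numTrainImages = 5000;   % assumption: size of the digits training set
miniBatchSize  = 128;    % assumption: default mini-batch size
maxEpochs      = 6;      % from the trainingOptions call above

% Assumes an incomplete final mini-batch is discarded each epoch.
iterationsPerEpoch = floor(numTrainImages/miniBatchSize)
totalIterations    = iterationsPerEpoch*maxEpochs

% Epoch that a given iteration belongs to, e.g. iteration 40.
epochOfIteration40 = ceil(40/iterationsPerEpoch)
```

Under those assumptions there are 39 iterations per epoch and 234 in total, which agrees with the last row of the log, and iteration 40 lands in epoch 2 as the log shows.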

The output of trainNetwork is a DAGNetwork object. The Connections property records how the individual layers are connected to each other.

net.Connections

ans =
  16×2 table
      Source       Destination
    __________    _____________
    'input'       'conv_1'
    'conv_1'      'BN_1'
    'BN_1'        'relu_1'
    'relu_1'      'conv_2'
    'relu_1'      'skipConv'
    'conv_2'      'BN_2'
    'BN_2'        'relu_2'
    'relu_2'      'conv_3'
    'conv_3'      'BN_3'
    'BN_3'        'relu_3'
    'relu_3'      'add/in1'
    'add'         'avpool'
    'avpool'      'fc'
    'fc'          'softmax'
    'softmax'     'classOutput'
    'skipConv'    'add/in2'
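The 'add/in1' and 'add/in2' entries use a layerName/portName form to say which input of the addition layer a connection goes to. Here is a small sketch that shows where those port names come from. The layer names 'in' and 'relu' are placeholders of mine, and I am assuming a release in which additionLayer exposes an InputNames property.

```matlab
% Requires Deep Learning Toolbox. Layer names are hypothetical.
add = additionLayer(2,'Name','add');
disp(add.InputNames)   % names of the two input ports

demoLayers = [
    imageInputLayer([28 28 1],'Name','in')
    reluLayer('Name','relu')
    add];

% layerGraph connects the array in order, so 'relu' feeds the first port.
demoGraph = layerGraph(demoLayers);

% The second port has to be connected explicitly, addressed by name.
demoGraph = connectLayers(demoGraph,'in','add/in2');
disp(demoGraph.Connections)
```

This mirrors the main example: the sequential connection fills the first input, and the skip branch is wired to the second one by name.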

Classify the validation images and calculate the accuracy.

predictedLabels = classify(net,valImages);
accuracy = mean(predictedLabels == valLabels)

accuracy =
    0.9974
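The accuracy line works because comparing two categorical arrays gives a logical array, and the mean of a logical array is the fraction of true entries. Here is a toy sketch with made-up labels (not the digits data) that also breaks the accuracy down per class, which can be handy when one digit is harder than the others.

```matlab
% Toy labels, invented for illustration only.
trueLabels = categorical([0 0 1 1 2 2]');
predLabels = categorical([0 1 1 1 2 0]');

% Element-wise comparison yields a logical array; its mean is the accuracy.
isCorrect = predLabels == trueLabels;
accuracy = mean(isCorrect)

% Per-class accuracy: fraction correct among the samples of each true class.
classNames = categories(trueLabels);
perClass = zeros(numel(classNames),1);
for k = 1:numel(classNames)
    inClass = trueLabels == classNames{k};
    perClass(k) = mean(isCorrect(inClass));
end
perClass
```

With the real data, valLabels and predictedLabels would take the place of the toy arrays.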

Those are the basics. Create layers using the various layer functions and join them up using layerGraph and connectLayers. Then you can train and use the network in the same way you would train and use other networks.

