Defining Your Own Network Layer (Revisited)

Today I want to follow up on my previous post, Defining Your Own Network Layer. There were two reader comments that caught my attention.

The first comment, from Eric Shields, points out a key conclusion from the Clevert, Unterthiner, and Hichreiter paper that I overlooked. I initially focused just on the definition of the exponential linear unit function, but Eric pointed out that the authors concluded that batch normalization, which I used in my simple network, might not be needed when using an ELU layer.

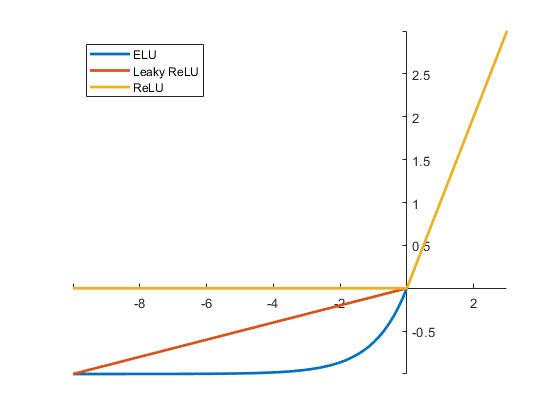

Here's a reminder (from the previous post) about what the ELU curve looks like.

And here's the simple network that used last time.

layers = [ ...

imageInputLayer([28 28 1])

convolution2dLayer(5,20)

batchNormalizationLayer

eluLayer(20)

fullyConnectedLayer(10)

softmaxLayer

classificationLayer];

I used the sample digits training set.

[XTrain, YTrain] = digitTrain4DArrayData; imshow(XTrain(:,:,:,1010),'InitialMagnification','fit') YTrain(1010)

ans =

categorical

2

Now I'll train the network again, using the same options as last time.

options = trainingOptions('sgdm');

net = trainNetwork(XTrain,YTrain,layers,options);

Training on single GPU. Initializing image normalization. |=========================================================================================| | Epoch | Iteration | Time Elapsed | Mini-batch | Mini-batch | Base Learning| | | | (seconds) | Loss | Accuracy | Rate | |=========================================================================================| | 1 | 1 | 0.01 | 2.5899 | 10.16% | 0.0100 | | 2 | 50 | 0.61 | 0.4156 | 85.94% | 0.0100 | | 3 | 100 | 1.20 | 0.1340 | 96.88% | 0.0100 | | 4 | 150 | 1.80 | 0.0847 | 98.44% | 0.0100 | | 6 | 200 | 2.41 | 0.0454 | 100.00% | 0.0100 | | 7 | 250 | 3.01 | 0.0253 | 100.00% | 0.0100 | | 8 | 300 | 3.60 | 0.0219 | 100.00% | 0.0100 | | 9 | 350 | 4.19 | 0.0141 | 100.00% | 0.0100 | | 11 | 400 | 4.85 | 0.0128 | 100.00% | 0.0100 | | 12 | 450 | 5.46 | 0.0126 | 100.00% | 0.0100 | | 13 | 500 | 6.05 | 0.0099 | 100.00% | 0.0100 | | 15 | 550 | 6.66 | 0.0079 | 100.00% | 0.0100 | | 16 | 600 | 7.27 | 0.0084 | 100.00% | 0.0100 | | 17 | 650 | 7.86 | 0.0075 | 100.00% | 0.0100 | | 18 | 700 | 8.45 | 0.0081 | 100.00% | 0.0100 | | 20 | 750 | 9.05 | 0.0066 | 100.00% | 0.0100 | | 21 | 800 | 9.64 | 0.0057 | 100.00% | 0.0100 | | 22 | 850 | 10.24 | 0.0054 | 100.00% | 0.0100 | | 24 | 900 | 10.83 | 0.0049 | 100.00% | 0.0100 | | 25 | 950 | 11.44 | 0.0055 | 100.00% | 0.0100 | | 26 | 1000 | 12.04 | 0.0046 | 100.00% | 0.0100 | | 27 | 1050 | 12.66 | 0.0041 | 100.00% | 0.0100 | | 29 | 1100 | 13.25 | 0.0044 | 100.00% | 0.0100 | | 30 | 1150 | 13.84 | 0.0038 | 100.00% | 0.0100 | | 30 | 1170 | 14.08 | 0.0042 | 100.00% | 0.0100 | |=========================================================================================|

Note that the training took about 14.1 seconds.

Check the accuracy of the trained network.

[XTest, YTest] = digitTest4DArrayData; YPred = classify(net, XTest); accuracy = sum(YTest==YPred)/numel(YTest)

accuracy =

0.9878

Now let's make another network without the batch normalization layer.

layers2 = [ ...

imageInputLayer([28 28 1])

convolution2dLayer(5,20)

eluLayer(20)

fullyConnectedLayer(10)

softmaxLayer

classificationLayer];

Train it up again.

net2 = trainNetwork(XTrain,YTrain,layers2,options);

Training on single GPU. Initializing image normalization. |=========================================================================================| | Epoch | Iteration | Time Elapsed | Mini-batch | Mini-batch | Base Learning| | | | (seconds) | Loss | Accuracy | Rate | |=========================================================================================| | 1 | 1 | 0.01 | 2.3022 | 7.81% | 0.0100 | | 2 | 50 | 0.52 | 1.6631 | 51.56% | 0.0100 | | 3 | 100 | 1.04 | 1.4368 | 52.34% | 0.0100 | | 4 | 150 | 1.58 | 1.0426 | 61.72% | 0.0100 | | 6 | 200 | 2.12 | 0.8223 | 72.66% | 0.0100 | | 7 | 250 | 2.67 | 0.6842 | 80.47% | 0.0100 | | 8 | 300 | 3.21 | 0.6461 | 78.13% | 0.0100 | | 9 | 350 | 3.79 | 0.4181 | 85.94% | 0.0100 | | 11 | 400 | 4.33 | 0.4163 | 86.72% | 0.0100 | | 12 | 450 | 4.88 | 0.2115 | 96.09% | 0.0100 | | 13 | 500 | 5.42 | 0.1817 | 97.66% | 0.0100 | | 15 | 550 | 5.96 | 0.1809 | 96.09% | 0.0100 | | 16 | 600 | 6.53 | 0.1001 | 100.00% | 0.0100 | | 17 | 650 | 7.07 | 0.0899 | 100.00% | 0.0100 | | 18 | 700 | 7.61 | 0.0934 | 99.22% | 0.0100 | | 20 | 750 | 8.14 | 0.0739 | 99.22% | 0.0100 | | 21 | 800 | 8.68 | 0.0617 | 100.00% | 0.0100 | | 22 | 850 | 9.22 | 0.0462 | 100.00% | 0.0100 | | 24 | 900 | 9.76 | 0.0641 | 100.00% | 0.0100 | | 25 | 950 | 10.29 | 0.0332 | 100.00% | 0.0100 | | 26 | 1000 | 10.86 | 0.0317 | 100.00% | 0.0100 | | 27 | 1050 | 11.41 | 0.0378 | 99.22% | 0.0100 | | 29 | 1100 | 11.96 | 0.0235 | 100.00% | 0.0100 | | 30 | 1150 | 12.51 | 0.0280 | 100.00% | 0.0100 | | 30 | 1170 | 12.73 | 0.0307 | 100.00% | 0.0100 | |=========================================================================================|

That took about 12.7 seconds to train, about a 10% reduction. Check the accuracy.

[XTest, YTest] = digitTest4DArrayData; YPred = classify(net2, XTest); accuracy = sum(YTest==YPred)/numel(YTest)

accuracy =

0.9808

Eric said he got the same accuracy, whereas I am seeing a slightly lower accuracy. But I haven't really explored this further, and I so I wouldn't draw any conclusions. I just wanted to take the opportunity to go back and mention one of the important points of the paper that I overlooked last time.

A second reader, another Eric, wanted to know if alpha could be specified as a learnable or non learnable parameter at run time.

The answer: Yes, but not without defining a second class. Recall this portion of the template for defining a layer with learnable properties:

properties (Learnable)

% (Optional) Layer learnable parameters

% Layer learnable parameters go here

end

That Learnable attribute of the properties block is a fixed part of the class definition. It can't be changed dynamically. So, you need to define a second class. I'll call mine eluLayerFixedAlpha. Here's the properties block:

properties

alpha

end

And here's a constructor that takes alpha as an input argument.

methods

function layer = eluLayerFixedAlpha(alpha,name)

layer.Type = 'Exponential Linear Unit';

layer.alpha = alpha;

% Assign layer name if it is passed in.

if nargin > 1

layer.Name = name;

end

% Give the layer a meaningful description.

layer.Description = "Exponential linear unit with alpha: " + ...

alpha;

end

I also modifed the backward method to remove the computation and output argument associated with the derivative of the loss function with respect to alpha.

function dLdX = backward(layer, X, Z, dLdZ, ~)

% Backward propagate the derivative of the loss function through

% the layer

%

% Inputs:

% layer - Layer to backward propagate through

% X - Input data

% Z - Output of layer forward function

% dLdZ - Gradient propagated from the deeper layer

% memory - Memory value which can be used in

% backward propagation [unused]

% Output:

% dLdX - Derivative of the loss with

% respect to the input data

dLdX = dLdZ .* ((X > 0) + ...

((layer.alpha + Z) .* (X <= 0)));

end

Let's try it. I'm just going to make up a value for alpha.

alpha = 1.0;

layers3 = [ ...

imageInputLayer([28 28 1])

convolution2dLayer(5,20)

eluLayerFixedAlpha(alpha)

fullyConnectedLayer(10)

softmaxLayer

classificationLayer];

net3 = trainNetwork(XTrain,YTrain,layers3,options);

YPred = classify(net3, XTest);

accuracy = sum(YTest==YPred)/numel(YTest)

Training on single GPU.

Initializing image normalization.

|=========================================================================================|

| Epoch | Iteration | Time Elapsed | Mini-batch | Mini-batch | Base Learning|

| | | (seconds) | Loss | Accuracy | Rate |

|=========================================================================================|

| 1 | 1 | 0.01 | 2.3005 | 14.06% | 0.0100 |

| 2 | 50 | 0.48 | 1.4979 | 53.13% | 0.0100 |

| 3 | 100 | 0.97 | 1.2162 | 57.81% | 0.0100 |

| 4 | 150 | 1.48 | 1.1427 | 67.97% | 0.0100 |

| 6 | 200 | 1.99 | 0.9837 | 67.19% | 0.0100 |

| 7 | 250 | 2.50 | 0.8110 | 70.31% | 0.0100 |

| 8 | 300 | 3.04 | 0.7347 | 75.00% | 0.0100 |

| 9 | 350 | 3.55 | 0.5937 | 81.25% | 0.0100 |

| 11 | 400 | 4.05 | 0.5686 | 78.13% | 0.0100 |

| 12 | 450 | 4.56 | 0.4678 | 85.94% | 0.0100 |

| 13 | 500 | 5.06 | 0.3461 | 88.28% | 0.0100 |

| 15 | 550 | 5.57 | 0.3515 | 87.50% | 0.0100 |

| 16 | 600 | 6.07 | 0.2582 | 92.97% | 0.0100 |

| 17 | 650 | 6.58 | 0.2216 | 92.97% | 0.0100 |

| 18 | 700 | 7.08 | 0.1705 | 96.09% | 0.0100 |

| 20 | 750 | 7.59 | 0.1212 | 98.44% | 0.0100 |

| 21 | 800 | 8.09 | 0.0925 | 98.44% | 0.0100 |

| 22 | 850 | 8.59 | 0.1045 | 97.66% | 0.0100 |

| 24 | 900 | 9.10 | 0.1289 | 96.09% | 0.0100 |

| 25 | 950 | 9.60 | 0.0710 | 99.22% | 0.0100 |

| 26 | 1000 | 10.10 | 0.0722 | 99.22% | 0.0100 |

| 27 | 1050 | 10.60 | 0.0600 | 99.22% | 0.0100 |

| 29 | 1100 | 11.10 | 0.0688 | 99.22% | 0.0100 |

| 30 | 1150 | 11.61 | 0.0519 | 100.00% | 0.0100 |

| 30 | 1170 | 11.82 | 0.0649 | 99.22% | 0.0100 |

|=========================================================================================|

accuracy =

0.9702

Thanks for your comments and questions, Eric and Eric.

- Category:

- Deep Learning

Comments

To leave a comment, please click here to sign in to your MathWorks Account or create a new one.