Automating Visual Inspection with AI and PLC

The following post is from Sagar Hukkire, application engineer for AI, and Conrado Ramirez Garcia, application engineer for design automation and code generation.

Visual inspection is the image-based inspection of parts, in which a camera scans the part under test for failures and quality defects. In industrial settings, it is used to automatically check whether manufactured products meet their quality requirements. Artificial intelligence (AI) is increasingly becoming an integral component of automated visual inspection.

In the manufacturing industry, automating visual inspection requires integrating AI-based visual inspection capabilities into a system of PLCs (Programmable Logic Controllers), which control the production line. Operators should also be able to run the visual inspection system easily through a GUI (graphical user interface), without any programming. In this blog post, we'll show you how we implemented a visual inspection system with AI and a PLC, and the interactive app we created for operating the system.

We designed the visual inspection system by combining MATLAB, Simulink, and Beckhoff® tools to integrate the various software components (including AI algorithms and embedded control software for a servomotor). In the following video, you can see the visual inspection system at work, automatically classifying hex nuts as good or defective.

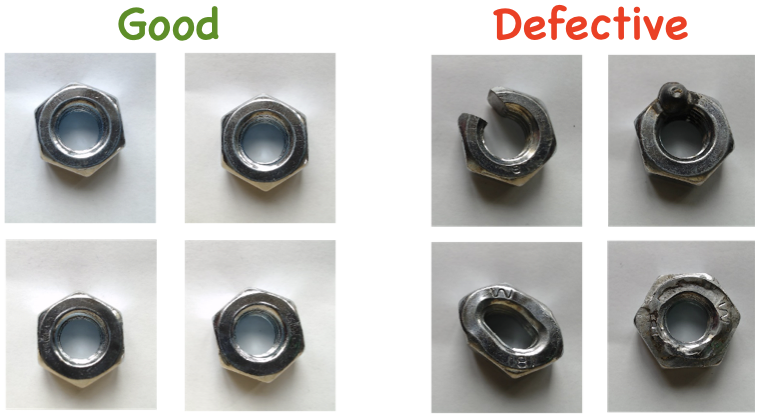

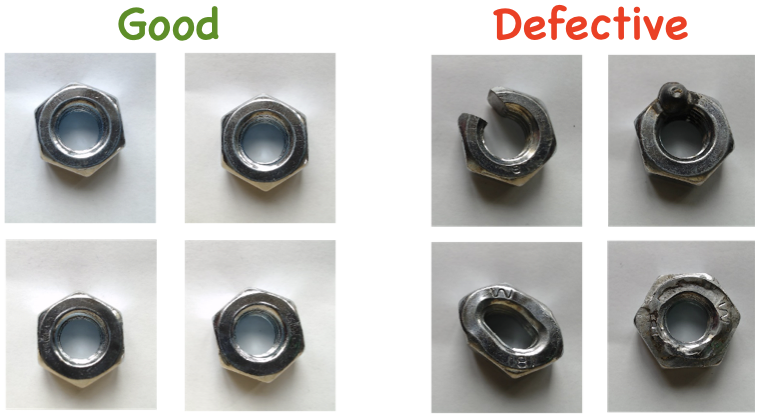

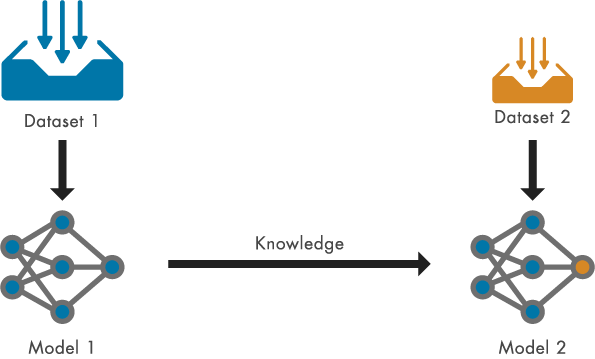

Why AI for Visual Inspection?

By using deep learning and computer vision techniques, visual inspection can be automated to detect manufacturing flaws in many industries, such as biotech, automotive, and semiconductors. For example, visual inspection can detect flaws in semiconductor wafers and pills. For specific examples of various applications, see Examples - Automated Visual Inspection. One visual inspection application is the classification of hex nuts as good or defective. This is the application on which we benchmarked our visual inspection system.

Figure: Classifying hex nuts as good or defective

If you ‘visually inspect’ the image above, you can probably easily distinguish between the good and defective hex nuts. The task seems easy enough. But without the automation that AI brings, how many hex nuts can you classify?

Animated Figure: Can you find the defective hex nut?

In image classification tasks, deep learning models can achieve state-of-the-art accuracy, often exceeding human-level performance, and can speed up the process of classifying a large number of images. To learn more about how and why deep learning is applied to visual inspection, check out the Automated Visual Inspection with Deep Learning ebook.

Visual Inspection System

We have built a visual inspection system that classifies hex nuts into two categories: good and bad (broken). The main system components are a development computer, an industrial computer, an industrial camera, and a servomotor. And of course, we need hex nuts to test the system.

- Development PC – It runs a MATLAB instance, using a web app, to visualize the classification results of the deep learning model along with the input picture from the industrial computer. It is a standard PC with MATLAB and the following toolboxes and support packages installed:

  - Toolboxes: Deep Learning Toolbox, MATLAB Coder, Image Processing Toolbox, Simulink, and Simulink Coder

  - Support Packages: TE1400 | TwinCAT® 3 Target for Simulink, TE1401 | TwinCAT 3 Target for MATLAB, and TE1410 | TwinCAT 3 Interface for MATLAB and Simulink

- Industrial PC – It executes real-time applications, controls the servomotor motion and fieldbus communication (ADS protocol), executes the deep learning model, and provides access to the industrial camera. The system uses the CX2043 Embedded PC from Beckhoff.

- Industrial Camera – It acquires new images of the hex nuts under test and is controlled via the industrial PC. The system uses a GigE camera with the VOS2000-1218 lens from Beckhoff.

- Servomotor – It is fully controlled by the industrial PC and has a spindle attached to it. The spindle horizontally rotates the hex nuts to the desired position, as specified by the web app.

Figure: Components of the visual inspection system

The key steps for integrating the components of the visual inspection system are:

- Designing the deep learning model and retraining it with our data.

- Deploying the trained deep learning model to a Beckhoff PLC by using library-free C/C++ code generation.

- Incorporating motion control for a spindle using Model-Based Design with Stateflow, ensuring the precise positioning needed to inspect hex nuts accurately.

- Using MATLAB App Designer to create a user-friendly GUI for interacting with a Beckhoff PLC, enabling continuous communication and monitoring.

Figure: Workflow integration for the visual inspection system

The following sections will walk you through the steps for designing and combining the components of the visual inspection system.

Designing Visual Inspection System

Designing AI Model

A key step in building an AI-powered system is designing the AI model. We used a pretrained deep learning model, available in Deep Learning Toolbox, and adapted it to our task by performing transfer learning. Here, we show only some of the steps for adapting the deep learning model; the example Retrain Neural Network to Classify New Images shows all the transfer learning steps in detail. Just remember that you need to retrain the model with your own data and adjust the training options to achieve optimal performance.

Create an image datastore, which enables you to store large collections of image data and efficiently read batches of images during network training.

```matlab
% foldername: root folder with one subfolder of images per class
imds = imageDatastore(foldername, ...
    IncludeSubfolders=true, ...
    LabelSource="foldernames");
```
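Because `LabelSource="foldernames"` takes each image's label from the name of its parent folder, the data must be organized as one subfolder per class. The following sketch builds a small synthetic dataset in a temporary folder (the folder name `hexnut_demo`, the file names, and the random placeholder images are assumptions for illustration) and uses `countEachLabel` to check how many images each class contains. Checking this before training is worthwhile, because good and defective parts can be unevenly represented.

```matlab
% Build a toy dataset with one subfolder per class (placeholder images).
root = fullfile(tempdir, 'hexnut_demo');
classNames = {'good', 'bad'};
nPerClass = 20;
for c = 1:numel(classNames)
    folder = fullfile(root, classNames{c});
    if ~isfolder(folder)
        mkdir(folder);
    end
    for k = 1:nPerClass
        img = uint8(randi(255, 32, 32, 3));   % random pixels stand in for photos
        imwrite(img, fullfile(folder, sprintf('nut_%02d.png', k)));
    end
end

% Each image is labeled with the name of the subfolder that contains it.
imds = imageDatastore(root, IncludeSubfolders=true, LabelSource="foldernames");
tbl = countEachLabel(imds);   % one row per class: Label and Count
disp(tbl)
```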

Partition the data into training, validation, and testing datasets. Use 75% of the images for training, 15% for validation, and 10% for testing.

```matlab
% The third output receives the remaining 10% of each label for testing.
[imdsTrain,imdsValidation,imdsTest] = splitEachLabel(imds,0.75,0.15,"randomized");
numClasses = numel(categories(imdsTrain.Labels));
```

Load a pretrained ResNet-18 neural network. To return a neural network ready for retraining on the new data, also specify the number of classes. When you specify the number of classes, the imagePretrainedNetwork function adapts the neural network so that it outputs prediction scores for each of the specified number of classes. To find more available pretrained networks, see Pretrained Deep Neural Networks.

```matlab
net = imagePretrainedNetwork("resnet18",NumClasses=numClasses);
inputSize = net.Layers(1).InputSize;   % [224 224 3] for ResNet-18
```

For transfer learning, you can freeze the weights of earlier layers in the network by setting the learning rates in those layers to zero. Keep the last learnable layer unfrozen. During training, the trainnet function does not update the parameters of these frozen layers. Because the function does not compute the gradients of the frozen layers, freezing the weights can significantly speed up network training. For small datasets, freezing the network layers prevents those layers from overfitting to the new dataset.
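Freezing amounts to setting the learn rate factor of each frozen parameter to zero. The post uses `freezeNetwork` for this, shown next. As a hand-written sketch of the same idea, the loop below walks the `Learnables` table of a small stand-in `dlnetwork` and calls `setLearnRateFactor` for every parameter outside the layer to keep. The toy layers and the names `fc1` and `fc2` are assumptions; with the real model, the kept layer would be the last learnable layer.

```matlab
% Toy network standing in for the pretrained model (hypothetical layer names).
layers = [
    featureInputLayer(4)
    fullyConnectedLayer(8, Name="fc1")
    reluLayer
    fullyConnectedLayer(2, Name="fc2")];
net = dlnetwork(layers);

keepLayer = "fc2";                 % last learnable layer stays trainable
learnables = net.Learnables;       % one row per learnable parameter
for i = 1:height(learnables)
    thisLayer = learnables.Layer{i};
    thisParam = learnables.Parameter{i};
    if ~strcmp(thisLayer, keepLayer)
        % A learn rate factor of 0 scales this parameter's learning rate to 0,
        % so training leaves it unchanged.
        net = setLearnRateFactor(net, thisLayer, thisParam, 0);
    end
end

disp(getLearnRateFactor(net, "fc1", "Weights"))   % frozen layer
disp(getLearnRateFactor(net, "fc2", "Weights"))   % trainable layer
```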

```matlab
% layerName: name of the last learnable layer, which stays trainable
net = freezeNetwork(net,LayerNamesToIgnore=layerName);
```

The images in the datastore can have different sizes. To automatically resize the training images, use an augmented image datastore. Data augmentation also helps prevent the network from overfitting and memorizing the exact details of the training images.

```matlab
pixelRange = [-30 30];
scaleRange = [0.9 1.1];
imageAugmenter = imageDataAugmenter( ...
    RandXReflection=true, ...
    RandRotation=[-180 180], ...
    RandXTranslation=pixelRange, ...
    RandYTranslation=pixelRange, ...
    RandXScale=scaleRange, ...
    RandYScale=scaleRange);
augimdsTrain = augmentedImageDatastore(inputSize(1:2),imdsTrain, ...
    DataAugmentation=imageAugmenter);

% Resize the validation images without augmenting them.
augimdsValidation = augmentedImageDatastore(inputSize(1:2),imdsValidation);
```
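Before training, it can help to confirm that the augmentations still produce plausible hex nut images. A minimal sketch, using a random placeholder image instead of a real photo: the `augment` method of `imageDataAugmenter` applies one random transformation from the configured set, and the result can then be displayed, for example, with `imshow`. The variable name `previewAugmenter` is illustrative.

```matlab
% Placeholder 224x224 RGB image standing in for a real hex nut photo.
img = uint8(randi(255, 224, 224, 3));

% Augmenter with a subset of the options used for training.
previewAugmenter = imageDataAugmenter( ...
    RandXReflection=true, ...
    RandRotation=[-180 180]);

% Apply one random transformation and inspect the result.
augmented = augment(previewAugmenter, img);
disp(size(augmented))
```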

Specify the training options. Choosing the right training options often requires trial and error.

```matlab
options = trainingOptions("adam", ...
    MiniBatchSize=10, ...
    ValidationData=augimdsValidation, ...
    ValidationFrequency=5, ...
    Plots="training-progress", ...
    Metrics="accuracy", ...
    Verbose=false);
```

Train the neural network using the trainnet function.

```matlab
net = trainnet(augimdsTrain,net,"crossentropy",options);
```

For visual inspection with AI, also check out the Automated Visual Inspection Library for Computer Vision Toolbox. This library offers functions for training, evaluating, and deploying anomaly detection and object detection networks.
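The 10% test split set aside earlier is meant for evaluating the trained network, a step the post does not show. For an inspection task, overall accuracy is worth reading alongside the fraction of defective parts classified as good, since those are the parts that would pass inspection. A minimal sketch with an anonymous function and toy labels; the commented lines show one way to obtain predictions with `minibatchpredict` and `scores2label`, assuming an augmented datastore named `augimdsTest` built from the test split (a hypothetical name).

```matlab
% With a trained network, predictions could be obtained, for example, with:
%   scores = minibatchpredict(net, augimdsTest);   % augimdsTest: hypothetical
%   yPred = scores2label(scores, categories(imdsTrain.Labels));

% Hypothetical metrics: overall accuracy, and the fraction of truly
% defective parts that the classifier lets through as good.
inspectionMetrics = @(yTrue, yPred, defectLabel) struct( ...
    'accuracy', mean(yTrue == yPred), ...
    'missRate', mean(yPred(yTrue == defectLabel) ~= defectLabel));

% Toy labels to show the calculation.
yTrue = categorical(["good" "bad" "bad" "good" "bad"]);
yPred = categorical(["good" "good" "bad" "good" "bad"]);
m = inspectionMetrics(yTrue, yPred, "bad");
fprintf("accuracy %.2f, missed defects %.2f\n", m.accuracy, m.missRate);
```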

Deploying AI Model

Using Deep Learning Toolbox with MATLAB Coder, you can automatically generate library-free C/C++ code for deploying a deep neural network to the target of your choice. As shown in the following video, you first define an entry-point function that makes predictions with the deep learning model, and then generate code for that function. Based on the generated C/C++ code, TwinCAT Target builds TwinCAT objects that enable data exchange between MATLAB and the TwinCAT runtime.

Video: Code generation for deep neural network
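The entry-point function itself appears only in the video. A minimal sketch of what one could look like, assuming the retrained `dlnetwork` was saved as the only variable in a MAT-file named `hexNutNet.mat`; the function name `classifyHexNut` and the file name are hypothetical. It returns the class index rather than a label string, which keeps the generated interface numeric; mapping the index to good or bad is left to the caller. The commented `codegen` command shows generic library-free MATLAB Coder settings only; the TwinCAT-specific build with TwinCAT Target is not shown.

```matlab
% classifyHexNut.m: hypothetical entry-point function (name, MAT-file name,
% and output encoding are assumptions for illustration).
% Example command for generating library-free code:
%   cfg = coder.config('lib');
%   cfg.DeepLearningConfig = coder.DeepLearningConfig(TargetLibrary='none');
%   codegen -config cfg classifyHexNut -args {ones(224,224,3,'single')} -report
function [classIdx, score] = classifyHexNut(img) %#codegen
    % Load the trained network once and keep it between calls.
    persistent net
    if isempty(net)
        net = coder.loadDeepLearningNetwork('hexNutNet.mat');
    end
    % img: 224x224x3 single image; label its dimensions for the dlnetwork.
    X = dlarray(img, 'SSCB');
    scores = extractdata(predict(net, X));
    % Highest class score and its index (class order as used in training).
    [score, classIdx] = max(scores);
end
```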

The following video shows how to integrate the compiled TwinCAT object into the visual inspection solution. The deep learning model is instantiated within TwinCAT, and TwinCAT Vision adds image processing to the platform. As a result, the object can take an input image as a 224x224x3 matrix and output the predicted label and the corresponding score.

Video: System integration of deep learning model with TwinCAT Vision
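Because the TwinCAT object expects a 224x224x3 input, camera frames of another size or with a single (monochrome) channel have to be brought to that shape first. A minimal sketch in MATLAB, assuming a 480x640 8-bit monochrome frame and single-precision network input (both hypothetical, not stated in the post) and using `imresize` from Image Processing Toolbox. In the deployed system, the equivalent step runs in TwinCAT, where TwinCAT Vision provides the image processing.

```matlab
% Placeholder monochrome camera frame (assumed 480x640, 8-bit).
frame = uint8(randi(255, 480, 640));

% Resize to the network's spatial input size.
img = imresize(frame, [224 224]);

% Replicate a single gray channel into three channels.
if size(img, 3) == 1
    img = repmat(img, 1, 1, 3);
end

% Convert to single precision to match the assumed input type.
img = single(img);
disp(size(img))
```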

Designing Motion Controller

In addition to designing and implementing the AI model, we also designed and implemented an embedded control algorithm for the servomotor. We implemented this algorithm in Stateflow and generated C code using Simulink Coder.

Video: Controller design for Beckhoff motion terminals using Simulink and Stateflow

Then, the generated code for the designed controller was integrated with the TwinCAT environment. For more information, see Beckhoff Information System.

Video: System integration of designed motion controller with TwinCAT
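The Stateflow chart appears only in the videos. To illustrate the kind of logic such a chart can encode, here is a hypothetical, simplified positioning state machine in plain MATLAB. The states (`Idle`, `Moving`, `InPosition`), the step size, and the tolerance are assumptions, not the authors' design, and the sketch moves a simulated angle instead of commanding real hardware.

```matlab
% Hypothetical positioning state machine (not the authors' Stateflow chart).
state = "Idle";
position = 0;      % simulated spindle angle, degrees
target = 90;       % target angle requested by the app (assumed)
stepSize = 10;     % maximum change per control cycle (assumed)
tolerance = 0.5;   % in-position window, degrees (assumed)

for cycle = 1:100
    switch state
        case "Idle"
            if abs(target - position) > tolerance
                state = "Moving";
            end
        case "Moving"
            posError = target - position;
            position = position + sign(posError) * min(abs(posError), stepSize);
            if abs(target - position) <= tolerance
                state = "InPosition";
            end
        case "InPosition"
            % Position reached: the camera can now acquire an image.
            % This sketch simply stops here.
            break
    end
end
fprintf("state %s at %.1f deg after %d cycles\n", state, position, cycle);
```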

App for Visual Inspection

Finally, we used MATLAB App Designer to create an interactive app for controlling the visual inspection system and for visualizing its operation and the classification results. The following video shows a demonstration of this app.

Video: Visual inspection app classifying hex nuts as good or bad

Conclusion

Deploying an AI algorithm to a Programmable Logic Controller (PLC) on industrially proven hardware, using a single integration platform such as Beckhoff TwinCAT 3, offers a robust and scalable solution for industrial AI applications. This approach simplifies the design and implementation of AI algorithms and makes them easier to integrate into existing systems. Developing the end-to-end visual inspection application with MATLAB and Simulink tools further streamlines development and supports reliable deployment of AI in industrial environments.
