Simulate PyTorch and Other Python-Based Models with Simulink Co-Execution Blocks

This blog post is from Maggie Oltarzewski, Product Marketing Engineer at MathWorks.

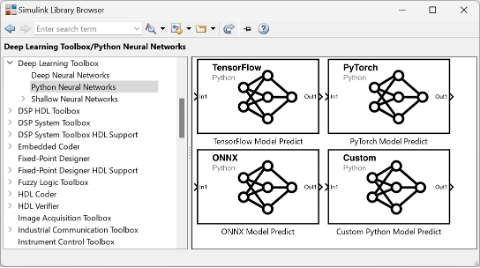

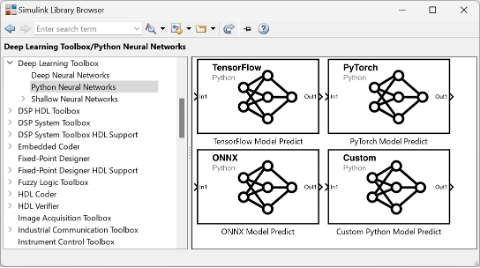

In R2024a, four new blocks for co-executing deep learning models in Simulink were added to Deep Learning Toolbox. These blocks let you simulate pretrained models from PyTorch®, TensorFlow™, and ONNX™ directly in Simulink.

Figure: The four new co-execution blocks available in Deep Learning Toolbox shown in the Simulink Library Browser

You can assess how third-party models impact the performance of a system modeled in Simulink before going through the effort to import the model into MATLAB. If you try out these blocks or want to hear more about deep learning with Simulink, leave a comment below!

To start using the co-execution blocks, you need to set up a Python environment. Make sure you have a local virtual environment for Python installed with any necessary libraries for the models you’d like to simulate (the blocks have been tested using Python version 3.10). For more information, visit this resource for configuring your system to use Python.

Why Use Co-Execution Blocks in Simulink?

Working in Simulink means that you get the benefits of Model-Based Design. Model-Based Design is the systematic use of models throughout the development process that improves how you deliver complex systems. You can reduce the risk of slowing down development by introducing Model-Based Design in stages. Start with a single project, then build on initial success with expanded model usage, simulation, automated testing, and code generation. You can use Model-Based Design with MATLAB and Simulink to shorten development cycles and reduce your development time by 50% or more.

Simulink is useful in designing complex engineered systems where AI algorithms may be incorporated, such as AI detection of pedestrians in a vehicle or a motor control system that estimates rotor position with a neural net. By systematically using Model-Based Design to build and test your system, you can find errors earlier and mitigate them when it’s less costly to do so. In the process, you develop a digital thread that connects the different aspects of your system as it evolves. If you want to learn more, check out Integrating AI into System-Level Design and AI with Model-Based Design.

In addition to the deep learning co-execution blocks, there are other methods that bring Python® functionality into Simulink. For Python code that isn’t a deep learning model, you can use Python Importer to bring Python modules or packages into your Simulink model. Going through the import process generates a Simulink custom block library containing a MATLAB® System block for each specified function. You can also convert Python-based models to MATLAB networks and simulate them in Simulink using Deep Neural Networks blocks. This process is still necessary if you want to generate code for the model, but starting with co-execution can reduce the time to initial simulation and evaluation of the deep learning model in your system.

One of the benefits of Model-Based Design is the ability to rapidly iterate and test scenarios in the safety of a simulated environment. The co-execution blocks provide added flexibility in this process: you can change the deep learning model being applied simply by modifying block parameters. Being able to pivot helps you quickly iterate on your design, rapidly test different models, and get to the stage of evaluating which model works best within your system. You can easily test a system with multiple models at once, use one block and update its properties to test different models, or use variants to define variations of the system that each simulate with different co-execution blocks.

Co-Execution Blocks in Simulink

The co-execution Simulink blocks run pretrained deep learning models from PyTorch, TensorFlow, or ONNX as part of your Simulink simulation. For each framework, a corresponding Simulink block is offered that you can configure to call your model and define the inputs and outputs.

You can also co-execute custom deep learning models that have been written in Python. The custom Python co-execution block allows you to incorporate a pretrained Python model in your simulation. If you have defined load_model() and predict() functions in a Python file, you can specify the path and appropriate arguments in the block properties. The predict() function is executed at every step throughout the simulation. This is a good alternative if you have Python code rather than a saved deep learning model in a specific format.

| Use this block… | to predict responses using… |
| --- | --- |
| TensorFlow Model Predict | TensorFlow |
| PyTorch Model Predict | PyTorch |
| ONNX Model Predict | ONNX |
| Custom Python Model Predict | Python code |
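To make the load_model() and predict() idea concrete, here is a minimal sketch of what such a Python file could look like. The argument lists, the JSON stand-in for saved weights, and the tiny linear model are all assumptions made for illustration; check the block documentation for the exact signatures the custom Python block expects.

```python
# Hypothetical model file for a custom Python block.
# The function signatures below are assumptions, not the documented interface.
import json
import math


def load_model(weights_json='{"weights": [[0.5, -0.25], [-0.5, 0.75]], "bias": [0.0, 0.1]}'):
    """Build and return the model object once, before inference starts.

    A real file would typically read saved weights from disk; a JSON string
    stands in here so the sketch runs without any external files.
    """
    return json.loads(weights_json)


def predict(model, inputs):
    """Run one inference step: a linear layer followed by a softmax."""
    scores = [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(model["weights"], model["bias"])
    ]
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Load once, then call predict() repeatedly, mirroring one call per simulation step.
model = load_model()
probabilities = predict(model, [1.0, 2.0])
print(probabilities)
```

The split matters for simulation speed: anything expensive, such as reading weights, belongs in load_model(), because predict() runs at every step.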

Implementing the PyTorch Co-Execution Block

To show you how to use the co-execution Simulink blocks, I have prepared a simple example for this blog post. The example implements the PyTorch co-execution block to evaluate the performance of two PyTorch models when processing a traffic video. The Simulink model imports the video, pre-processes it, sends it through two different PyTorch models, and post-processes the model outputs to generate visualizations.

This example uses the MobileNetV2 and MnasNet PyTorch models, which are both image classification models trained on the ImageNet image database. Many pretrained models are available for image classification, and when choosing the right model for your application you have to consider their trade-offs, such as prediction speed, size, and accuracy. Just as importantly, the rapid integration of the PyTorch models in Simulink simulations allows you to test how the models perform within a system.

Figure: Simulink model that implements PyTorch co-execution blocks to evaluate a traffic video

This example uses MATLAB Function blocks to define the pre- and post-processing functions. Using a MATLAB Function block, you can write a MATLAB function for use in a Simulink model. You also have the option to define the pre- and post-processing functions within the co-execution block itself. The last tab in the block parameters lets you browse for Python files that define the functions preprocess() and postprocess(). You can use this option if you already have the functions defined, or leave the fields blank if you prefer another method.
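If you go the Python route, the file might look something like the sketch below. The single-argument signatures are assumptions made for illustration, as is the choice of steps: normalizing with the channel statistics commonly used for ImageNet-trained models, and converting raw scores to probabilities with a softmax. Your own models may need different processing.

```python
# Hypothetical pre-/post-processing file; the signatures are assumptions.
import math

# Channel statistics commonly used to normalize inputs for ImageNet-trained models.
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def preprocess(image):
    """Scale 8-bit RGB pixels to [0, 1], then normalize each channel.

    `image` is a nested list indexed as image[row][column][channel].
    """
    return [
        [
            [(value / 255.0 - mean) / std
             for value, mean, std in zip(pixel, IMAGENET_MEAN, IMAGENET_STD)]
            for pixel in row
        ]
        for row in image
    ]


def postprocess(scores):
    """Turn raw class scores into probabilities with a numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


frame = [[[255, 128, 0], [0, 0, 0]]]  # one row, two RGB pixels
normalized = preprocess(frame)
probabilities = postprocess([2.0, 1.0, 0.1])
```

Nested lists keep the sketch dependency-free; with real video frames you would more likely work on arrays.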

Figure: Modifying the pre- and post-processing parameters of the PyTorch Model Predict block

To configure the block to run in Simulink, two essential parameters are the load command and the path to the model. Selecting the path to the PyTorch model file is straightforward – you use the browse button on the right to navigate in your file explorer and select the model you wish to use.

Figure: Specifying the load command and PyTorch model to be used in the block

When specifying the model file using the load command, there are three different choices to select from based on the type of model you have:

- load() loads a scripted model and can be used if you have the source code for the model.

- jit.load() is the option to choose if you’re loading a traced model. Using a traced model is a good option if you plan, down the line, to convert the PyTorch model to a MATLAB network so that you can take advantage of MATLAB built-in tools for deep learning (learn more in this blog post). In this example, we are loading traced PyTorch models. For more information on how to trace a PyTorch model, go to Torch documentation: Tracing a function.

- load_state_dict() should be used if you only have the weights saved from your model in a state dictionary.

Figure: The three available load command options

You also need to configure the inputs and outputs for the co-execution block. Since this application goes frame by frame, the dimensions being used are for 2-D images. The expected input dimension ordering is different between MATLAB and PyTorch, so you must identify how the inputs should be permuted when feeding 2-D MATLAB images to a PyTorch model. To learn more, visit this page about input dimension ordering.
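To see what the permutation does to the data, here is a small sketch that reorders one image by hand. It assumes the MATLAB image is laid out as height x width x channel and that the PyTorch model expects batch x channel x height x width; the helper names are hypothetical, and in the block itself you only supply the permutation rather than writing this code.

```python
def hwc_to_nchw(image):
    """Reorder a height x width x channel nested list into 1 x C x H x W."""
    height = len(image)
    width = len(image[0])
    channels = len(image[0][0])
    chw = [
        [[image[h][w][c] for w in range(width)] for h in range(height)]
        for c in range(channels)
    ]
    return [chw]  # wrap in a batch dimension of size 1


def shape(nested):
    """Return the dimensions of a regular nested list."""
    dims = []
    while isinstance(nested, list):
        dims.append(len(nested))
        nested = nested[0]
    return dims


# A tiny 2 x 3 image with 3 channels; each value encodes its (row, column, channel).
image = [[[100 * h + 10 * w + c for c in range(3)] for w in range(3)] for h in range(2)]
batch = hwc_to_nchw(image)
print(shape(image))  # [2, 3, 3]
print(shape(batch))  # [1, 3, 2, 3]
```

The values themselves are unchanged; only the order in which the dimensions are indexed differs, so batch[0][c][h][w] equals image[h][w][c].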

Figure: Permutation of input dimension ordering for 2-D images from MATLAB to PyTorch

Figure: Defining the matrix for how to permute the inputs from MATLAB to Python

For the output, you have the option to specify Max MATLAB Dim Size to limit the size of the output along each dimension. Some deep learning models can return variable-sized output during a single simulation. Prescribing a limit can prevent large outputs being generated, which could slow down your simulation. If you’re comparing different PyTorch models within the same simulation, you may want similar output sizes to facilitate the comparison or post-processing.

Figure: Options to modify the block output parameters

Once the co-execution block parameters are configured, run the simulation. The PyTorch models are executed at each step in the simulation, and this example plots the probability of each class label against its index. By taking the index of the maximum value at any point, you can identify how that frame of the video is classified by each PyTorch model. Since both models were trained on the ImageNet database, it’s easy to find the class names. For an example of how to compute classification results from probabilities, see Import Network from PyTorch and Classify Image.
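The index-to-label step can be sketched in a few lines. The three class names and probabilities below are made up for illustration (an ImageNet model would return 1000 values), and top_class is a hypothetical helper name.

```python
def top_class(probabilities, class_names):
    """Return (index, label, probability) for the most likely class."""
    index = max(range(len(probabilities)), key=probabilities.__getitem__)
    return index, class_names[index], probabilities[index]


# Hypothetical three-class example, not output from the models in this post.
class_names = ["minivan", "traffic light", "street sign"]
frame_probabilities = [0.15, 0.70, 0.15]

index, label, probability = top_class(frame_probabilities, class_names)
print(index, label, probability)  # 1 traffic light 0.7
```

Keep in mind that Python indexes from 0 while MATLAB indexes from 1, so the same class sits one position higher when you look it up on the MATLAB side.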

Figure: One frame of the output visualized for each PyTorch model

The two different model outputs (bottom left and bottom right in the following figure) exhibit different behavior throughout the simulation. The MobileNetV2 model output (on the left) shows little variation between steps and sustains the same maximum index. Meanwhile, the MnasNet model output (on the right) fluctuates at each step, with different indexes being the maximum value at different frames.
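One simple way to put a number on this difference is to count how often the top-scoring index changes from one frame to the next. The sketch below uses made-up probability sequences, not the outputs from this example, and top_index_changes is a hypothetical helper name.

```python
def top_index_changes(frames):
    """Count how many times the top-scoring index changes between consecutive frames."""
    tops = [max(range(len(frame)), key=frame.__getitem__) for frame in frames]
    return sum(1 for previous, current in zip(tops, tops[1:]) if previous != current)


# Made-up per-frame probabilities for two hypothetical models.
steady = [[0.1, 0.8, 0.1], [0.2, 0.7, 0.1], [0.1, 0.6, 0.3]]
jumpy = [[0.5, 0.3, 0.2], [0.2, 0.5, 0.3], [0.3, 0.2, 0.5]]

print(top_index_changes(steady))  # 0
print(top_index_changes(jumpy))   # 2
```

A low count does not mean the model is right, only that its top prediction is stable; you would still want to check the predicted labels against what is actually in the video.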

Clockwise starting at upper left: Simulating a Simulink model with two co-execution blocks; Visualization of the video being analyzed; MnasNet model output showing high variation; MobileNetV2 model output with a consistent maximum rating.

From here you could further compare the models by performing some analysis on the outputs, edit one of the co-execution blocks to test a new model, or decide which model you prefer for your application. You could build out the post-processing functionality to identify the index of the maximum probability at each step and map it to the corresponding class label. If you have additional video inputs, you could swap the input to the models and test the same blocks using the alternate input. There are many ways to proceed, and by using the co-execution blocks, it’s simple to get started.

Conclusion

This simple example shows just one way the new co-execution blocks can be implemented in Simulink. There are many benefits to using these blocks, but here are a few main takeaways:
