MATLAB Copilot gets a new LLM – November 2025 updates

Bio: This blog post is co-authored by Seth DeLand, product manager for MATLAB Copilot. Seth has held various product management roles at MathWorks over the last 14 years, including products for optimization and machine learning.

With the rapid rate of change in the generative AI space, we've always had a vision that MATLAB Copilot should get updates outside of MATLAB's twice-yearly general release cadence (the "a" and "b" releases).

I'm excited to share the most recent round of updates that are already live. You'll get these updates automatically if you're using MATLAB Copilot (which is available in R2025a and newer):

- Copilot Chat now uses the GPT-5 mini model when generating responses.

- The context window in Copilot Chat has been expanded to support longer conversations.

- Other features like "Explain Error", "Explain Code", and "Generate Comments" now use GPT-5 mini as well.

Our goal with these updates was to improve the quality of responses coming from MATLAB Copilot. Here are a few examples of the updates in action.

Better base knowledge in Copilot Chat

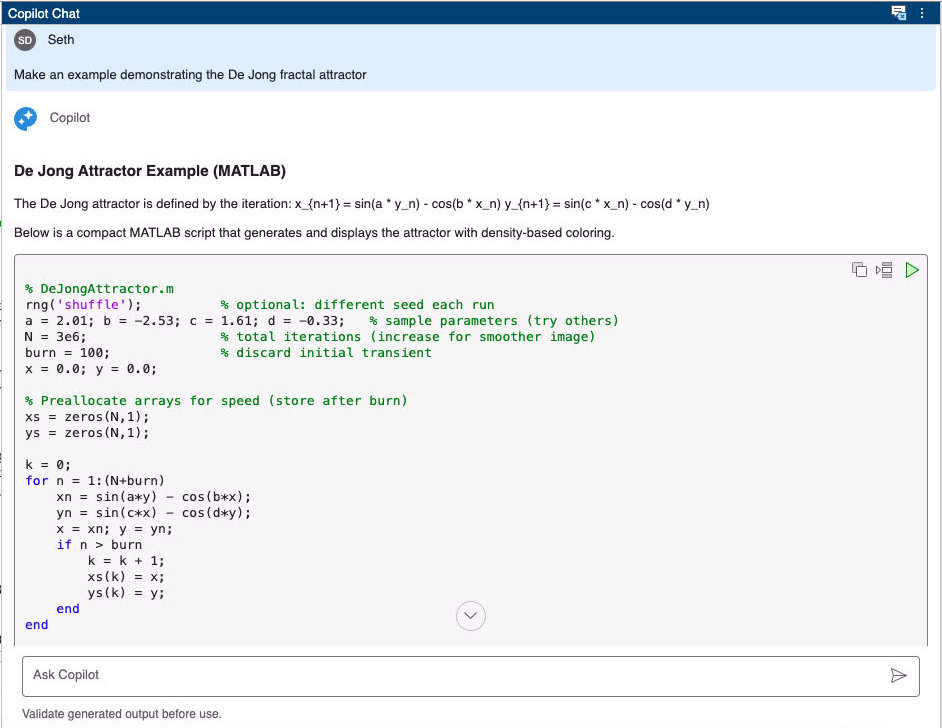

GPT-5 mini tends to have better and more up-to-date knowledge than the model we used previously. Over on the MATLAB Community blog, my colleague Ned recently posted an example about creating MATLAB code to illustrate the De Jong fractal attractor. MATLAB Copilot used to struggle with this! When I asked the previous LLM to generate an example, I got a rather sad dot at (0,0):

The new GPT-5 mini model has much better awareness of these concepts, in both the response and the generated figure:

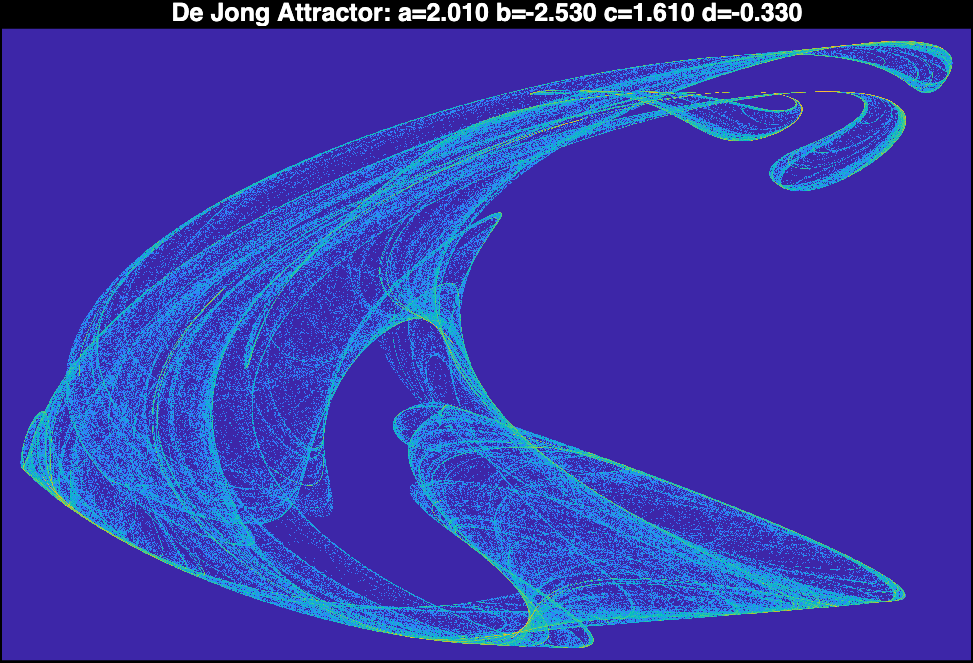

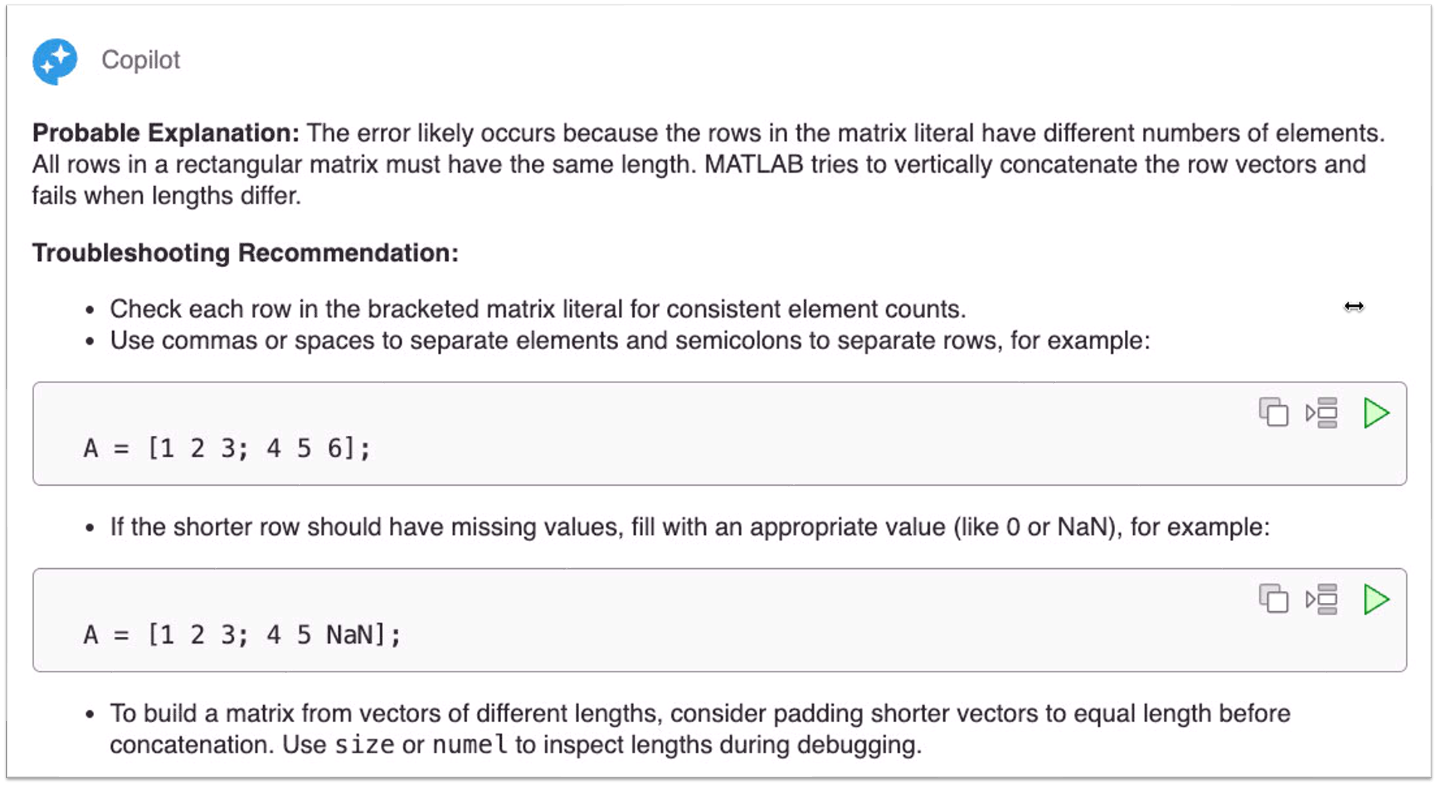
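For readers who haven't met the De Jong attractor before, here is a minimal sketch of the iteration behind it. It is written in plain Python rather than MATLAB, it is not the code that MATLAB Copilot or Ned's post produced, and the function name `de_jong_points` and the parameter values are illustrative assumptions. The map is usually written as x_next = sin(a*y) - cos(b*x), y_next = sin(c*x) - cos(d*y).

```python
import math


def de_jong_points(a, b, c, d, n, x0=0.0, y0=0.0):
    """Iterate the De Jong map n times from (x0, y0) and return the visited points."""
    points = []
    x, y = x0, y0
    for _ in range(n):
        # Both updates depend on the previous (x, y), so assign them together.
        x, y = (math.sin(a * y) - math.cos(b * x),
                math.sin(c * x) - math.cos(d * y))
        points.append((x, y))
    return points


# Illustrative parameter values (an assumption, not taken from the post).
pts = de_jong_points(a=1.4, b=-2.3, c=2.4, d=-2.1, n=50_000)

xs = [p[0] for p in pts]
ys = [p[1] for p in pts]
print(f"{len(pts)} points, "
      f"x in [{min(xs):.3f}, {max(xs):.3f}], "
      f"y in [{min(ys):.3f}, {max(ys):.3f}]")
```

Each coordinate is a sine minus a cosine, so every point lands in the square from -2 to 2 on both axes. Starting at the origin, the first step already moves to (-1, -1), so a correct implementation should never leave you with a single dot at (0,0). Drawing the points as a dense scatter plot with very small markers is what reveals the attractor's shape.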

Explain Error

Explain Error now uses the improved model as well. Here's a comparison where I hit an "Undefined function or variable" error and asked for an explanation:

Old model:

New model:

I really like that the new model is giving me some code suggestions so I can quickly assess what went wrong. The "Probable Explanation" from the new model is more detailed, but it also uses what I would consider more specialized language that could be difficult for a new user to understand. With LLMs, there is always more room for improvement!

Explain Code

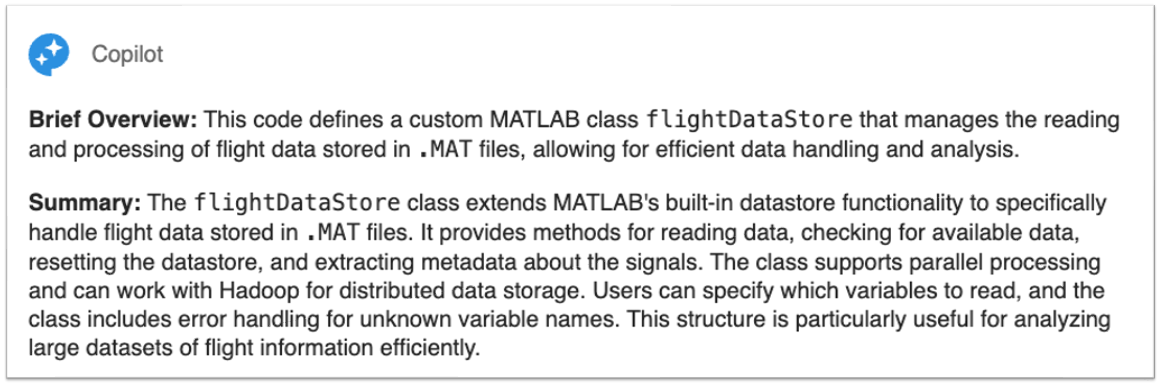

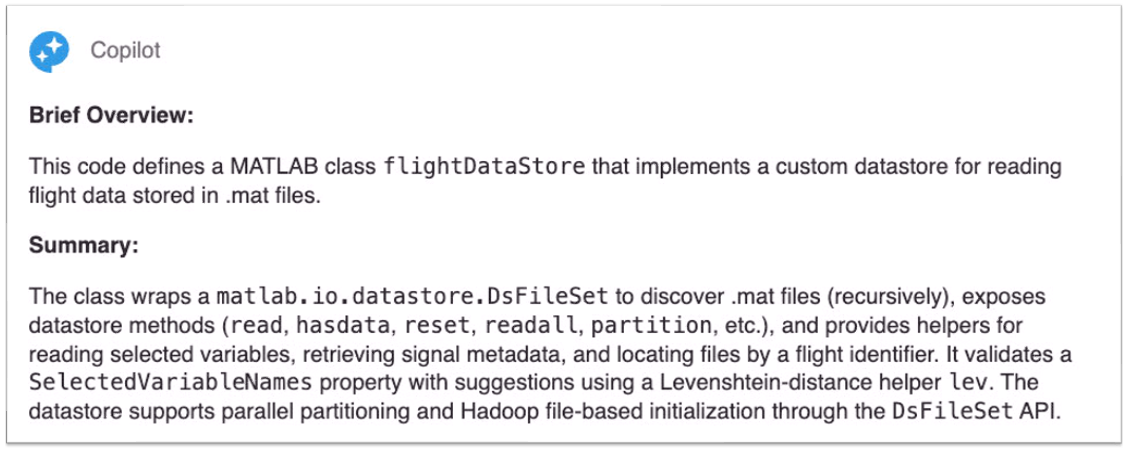

These changes also affect how MATLAB Copilot actions work. My personal favorite, "Explain Code", now generates explanations using the new model. Here's a comparison of the summaries generated by "Explain Code" for a MATLAB class I wrote many years ago that, as far as I'm aware, is not in the LLM's training set. My summary of this code is that it's a custom datastore for working with aircraft flight telemetry stored in MAT-files. Let's see how MATLAB Copilot does:

Old model:

New model:

Personally, I find the new summary much better:

- The "Brief Overview" is more concise (conciseness is certainly a theme with this new model).

- The new summary gives me a bunch of useful information that the old summary was lacking, mainly the names of several of the methods so I can quickly find them.

- The old summary follows the same order that the code is implemented in the file, which actually isn't the most helpful way to summarize what the code does. The new model seems to have figured this out and puts the most important stuff up front and the less important stuff at the end (Hadoop support will only be relevant in certain circumstances).

Closing Thoughts

As with all things GenAI, these are just a few examples, and your own experience may vary. Still, I encourage you to try these updates out in MATLAB Copilot.

We have a lot more planned, so stay tuned!
