4 Capabilities of Autonomous Navigation Systems

Autonomous Navigation with Brian Douglas: Part 3

This post is from Brian Douglas, YouTube content creator for Control Systems and Autonomous Applications.

In the last post, we learnt about the heuristic and optimal approaches to autonomous navigation. At first glance, taking an optimal approach to autonomous navigation might seem fairly straightforward: observe the environment to determine where the vehicle is, figure out where it needs to be, and then determine the best way to get there. Unfortunately, accomplishing this is not as easy as that sentence makes it sound.

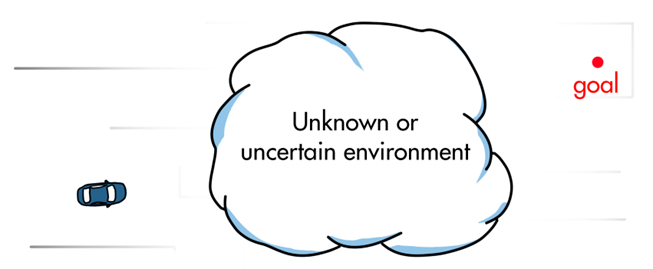

I think the number one thing that can make autonomous navigation a difficult problem to solve is when the vehicle must navigate through an environment that isn’t perfectly known. In these situations, the vehicle can’t say for certain where it is, where the destination is, or what obstacles and constraints are in the way. Therefore, coming up with a viable plan and acting on that plan becomes challenging.

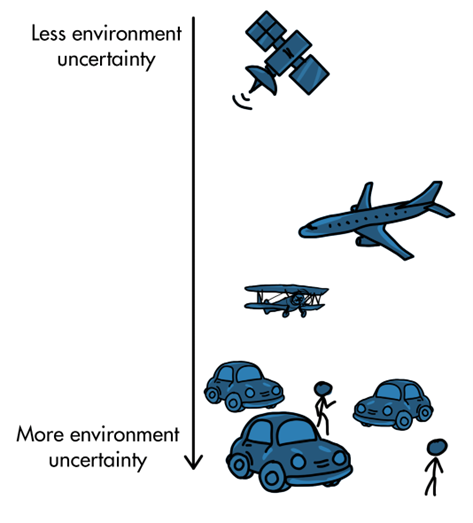

In general, the more predictable and understandable an environment is, the easier it is to accomplish full autonomous navigation.

For example, building an autonomous spacecraft that orbits the Earth is typically a simpler navigation problem than building an autonomous aircraft, at least in terms of environment complexity. Space is a more predictable environment than air because there is less uncertainty in the forces that act on the vehicle and more certainty in the behavior of other nearby objects. When the spacecraft comes up with a plan, say to perform a station-keeping maneuver, we can be confident that it will be able to follow that plan autonomously without encountering unknown forces or obstacles from the environment. Therefore, we don’t have to build a vehicle that accounts for a lot of unknown situations.

With aircraft, on the other hand, we have to navigate through a lot more uncertainty: unknown wind gusts, flocks of birds, other human-controlled planes, and the need to land and taxi around an airport, to name just a few.

Even so, an autonomous aircraft is typically a simpler problem than an autonomous car, and for the same reasons: there is much more uncertainty in driving around a chaotic city than in flying through relatively open air.

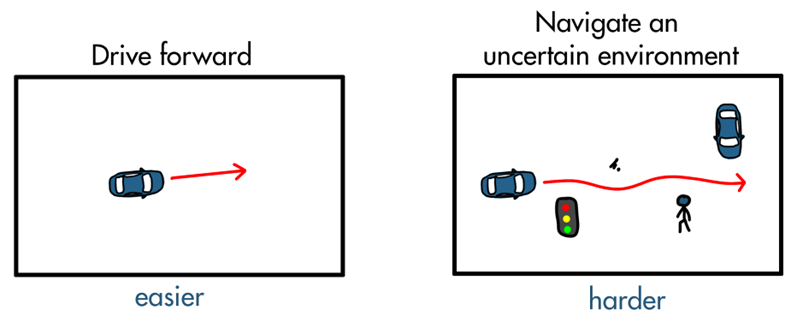

Building a vehicle that can maneuver autonomously is absolutely an awesome accomplishment, and I don’t want to trivialize it. However, the thing I want to stress here is that what makes autonomous vehicles impressive is not that they can move on their own, but that they can navigate autonomously within an uncertain and changing environment. Getting a car to move forward by itself simply requires an actuator that presses the gas pedal. The difficulty comes from knowing when to press the pedal. The car has to make adjustments that get it to the destination efficiently while following local traffic laws, avoiding potholes and other obstacles, rerouting around construction, and avoiding other cars driven by unpredictable humans. And it has to do all of this in the snow, the rain, and so on. Not an easy feat!

4 capabilities of autonomous systems

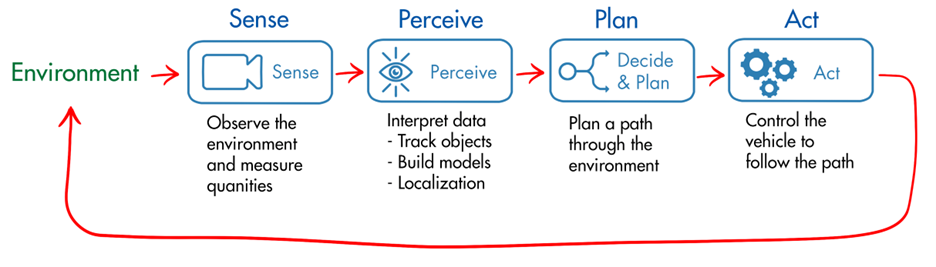

To understand how we overcome these challenges, let’s split up the larger autonomous navigation problem into a set of four general capabilities.

Refer to the ebook “Sensor Fusion and Tracking for Autonomous Systems: An Overview” for more information.

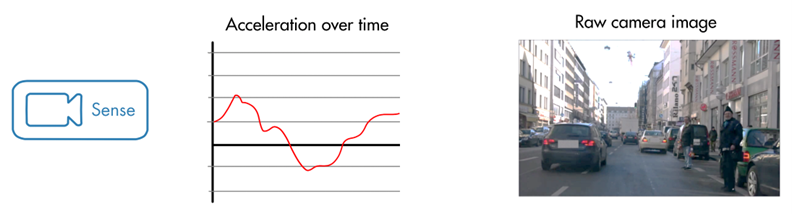

Autonomous systems need to interact with an uncertain physical world. To develop some certainty, sensors are used to collect information about both the state of the system and the state of the external world. Sensors are application dependent, but they can be anything that observes and measures some quantity. For example, an automated driving system may measure its own state with an accelerometer, and it may also measure the state of the environment with externally facing sensors like radar, lidar, and visible-light cameras.

The sensor data isn’t necessarily useful in its raw form. For example, a camera image might have several million pixels, each with 8 bits or more of information in each of three color bands. This is a lot of data! To make use of this massive array of numbers, an algorithm or a human needs to pull out something of value. In this way, perception is more than acquiring information; it’s the act of interpreting that information into a useful quantity. Perception has two different but equally important responsibilities: self-awareness (perceiving your own state) and situational awareness (perceiving other objects in the environment and tracking them).
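To put “a lot of data” in perspective, here is a quick back-of-the-envelope calculation. The 1920×1080 resolution, 8 bits per color band, and 30 frames per second are assumed values for illustration, not the specifications of any particular camera.

```python
# Assumed camera parameters (illustrative only)
WIDTH, HEIGHT = 1920, 1080   # about 2.07 million pixels
CHANNELS = 3                 # red, green, and blue color bands
BITS_PER_CHANNEL = 8
FRAME_RATE_HZ = 30

pixels = WIDTH * HEIGHT
bytes_per_frame = pixels * CHANNELS * BITS_PER_CHANNEL // 8
bytes_per_second = bytes_per_frame * FRAME_RATE_HZ

print(f"{pixels:,} pixels per frame")
print(f"{bytes_per_frame / 1e6:.1f} MB per frame")
print(f"{bytes_per_second / 1e6:.0f} MB per second")
```

Under these assumptions that is roughly 6.2 MB per frame and well over 100 MB every second, whereas the useful output of perception might be just a handful of numbers, such as the position and velocity of a nearby car.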

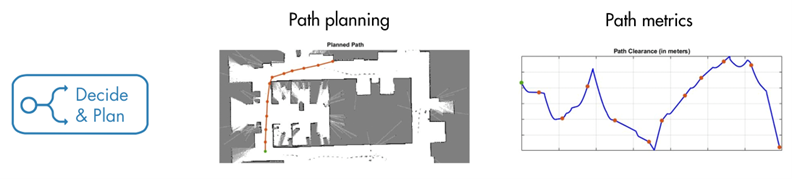

After perception, the vehicle has the information it needs to make decisions, and one of the first decisions it has to make is to plan a path from its current location to the goal, avoiding obstacles and other objects along the way. I will share more on this topic in the upcoming blog posts.
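As a small taste of what planning involves, here is a minimal sketch of one classic option, A* search on a 2D occupancy grid. The grid representation (0 for free cells, 1 for obstacles), the 4-connected unit-cost moves, and the `astar` function name are assumptions for illustration; practical planners also have to account for vehicle kinematics and continuous space.

```python
import heapq

def astar(grid, start, goal):
    """Return a shortest 4-connected path from start to goal on an occupancy
    grid (0 = free, 1 = obstacle) as a list of (row, col) cells, or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: never overestimates for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]   # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk parent links back to the start to recover the path
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale entry; a cheaper route to this cell was found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = new_g
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (new_g + h((nr, nc)), new_g, (nr, nc)))
    return None  # goal unreachable

grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],   # a wall with a single gap on the right
    [0, 0, 0, 0],
]
print(astar(grid, (0, 0), (2, 0)))
```

The only way around the wall is through the gap, so the returned path hugs the right side of the grid before coming back to the goal.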

Refer to the ebook “Motion Planning with MATLAB” for more information.

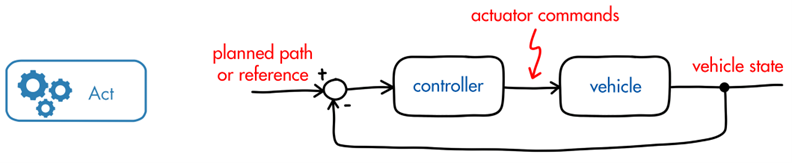

The last step is to act on that plan: to command the motors and actuators so that the vehicle follows the path. This is the job of the controller and the control system. We can think of the plan as the reference signal that the controller uses to command the actuators and other control effectors, manipulating the system so that it moves along that path.
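Here is a toy sketch of a plan acting as a reference signal. It assumes a one-dimensional vehicle modeled as a double integrator (the controller commands acceleration) and a hand-tuned PD controller; the gains `kp` and `kd`, the 0.1 s time step, and the `simulate_tracking` name are hypothetical choices, not something from this post.

```python
def simulate_tracking(reference, dt=0.1, kp=2.0, kd=3.0):
    """Track a sequence of reference positions (the 'plan') with a PD
    controller acting on a double-integrator vehicle model."""
    pos, vel = 0.0, 0.0
    history = []
    for ref in reference:
        error = ref - pos
        # PD law; the derivative term uses vehicle velocity, which equals
        # -d(error)/dt while the reference is held constant
        accel_cmd = kp * error - kd * vel
        vel += accel_cmd * dt   # semi-implicit Euler: update velocity first
        pos += vel * dt
        history.append(pos)
    return history

reference = [10.0] * 200      # plan: move to and hold a position of 10 m for 20 s
positions = simulate_tracking(reference)
print(f"final position: {positions[-1]:.3f} m")
```

With these gains the continuous-time closed loop has poles at s = -1 and s = -2 (overdamped), so the vehicle converges smoothly onto the reference. A real vehicle controller would also deal with actuator limits, disturbances, and multi-dimensional dynamics.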

The state of the vehicle and the state of the physical world are constantly changing, so the whole autonomy loop continues. The vehicle senses the environment, it determines where it is in relation to landmarks in the environment, it perceives and tracks dynamic objects, it replans given this new information, it controls the actuators to follow that plan, and so on until it reaches the goal.
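The sense, perceive, plan, and control loop can be sketched as a toy one-dimensional simulation. Everything here is an assumption for illustration: the bounded sensor noise, the trivial perception step (the estimate is simply the latest reading), the proportional speed plan capped at 1 m/s, and an ideal actuator that achieves the commanded speed exactly.

```python
import random

def run_autonomy_loop(goal=10.0, dt=0.1, max_steps=1000, seed=0):
    """Repeat sense -> perceive -> plan -> control until the vehicle believes
    it is within 0.1 m of the goal. Returns (true_position, steps_taken)."""
    rng = random.Random(seed)
    true_pos = 0.0
    for step in range(max_steps):
        # 1. Sense: a position reading corrupted by bounded noise
        measurement = true_pos + rng.uniform(-0.05, 0.05)
        # 2. Perceive: turn the raw reading into a state estimate
        #    (trivially the reading itself in this toy)
        estimate = measurement
        # 3. Plan: stop if close enough, otherwise pick a speed toward the goal
        error = goal - estimate
        if abs(error) < 0.1:
            return true_pos, step
        speed_cmd = max(-1.0, min(1.0, 0.5 * error))
        # 4. Control: an ideal actuator moves the vehicle at the commanded speed
        true_pos += speed_cmd * dt
    return true_pos, None  # did not reach the goal within max_steps

final_pos, steps = run_autonomy_loop()
print(f"stopped at {final_pos:.3f} m after {steps} steps")
```

Because the stopping decision is made on the estimate rather than the true position, the vehicle can end up slightly off the goal; here the error is bounded by the 0.1 m tolerance plus the 0.05 m noise bound.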

Combining the heuristic and optimal approaches

We’re going to go into more detail on each of these capabilities throughout this blog, but before I end this post, I want to comment on how uncertainty in the environment affects which approach we want to take to solve the autonomous navigation problem.

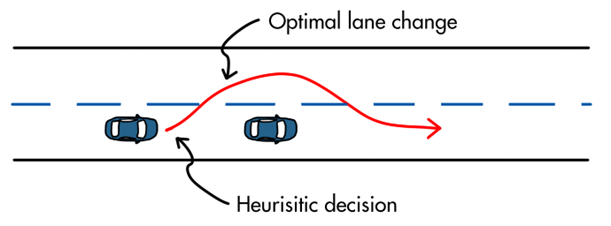

In the last post, we walked through the two different approaches for achieving full autonomy: a heuristic approach and an optimal approach. I want to point out that it doesn’t have to be the case where a solution is either 100% heuristic or 100% optimal. We can use both approaches to achieve a larger goal and the choice to do so often comes down to how much knowledge the vehicle has about the environment it’s operating within.

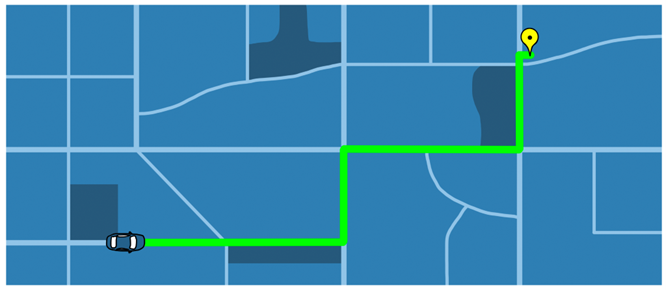

Take an autonomous car, for example. At a high level, the vehicle can plan an optimal route through a city. To do this, the car would need access to the local street maps with which it could find an optimal path that minimizes the amount of time it takes for the journey.
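Finding the fastest route over a street map is a classic shortest-path problem. Below is a minimal sketch using Dijkstra’s algorithm, which is one standard choice for this (the post doesn’t name a specific algorithm). The street graph and its travel times in minutes are made up for illustration.

```python
import heapq

def fastest_route(graph, start, goal):
    """Dijkstra's algorithm on a road graph whose edge weights are travel
    times in minutes. Returns (total_minutes, list_of_intersections)."""
    best = {start: 0.0}
    previous = {}
    heap = [(0.0, start)]
    while heap:
        t, node = heapq.heappop(heap)
        if node == goal:
            # Rebuild the route by following predecessor links
            route = [node]
            while node in previous:
                node = previous[node]
                route.append(node)
            return t, route[::-1]
        if t > best[node]:
            continue  # stale entry; a faster way here was already found
        for neighbor, minutes in graph.get(node, {}).items():
            new_t = t + minutes
            if new_t < best.get(neighbor, float("inf")):
                best[neighbor] = new_t
                previous[neighbor] = node
                heapq.heappush(heap, (new_t, neighbor))
    return float("inf"), []  # goal unreachable

# Hypothetical street map: intersection -> {neighbor: travel time in minutes}
streets = {
    "home": {"A": 4.0, "B": 2.0},
    "A": {"office": 5.0},
    "B": {"A": 1.0, "C": 7.0},
    "C": {"office": 1.0},
}
print(fastest_route(streets, "home", "office"))  # (8.0, ['home', 'B', 'A', 'office'])
```

Note that the fastest route goes through B to reach A, even though there is a direct street from home to A.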

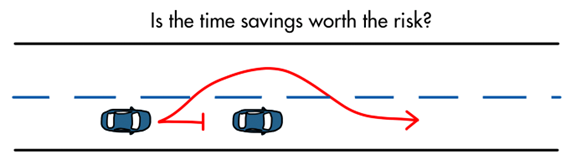

However, other cars and obstacles don’t exist within that map. Therefore, as the autonomous vehicle approaches a slower car, it has to sense that car and adjust its original plan to either slow down and follow or change lanes and pass. Passing might seem like the optimal solution at first; however, this wouldn’t necessarily be the case if, say, the slower car were traveling only 1 mph below the speed limit. Sure, the autonomous car could pass it and get to the destination faster, but the danger of slowly overtaking the car and spending a long time in the oncoming traffic lane might outweigh the benefit of arriving slightly sooner.
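A rough calculation shows why passing a car that is only 1 mph under the limit is a poor trade. The numbers are assumptions for illustration: a 55 mph speed limit, 10 miles left in the trip, and 100 ft of relative distance to gain to complete the pass. The estimate treats both cars as moving at constant speed.

```python
SPEED_LIMIT_MPH = 55.0   # assumed speed limit
LEAD_CAR_MPH = 54.0      # lead car is 1 mph under the limit
REMAINING_MILES = 10.0   # assumed distance left in the trip
PASS_GAP_FT = 100.0      # assumed relative distance to gain while passing

FT_PER_MILE = 5280.0
SECONDS_PER_HOUR = 3600.0

# Trip time saved by driving at the limit instead of following the lead car
time_saved_s = REMAINING_MILES * SECONDS_PER_HOUR * (1 / LEAD_CAR_MPH - 1 / SPEED_LIMIT_MPH)

# A 1 mph speed difference closes only about 1.47 ft every second
closing_ft_per_s = (SPEED_LIMIT_MPH - LEAD_CAR_MPH) * FT_PER_MILE / SECONDS_PER_HOUR
time_in_oncoming_lane_s = PASS_GAP_FT / closing_ft_per_s

print(f"Time saved by passing: {time_saved_s:.1f} s")             # 12.1 s
print(f"Time in oncoming lane: {time_in_oncoming_lane_s:.1f} s")  # 68.2 s
```

Under these assumptions, the car would spend more than a minute in the oncoming lane to save about 12 seconds.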

An optimal solution is only as good as the model used to solve for it. If the objective function doesn’t consider the speed differential, then the decision to pass might not produce the desired result. So why not write a better objective function and create a better model of the environment? Unfortunately, in some situations it is not practical to acquire all that information or to understand the environment well enough.
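To make the modeling point concrete, here is a toy objective that compares following and passing. With only trip time in the cost, passing a car going 1 mph slower always wins; adding a term that penalizes time spent in the oncoming lane, which depends on the speed differential, flips the decision. The `choose_maneuver` function, the `risk_weight` parameter, and the 100 ft pass distance are hypothetical.

```python
def choose_maneuver(speed_limit_mph, lead_mph, remaining_miles,
                    pass_gap_ft=100.0, risk_weight=0.0):
    """Return 'pass' or 'follow', whichever has the lower toy cost:
    trip time in seconds, plus risk_weight times the seconds spent in the
    oncoming lane while passing (that exposure grows as the speed gap shrinks)."""
    if lead_mph >= speed_limit_mph:
        return "follow"  # passing would require exceeding the limit
    follow_cost = remaining_miles / lead_mph * 3600.0
    closing_ft_per_s = (speed_limit_mph - lead_mph) * 5280.0 / 3600.0
    exposure_s = pass_gap_ft / closing_ft_per_s
    pass_cost = remaining_miles / speed_limit_mph * 3600.0 + risk_weight * exposure_s
    return "pass" if pass_cost < follow_cost else "follow"

print(choose_maneuver(55.0, 54.0, 10.0))                   # pass (time-only objective)
print(choose_maneuver(55.0, 54.0, 10.0, risk_weight=1.0))  # follow (exposure penalized)
print(choose_maneuver(55.0, 40.0, 10.0, risk_weight=1.0))  # pass (large speed gap)
```

The hard part in practice is not the arithmetic but choosing a risk term and weight that actually reflect the environment, which is exactly the information that may not be available.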

One possible way around this is to have a heuristic behavior, or rule, that guides the vehicle in situations where there isn’t enough information. For example, a rule could be, “only attempt to pass a car if the lead car is traveling more than 10 mph under the speed limit.” That heuristic decision can be made using only the state of the lead car, and once it is made, an optimal path into the adjacent lane can be created. In this way, the two approaches complement each other.
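A minimal sketch of the two approaches working together: the heuristic rule from the text gates the decision, and only then is a lane-change path generated. The post doesn’t say which optimal path to use; as an assumed example, this uses the minimum-jerk profile, the fifth-order polynomial that minimizes integrated squared jerk for a lateral move that starts and ends at rest. The 3.7 m lane width, 4 s duration, and function names are illustrative.

```python
def should_attempt_pass(lead_mph, speed_limit_mph, margin_mph=10.0):
    """Heuristic rule: pass only if the lead car is more than margin_mph
    below the speed limit. It needs nothing but the lead car's speed."""
    return lead_mph < speed_limit_mph - margin_mph

def lane_change_path(lane_width_m, duration_s, n_points=5):
    """Minimum-jerk lateral offset y(t) = W * (10 s^3 - 15 s^4 + 6 s^5),
    with s = t / T, which has zero lateral velocity and acceleration at both
    ends. Returns a list of (time_s, offset_m) samples."""
    path = []
    for i in range(n_points):
        s = i / (n_points - 1)
        y = lane_width_m * (10 * s**3 - 15 * s**4 + 6 * s**5)
        path.append((s * duration_s, y))
    return path

if should_attempt_pass(lead_mph=40.0, speed_limit_mph=55.0):
    for t, y in lane_change_path(lane_width_m=3.7, duration_s=4.0):
        print(f"t = {t:.1f} s, lateral offset = {y:.2f} m")
```

The rule is cheap and needs little information; the optimization only runs once the rule has decided that passing is worthwhile.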

Of course, this doesn’t guarantee that the vehicle won’t encounter an oncoming obstacle and have to merge back, but we can just add more rules to cover those situations! In this way, the heuristic approach lets us make decisions when information is scarce, and the optimal approach lets us make optimal decisions in the cases where more information is available.
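Adding rules can be sketched as a small rule set evaluated in priority order, where the first matching rule wins. The rule names, the `oncoming_gap_s` input (the time until the nearest oncoming vehicle would arrive), and the 8 s threshold are hypothetical, chosen only to show the structure.

```python
def passing_behavior(in_passing_lane, lead_mph, speed_limit_mph,
                     oncoming_gap_s, min_gap_s=8.0):
    """Toy priority-ordered rule set for passing; the first matching rule wins."""
    # Safety rule first: abort the pass if oncoming traffic is too close
    if in_passing_lane and oncoming_gap_s < min_gap_s:
        return "merge_back"
    if in_passing_lane:
        return "continue_pass"
    # Original heuristic, now also requiring a clear oncoming lane
    if lead_mph < speed_limit_mph - 10.0 and oncoming_gap_s >= min_gap_s:
        return "start_pass"
    return "follow"

print(passing_behavior(False, 40.0, 55.0, oncoming_gap_s=20.0))  # start_pass
print(passing_behavior(True, 40.0, 55.0, oncoming_gap_s=5.0))    # merge_back
```

Ordering matters: putting the safety rule first ensures it overrides every other behavior.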

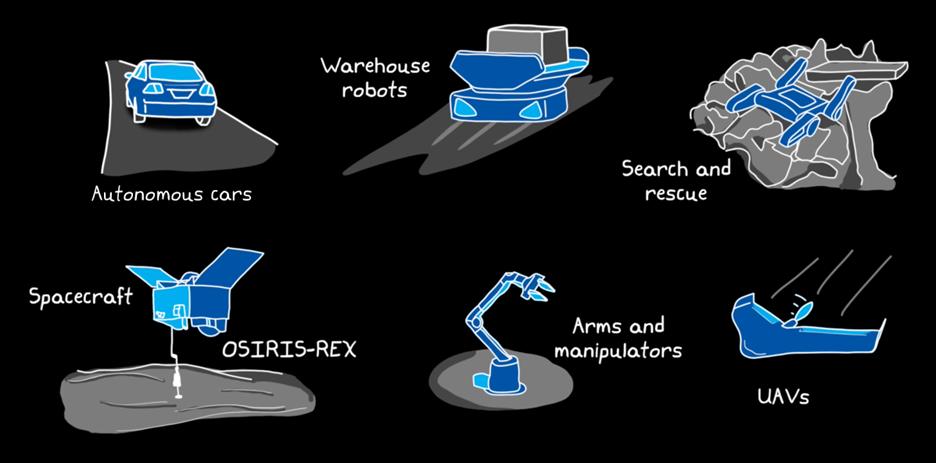

I keep using autonomous cars as the example, but they aren’t the only systems that make use of these two approaches.

- There are other ground vehicles, like the ones in Amazon warehouses, that quickly maneuver to a given storage area to move packages around without running into other mobile vehicles or stationary shelves.

- There are vehicles that search disaster areas, navigating unknown and hazardous terrain.

- There are space missions like OSIRIS-REx, which navigates around the previously unvisited asteroid Bennu and prepares for a precisely located touch-and-go maneuver to collect a sample and return it to Earth.

- There are robotic arms (cobots) and manipulators that navigate within their local space to pick things up and move them to new locations.

- There are UAVs and drones that survey areas, and many, many more applications.

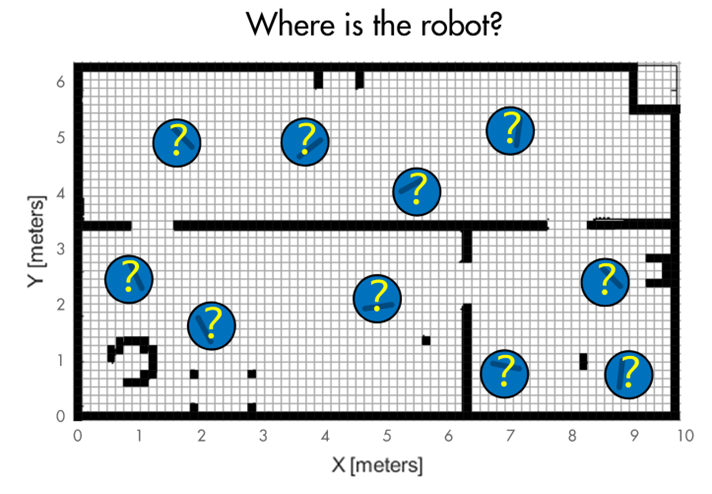

In their own way, each of these systems can achieve the four capabilities of autonomous systems; they can sense their surroundings, perceive their environment, plan a path, and follow that path. In the next post, we’re going to dive a little deeper into perception and talk about the localization problem – that is, how vehicles can determine where they are within an environment.

To learn more about these capabilities, you can watch the detailed video “Sensor Fusion and Navigation for Autonomous Systems using MATLAB and Simulink”. Thanks for sticking around till the end! If you haven’t yet, please follow this blog to keep getting updates on upcoming content.

Category: Autonomous Navigation with Brian Douglas
