Prevent UAV Crashes with Integrated Simulation Workflows: Insights from AUVSI Xponential 2023

The possibility of Unmanned Aerial Systems (UAS/UAV) improving day-to-day transportation seems closer than ever, with drones delivering packages and air taxis not far off on the horizon. Last month, at the AUVSI Xponential 2023 conference, I found myself captivated by the ongoing discussions surrounding the introduction of air taxis into our lives. While safety was rightfully a significant focus in most of the talks, what drew my attention the most was the conference’s theme, “Blueprint for Autonomy,” and the detailed guide that accompanied it. As speakers at the conference, we took the opportunity to discuss the crucial role modeling and simulation play in the development of Unmanned Aerial Systems. In this post, I will provide a concise overview of our talk and delve deeper into how our solutions help steer this industry towards safe and efficient UAS flights.

These terms are often used interchangeably, so I will use drone, UAS, UAV, and aircraft to refer to the same system throughout this blog.

Introduction

Have you ever wondered how pilots are trained to fly drones or aircraft? It requires intense training and hundreds of hours of practice, but with the help of flight simulators, pilots can acquire the necessary skills in a safer and cheaper way. With the advent of wireless communication technology, pilots can now fly drones without even stepping inside a cockpit. This is where drone simulators come in handy, offering a computer-based virtual environment where pilots can hone their skills.

With autonomous operations becoming increasingly popular for specific applications, human pilots are being taken out of the loop and AI pilots are making the decisions. One thing that remains as relevant as ever is safety: the primary objective is to prevent drones or aircraft from crashing. In our talk, we discussed the growing importance of simulation as a way to improve safety and increase the adoption of autonomous operations. After all, just like human pilots, AI algorithms require learning and training too.

Why might a UAS crash?

To keep a UAS from crashing, it’s important to know the different reasons that can lead to a crash. Beyond the safety risk, a UAS crash also costs time, effort, and assets. This is where virtual assets, or digital twins, in a simulated environment come to the rescue. Failure tests can be performed in this simulated environment before anything is put at risk in the real world. Here are some common reasons that lead to a system failure:

- Unexpected environmental conditions

- Increased payload

- Autonomy failure (untested behavior)

- Sensor or comms failure (GPS, IMU, etc.)

- Controller errors

- Mechanical/Rotor failure
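
To make the idea of failure testing in simulation concrete, here is a minimal sketch in Python (not MATLAB or Simulink, and not code from our talk). It injects three of the failure types listed above into a toy one-dimensional altitude-hold loop. The `Fault` fields, the vehicle mass, the thrust limit, and the PD gains are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass

G = 9.81  # gravitational acceleration, m/s^2


@dataclass
class Fault:
    """One injected failure case; every field is a hypothetical knob."""
    name: str
    extra_mass: float = 0.0    # added payload, kg
    thrust_scale: float = 1.0  # 1.0 = healthy rotors, below 1.0 = lost thrust
    sensor_bias: float = 0.0   # altitude measurement bias, m


def simulate_altitude_hold(fault, target=10.0, mass=1.5, max_thrust=30.0,
                           dt=0.01, t_end=20.0):
    """Run a PD altitude hold on a 1-D point mass and return the final altitude.

    Returns 0.0 if the vehicle reaches the ground (a crash).
    """
    kp, kd = 8.0, 5.0    # illustrative gains, not tuned for any real vehicle
    z, vz = target, 0.0  # start in hover at the target altitude
    true_mass = mass + fault.extra_mass
    for _ in range(int(t_end / dt)):
        z_meas = z + fault.sensor_bias
        # The controller only knows the nominal mass, not the injected fault.
        cmd = mass * (G + kp * (target - z_meas) - kd * vz)
        thrust = fault.thrust_scale * min(max(cmd, 0.0), max_thrust)
        vz += (thrust / true_mass - G) * dt
        z += vz * dt
        if z <= 0.0:
            return 0.0
    return z


FAULTS = [
    Fault("nominal"),
    Fault("increased payload", extra_mass=0.5),
    Fault("rotor failure", thrust_scale=0.4),
    Fault("altimeter bias", sensor_bias=2.0),
]

for fault in FAULTS:
    z_final = simulate_altitude_hold(fault)
    status = "CRASH" if z_final == 0.0 else f"holds {z_final:.2f} m"
    print(f"{fault.name:>18}: {status}")
```

In this toy setup the extra payload and the biased altimeter only shift the hover altitude, while the thrust loss leaves too little lift to carry the vehicle, so it ends on the ground. A real test campaign would run the same kind of sweep against a far richer plant model.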

UAS Simulation Workflow

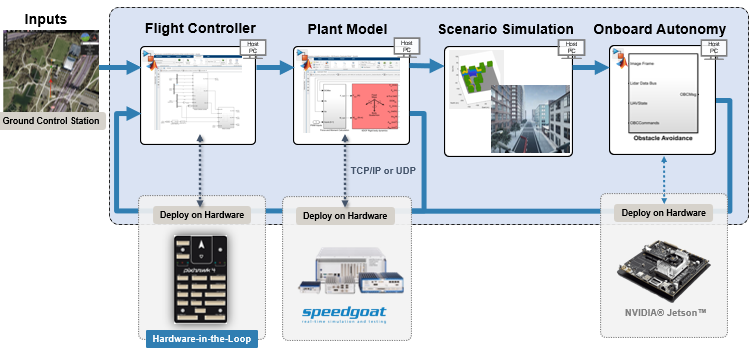

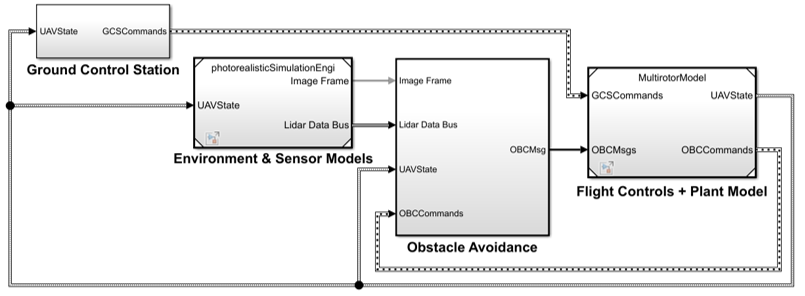

The simulation workflow starts with designing the system, which includes modeling the flight dynamics, controller, and autonomy algorithms. This iterative process requires a simulation environment and a connection to a ground control station to get real-world data. Simulated sensors such as a camera or lidar may be needed for specific applications, and photorealistic scenarios give those simulated sensors environment data to read. And of course, they provide pretty visualizations for cross-functional teams to share the expected outcomes. Ultimately, we want to reach a point where we can deploy the flight controller and autonomy algorithms on the drone.

What are UAV Plant Models?

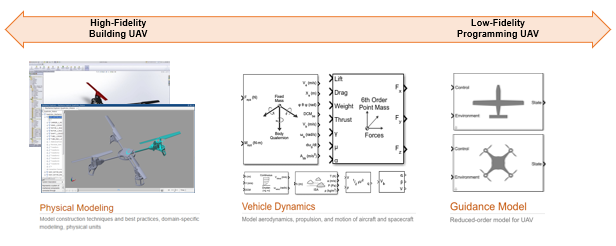

The simulations are only as good as the models, which should represent the real-life drone hardware. The UAV plant model (the term comes from control theory) should be verified against the hardware and tuned through controller testing and plant verification.

Simulations can (almost) accurately represent the real hardware. Visualizing the behavior of the UAV plant model and flight controller in a virtual scenario is crucial for designing and testing drone autopilots. The simulations can also be used to verify and validate autonomy algorithms, such as obstacle avoidance.

The UAV plant model can be designed at different fidelity levels depending on where we are in the development phase. A low-fidelity guidance model is great for rapidly prototyping autonomy algorithms and testing their behavior in simulation, whereas a high-fidelity physics-based model includes the UAV dynamics as well as real-world parameters.
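
As a rough illustration of what "low fidelity" can mean, the Python sketch below treats the vehicle as a planar point mass whose speed and heading follow their commands through a first-order lag. This is my own simplified stand-in, not the guidance model block from any toolbox; the time constant `tau` and the function names are assumptions.

```python
import math


def guidance_step(state, cmd_speed, cmd_heading, dt, tau=0.5):
    """Advance a planar point-mass guidance model by one time step.

    state is (x, y, speed, heading). Speed and heading follow their commands
    through a first-order lag with time constant tau (a hypothetical value).
    Heading wrap-around is ignored to keep the sketch short.
    """
    x, y, speed, heading = state
    speed += (cmd_speed - speed) * dt / tau
    heading += (cmd_heading - heading) * dt / tau
    x += speed * math.cos(heading) * dt
    y += speed * math.sin(heading) * dt
    return (x, y, speed, heading)


def fly(cmd_speed, cmd_heading, t_end=10.0, dt=0.01):
    """Simulate from rest at the origin and return the final state."""
    state = (0.0, 0.0, 0.0, 0.0)
    for _ in range(int(t_end / dt)):
        state = guidance_step(state, cmd_speed, cmd_heading, dt)
    return state


x, y, speed, heading = fly(cmd_speed=5.0, cmd_heading=0.0)
print(f"x={x:.1f} m, y={y:.1f} m, speed={speed:.2f} m/s")
```

A model like this has no rotor dynamics, aerodynamics, or mass properties, which is exactly why it runs fast enough for quick iteration on path planning logic. Those details belong in the high-fidelity model.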

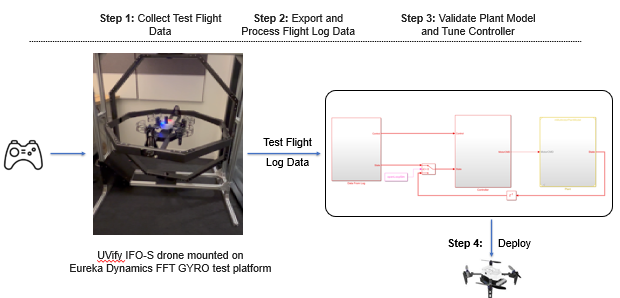

Now, you may have an expensive drone or aircraft that needs to be tested under various conditions. Simulating that drone requires a UAV plant model built from its real-world parameters. Plant identification is the process that lets you do this. This example in the UAV Toolbox documentation walks you through that process using a UVify IFO-S drone mounted on a Eureka Dynamics FFT GYRO test platform.
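
The core idea behind plant identification can be shown with a much smaller problem than the documentation example. The Python sketch below fits a single parameter, a rotor thrust coefficient k in the common approximation thrust = k·ω², by least squares. It is not the procedure used in that example; the data are synthetic, generated inside the script from an assumed "true" coefficient plus a small made-up disturbance.

```python
def fit_thrust_coefficient(rotor_speeds, thrusts):
    """Least-squares estimate of k in the model thrust = k * omega**2.

    Minimizing sum((T_i - k * w_i**2)**2) over k gives the closed form
    k = sum(T_i * w_i**2) / sum(w_i**4).
    """
    num = sum(t * w ** 2 for w, t in zip(rotor_speeds, thrusts))
    den = sum(w ** 4 for w in rotor_speeds)
    return num / den


# Synthetic "logged" data: an assumed true coefficient plus a small,
# made-up measurement disturbance. No real hardware data is used here.
K_TRUE = 1.2e-5                              # N/(rad/s)^2, hypothetical
rotor_speeds = [200.0, 400.0, 600.0, 800.0]  # rad/s
disturbance = [0.01, -0.01, 0.01, -0.01]     # N
thrusts = [K_TRUE * w ** 2 + n for w, n in zip(rotor_speeds, disturbance)]

k_est = fit_thrust_coefficient(rotor_speeds, thrusts)
print(f"estimated k = {k_est:.4e} (assumed true value {K_TRUE:.4e})")
```

Identifying a full plant follows the same pattern at larger scale: record inputs and outputs on the test platform, pick a model structure, and estimate the parameters that make the model reproduce the recorded response.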

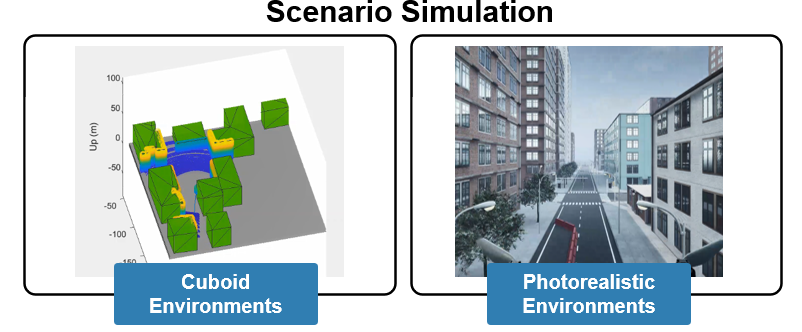

Scenario Simulation

We can enhance the simulations using different virtual scenarios, including cuboid-based environments and photorealistic scenes. We can import different types of vehicle models, such as fixed-wing or multirotor UAS, and test different sensor models so that the vehicle detects the objects within the configured scene. A photorealistic scene also makes it possible to train AI algorithms on data from the simulated world. You may be wondering whether there is a way to bring all these components (plant model, controller, scenarios) together. Model-Based Design can integrate these different components in a cohesive way.
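
To give a feel for how a simulated range sensor can detect an obstacle in a cuboid-based scene, here is a small Python sketch using the standard slab method for intersecting a ray with an axis-aligned box. It is a generic geometry routine, not the implementation of any toolbox sensor model, and the scene coordinates are invented for the example.

```python
import math


def ray_box_distance(origin, direction, box_min, box_max):
    """Distance along a ray to an axis-aligned cuboid, or None on a miss.

    direction is assumed to be a unit vector, so the returned parameter is a
    distance in the same units as the scene. Returns 0.0 if the origin is
    already inside the cuboid.
    """
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this pair of faces: it must start between them.
            if o < lo or o > hi:
                return None
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        if t1 > t2:
            t1, t2 = t2, t1
        t_near = max(t_near, t1)
        t_far = min(t_far, t2)
        if t_near > t_far:
            return None
    return t_near


# Hypothetical scene: a building 10 m ahead of a UAV flying at 5 m altitude.
building_min = (10.0, -1.0, 0.0)
building_max = (12.0, 1.0, 10.0)
uav_position = (0.0, 0.0, 5.0)

print(ray_box_distance(uav_position, (1.0, 0.0, 0.0), building_min, building_max))
print(ray_box_distance(uav_position, (0.0, 1.0, 0.0), building_min, building_max))
```

The first ray points straight at the building and returns a range; the second points sideways and misses. A lidar model repeats this kind of query for many beam directions and many cuboids per scan.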

Deployment with SITL/HITL workflows

Next, you go from the simulation phase to the deployment phase of the project. Before deploying the updated controller to the autopilot, you can verify the accuracy of the controller by running it in software-in-the-loop mode. In this mode, the controller is deployed as an executable on the host machine.
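
One way to think about "verifying the accuracy of the controller" is as a back-to-back test: feed the same inputs to the reference design and to the deployed version, then check that the outputs agree within a tolerance. The Python sketch below imitates that idea only. The `pd_deployed` function is a stand-in that mimics generated code by rounding to single precision; it is not how Simulink runs software-in-the-loop, and the gains, test vectors, and tolerance are assumptions.

```python
import struct


def to_float32(x):
    """Round a Python float to the nearest single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def pd_reference(error, rate, kp=8.0, kd=5.0):
    """Reference PD law evaluated in double precision."""
    return kp * error - kd * rate


def pd_deployed(error, rate, kp=8.0, kd=5.0):
    """Stand-in for the deployed controller: same law in single precision."""
    e, r = to_float32(error), to_float32(rate)
    return to_float32(to_float32(kp * e) - to_float32(kd * r))


def back_to_back(test_vectors, tol=1e-4):
    """Return the worst output mismatch and whether it is within tol."""
    worst = max(abs(pd_reference(e, r) - pd_deployed(e, r))
                for e, r in test_vectors)
    return worst, worst <= tol


# Hypothetical test vectors: (altitude error in m, climb rate in m/s).
vectors = [(0.0, 0.0), (1.5, -0.3), (-2.25, 0.7), (0.1, 0.05)]
worst, ok = back_to_back(vectors)
print(f"worst mismatch = {worst:.2e}, within tolerance: {ok}")
```

The tolerance matters as much as the comparison itself: small numerical differences between the design and the executable are expected, so the test should fail only when the mismatch is large enough to change vehicle behavior.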

Further, you can deploy the generated code onto the autopilot hardware (Pixhawk) for hardware-in-the-loop testing. Here we bring the actuators, sensors, and other drone peripherals into the loop with the simulation models in MATLAB and Simulink. By simulation models, I mean the plant model that represents the drone, which is still running on the host machine. As a next step, we can also deploy the plant model on a high-speed computing platform such as Speedgoat for faster simulations.

After verifying the controller accuracy in hardware-in-the-loop mode, we can test it with the actual drone in a controlled lab setting. Once we are satisfied with the controller performance, we can also deploy the autonomy algorithms on a compute board such as NVIDIA Jetson TX2. This lets us test, on the drone hardware, the detect-and-avoid algorithms that we prototyped and simulated within the photorealistic scenes.

UAV Inflight Failure Recovery

Even after going through so many steps before deploying a UAS, failures can occur. To handle them, we need fail-safe algorithms designed and deployed on the drone. This example shows how to use Control System Tuner to tune the fixed-structure PID controllers of a multicopter for nominal flight conditions and fault conditions. It shows how to use a gain-scheduled approach to recover from a single rotor failure and land the UAV.
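
The gain-scheduling idea itself is simple to sketch: keep one set of PID gains per flight condition and switch between them when a fault is detected. The Python snippet below shows only that switching structure. The gain values and mode names are hypothetical, and it does not reproduce the tuning done with Control System Tuner in the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gains:
    kp: float
    ki: float
    kd: float


# Hypothetical gain sets; real values would come from a tuning step.
SCHEDULE = {
    "nominal": Gains(kp=4.0, ki=1.0, kd=0.5),
    "rotor_fault": Gains(kp=2.0, ki=0.5, kd=1.0),
}


class ScheduledPID:
    """PID controller whose gains are selected by the current flight mode."""

    def __init__(self, schedule, dt):
        self.schedule = schedule
        self.dt = dt
        self.integral = 0.0
        self.prev_error = 0.0

    def step(self, error, mode):
        gains = self.schedule[mode]
        self.integral += error * self.dt
        derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return (gains.kp * error
                + gains.ki * self.integral
                + gains.kd * derivative)


pid = ScheduledPID(SCHEDULE, dt=0.01)
u_nominal = pid.step(error=1.0, mode="nominal")
u_fault = pid.step(error=1.0, mode="rotor_fault")  # fault flagged by a detector
print(f"nominal command: {u_nominal:.2f}, fault command: {u_fault:.2f}")
```

Note that the integrator and previous error carry over across the switch, so the command does not reset when the mode changes. How to detect the fault and how to blend between gain sets are separate design questions that this sketch leaves out.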

Summary

We covered a lot of topics in this blog! I would love to hear whether you’d like to see separate blogs elaborating on the individual sections. Let me know what drone applications or algorithms you are working on.

Watch this webinar to learn more on this topic – Simulate and Deploy UAV Applications with SIL and HIL Workflows.
