Passing the Test

Last time we saw that the new TAP version 13 YAML output can provide a richer CI system experience because the diagnostics are in a standard structured format that can be read by a machine (the CI system) and presented to a human (you) much more cleanly.

One thing I didn't mention is that the TAPPlugin (along with several other plugins) now also supports passing diagnostics! It's as easy as an additional Name/Value pair when creating the plugin:

    import matlab.unittest.TestRunner;
    import matlab.unittest.plugins.TAPPlugin;
    import matlab.unittest.plugins.ToFile;

    try
        suite = testsuite('unittest');
        runner = TestRunner.withTextOutput('Verbosity',3);

        % Add the TAP plugin, including diagnostics from passing qualifications.
        % The Name/Value pair belongs to producingVersion13, not to addPlugin.
        tapFile = fullfile(getenv('WORKSPACE'), 'testResults.tap');
        runner.addPlugin(TAPPlugin.producingVersion13(ToFile(tapFile), ...
            'IncludingPassingDiagnostics',true));

        results = runner.run(suite)
    catch e
        % Print the full error report and signal failure to the CI system
        disp(getReport(e,'extended'));
        exit(1);
    end
    exit;

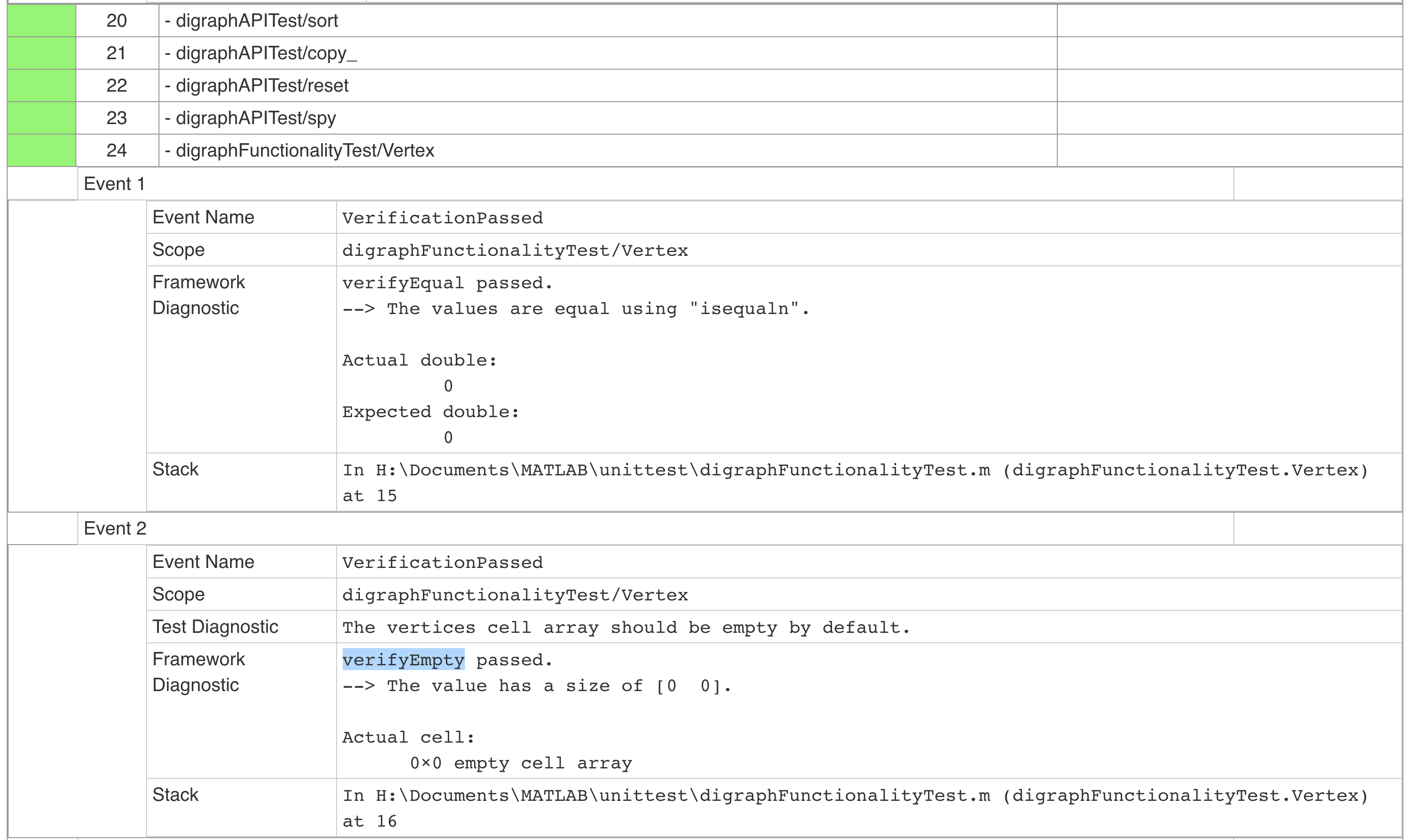

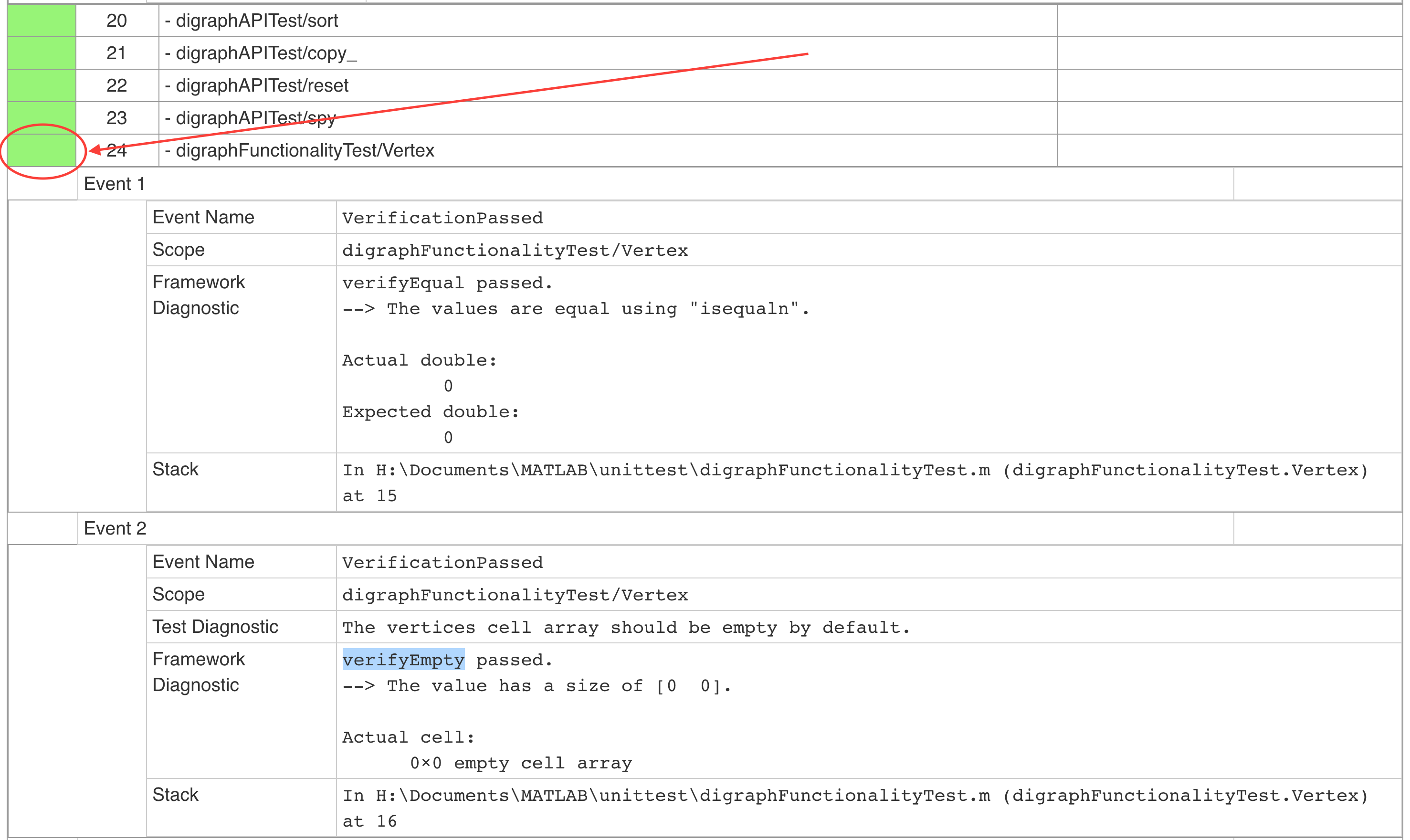

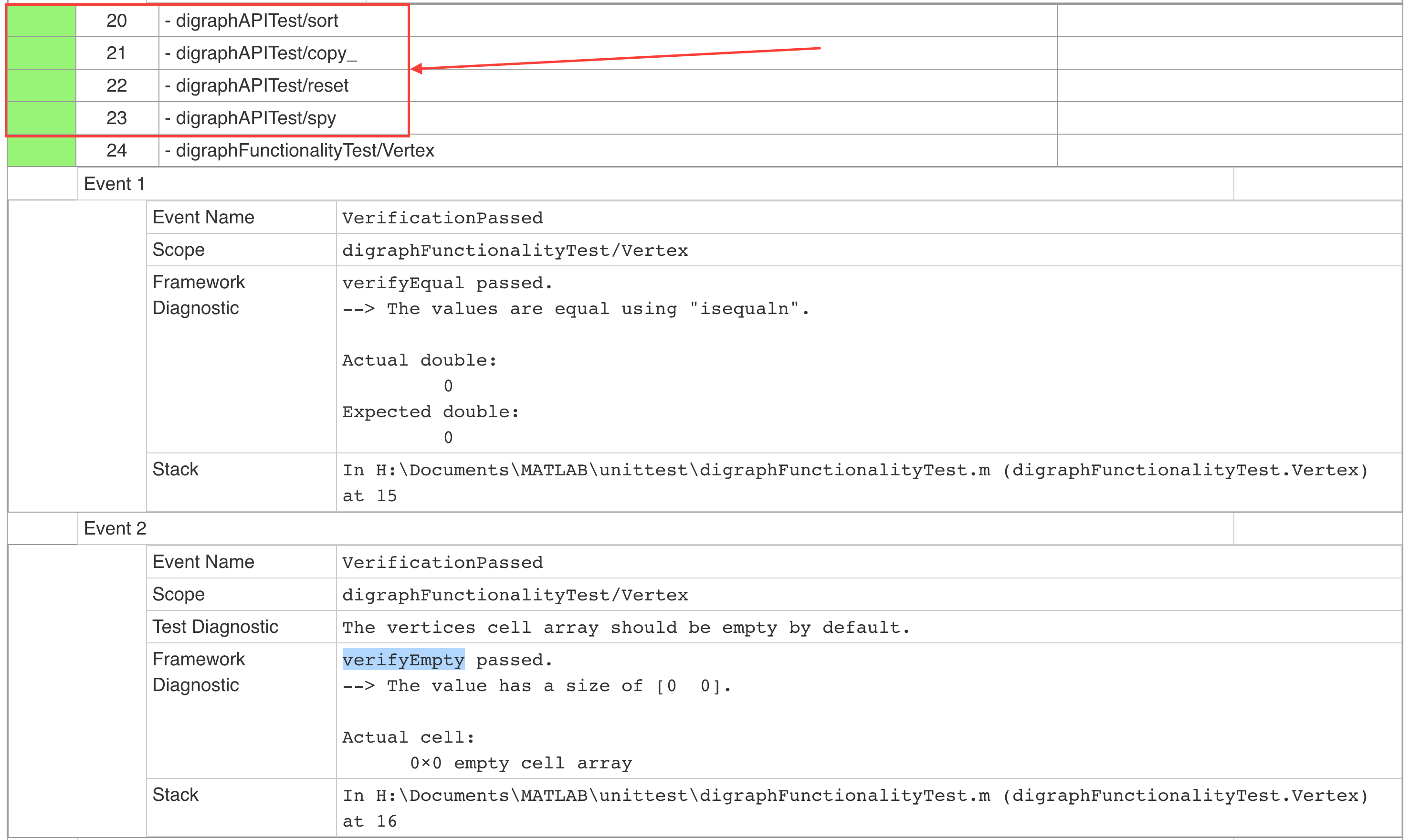

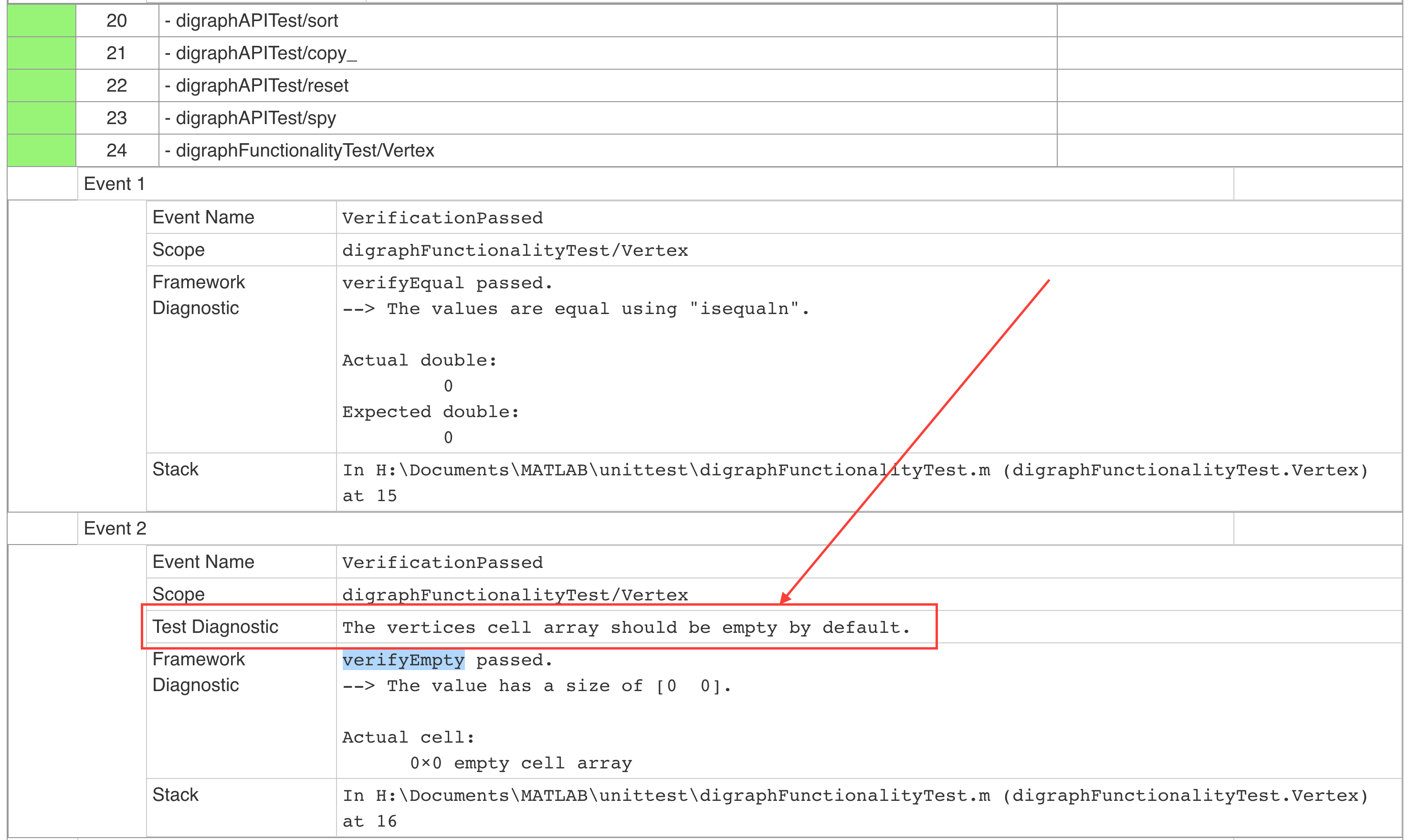

With that simple change, the output now includes the diagnostics from passing qualifications as well as those from failing qualifications. All of this information can now be stored in your Jenkins logs. Take a look:

A couple of things to note right off the bat. First, we can see that the tests I've zoomed in on here are passing. How do we know that without digging into the text? The colors tell us: look for the green!

Second, we can actually see that some of these tests passed without any qualifications at all. Since we see no passing diagnostics, we can infer that there was no call to verifyEqual or assertTrue or anything. Is this expected? Not sure. There are definitely times when a test does not need to perform any verification step, and just executing the code correctly is all the verification needed, but if I were a bettin' man I'd bet that most of the time there is an appropriate verification that can and should be done. This view makes such things clearer.
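To make the distinction concrete, here is a minimal sketch of the two kinds of test. The class name SmokeTest and the use of magic(4) as the code under test are just assumptions for illustration. The first method produces no passing diagnostics because it never calls a qualification, while the second one does:

```matlab
classdef SmokeTest < matlab.unittest.TestCase
    % Hypothetical test class; magic(4) stands in for real code under test.
    methods (Test)
        function runsWithoutError(testCase) %#ok<MANU>
            % No qualification: the test passes as long as nothing errors,
            % so there is nothing to show in the passing diagnostics.
            magic(4);
        end

        function columnsHaveExpectedSum(testCase)
            % One qualification: this shows up as a passing diagnostic.
            testCase.verifyEqual(sum(magic(4)), [34 34 34 34], ...
                'Each column of magic(4) should sum to 34.');
        end
    end
end
```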

Why might we want to include passing diagnostics? Usually we are most concerned with the test failures, and we don't really need any insight into passing qualifications. While this is definitely true, including the passing diagnostics may prove to be desirable in a number of ways:

Diagnostics as proof - Showing the passing diagnostics gives more information to the reader, even if that is simply the test name. When you are called upon to prove the behavior of your software at any point in time (or at any revision in your SCM system), keeping a history of your test runs and how they passed (as opposed to merely that they passed) may prove valuable.

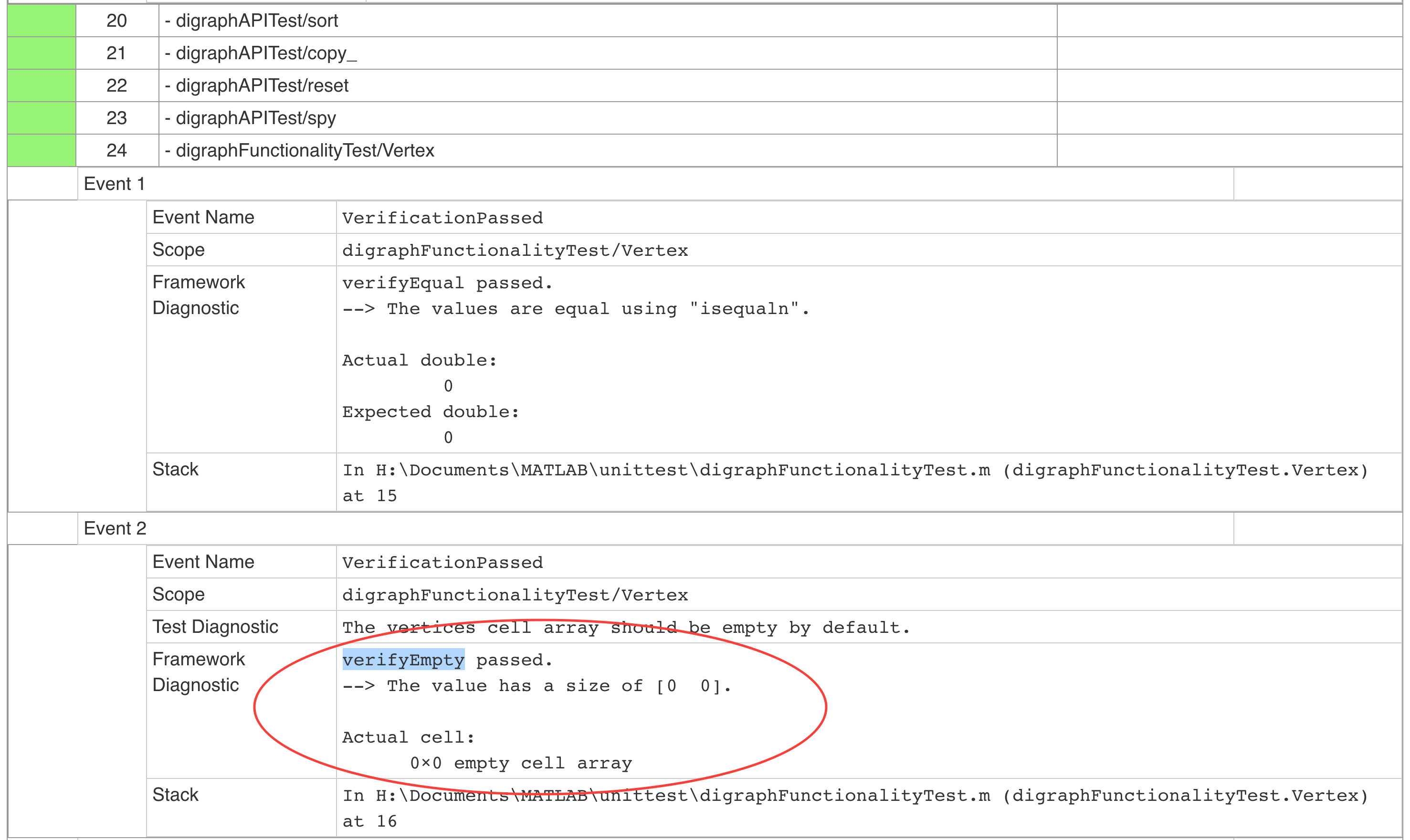

Diagnostics to find root causes - Unfortunately, test suites aren't perfect. They are very good defenses against the introduction of bugs, but sometimes bugs can slip past their fortifications. However, when that happens you can look back into previously passing results and possibly gain insight into why the test suite didn't catch the bug. For example, here the test passes the verifyEmpty call, but if the correct behavior called for an empty double of size 1x0, these diagnostics would show that it was actually a 0x0 cell array, which was unexpected and would explain why the tests failed to catch the introduction of the bug.
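Here is a rough sketch of that situation that you can try at the command line. The variable actual is a stand-in I made up for whatever the code under test returned. It shows why verifyEmpty lets the 0x0 cell array through, and how a stricter qualification such as verifyEqual would have caught it:

```matlab
import matlab.unittest.TestCase;

% An interactive TestCase prints diagnostics directly to the Command Window
testCase = TestCase.forInteractiveUse;

% Stand-in for the value returned by the code under test
actual = {};

% Passes: verifyEmpty only checks that the value is empty,
% so a 0x0 cell array is accepted just like a 1x0 double would be.
testCase.verifyEmpty(actual, 'The result should be empty.');

% Fails: verifyEqual also compares class and size,
% so a 0x0 cell array does not match a 1x0 double.
testCase.verifyEqual(actual, zeros(1,0), ...
    'The result should be a 1x0 empty double.');
```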

Diagnostics as specification - Finally, including the passing diagnostics can provide self-documenting test intent. This is particularly true if you leverage test diagnostics as the last input argument to your verification. The test writer can provide more context on the expected behavior of the test in the language of the domain, which can therefore act as a specification when combined with such reports. This is an important and oft-misunderstood point. In order to support the display of passing diagnostics, when writing tests we should phrase the diagnostics we supply in a way that does not depend on whether the test passed or failed. A diagnostic should simply state the expected behavior instead. You can see in this example that:

The vertices cell array should be empty by default.

is much better than:

The vertices cell array was not empty by default.

A common mistake is to assume these descriptions only ever apply to failures when in fact they apply to both passing and failing conditions.
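As a minimal sketch of what this looks like in a test, the diagnostic is passed as the last argument to the qualification. The class name ShapeTest and the struct standing in for the object under test are assumptions for illustration only:

```matlab
classdef ShapeTest < matlab.unittest.TestCase
    % Hypothetical test class; the struct below stands in for a real object.
    methods (Test)
        function verticesEmptyByDefault(testCase)
            % Stand-in for constructing the object under test
            shape = struct('Vertices', {{}});

            % The diagnostic states the expected behavior, so it reads
            % correctly whether the qualification passes or fails.
            testCase.verifyEmpty(shape.Vertices, ...
                'The vertices cell array should be empty by default.');
        end
    end
end
```

Because the message describes what should happen rather than what went wrong, it makes sense in a report of passing results too.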

What are the downsides of leveraging passing diagnostics? Really it boils down to performance and verbosity. Not everyone will want to wade through all the passing diagnostics to analyze the failures, and not everyone will want to incur the extra time/space performance overhead that will come with including them. What about you? Do you find you need to analyze in more depth what happens in passing tests?
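If you want the option without paying for it on every run, one possibility is to make it a switch. This is only a sketch: INCLUDE_PASSING_DIAGNOSTICS is a hypothetical environment variable that your CI job would have to set, not something MATLAB or Jenkins defines:

```matlab
import matlab.unittest.TestRunner;
import matlab.unittest.plugins.TAPPlugin;
import matlab.unittest.plugins.ToFile;

% Hypothetical switch set by the CI job; anything other than 'true' means off
includePassing = strcmpi(getenv('INCLUDE_PASSING_DIAGNOSTICS'), 'true');

runner = TestRunner.withTextOutput;

% Only include the passing diagnostics when the job asks for them
tapFile = fullfile(getenv('WORKSPACE'), 'testResults.tap');
runner.addPlugin(TAPPlugin.producingVersion13(ToFile(tapFile), ...
    'IncludingPassingDiagnostics', includePassing));
```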

