Coming around full Circle

CI services in the cloud. They are beautiful. All you need to do is drop a little bit o' yaml in your repository and a whole world of automated build and test infrastructure starts creating some good clean fun with your commits. The kind of good clean fun that actually makes your code cleaner. You don't need to set up your machines, you don't need to worry about the power button on your CI server (or your agents for that matter). It's all just done for you and it's lovely.

A little while ago we showed how MATLAB, Simulink, and most toolboxes are now supported on a variety of cloud CI services for public projects. Today, I'd like to highlight one of these services, CircleCI, in a little more detail. CircleCI is a popular CI platform that supports repositories on both GitHub and Bitbucket.

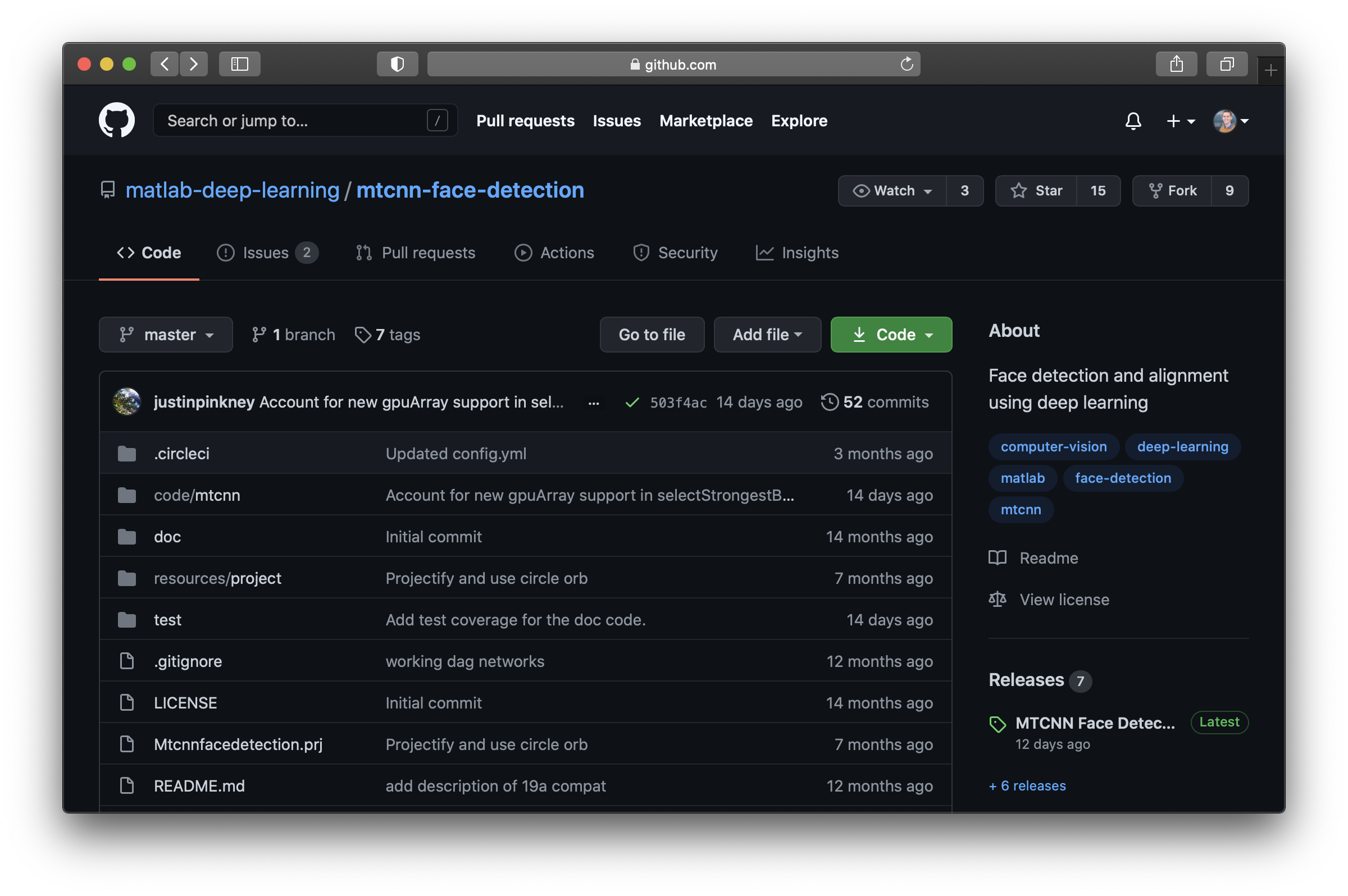

To show you how this can work with MATLAB and Simulink code, let me remind you about our deep learning repository highlighting face detection using a multi-task cascaded convolutional neural network (MTCNN). This is a nice project that we can use to show some of the benefits of a platform like CircleCI.

First, let's see how easy it is to run the tests this repo specifies. This repo uses a MATLAB project to label all the tests to be run. Assuming the repository is already set up to build with CircleCI, you can immediately reap the benefits of an orb that we have developed to streamline your MATLAB and Simulink builds. What are orbs, you say? They are sharable nuggets of CI config. In our case, the orb defines how to get MATLAB onto CircleCI cloud agents, how to run arbitrary MATLAB commands, and how to easily run tests with some of the most common options.
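To make the "run arbitrary MATLAB commands" part a bit more concrete, here is a minimal sketch of a job that runs a snippet of MATLAB code instead of using the run-tests element. It assumes the orb exposes this as a `run-command` element that takes a `command` parameter (double-check the orb's documentation for the version you pin), and the job name `run-matlab-command` is hypothetical, purely for illustration.

```yaml
version: 2.1

orbs:
  matlab: mathworks/matlab@0.4.0

jobs:
  # Hypothetical job name, for illustration only
  run-matlab-command:
    machine:
      image: ubuntu-2004:202101-01
    steps:
      - checkout
      - matlab/install
      # Assumption: the orb provides a run-command element whose "command"
      # parameter holds the MATLAB code to execute on the agent
      - matlab/run-command:
          command: results = runtests('IncludeSubfolders', true); assertSuccess(results);

workflows:
  test:
    jobs:
      - run-matlab-command
```

Since `assertSuccess` throws an error when any test fails, MATLAB should exit with an error and fail the step, which is what you want in CI. The run-tests element saves you from writing this kind of code by hand and adds options for producing artifacts.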

For example, let's say we start by running our tests and producing a couple of popular artifacts: a JUnit-style XML output and a PDF test report. The former integrates with CircleCI's test results views, and the latter is useful for archiving and carries rich MATLAB- and Simulink-specific reporting information (like we see here, here, and here). First, we add some version information to a CircleCI YAML config file: both the version of the CircleCI configuration spec we are using and the version of our orb. We will use the most current versions of both the spec and the orb.

Then, you set up the executor type, which is the machine or image that you will use to run your build. In this case we will use a recent version of Ubuntu. Note that if you are using a Linux cloud agent machine image in a public project, you can set up MATLAB quickly and easily using the install element that you get with our orb.

Once MATLAB is squared away, you can define a job, to be referenced in a workflow, that produces our JUnit and PDF artifacts from the test run. To do that, use the run-tests element and simply tell the orb where to create the artifacts.

As part of the same job, we then store these artifacts using the built-in store_test_results element (to process the JUnit-style XML output) and the store_artifacts element to save both the PDF report and the XML for safekeeping.

Finally, we reference the job we've defined in a workflow, and we are all good. Here is what the complete config looks like:

```yaml
version: 2.1

orbs:
  matlab: mathworks/matlab@0.4.0

jobs:
  run-all-dem-magnificient-matlab-tests:
    machine:
      image: ubuntu-2004:202101-01
    steps:
      - checkout
      - matlab/install
      - matlab/run-tests:
          test-results-junit: artifacts/junit/testResults.xml
          test-results-pdf: artifacts/pdf/testResults.pdf
      - store_test_results:
          path: artifacts/junit
      - store_artifacts:
          path: artifacts

workflows:
  test:
    jobs:
      - run-all-dem-magnificient-matlab-tests
```

Alright, now let's run a build with that config and see how we're lookin':

Note that I sped up the video of this build for brevity. You can see, however, that CircleCI spins up a build agent, sets up MATLAB on that agent, and runs the tests, which pass, all in a matter of minutes. What's more, it saved us some artifacts! Let's check out the PDF report:

Alright, there are a couple of cool things here:

- Can we say artifacts? It's pretty easy to store record-keeping artifacts like PDF results in a streamlined way.

- These reports can contain additional richness like images, plots and visualizations, screenshots, and if you are using Simulink Test, signal level comparisons and visualizations. Dope. In this case we are including images generated from the example doc code, which is tested to ensure the examples execute without error, and the resulting figures are logged using a FigureDiagnostic (similar to what we did in this post).

- Look at 'dem filtered tests! Alright, while it's not super cool that some of our tests are filtered out, it is at least nice that we can see this so cleanly in the report. Digging into the diagnostics, we see that these tests are filtered out because they require a GPU to run.

Ah well, on that last point, we can't get too picky, right? After all, we are leveraging a cloud service that handles all of our machine configuration for us, so we shouldn't expect to be able to run tests that need GPUs in the cloud. I guess we will just have to remember to run those tests from time to time, offline, on our own machines with GPUs.

Wrong!

As it turns out, CircleCI has a plan that supports machines with GPU hardware! Isn't that nice? Trying that out here on our build simply means changing our yaml snippet from this:

```yaml
machine:
  image: ubuntu-2004:202101-01
```

...to something like this:

```yaml
resource_class: gpu.nvidia.small
machine:
  image: ubuntu-1604-cuda-11.1:202012-01
```
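If you'd rather add GPU coverage than swap the existing job out, one option is to run both jobs in the same workflow. The sketch below assumes your CircleCI plan has GPU resource classes enabled; the second job name, `run-gpu-matlab-tests`, is hypothetical, and a YAML anchor (`&test-steps`) lets both jobs share the same steps instead of copying them.

```yaml
version: 2.1

orbs:
  matlab: mathworks/matlab@0.4.0

jobs:
  # Original CPU job; GPU-only tests are filtered out here
  run-all-dem-magnificient-matlab-tests:
    machine:
      image: ubuntu-2004:202101-01
    # Anchor the step list so the GPU job can reuse it
    steps: &test-steps
      - checkout
      - matlab/install
      - matlab/run-tests:
          test-results-junit: artifacts/junit/testResults.xml
          test-results-pdf: artifacts/pdf/testResults.pdf
      - store_test_results:
          path: artifacts/junit
      - store_artifacts:
          path: artifacts

  # Hypothetical GPU job; requires a plan with GPU resource classes
  run-gpu-matlab-tests:
    resource_class: gpu.nvidia.small
    machine:
      image: ubuntu-1604-cuda-11.1:202012-01
    # Alias: same steps as the CPU job
    steps: *test-steps

workflows:
  test:
    jobs:
      - run-all-dem-magnificient-matlab-tests
      - run-gpu-matlab-tests
```

Jobs listed in a workflow without `requires` run concurrently, so the CPU and GPU results show up side by side. Since GPU resource classes typically consume more credits, you might also restrict the GPU job to specific branches using workflow filters.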

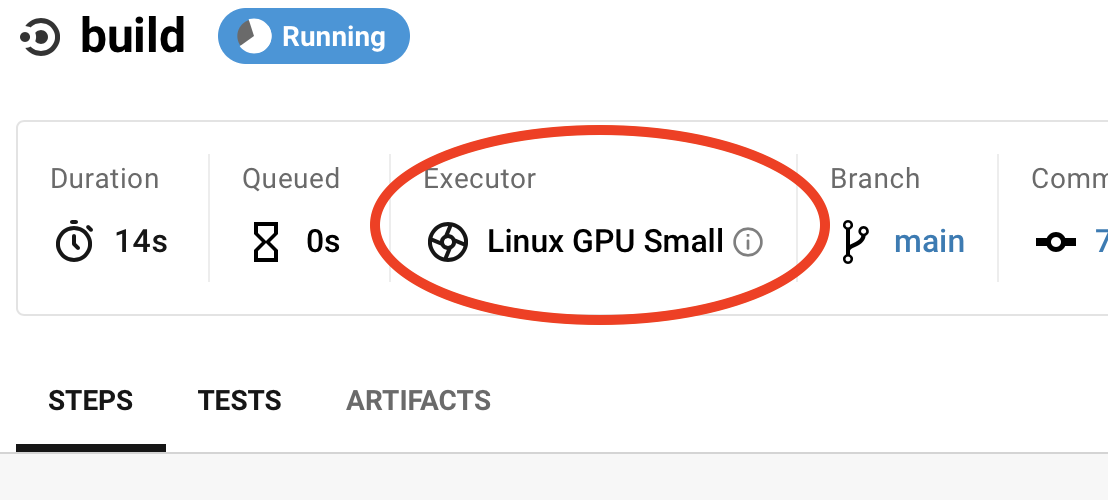

Now if your CircleCI plan has GPUs enabled, with that small snippet you can see our build is now using a machine with a GPU:

However, we have a problem!

Looks like we have some test failures. That was unexpected (really, it was unexpected as I was writing this blog!). It turns out that when you don't test things in automation, test failures creep in. That's why having all your tests automated under CI is really a must-have. In this case, this deep learning repository began failing in R2020b with the introduction of gpuArray support for selectStrongestBbox from the Computer Vision Toolbox. Long story short, it was an easy fix: we simply needed to move a gather call on the gpuArray to account for the difference in behavior relative to earlier releases. However, detecting this bug early at all required running on GPU hardware, which makes GPU support a great feature of the CircleCI platform. Also, take note of the clean display of test failures, which we get because we uploaded our JUnit-style XML results. Nice bonus.

I seem to remember hearing once or twice that GPUs can be otherwise useful in deep learning applications. Does that sound right? (grin) Just imagine what automated workflows you could build with the power of MATLAB and CircleCI.
