TL;DR: Too Long; Didn’t Run: Part 4 – Incremental Testing in CI

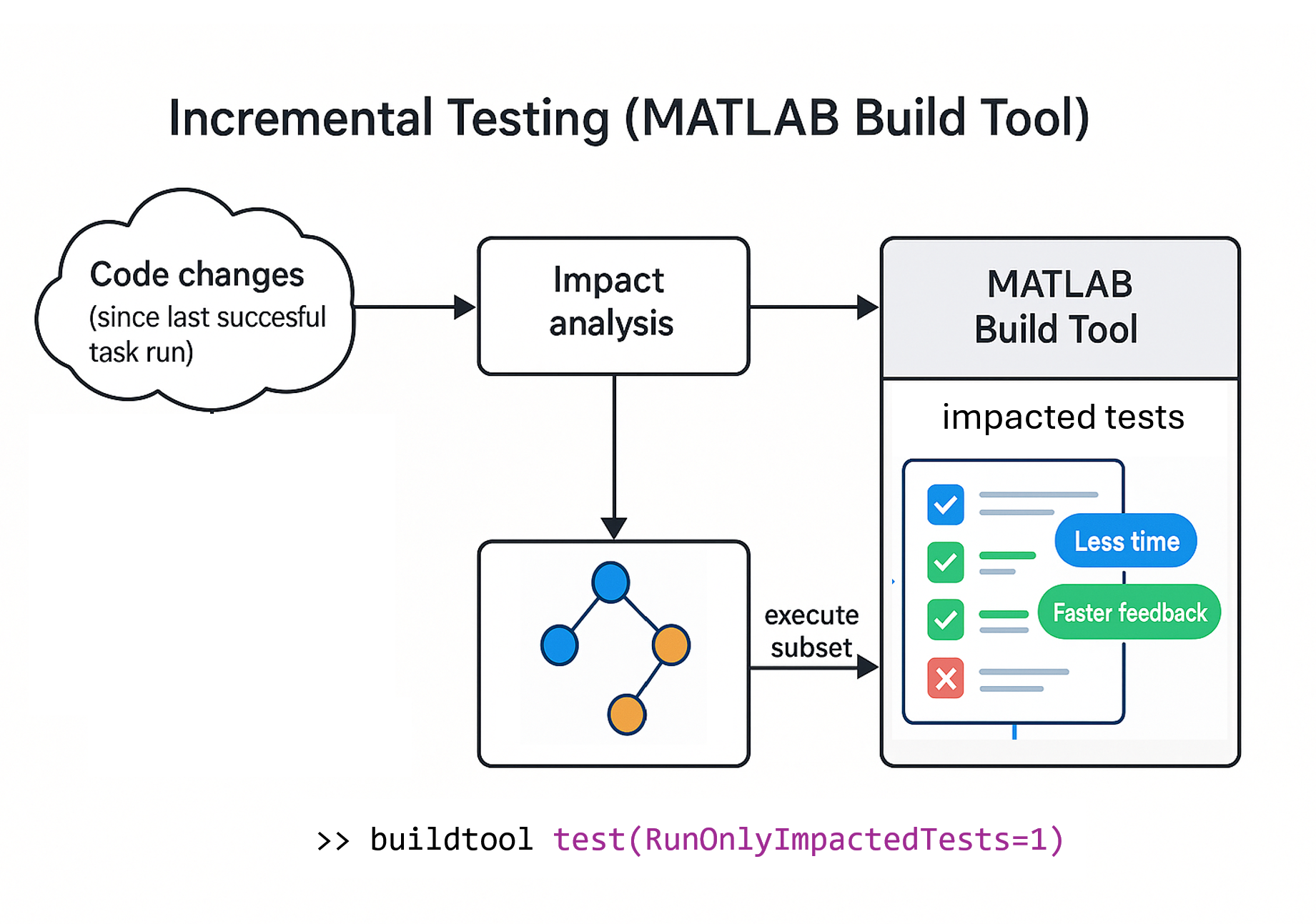

In the previous post we explored how to use the MATLAB build tool to run only impacted tests during local, iterative development cycles. In this post, we'll take that concept further and apply incremental testing in a continuous integration (CI) environment to speed up feedback and improve overall throughput.


Example Project: arithmetic

For this walkthrough, we'll use a simple example project, arithmetic, that contains:

- MATLAB functions for basic arithmetic operations.

- Unit tests for those functions.

Project Structure:

The toolbox folder holds the source code, while the tests folder contains tests for those functions.

Context

Whether (and when) you choose to run only impacted tests versus the full test suite depends on many factors, including:

- Project complexity

- Branching strategy

- Release cadence and workflow

- Team or organizational standards

This post does not prescribe a software qualification strategy. Instead, it shows an example of how to achieve incremental testing in CI using the build tool's test impact analysis.

For this example, we'll configure the build to run incremental tests on feature branches whenever a developer pushes changes. The same approach, however, can be adapted to other events such as pull requests, merges to main, or nightly builds.

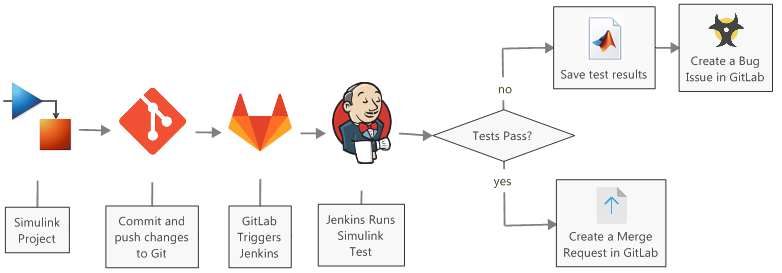

I'll demonstrate this example using GitHub Actions, but the pattern carries over to other CI systems such as GitLab CI, Azure DevOps, or Jenkins.

The Build File

The project's buildfile.m defines a build plan with a clean task and a test task. The test task runs the project's tests and writes the results to results/test-results.xml.

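```matlab
function plan = buildfile
import matlab.buildtool.tasks.*

% Create a plan from any task functions in this file (none here)
plan = buildplan(localfunctions);

% Built-in tasks: "clean" deletes task outputs and traces;
% "test" runs the tests and writes JUnit-style XML results
plan("clean") = CleanTask;
plan("test") = TestTask(SourceFiles="toolbox",TestResults="results/test-results.xml");

% Run the "test" task when buildtool is called without task names
plan.DefaultTasks = "test";
end
```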

Incremental testing is enabled by setting either the RunOnlyImpactedTests property of a TestTask instance or the RunOnlyImpactedTests task argument to true. In this example, we pass the task argument at runtime rather than baking the setting into the test task configuration.
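
For comparison, here is a sketch of the alternative: baking the setting into the build file. It assumes MATLAB R2025a or later (the release used by this post's workflow), where TestTask provides the RunOnlyImpactedTests property; everything else matches the project's build file.

```matlab
function plan = buildfile
% Variant of the example build file that always runs only impacted tests
import matlab.buildtool.tasks.*

plan = buildplan(localfunctions);

plan("clean") = CleanTask;
plan("test") = TestTask(SourceFiles="toolbox", ...
    TestResults="results/test-results.xml");

% Enable test impact analysis on the task itself instead of at runtime
plan("test").RunOnlyImpactedTests = true;

plan.DefaultTasks = "test";
end
```

With this variant, a plain buildtool test runs only impacted tests. Passing the task argument at runtime instead, as this post does, keeps the build file unchanged and leaves the choice to whoever invokes the build, whether a developer or a CI job.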

As discussed in the previous post, the build tool stores task traces in the .buildtool cache folder. A task trace is a MATLAB release-specific record of a task's inputs, outputs, actions and arguments from its last successful run.

By caching these task traces (along with task outputs such as the test results) in CI and restoring them in later runs, we enable the build tool to detect changes and run only impacted tests in CI workflows as well.
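
Because incremental runs are measured against these traces, deleting them resets the baseline. The local sketch below assumes that the clean task in buildfile.m (a CleanTask) deletes task outputs and traces, as described in the CleanTask documentation, and that it is run from the project root folder.

```matlab
% Delete the outputs and traces of the tasks in the plan,
% so the next test run has no baseline to compare against.
buildtool clean

% No traces exist, so all tests run; new traces are then
% recorded in the .buildtool folder.
buildtool test(RunOnlyImpactedTests=1) -verbosity 3

% Later incremental runs compare against the traces recorded above
% and run only the tests impacted by subsequent changes.
buildtool test(RunOnlyImpactedTests=1)
```

In CI, the cache restore step supplies that baseline: whatever traces the cache restores are what the next run compares against.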

CI Pipeline Configuration

The GitHub Actions workflow configuration is defined in .github/workflows/ci.yml:

```yaml
name: MATLAB Build

on:
  push:
    branches:
      - 'feature/*' # Only run on feature branches
  workflow_dispatch:

jobs:
  build:
    runs-on: ubuntu-latest

    steps:
      # Check out the repository
      - uses: actions/checkout@v4

      # Sets up MATLAB on a GitHub-hosted runner
      - name: Set up MATLAB
        uses: matlab-actions/setup-matlab@v2
        with:
          release: R2025a
          products: >
            MATLAB
            MATLAB_Test

      # Cache .buildtool and test results
      - name: Cache buildtool artifacts
        id: buildtool-cache
        uses: actions/cache@v4
        with:
          path: |
            .buildtool
            results
          key: ${{ runner.os }}-buildtool-cache-${{ github.ref_name }}

      # Run MATLAB build
      - name: Run build
        uses: matlab-actions/run-build@v2
        with:
          tasks: test(RunOnlyImpactedTests=1)
          build-options: -verbosity 3
```

The configuration has the following key steps:

- Set up MATLAB

Installs MATLAB R2025a, including MATLAB Test, using MathWorks' official matlab-actions/setup-matlab action. This demo uses GitHub-hosted runners.

- Set up caching

Caches and restores the .buildtool folder and the results folder. In this example, the cache key combines the runner OS and the branch name (e.g., Linux-buildtool-cache-feature/cache). See the usage documentation for the GitHub Actions cache action for details on how to use it and how key matching works.

- Run the MATLAB build

Uses matlab-actions/run-build to execute the test task with RunOnlyImpactedTests=1 (true), so that only impacted tests run.

First Run (No Cache)

Let's create a feature branch, feature/cache, from this blog's branch 2025-Test-Impact-Analysis-CI.

On the first pipeline run, there is no cache hit for the key Linux-buildtool-cache-feature/cache.

All tests run because no prior task traces exist.

The pipeline then saves a cache for the feature/cache branch for use in future runs.

Second Run: Modify h_my_add.m

Let's make a change.

- Clone the repository at branch 2025-Test-Impact-Analysis-CI.

- Run the build locally within MATLAB using buildtool. All the tests will run since there are no task traces yet.

Next, check out the feature/cache branch and modify h_my_add.m, for example by adding an arguments block:

```matlab
function out = h_my_add(in1, in2)
% This is a helper function to add 2 values
arguments
    in1 {mustBeNumeric}
    in2 {mustBeNumeric}
end
out = in1 + in2;
end
```
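
A quick Command Window check of the new validation might look like the following. It assumes the toolbox folder is on the MATLAB path.

```matlab
% Numeric inputs pass validation and are added as before
out = h_my_add(2, 3);
disp(out)   % displays 5

% A string input fails the mustBeNumeric validator, so MATLAB throws an error
try
    h_my_add("2", 3);
catch err
    disp(err.message)
end
```

The behavior for numeric inputs is unchanged, but the file's content has changed, which is what the build tool's test impact analysis reacts to.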

Verify locally with:

```matlab
>> buildtool test(RunOnlyImpactedTests=1) -verbosity 3
```

Output (excerpt):

Only 8 of 21 tests were selected to run: those impacted by the modified file. That's promising!

Now let's push the changes and inspect the CI pipeline.

The cache hits, confirming that the .buildtool and results folders were restored.

The build tool leverages the restored cache to run only impacted tests.

Third Run: Modify my_subtract.m

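Make another change, this time to my_subtract.m, then commit and push. The cache is restored again. Because actions/cache does not overwrite an existing cache entry when the primary key matches exactly, the restored traces still reflect the first run on this branch. As a result, only the tests impacted by both outstanding changes on the feature branch are executed.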

Wrapping Up

There you have it! This workflow demonstrates how to combine:

- The MATLAB build tool's test impact analysis, and

- Your CI platform's caching

... to achieve incremental testing in CI.

You can refine this pattern further, for example by:

- Adding cache restore keys to support fallback scenarios, such as restoring the default branch's cache when a new feature branch has no cache of its own.

- Adjusting cache keys for multi-platform or multi-branch setups.

- Deciding when to run incremental tests vs. full regression tests.
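
As one possible starting point for that last item, the sketch below lets the build file choose the mode from the branch name. It assumes GitHub Actions, which sets the GITHUB_REF_NAME environment variable on its runners; the feature/ prefix policy is purely illustrative. With this variant, the workflow would run plain test instead of passing the RunOnlyImpactedTests task argument.

```matlab
function plan = buildfile
% Hypothetical variant: run only impacted tests on feature branches and the
% full suite everywhere else (for example, on main or in nightly builds)
import matlab.buildtool.tasks.*

plan = buildplan(localfunctions);

plan("clean") = CleanTask;
plan("test") = TestTask(SourceFiles="toolbox", ...
    TestResults="results/test-results.xml");

% GITHUB_REF_NAME is set by GitHub Actions; when it is unset, getenv
% returns empty, so the full suite runs
branch = string(getenv("GITHUB_REF_NAME"));
plan("test").RunOnlyImpactedTests = startsWith(branch, "feature/");

plan.DefaultTasks = "test";
end
```

Keeping the policy in the build file means every CI event runs the same command, while the branch name decides how much to test.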

Would you run incremental testing in your CI pipeline? At what point in your release cycle do you feel most comfortable running only impacted tests?

We intend to keep investing in better incremental testing across both local development and CI workflows. Your feedback helps us focus on the improvements that matter most to you, so please leave your thoughts in the comments section below.

That’s a wrap (for now) on our series about Test Impact Analysis. Stay tuned: we have more software development topics in the pipeline, and we look forward to sharing them with you!
