Understanding pig emotions to improve animal welfare

Lately, artificial intelligence (AI) is being sold as the panacea for problem-solving. Can’t decide where to live? Ask AI. Which restaurant should you go to? Ask AI. Need lottery picks? Again, ask AI. (Yes, it reportedly worked!) There seems to be no shortage of ways AI can improve your life.

Artificial intelligence isn’t just transforming human lives; it’s making a real difference for animals as well. Today, AI-powered facial recognition helps reunite lost pets with their families, while smart monitoring systems keep wildlife and drivers safer at busy road crossings.

AI is also capable of improving the lives of farm animals. An international team of scientists developed an AI algorithm that decodes pig vocalizations to detect emotions, helping farmers identify when pigs are happy or stressed.

Pigs at a free-range farm.

According to Reuters, “European scientists have developed an AI algorithm capable of interpreting pig sounds, aiming to create a tool that can help farmers improve animal welfare.”

The study

By recording an impressive 7,414 sounds from 411 pigs as they played, competed for food, or spent time alone, the scientists were able to analyze which oinks and squeals signaled positive emotions, and which ones revealed stress or negative emotions.

First, each call was annotated with its context, meaning what the pig was experiencing when the call was recorded. Were the pigs playing or being fed? Were they isolated from the other animals? Next, each context was assigned an emotional valence, the emotional state the pig was likely in when the call was produced: negative, as in isolation or competing for space at the trough, or positive, as in social contact, nursing, or playing.
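The two-step annotation (context first, then valence derived from context) can be sketched as a simple lookup. This minimal Python sketch assumes a small set of hypothetical context labels based on the examples above. The study's own label names and its full list of contexts are not reproduced here.

```python
from dataclasses import dataclass

# Hypothetical context labels; the study's actual categories may differ.
CONTEXT_VALENCE = {
    "isolation": "negative",
    "trough_competition": "negative",
    "social_contact": "positive",
    "nursing": "positive",
    "play": "positive",
}


@dataclass
class Call:
    """One recorded vocalization and its annotations (illustrative fields)."""
    call_id: str
    pig_id: str
    context: str

    @property
    def valence(self) -> str:
        # Valence is derived from the annotated context, not from the sound itself.
        return CONTEXT_VALENCE[self.context]


calls = [
    Call("c1", "pig07", "play"),
    Call("c2", "pig07", "isolation"),
    Call("c3", "pig12", "nursing"),
]
for call in calls:
    print(call.call_id, call.context, call.valence)
```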

Then, the researchers used Audio Toolbox and Signal Analyzer to extract acoustic features, such as duration and frequency, from each call. They also computed a spectrogram of each call in MATLAB.
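To make this step concrete, here is a short, dependency-free Python sketch. It computes a magnitude spectrogram using a short-time Fourier transform with a Hann window, then derives two simple features from it: duration and the dominant (peak) frequency. The frame length, hop size, and synthetic 500 Hz test tone are assumptions for illustration. The study's actual feature set and spectrogram settings in MATLAB are not reproduced here.

```python
import cmath
import math


def hann(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * k / n) for k in range(n)]


def spectrogram(signal, frame_len=256, hop=128):
    """Magnitude STFT as a list of frames, each with frame_len // 2 + 1 bins."""
    window = hann(frame_len)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        seg = [signal[start + k] * window[k] for k in range(frame_len)]
        # Naive O(N^2) DFT over non-negative frequencies; real code would use an FFT.
        frames.append([
            abs(sum(seg[k] * cmath.exp(-2j * math.pi * b * k / frame_len)
                    for k in range(frame_len)))
            for b in range(frame_len // 2 + 1)
        ])
    return frames


def call_features(signal, fs, frame_len=256, hop=128):
    """Return (features, spectrogram): duration in s and peak frequency in Hz."""
    spec = spectrogram(signal, frame_len, hop)
    # Sum magnitude over time, then pick the strongest frequency bin.
    totals = [sum(frame[b] for frame in spec) for b in range(len(spec[0]))]
    peak_bin = max(range(len(totals)), key=totals.__getitem__)
    features = {
        "duration_s": len(signal) / fs,
        "peak_freq_hz": peak_bin * fs / frame_len,
    }
    return features, spec


fs = 8000  # sample rate in Hz (assumed)
tone = [math.sin(2 * math.pi * 500 * n / fs) for n in range(fs // 4)]  # 0.25 s at 500 Hz
features, spec = call_features(tone, fs)
print(features)
```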

Using these data, the researchers compared two classification methods: permuted discriminant function analysis (pDFA), a traditional statistical approach applied to the extracted acoustic features, and AI in the form of a convolutional neural network (CNN) applied to the spectrograms.
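The permutation idea behind pDFA can be sketched in Python. pDFA proper runs a discriminant function analysis and builds its null distribution by permuting labels in a way that respects the structure of the data (for example, several calls from the same pig). This minimal sketch substitutes a leave-one-out nearest-centroid classifier and a plain label shuffle. It therefore shows only the core logic: comparing an observed accuracy against accuracies obtained with randomized labels. The two-feature data are synthetic.

```python
import math
import random


def centroids(X, y):
    """Mean feature vector for each label."""
    sums, counts = {}, {}
    for x, label in zip(X, y):
        acc = sums.setdefault(label, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {lab: [v / counts[lab] for v in acc] for lab, acc in sums.items()}


def loo_accuracy(X, y):
    """Leave-one-out accuracy of a nearest-centroid classifier."""
    correct = 0
    for i in range(len(X)):
        cents = centroids(X[:i] + X[i + 1:], y[:i] + y[i + 1:])
        pred = min(cents, key=lambda lab: math.dist(X[i], cents[lab]))
        correct += pred == y[i]
    return correct / len(X)


def permutation_test(X, y, n_perm=200, seed=0):
    """Observed accuracy and a p-value from accuracies with shuffled labels."""
    rng = random.Random(seed)
    observed = loo_accuracy(X, y)
    hits = 0
    for _ in range(n_perm):
        shuffled = y[:]
        rng.shuffle(shuffled)
        if loo_accuracy(X, shuffled) >= observed:
            hits += 1
    return observed, (hits + 1) / (n_perm + 1)


# Synthetic, well-separated "acoustic features" for two valence classes.
data_rng = random.Random(1)
X = ([[data_rng.gauss(0, 1), data_rng.gauss(0, 1)] for _ in range(10)]
     + [[data_rng.gauss(5, 1), data_rng.gauss(5, 1)] for _ in range(10)])
y = ["negative"] * 10 + ["positive"] * 10
acc, p = permutation_test(X, y)
print(acc, p)
```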

AI outperforms the traditional statistical approach

“As an input to the neural network, spectrograms were computed from the pig vocalization audio recordings in MATLAB R2020b,” the study explained.

To bring this idea to life, the research team leveraged advanced tools from MathWorks. Assistant Professor Jeppe Have Rasmussen from the University of Copenhagen played a key role in implementing the technical approach. The process combined Computer Vision Toolbox for feature detection and Deep Learning Toolbox for training the AI models.

The backbone of the system was a ResNet-50 convolutional neural network, adapted through transfer learning. This choice allowed the researchers to build on a proven architecture while tailoring it to the unique challenge of interpreting pig vocalizations.
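Before a spectrogram can be fed to ResNet-50, it must match the network's input format. The standard pretrained ResNet-50 expects 224-by-224 images with three color channels. The following Python sketch shows one plausible conversion:

- decibel scaling
- rescaling to 0–255
- nearest-neighbor resizing
- copying the grayscale image into three channels

These steps are assumptions, since the article does not describe how the researchers mapped spectrograms to images.

```python
import math


def to_db(spec, floor=1e-10):
    """Convert magnitudes to decibels, clamping tiny values to avoid log(0)."""
    return [[20 * math.log10(max(v, floor)) for v in row] for row in spec]


def scale_to_255(img):
    """Min-max scale a 2-D list to integers in 0..255."""
    flat = [v for row in img for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0
    return [[round(255 * (v - lo) / span) for v in row] for row in img]


def resize_nearest(img, height, width):
    """Nearest-neighbor resize of a 2-D list."""
    h, w = len(img), len(img[0])
    return [[img[r * h // height][c * w // width] for c in range(width)]
            for r in range(height)]


def spectrogram_to_input(spec, size=224):
    """Frames x bins spectrogram -> size x size x 3 image (low frequencies at bottom)."""
    # Transpose so rows are frequency bins, then flip so low frequencies are last.
    freq_by_time = [list(col) for col in zip(*spec)][::-1]
    gray = resize_nearest(scale_to_255(to_db(freq_by_time)), size, size)
    # Replicate the grayscale value into three identical channels.
    return [[[p, p, p] for p in row] for row in gray]


toy = [[f + b + 1 for b in range(5)] for f in range(10)]  # 10 frames x 5 bins
img = spectrogram_to_input(toy)
print(len(img), len(img[0]), len(img[0][0]))
```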

Two separate neural networks were trained for distinct tasks:

- Valence Classification: Determining whether a spectrogram represented a positive or negative emotional state.

- Context Classification: Identifying the specific context in which the vocalization occurred, such as feeding, isolation, or social interaction.

Training the models independently allowed each network to specialize in its own task.
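The toy Python sketch below illustrates the transfer-learning setup together with the two independent networks. A fixed feature extractor stands in for the pretrained layers. A new softmax output layer is then trained separately for each task: one for valence and one for context. Everything here is a hypothetical simplification:

- `frozen_backbone` is a made-up two-input function, not a CNN.
- The data points and context labels are invented.
- In practice, transfer learning often fine-tunes some or all of the pretrained layers as well, rather than training only the output layer.

```python
import math


def frozen_backbone(x):
    """Hypothetical stand-in for pretrained layers: a fixed, untrained feature map."""
    return [math.tanh(x[0]), math.tanh(x[1]), 1.0]  # last entry acts as a bias


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def train_head(X, y, classes, epochs=200, lr=0.1):
    """Train only a new softmax output layer on frozen features; return a predictor."""
    idx = {c: i for i, c in enumerate(classes)}
    feats = [frozen_backbone(x) for x in X]
    W = [[0.0] * len(feats[0]) for _ in classes]
    for _ in range(epochs):
        for f, label in zip(feats, y):
            p = softmax([sum(w * v for w, v in zip(row, f)) for row in W])
            # Cross-entropy gradient for each class row: (p_k - target_k) * f.
            for k, row in enumerate(W):
                grad = p[k] - (1.0 if k == idx[label] else 0.0)
                for j in range(len(row)):
                    row[j] -= lr * grad * f[j]

    def predict(x):
        f = frozen_backbone(x)
        scores = [sum(w * v for w, v in zip(row, f)) for row in W]
        return classes[scores.index(max(scores))]

    return predict


centers = {"isolation": (-2.0, 0.0), "play": (2.0, 2.0), "nursing": (2.0, -2.0)}
valence_of = {"isolation": "negative", "play": "positive", "nursing": "positive"}
offsets = [(0.0, 0.0), (0.3, 0.3), (-0.3, 0.2), (0.2, -0.3)]

X, contexts = [], []
for ctx, (cx, cy) in centers.items():
    for dx, dy in offsets:
        X.append((cx + dx, cy + dy))
        contexts.append(ctx)
valences = [valence_of[c] for c in contexts]

# Two heads, trained independently on the same inputs with different label sets.
valence_model = train_head(X, valences, ["negative", "positive"])
context_model = train_head(X, contexts, list(centers))
print(valence_model((-1.9, 0.1)), context_model((1.8, -2.1)))
```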

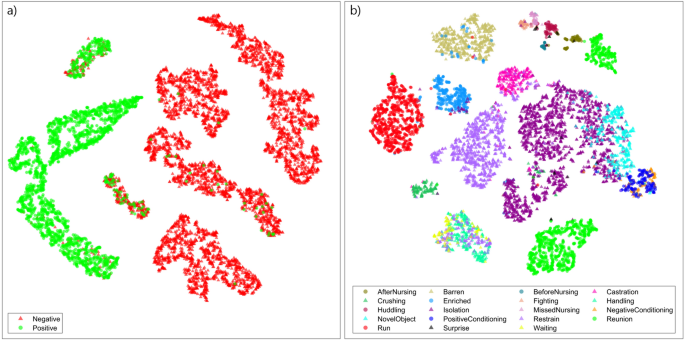

Classification of calls to the valence and context of production based on t-SNE. t-SNE embedding of (a) valence and (b) context classifying neural network’s last fully connected layer activations for each spectrogram. Triangles indicate negative valence vocalizations, while circles indicate positive ones. Image credit: Briefer et al. via Nature.

The researchers used t-SNE (t-distributed Stochastic Neighbor Embedding), a technique that maps high-dimensional data, here the activations of each network's last fully connected layer, into two dimensions for visualization. In the plots above, each point represents one vocalization. Panel (a) shows clusters based on emotional valence, with green indicating positive and red indicating negative. Panel (b) shows how calls group by context, such as isolation or nursing. These clusters suggest that the networks learned patterns linking sound features to emotional states and situations.
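The t-SNE objective can be written compactly.

- **Original space.** Pairwise similarities between the high-dimensional activations $x_i$ are turned into probabilities with Gaussian kernels.
- **2-D embedding.** Similarities between the 2-D points $y_i$ use a heavy-tailed Student t kernel.
- **Optimization.** The 2-D positions are chosen to minimize the Kullback-Leibler divergence between the two distributions.
- **Bandwidths.** Each $\sigma_i$ is tuned so that the conditional distribution around $x_i$ has a user-chosen perplexity.

This is the standard formulation of t-SNE. The article does not state which settings, such as perplexity, were used.

```latex
p_{j\mid i} = \frac{\exp\!\left(-\lVert x_i - x_j\rVert^2 / 2\sigma_i^2\right)}
                   {\sum_{k \neq i} \exp\!\left(-\lVert x_i - x_k\rVert^2 / 2\sigma_i^2\right)},
\qquad
p_{ij} = \frac{p_{j\mid i} + p_{i\mid j}}{2n}

q_{ij} = \frac{\left(1 + \lVert y_i - y_j\rVert^2\right)^{-1}}
              {\sum_{k \neq l} \left(1 + \lVert y_k - y_l\rVert^2\right)^{-1}},
\qquad
C = \mathrm{KL}(P \,\Vert\, Q) = \sum_{i \neq j} p_{ij} \log \frac{p_{ij}}{q_{ij}}
```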

The networks could identify both the pig's emotion and what it was doing from its calls alone, and they outperformed the pDFA by a wide margin. For valence, the CNN reached 92% accuracy, compared with 62% for the pDFA. The results for context, meaning the pig's activity at the time of the call, were also impressive: the neural network was correct 82% of the time, compared with just 19% for the pDFA.
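In its simplest form, classification accuracy is the share of calls whose predicted label matches the annotated one. The short Python sketch below uses made-up labels to show this calculation, along with a confusion count that reveals which classes get mixed up. For a two-class problem like valence, random guessing on balanced classes already scores about 50%, which is a useful baseline when reading the accuracy figures above.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of calls whose predicted label matches the annotation."""
    if len(y_true) != len(y_pred):
        raise ValueError("label lists must have the same length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def confusion(y_true, y_pred):
    """Counts of (true, predicted) pairs; pairs with different labels are errors."""
    return Counter(zip(y_true, y_pred))


y_true = ["neg", "neg", "pos", "pos", "pos"]
y_pred = ["neg", "pos", "pos", "pos", "pos"]
print(accuracy(y_true, y_pred))  # 0.8
print(confusion(y_true, y_pred))
```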

AI to improve animal welfare

These findings confirm that pig vocalizations are reliable indicators of emotional states, paving the way for automated emotion-monitoring tools to improve animal welfare. Neural network analysis revealed that pigs raised outdoors or in free-range systems produce fewer stress calls than those on conventional farms. This technology could even lead to consumer-facing apps that label farms based on welfare standards.

Beyond transparency for consumers, the potential benefits include:

- Improve animal welfare by responding quickly to signs of distress.

- Reduce stress-related health issues, lowering veterinary costs and boosting productivity.

- Advance precision livestock farming with AI-driven insights for smarter care.

- Support ethical farming practices, enhancing accountability and consumer trust.
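A monitoring tool that responds quickly to signs of distress could turn per-call valence predictions into an alert. The Python sketch below is hypothetical: the class name, window size, alarm threshold, and minimum call count are invented parameters, not values from the study.

```python
from collections import deque


class StressMonitor:
    """Hypothetical rolling-window alarm on per-call valence predictions."""

    def __init__(self, window=50, threshold=0.3, min_calls=20):
        self.recent = deque(maxlen=window)  # oldest predictions drop off automatically
        self.threshold = threshold          # alert when the negative share exceeds this
        self.min_calls = min_calls          # don't alert on too little evidence

    def update(self, valence: str) -> bool:
        """Record one prediction; return True if the alert should fire."""
        self.recent.append(valence == "negative")
        if len(self.recent) < self.min_calls:
            return False
        return sum(self.recent) / len(self.recent) > self.threshold


monitor = StressMonitor(window=10, threshold=0.5, min_calls=5)
stream = ["positive"] * 6 + ["negative"] * 6
alerts = [monitor.update(v) for v in stream]
print(alerts.index(True))
```

A rolling window keeps the alarm responsive to recent calls. The minimum call count keeps a single stress call from triggering an alert on its own.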

So next time you hear a pig squeal, remember, there might be an international AI team ready to translate its mood!

To read the full research paper, see doi.org/10.1038/s41598-023-45242-9
