Exploring the MATLAB beta for Native Apple Silicon

Update 8th December 2022: You may be interested in the newer Apple Silicon beta discussed in "Playing with the R2022b MATLAB Apple Silicon beta for M1/M2 Mac" on The MATLAB Blog (mathworks.com).

When Apple released the M1 chip, the first of their new ARM-based processors, in November 2020, it caused a great deal of excitement in the computing world. Naturally, everybody wanted to use all of their favorite applications on the new hardware from day one. There was a small problem, though:

One does not simply release an Apple Silicon port of MATLAB!

It takes a lot of time to port applications as complex as MATLAB, Simulink, Simscape, etc. to a new CPU architecture. Decades of person-years, in fact! Fortunately, Apple understands this and, just as they did for the PowerPC to Intel transition in 2005, they created a compatibility layer, called Rosetta 2, that allows software targeted at Intel processors to run on the new architecture. This gives vendors time to manage the transition. MathWorks made use of this, and MATLAB has been supported on Apple Silicon Macs via Rosetta 2 since R2020b Update 3.

MATLAB beta on Native Apple Silicon available now

Ever since the M1 release, MATLAB users have been asking the question "When is a native Apple Silicon version going to be available?". The good news is that the answer is 'Right now!' The bad news is that it's MATLAB only (no toolboxes or Simulink yet) and it's still in beta. That is, it's a work in progress that shouldn't be used in production environments, and MathWorks are really interested in getting your feedback.

The beta is available at https://mathworks.com/support/apple-silicon-r2022a-beta.html to everyone who has a MATLAB license. Try it out and let us know what you find using the feedback form at https://mathworks.com/support/apple-silicon-r2022a-beta-feedback.html.

What did I find in the beta?

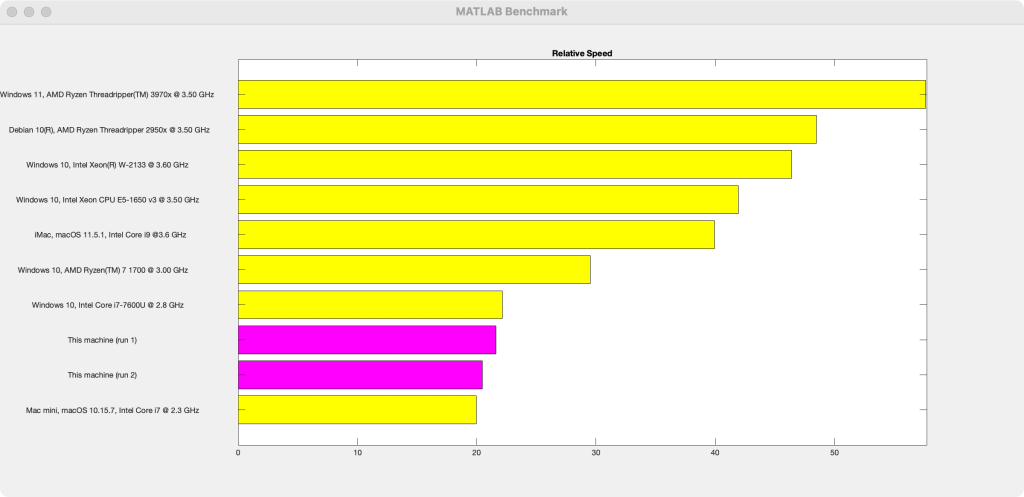

The first thing I did on launching the Native Apple Silicon Beta of MATLAB on an M1 Pro (with 10 CPU cores and 16 GPU cores) was to run bench twice, using the command bench(2).

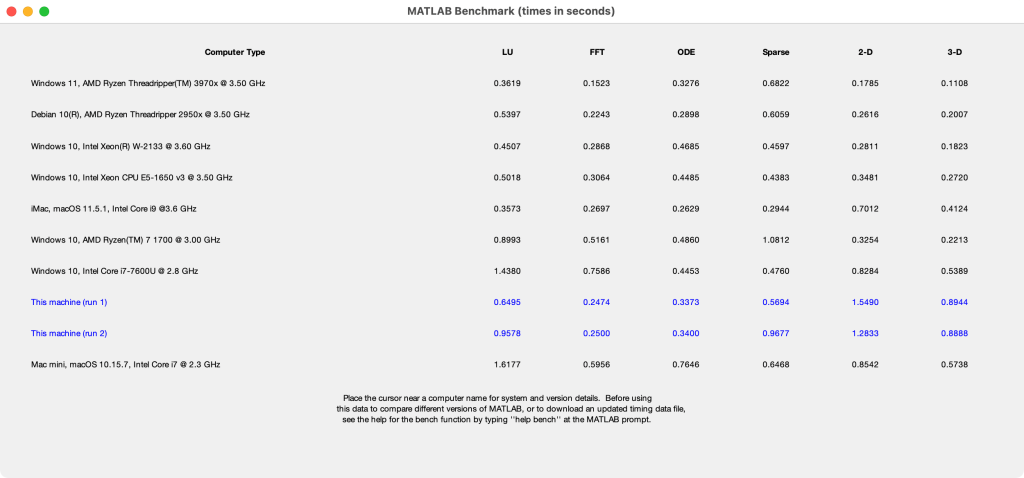
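If you want to reproduce this on your own machine, a sketch like the following captures the timings programmatically instead of reading them off the bench window. It assumes, per the bench documentation, that bench(N) returns an N-by-6 array of times in seconds with columns in the order LU, FFT, ODE, Sparse, 2-D, 3-D. Printing only the last run is my own choice, on the assumption that later runs are less affected by one-time start-up costs.

```matlab
% Run every bench task twice. t is a 2-by-6 array of times in seconds,
% one row per run, columns in the order listed in taskNames.
t = bench(2);

% Print the final run only (assumed to be the least affected by warm-up).
taskNames = {'LU', 'FFT', 'ODE', 'Sparse', '2-D', '3-D'};
for k = 1:numel(taskNames)
    fprintf('%-7s %8.4f s\n', taskNames{k}, t(end, k));
end
```

Running the same script in the native beta and in R2022a under Rosetta 2 gives two sets of numbers you can compare directly.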

Let’s see how this compares to R2022a running under Rosetta 2 on the same machine:

Everything measured in bench shows better performance in the native beta than in the production version running on Rosetta. This is a great start, but it's definitely not true for all application benchmarks we've tried, and we encourage you to give us feedback when you find something in the beta that doesn't perform at least as well as R2022a under Rosetta.
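Before comparing numbers like these yourself, it is worth confirming which build you are actually running. A minimal check, assuming that native Apple Silicon builds report the architecture string 'maca64' and Intel builds (including those translated by Rosetta 2) report 'maci64':

```matlab
% Identify the MATLAB build before comparing timings.
% Assumption: 'maca64' = native Apple Silicon, 'maci64' = Intel build.
arch = computer('arch');
switch arch
    case 'maca64'
        disp('Native Apple Silicon build');
    case 'maci64'
        disp('Intel build (on an M1-family Mac this runs under Rosetta 2)');
    otherwise
        fprintf('Other platform: %s\n', arch);
end
```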

Let’s zoom in on a couple of these results.

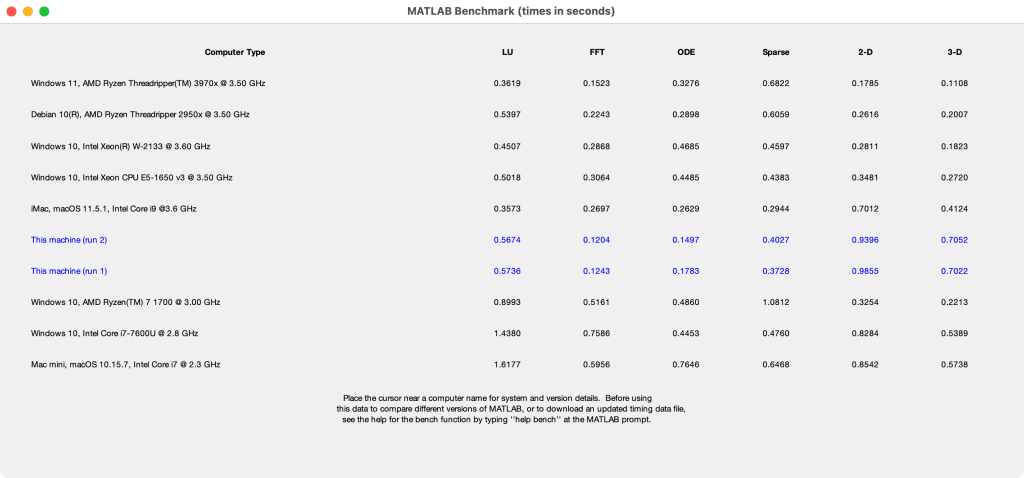

ODE Performance is great in the Apple Silicon Beta of R2022a

As you can see, Ordinary Differential Equation (ODE) solving performance is superb for the new beta. Indeed, the ODE result for native Apple Silicon is faster than all of the other systems included in the R2022a bench results. I reached out to the development team and asked what was going on. They told me “ODE is a sequential program, so its performance depends heavily on the performance of a single CPU core. A more aggressive out-of-order pipeline can significantly improve instruction level parallelism (ILP) for sequential programs. As an example, M1 includes a wider decoder, issue queues, register renaming, more ALUs, and a larger L1 cache. As a result, ODE performs better on M1 compared to x86 architecture.”
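To make the "sequential program" point concrete, here is a small sketch using the Van der Pol oscillator, a standard ODE test problem; the parameter, time span, initial condition, and step size h are my own choices for illustration. The timeit call measures an adaptive ode45 solve, and the forward Euler loop afterwards spells out the same dependency by hand: each step needs the state produced by the previous one, so the work cannot be spread across cores and single-core speed dominates.

```matlab
% Van der Pol oscillator with mu = 1, written as a first-order system.
vdp = @(t, y) [y(2); (1 - y(1)^2)*y(2) - y(1)];

% Time an adaptive ode45 solve over t in [0, 20] from y0 = [2; 0].
tODE = timeit(@() ode45(vdp, [0 20], [2; 0]));
fprintf('ode45: %.4f s\n', tODE);

% The same dependency written out as fixed-step forward Euler:
% step k cannot start until step k-1 has produced y.
h = 1e-3;
y = [2; 0];
for k = 1:round(20/h)
    y = y + h*vdp((k-1)*h, y);
end
fprintf('Euler estimate of y(20): [%.4f, %.4f]\n', y(1), y(2));
```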

LU factorization performance

LU factorization performance in the beta isn't too bad, but it didn't blow me away. I asked the development team what was going on and whether we could expect better performance in the production release. LU performance depends on two things: the speed of the underlying BLAS library and the speed of the LAPACK library that wraps around it. There are several implementations of BLAS and LAPACK that one could choose from. For example, on x86 hardware MATLAB currently uses the Intel MKL or AMD AOCL implementations of BLAS and LAPACK.

In this beta, MATLAB is using the well optimized OpenBLAS library for BLAS routines but only the reference version of LAPACK. That is, the LAPACK code behind LU factorization in the beta is not optimized at all! MathWorks are exploring options to see what the best way forward is here. It’s not as obvious as you might think!
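You can check which BLAS and LAPACK libraries your own session is using, and get a rough LU throughput figure, with something like the following. version('-blas') and version('-lapack') are standard MATLAB queries, but the strings they return differ by release and platform, so no particular output is implied here. The matrix size n = 2000 is arbitrary, and the GFLOP/s estimate uses the textbook (2/3)n^3 operation count for LU.

```matlab
% Which BLAS and LAPACK libraries did this session load?
disp(version('-blas'))
disp(version('-lapack'))

% Time a dense LU factorization. LAPACK's blocked LU routine hands most of
% its arithmetic to BLAS, so both libraries affect this number.
n = 2000;
A = rand(n);
tLU = timeit(@() lu(A));
gflops = (2/3)*n^3 / tLU / 1e9;      % standard LU operation count
fprintf('lu on %d-by-%d: %.3f s (~%.1f GFLOP/s)\n', n, n, tLU, gflops);
```

Comparing this figure between the native beta and R2022a under Rosetta 2 shows how much the choice of BLAS/LAPACK implementation matters on the same hardware.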

Sometimes the M1 outperforms the M1 Pro

It doesn't show up in the computations used in the bench function, but we have seen a small number of examples where the M1 Pro is beaten by the smaller M1. This is almost always related to multithreading overheads. Essentially, MathWorks have some tuning to do to ensure that all of the additional cores in the M1 Pro are used well.
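One way to look for this kind of overhead in your own code is to time the same workload at several thread counts using maxNumCompThreads. This is only a sketch: the matrix multiply is a stand-in workload and the thread counts tried are arbitrary, so substitute the computation you actually care about. If a lower thread count turns out faster, that points to the kind of threading overhead described above.

```matlab
% Stand-in workload: a dense matrix multiply (replace with your own code).
A = rand(3000);

original = maxNumCompThreads;                  % current thread limit
counts = unique(min([1 2 4 8 original], original));

for n = counts
    maxNumCompThreads(n);                      % cap MATLAB's computational threads
    fprintf('%2d threads: %.3f s\n', n, timeit(@() A*A));
end

maxNumCompThreads(original);                   % restore the original limit
```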

Over to you

Did you try the beta (get it from https://mathworks.com/support/apple-silicon-r2022a-beta.html)? What did you find? Tell us at https://mathworks.com/support/apple-silicon-r2022a-beta-feedback.html

Categories: Apple, performance
