Add two lines to your MATLAB code to make it work with big data

Today's guest blogger is Harald Brunnhofer, a Principal Training Engineer at MathWorks. On top of getting thousands of users started with MATLAB or taking their MATLAB skills to the next level, he is the content developer of the Accelerating and Parallelizing MATLAB Code and Processing Big Data with MATLAB training courses.

In an earlier post, Mike gave an overview of MATLAB's High Performance Computing (HPC) and Big Data datatypes. Today, I'll be showing you how you can use tall arrays to move from small to big data with minimal changes to your code.

"Big data" is becoming more and more relevant in a variety of industries and applications. For our purpose, this is data that is (far) too large to fit into the memory of a single computer: tens of gigabytes, possibly terabytes or even petabytes (= 1024 terabytes) of data. So what are you going to do if you are getting out-of-memory errors or know very well that you will get these before you even try?

Table of Contents

Some general advice for large datasets

Datastores: Good for big and small data

Set up the datastore

Import and inspect the data

Perform the analysis

Tall Arrays: An easy lazy evaluation framework for handling big data

Generating some big data for experimenting

Set up the datastore

Setting up parallel resources

Import the data

Perform the analysis

Getting the results

A slightly different problem

Delete test big data (optional)

Summary and Resources

Supporting functions

Some general advice for large datasets

Even before data gets "big", it may be of interest to save memory, time, or both by

- importing only the data you need: If you have tabular data with many columns but only need to work with a few, it is a waste to import the columns that you don't need. For datastores, as shown below, you can use the SelectedVariableNames property to choose the variables you'd like to import.

- choosing data types carefully: if you have numerical data that can be stored and manipulated in single or integer data types, this saves some memory. If text data can only take a small number of values compared to the size of the data, categorical arrays will save memory as well. For datastores, you can change the data type of a variable using the TextscanFormats or SelectedFormats properties as shown in the generateBigData function at the bottom of this script.

- avoiding unnecessary copies of data: what consumes twice the memory of one large variable? Two variables of the same size. I know that this sounds trivial, but it is easy to overlook. In-place operations are an advanced way of avoiding copies of variables.
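The effect of data types on memory can be checked directly with whos. The following is only a sketch on made-up data (a million random numbers and three invented carrier codes), not on the airline dataset, and the variable names are assumptions chosen for illustration:

```matlab
n = 1e6;                                   % number of elements (arbitrary)

xDouble = rand(n, 1);                      % double: 8 bytes per element
xSingle = single(xDouble);                 % single: 4 bytes per element

codes    = ["AA"; "DL"; "UA"];             % made-up set of repeated text values
textData = codes(randi(numel(codes), n, 1));
catData  = categorical(textData);          % stores each distinct value only once

% Report the memory used by each variable
info = whos('xDouble', 'xSingle', 'textData', 'catData');
for k = 1:numel(info)
    fprintf("%-10s %8.2f MB\n", info(k).name, info(k).bytes / 2^20);
end
```

The exact numbers depend on your MATLAB release, but the single copy should take half the memory of the double one, and the categorical array should be considerably smaller than the string array.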

Datastores: Good for big and small data

If you've worked with .csv files or other tabular text files before, chances are you've been using functions like readtable, readmatrix or older functions such as textscan or csvread. Big data workflows, on the other hand, start with the concept of a datastore so we'll start by using a datastore in a small data situation.

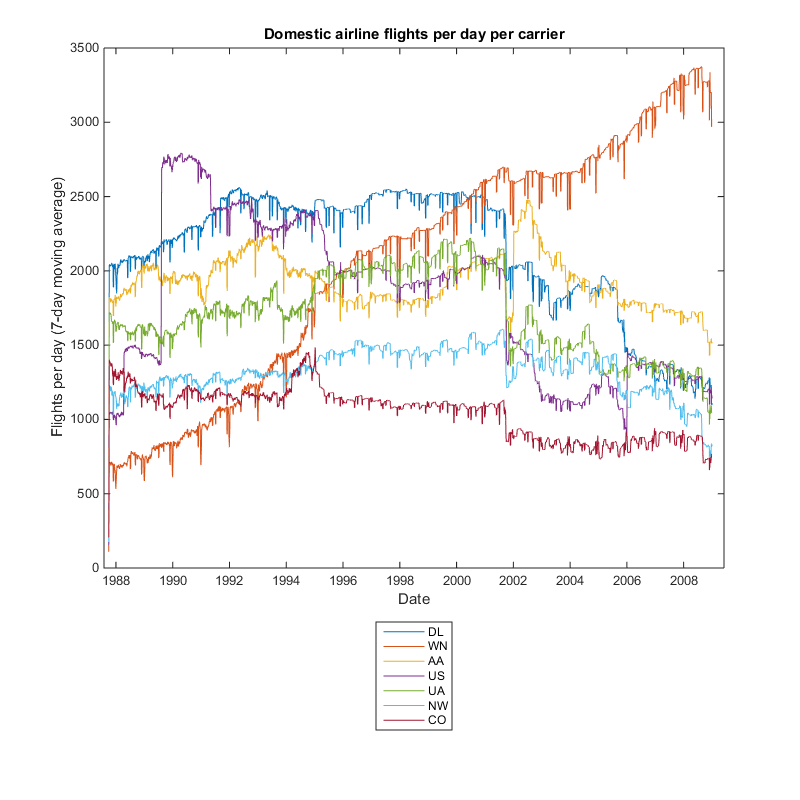

We'll need a data file for our example below. The airlinesmall.csv file which ships with MATLAB "only" has a little over 100,000 lines of data, so it will comfortably fit into the memory of a modern computer. Even more so if we only import the columns of the dataset which we actually need. Our objective is to determine the average delay of a flight, depending on the carrier. The example below is written to accommodate much larger datasets, as well.

Set up the datastore

A datastore is a MATLAB object that refers to one file or, when using * as a wildcard, a set of files. These files can be stored on disk or remotely, e.g. on Amazon S3, Azure Blob Storage, or Hadoop. If the data is stored remotely, it is strongly recommended to process the data in the environment that stores it to avoid having to download the data.

A datastore does not directly read the data. Instead, it holds information about which files to read and how to read them when requested. This is very helpful in iteratively reading a large collection of files instead of loading all of it at once. MATLAB has datastores for various file formats, including text, spreadsheet, image, Parquet, and even custom format files. For a full list of types of datastores, see documentation on available datastores.

If the datastore refers to a collection of files, they need to have a comparable structure. For tabular text files or spreadsheets, this means identical column headers.

Name-value pairs can be used to fine-tune settings when creating the datastore, and you can use dot indexing to set or get properties of the datastore after it has been created.

format compact

ds = datastore("airlinesmall.csv", TreatAsMissing="NA", Delimiter=",");

ds.SelectedVariableNames = ["UniqueCarrier", "ArrDelay"];

ds.SelectedFormats{1} = '%C';

Import and inspect the data

The read command will import a block of data. For tabular text datastores, the read command returns a table. The imported number of lines is determined by the ReadSize property. The next call to read will then resume where the previous one left off.

If all of the data fits into memory at once, you can use the readall command to import all data with a single command even when the data is spread across multiple files.

ds.ReadSize = "file"; % for now, we can read an entire file at once

data = read(ds);

The result is a table with two columns, the carrier and the arrival delay of each flight. Let's look at some lines of it:

disp(data(10:20,:))

You can find a list of carrier codes and airlines here.

Perform the analysis

The groupsummary command is a fairly new and convenient way to efficiently analyze data by groups. You can then sort by average arrival delay.

delayStats = groupsummary(data, "UniqueCarrier", "mean");

delayStats = sortrows(delayStats, "mean_ArrDelay")

We now get the results we want:

disp("Airline " + string(delayStats.UniqueCarrier(1)) + " had the smallest average delay at " + delayStats.mean_ArrDelay(1) + " minutes.")

disp("Airline " + string(delayStats.UniqueCarrier(end)) + " had the largest average delay at " + delayStats.mean_ArrDelay(end) + " minutes.")

Tall Arrays: An easy lazy evaluation framework for handling big data

When we only had a small amount of data in the datastore, we used

data = read(ds);

to read the entire dataset and we processed everything in memory. When our data is too big for memory, we could loop over the blocks and obtain partial results from each block, but that might be rather cumbersome even in relatively simple settings like ours.
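To give an idea of what such a manual loop looks like, here is a minimal sketch that computes only the overall mean arrival delay, block by block, on the small airlinesmall.csv file. The block size of 20000 rows and the variable names are arbitrary choices for illustration. A per-carrier version would additionally have to keep running sums and counts for every carrier, which is where the bookkeeping gets cumbersome.

```matlab
% Manual block-wise processing: overall mean arrival delay
dsLoop = datastore("airlinesmall.csv", TreatAsMissing="NA", Delimiter=",");
dsLoop.SelectedVariableNames = "ArrDelay";
dsLoop.ReadSize = 20000;                 % rows per block (arbitrary choice)

delaySum   = 0;                          % running sum of the valid delays
delayCount = 0;                          % running number of valid delays
while hasdata(dsLoop)
    block = read(dsLoop);                % next block, returned as a table
    valid = block.ArrDelay(~isnan(block.ArrDelay));   % drop missing values
    delaySum   = delaySum + sum(valid);
    delayCount = delayCount + numel(valid);
end
overallMeanDelay = delaySum / delayCount
```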

MATLAB Tall Arrays extend the in-memory, often table-based workflow to data that does not fit in memory. Thus, we can develop our analysis code based on an in-memory MATLAB table and use this analysis code with little or no adjustments for the entire dataset that otherwise won't fit in memory.

To achieve that, tall arrays are created from a datastore and use a "lazy evaluation" method for data processing. With lazy evaluation, tall arrays first collect all the analysis operations on the data instead of directly executing them after each call. When requested, MATLAB determines the best way to run those operations, reads in data in blocks from the underlying datastore, applies the operations, and provides the final result to the user.
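Lazy evaluation can be observed on a tiny example. The sketch below converts an in-memory vector into a tall array, which is only a stand-in for real big data, and the variable names are made up for illustration. Nothing is computed until gather is called. Note that if Parallel Computing Toolbox is installed, MATLAB may start a parallel pool at this point, depending on your preferences.

```matlab
x  = (1:1000)';            % small in-memory stand-in for big data
tx = tall(x);              % tall array backed by the in-memory data

m = mean(tx);              % deferred: m is an unevaluated tall scalar
r = max(tx) - min(tx);     % deferred as well

wasDeferred = isa(m, "tall");   % true: no value has been computed yet

[m, r] = gather(m, r);     % triggers the evaluation of both results together
```

Requesting several results in a single gather call gives MATLAB the chance to combine the required passes through the data.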

Generating some big data for experimenting

For the purpose of this blog post, we will generate a big data set from the small data set by creating multiple copies and adding some random changes to the arrival delays using a function included at the end of this script. For the sake of sanity and to spare your hard drive, in this example we'll generate 50 files of around 12 MB each. The workflow you are about to see would also work just fine if you had one or even fifty 30 GB files. It would just take (much) longer, so we will explore parallel computing options as well.

generateBigData(1, 50) % Uses function at bottom of this script to generate about 600 MB of data (50 files of about 12 MB each)
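A quick way to check how much data actually ended up on disk is to add up the file sizes reported by dir. This is only a sanity-check sketch with made-up variable names. It assumes the generated files are in the current folder and reports zero files if none have been generated yet.

```matlab
files   = dir("airlinebig*.csv");          % generated files in the current folder
totalMB = sum([files.bytes]) / 2^20;       % total size in megabytes
fprintf("%d files, %.0f MB in total\n", numel(files), totalMB);
```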

Set up the datastore

We explained the concept of datastores with a small sample dataset. Now, instead of the small dataset, we will point the datastore to the complete collection of datafiles using a wildcard (*).

The only difference between the 'Big data' version and what we've seen until now is the file name.

ds = datastore("airlinebig*.csv", TreatAsMissing="NA", Delimiter=","); % adjusting only the location

ds.SelectedVariableNames = ["UniqueCarrier", "ArrDelay"];

% ds.SelectedFormats{1} = '%C'; % this would lead to an extra pass and thus longer execution time

% ds.ReadSize = "file"; % we do not want to rely on the data of even a single file to fit into memory

Setting up parallel resources

If Parallel Computing Toolbox is available, multiple cores can be used to speed up the execution. If you do not have access to Parallel Computing Toolbox, comment this section out, and you will still be able to run the example. To learn more about parallel pools and the difference between thread and process based parallel pools, see the Run Code on Parallel Pools and Choose Between Thread-Based and Process-Based Environments pages in the documentation. On top of that, you could run computations on a cluster or cloud, including HPC environments, instead of on your desktop. This would require MATLAB Parallel Server.

if isempty(gcp("nocreate"))

parpool("Threads")

end
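As an alternative to commenting the section out, the pool creation can be wrapped in try/catch so that the script simply continues without a pool if Parallel Computing Toolbox is not available. This is a sketch of one possible approach, not part of the original workflow:

```matlab
try
    % gcp and parpool are only available with Parallel Computing Toolbox
    if isempty(gcp("nocreate"))
        parpool("Threads");
    end
catch err
    % No pool could be created: tall arrays are then evaluated without one
    warning("Continuing without a parallel pool: %s", err.message);
end
```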

Import the data

The tall command constructs a tall array from a datastore. For tall arrays, MATLAB will only start reading the data when requested using the gather command or other commands, like plot or histogram, that trigger read operations of tall variables.

data = tall(ds) % the first genuine change to the code used previously

For the moment, we only know that we will have a tall table with two variables named UniqueCarrier and ArrDelay, but we don't even see how many rows it will have because that would require reading all of the data.
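If you would like to look at a few actual rows without processing the whole dataset, you can gather just the head of the tall table. The sketch below uses the small airlinesmall.csv file and made-up variable names so that it runs on its own. The same two calls should work on the tall table created from the big files.

```matlab
dsPeek = datastore("airlinesmall.csv", TreatAsMissing="NA", Delimiter=",");
dsPeek.SelectedVariableNames = ["UniqueCarrier", "ArrDelay"];
tallPeek = tall(dsPeek);                 % nothing is read yet

firstRows = gather(head(tallPeek, 5))    % evaluates only the first five rows
```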

Perform the analysis

delayStats = groupsummary(data, "UniqueCarrier", "mean")

This time, we only know that we will have a tall table with three variables named UniqueCarrier, GroupCount, and mean_ArrDelay, and we still don't see how many rows it will have because that would require reading all of the data.

delayStats = sortrows(delayStats, "mean_ArrDelay")

Just like before, the actual analysis is deferred.

Getting the results

Let's trigger the actual processing of the data. Since the data is now imported block by block and there could be a significant number of blocks, this may take a while:

delayStats = gather(delayStats); % the other genuine change to the code used previously

Reporting the results:

disp("Airline " + string(delayStats.UniqueCarrier(1)) + " had the smallest average delay at " + delayStats.mean_ArrDelay(1) + " minutes.")

disp("Airline " + string(delayStats.UniqueCarrier(end)) + " had the largest average delay at " + delayStats.mean_ArrDelay(end) + " minutes.")

Since our large data set consists of copies of the original data set with small random variations, our result is comparable to the one we got before.

A slightly different problem

When considering punctuality statistics, people often consider the percentage of flights that are delayed by no more than a certain time.

The following variable lets you choose what delay is considered acceptable.

acceptableDelay = 5

To do this we need a function to calculate the percentage of flights that meet our delay criteria. We can write this function as a single line and pass it to groupsummary as an anonymous function.

fcn = @(x) sum(x <= acceptableDelay)/numel(x)*100;
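Before applying the function to the full dataset, it can help to try it on a handful of values. The delays below are invented for illustration. The sketch also shows two properties worth knowing: a missing delay (NaN) that reaches the function counts as not punctual, and the anonymous function keeps the value that acceptableDelay had when the function was created.

```matlab
acceptableDelay = 5;
fcn = @(x) sum(x <= acceptableDelay)/numel(x)*100;

sampleDelays = [-3; 0; 5; 12; 30];       % made-up delays in minutes
pSample = fcn(sampleDelays)              % 3 of 5 flights are within 5 minutes

withMissing = [sampleDelays; NaN];       % NaN <= 5 is false
pMissing = fcn(withMissing)              % 3 of 6 flights

acceptableDelay = 15;                    % changing the variable afterwards ...
pUnchanged = fcn(sampleDelays)           % ... does not change fcn, which still uses 5
```

If you change acceptableDelay, recreate fcn before running the analysis again.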

Other than that, the workflow is almost identical to what we have done before:

ds = tabularTextDatastore("airlinebig*.csv", TreatAsMissing="NA", Delimiter=",");

ds.SelectedVariableNames = ["UniqueCarrier", "ArrDelay"];

data = tall(ds);

delayStats = groupsummary(data, "UniqueCarrier", fcn);

delayStats.Properties.VariableNames{3} = 'Punctuality'; % renaming the data variable (optional)

delayStats = gather(sortrows(delayStats, "Punctuality", "descend"));

Reporting the results:

disp("Airline " + string(delayStats.UniqueCarrier(1)) + " had the highest punctuality rate at " + delayStats.Punctuality(1) + "%.")

disp("Airline " + string(delayStats.UniqueCarrier(end)) + " had the lowest punctuality rate at " + delayStats.Punctuality(end) + "%.")

Delete test big data (optional)

Optionally, delete the large files we have created to mimic big data.

deleteTestFiles = true;

if deleteTestFiles

delete("airlinebig*.csv")

end

Summary and Resources

In this example, we transitioned an application that was working fine on data that fit into memory to an application that works with big data. All we had to do was use tall instead of read and add a gather command. For this example, the other commands and their syntax have not changed.
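To see the two changed lines side by side, the following sketch runs both variants on the small airlinesmall.csv file and compares the results. The variable names are made up for illustration, and readall is used for the in-memory variant because the file fits into memory.

```matlab
dsCheck = datastore("airlinesmall.csv", TreatAsMissing="NA", Delimiter=",");
dsCheck.SelectedVariableNames = ["UniqueCarrier", "ArrDelay"];

% In-memory version
smallData  = readall(dsCheck);
smallStats = groupsummary(smallData, "UniqueCarrier", "mean");

% Tall version: tall replaces the read, gather is added at the end
tallData  = tall(dsCheck);
tallStats = gather(groupsummary(tallData, "UniqueCarrier", "mean"));

% Sort both results by carrier so that the rows line up, then compare
smallStats = sortrows(smallStats, "UniqueCarrier");
tallStats  = sortrows(tallStats, "UniqueCarrier");
maxDifference = max(abs(smallStats.mean_ArrDelay - tallStats.mean_ArrDelay))
```

The difference should be zero or at the level of floating-point round-off, since both variants compute the same statistics and differ only in how the data is read.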

Note that not all of the functions shipping with MATLAB and the toolboxes are supported for tall arrays. View a list of all functions that support tall arrays here. In this list, an icon next to a function indicates that its behavior on tall arrays differs from its behavior on variables that fit in memory. Click that icon to view the details.

Resources for learning more about tall arrays:

- Processing Big Data with MATLAB training

- Documentation for tall arrays

- Documentation on available datastores

- Documentation on developing custom algorithms for tall arrays

- Documentation on running computations on a cluster or cloud instead of your desktop

Supporting functions

generateBigData creates a synthetic large data set by creating multiple copies of the airlinesmall.csv file that ships with MATLAB and adding some random changes to the arrival delays. This function is not optimized for performance.

Inputs:

- numBlocks the number of times the original data is replicated to create a single (fairly) big file

- numFiles the number of copies of that (fairly) big file to be created

function generateBigData(numBlocks, numFiles)

rng("default")

ds = datastore("airlinesmall.csv", TreatAsMissing="NA", Delimiter=",");

ds.TextscanFormats([11, 23]) = {'%s'};

data = readall(ds);

newData = data;

delete("airlinebig*.csv")

h = waitbar(0, "0 of " + numBlocks + " blocks written.");

c = onCleanup(@() close(h));

for k = 1:numBlocks

newData.ArrDelay = data.ArrDelay + round(5*randn(size(data.ArrDelay)));

writetable(newData, "airlinebig1.csv", "WriteMode","append") % append every block to the same file

waitbar(k/numBlocks, h, k + " of " + numBlocks + " blocks written.");

end

h = waitbar(0, "0 of " + numFiles + " copies created.");

c = onCleanup(@() close(h));

for k = 2:numFiles

copyfile("airlinebig1.csv", "airlinebig"+k+".csv");

waitbar(k/numFiles, h, k + " of " + numFiles + " copies created.");

end

end
