The Intel Hypercube, part 1

Even though I am one of the founders of the MathWorks, I only acted as an advisor to the company for its first five years. During that time, from 1985 to 1989, I was trying my luck with two Silicon Valley computer startup companies. Both enterprises failed as businesses, but the experience taught me a great deal about the computer industry, and influenced how I viewed the eventual development of MATLAB. The first of these startups developed the Intel Hypercube.

Caltech Cosmic Cube

In 1981, Caltech Professor Chuck Seitz and his students developed one of the world's first parallel computers, which they called the Cosmic Cube. There were 64 nodes. Each node was a single board computer based on the Intel 8086 CPU and 8087 floating point coprocessor. These were the chips that were being used in the IBM PC at the time. There was room on the board of the Cosmic Cube for only 128 kilobytes of memory.

Seitz's group designed a chip to handle the communication between the nodes. It was not feasible to connect a node directly to each of the 63 other nodes. That would have required $64 \times 63 = 4032$ connections, counting each node's end of a link separately. Instead, each node was connected to only six other nodes. That required only $6 \times 64 = 384$ connections, counted the same way. Express each node's address in binary. Since there are $2^6$ nodes, this requires six bits. Connect a node to the nodes whose addresses differ from its own in exactly one bit. This corresponds to regarding the nodes as the vertices of a six-dimensional cube and making the connections along the edges of the cube. So, these machines are called "hypercubes".

The graphic shows the 16 nodes in a four-dimensional hypercube. Each node is connected to four others. For example, using binary, node 0101 is connected to nodes 0100, 0111, 0001, and 1101.
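The one-bit rule described above is easy to try out. The following is a minimal Python sketch, not code from the Cosmic Cube itself; the function names `hypercube_neighbors` and `link_count` are made up for this illustration.

```python
def hypercube_neighbors(node, dim):
    """Return the nodes whose addresses differ from `node` in exactly one bit."""
    # Flipping bit 0, bit 1, ..., bit dim-1 in turn gives the dim neighbors.
    return [node ^ (1 << bit) for bit in range(dim)]


def link_count(dim):
    """Number of physical links in a dim-dimensional hypercube.

    Each of the 2**dim nodes has dim links, and each link joins two nodes.
    """
    return dim * 2**dim // 2


# Neighbors of node 0101 in the four-dimensional cube, printed in binary.
for neighbor in hypercube_neighbors(0b0101, 4):
    print(format(neighbor, "04b"))
```

For node 0101 this lists 0100, 0111, 0001, and 1101, the same four neighbors named above. Counting each link once rather than once per endpoint, `link_count(6)` gives 192 links for the 64-node cube.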

Caltech Professor Geoffrey Fox and his students developed applications for the Cube. Among the first were programs for the branch of high energy physics known as quantum chromodynamics (QCD) and for work in astrophysics modeling the formation of galaxies. They also developed a formidable code to play chess.

Silicon Forest

The first startup I joined wasn't actually in Silicon Valley, but in an offshoot in Oregon that its boosters called the Silicon Forest. By 1984, Intel had been expanding its operations outside California and had developed a sizeable presence in Oregon. Gordon Moore, one of Intel's founders and then its CEO, was a Caltech alum and on its Board of Trustees. He saw a demo of the Cosmic Cube at a Board meeting and decided that Intel should develop a commercial version.

Two small groups of Intel engineers had already left the company and formed startups in Oregon. One of the co-founders of one of the companies was John Palmer, a former student of mine and one of the authors of the IEEE 754 floating point standard. Palmer's company, named nCUBE, was already developing a commercial hypercube.

Hoping to dissuade more breakaway startups, Intel formed two "intrapreneurial" operations in Beaverton, Oregon, near Portland. Wikipedia defines intrapreneurship to be "the act of behaving like an entrepreneur while working within a large organization." Justin Rattner was appointed to head one of the new groups, Intel Scientific Computers, which would develop the iPSC, the Intel Personal Supercomputer.

UC Berkeley Professor Velvel Kahan was (and still is) a good friend of mine. He had been heavily involved with Intel (and Palmer) on the development of the floating point standard and the 8087 floating point chip. He recommended that Intel recruit me to join the iPSC group, which they did.

At the time in 1984, I had been chairman of the University of New Mexico Computer Science Department for almost five years. I did not see my future in academic administration. We had just founded The MathWorks, but Jack Little was quite capable of handling that by himself. I was excited by the prospect of being involved in a startup and learning more about parallel computing. So my wife, young daughter, and I moved to Oregon. This involved driving through Las Vegas and the story that I described in my Potted Palm blog post.

Distributed Arrays

As I drove north across Nevada towards Oregon, I was hundreds of miles from any computer, workstation or network connection. I could just think. I thought about how we should do matrix computation on distributed memory parallel computers when we got them working. I knew that the Cosmic Cube guys at Caltech had broken matrices into submatrices like the cells in their partial differential equations. But LINPACK and EISPACK and our fledgling MATLAB stored matrices by columns. If we preserved that column organization, it would be much easier to produce parallel versions of some of those programs.

So, I decided to create distributed arrays by dealing their columns as if they were coming from a deck of playing cards. If there are p processors, then column j of the array would be stored on the processor with identification number mod(j, p).
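Here is a small Python sketch of that dealing scheme. It is only an illustration: the names `owner` and `deal_columns` are hypothetical, and the columns are numbered from 0 in the Python style, so column 0 lands on processor 0.

```python
def owner(j, p):
    """Processor that stores column j when columns are dealt to p processors."""
    return j % p


def deal_columns(n, p):
    """Map each processor number to the list of column indices it stores.

    Columns are numbered 0 .. n-1 here (an assumption of this sketch).
    """
    layout = {node: [] for node in range(p)}
    for j in range(n):
        layout[owner(j, p)].append(j)
    return layout


# Ten columns dealt to four processors.
for node, columns in deal_columns(10, 4).items():
    print(node, columns)
```

With ten columns and four processors, processor 0 holds columns 0, 4, and 8, processor 1 holds 1, 5, and 9, and so on. No processor holds more than one column more than any other, which keeps the work balanced as the elimination proceeds.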

Gaussian elimination, for example, would proceed in the following way. At the $k$-th step of the elimination, the node that held the $k$-th column would search it for the largest element. This is the $k$-th pivot. After dividing all the other elements in the column by the pivot to produce the multipliers, it would broadcast a message containing these multipliers to all the other nodes. Then, in the step that requires most of the arithmetic operations, all the nodes would apply the multipliers to their columns.
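The steps just described can be simulated on a single machine. The Python sketch below is not the iPSC code. It makes several assumptions of its own: the nodes are ordinary dictionaries, the "broadcast" is a tuple passed around a loop, the pivot row index travels with the multipliers, and whole rows are interchanged so that the result satisfies $PA = LU$. The names `distributed_lu` and `gather` are hypothetical.

```python
def distributed_lu(a, p):
    """Simulate column-oriented Gaussian elimination on p nodes.

    `a` is an n-by-n matrix given as a list of rows; it is not modified.
    Returns (local, perm). local[node] maps a column index to that column,
    holding the multipliers below the diagonal and U on and above it.
    perm[i] is the original row that ended up in row i.
    """
    n = len(a)
    # Deal the columns: column j lives on node j % p (0-based numbering).
    local = [dict() for _ in range(p)]
    for j in range(n):
        local[j % p][j] = [a[i][j] for i in range(n)]

    perm = list(range(n))
    for k in range(n - 1):
        # The node that owns column k searches it for the largest element.
        col = local[k % p][k]
        m = max(range(k, n), key=lambda i: abs(col[i]))
        if col[m] == 0.0:
            raise ValueError("matrix is singular")
        col[k], col[m] = col[m], col[k]
        for i in range(k + 1, n):
            col[i] /= col[k]  # multipliers
        # The message the owner would broadcast to all the other nodes.
        message = (m, col[k + 1:])

        # Every node applies the message to its own columns.
        # On a real machine the nodes would do this at the same time.
        pivot_row, multipliers = message
        perm[k], perm[pivot_row] = perm[pivot_row], perm[k]
        for columns in local:
            for j, c in columns.items():
                if j == k:
                    continue
                c[k], c[pivot_row] = c[pivot_row], c[k]
                if j > k:
                    for i in range(k + 1, n):
                        c[i] -= multipliers[i - k - 1] * c[k]
    return local, perm


def gather(local, n):
    """Collect the distributed columns back into a list of rows."""
    p = len(local)
    return [[local[j % p][j][i] for j in range(n)] for i in range(n)]
```

Calling `distributed_lu(a, p)` and then `gather(local, n)` returns the factors packed into one matrix, with the multipliers below the diagonal. The only communication in each step is the one message from the owner of column k, which is what makes the column layout attractive.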

This column oriented approach could be used to produce distributed memory parallel versions of the key matrix algorithms in LINPACK and EISPACK.

Intel Personal Supercomputer

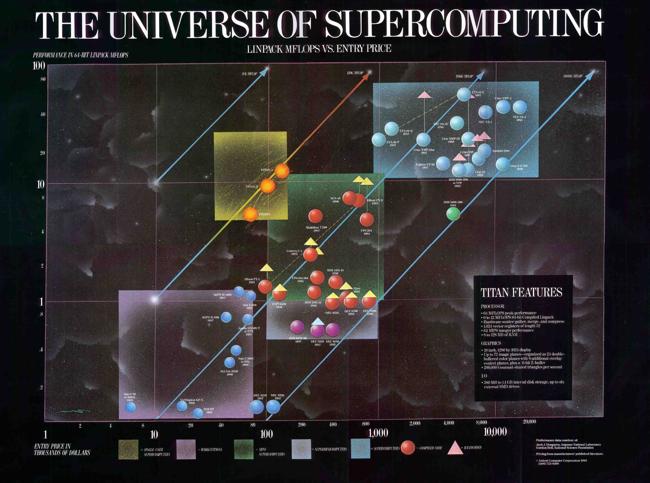

Introduced in 1985, the iPSC was available in three models, the d5, d6, and d7, for 5, 6, and 7-dimensional hypercubes. The d5 had 32 nodes in the one cabinet pictured here. The d6 had 64 nodes in two of these cabinets, and the d7 had 128 nodes in four cabinets. The list prices ranged from 170 thousand dollars to just over half a million.

Each node had an Intel 80286 CPU and an 80287 floating point coprocessor. These were the chips used in the IBM PC/AT, the "Advanced Technology" personal computer that was the fastest available at the time. There was 512 kB, that's half a megabyte, of memory. A custom chip handled the hypercube communication with the other nodes, via the backplane within a cabinet and via ethernet between cabinets.

It was possible to replace half the nodes with boards that were populated with memory chips, 4 megabytes per board, to give 4.5 megabytes per node. That would turn one cabinet into a d4 with a total of 72 megabytes of memory. A year later another variant was announced that had boards with vector floating point processors.

A front end computer called the Cube Manager was an Intel-built PC/AT microcomputer with 4 MBytes of RAM, a 140 MByte disk, and a VT100-compatible "glass teletype". The Manager had direct ethernet connections to all the nodes. We usually accessed it by remote login from workstations at our desks. The Manager ran XENIX, a derivative of UNIX System III. There were Fortran and C compilers. We would compile code, build an executable image, and download it to the Cube.

There was a minimal operating system on the cube, which handled message passing between nodes. Messages sent between nodes that were not directly connected in the hypercube interconnect would have to pass through intermediate nodes. We soon had library functions that included operations like global broadcast and global sum.
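To give a feel for multi-hop messages and a global sum on a hypercube, here is a hedged Python sketch. It does not reproduce the iPSC operating system or its library. The routing rule (correct the differing address bits from lowest to highest) and the exchange pattern for the sum are common textbook choices assumed for illustration, and the names `route` and `global_sum` are hypothetical.

```python
def route(src, dst):
    """Path from src to dst, correcting one differing address bit per hop."""
    path = [src]
    node = src
    diff = src ^ dst  # bits in which the two addresses differ
    bit = 0
    while diff:
        if diff & 1:
            node ^= 1 << bit  # hop to the neighbor across this dimension
            path.append(node)
        diff >>= 1
        bit += 1
    return path


def global_sum(values):
    """Simulate a global sum; values[i] is the number held by node i.

    In each of the log2(n) steps, every node exchanges its partial sum
    with its neighbor across one dimension. Every node ends with the total.
    """
    n = len(values)
    if n == 0 or n & (n - 1):
        raise ValueError("number of nodes must be a power of two")
    totals = list(values)
    step = 1
    while step < n:
        totals = [totals[i] + totals[i ^ step] for i in range(n)]
        step <<= 1
    return totals


print([format(node, "04b") for node in route(0b0000, 0b0101)])
print(global_sum([1, 2, 3, 4]))
```

A message needs one hop for each bit in which the source and destination addresses differ, so no message in a d-dimensional cube travels more than d hops. The sum likewise finishes in d exchange steps, using only the direct hypercube connections.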

The Lights

If you look carefully in the picture, you can see red and green LEDs on each board. These lights proved to be very useful. The green light was on when the node was doing useful computation and the red light was on when the node was waiting for something to do. You could watch the lights and get an idea of how a job was doing and even some idea of its efficiency.

One day I was watching the lights on the machine and I was able to say "There's something wrong with node 7. It's out of sync with the others." We removed the board and, sure enough, a jumper had been set incorrectly so the CPU's clock was operating at 2/3 its normal rate.

At my suggestion, a later model had a third, yellow, light that was on when the math coprocessor was being used. That way one could get an idea of arithmetic performance.

First Customer Ship

In the computer manufacturing business, the date of First Customer Ship, or FCS, is a Big Deal. It's like the birth of a child. Our first customer was the Computer Science Department at Yale University and, in fact, they had ordered a d7, the big, 128-node machine. When the scheduled FCS date grew near, the machine wasn't quite ready. So we had Bill Gropp, who was then a grad student at Yale and a leading researcher in their computer lab, fly out from Connecticut to Oregon and spend several days in our computer lab. So it was FCS all right, but of the customer, not of the equipment.

Star Wars

It was during this time that President Ronald Reagan's administration had proposed the Strategic Defense Initiative, SDI. The idea was to use both ground-based and space-based missiles to protect the US from attack by missiles from elsewhere. The proposal had come to be known by its detractors as the "Star Wars" system.

One of the defense contractors working on SDI believed that decentralized, parallel computing was the key to the command and control system. If it was decentralized, then it couldn't be knocked out with a single blow. They heard about our new machine and asked for a presentation. Rattner, I, and our head of marketing went to their offices near the Pentagon. We were ushered into a conference room with the biggest conference table I had ever seen. There were about 30 people around the table, half of them in military uniforms. The lights were dim because PowerPoint was happening.

I was about halfway through my presentation about how to use the iPSC when a young Air Force officer interrupts. He says, "Moler, Moler, I remember your name from someplace." I start to reply, "Well, I'm one of the authors of LINPACK and ..." He interrupts again, "No, I know that... It's something else." Pause. "Oh, yeh, nineteen dubious ways!" A few years earlier Charlie Van Loan and I had published "Nineteen dubious ways to compute the exponential of a matrix." It turns out that this officer had a Ph.D. in Mathematics and had been teaching control theory and systems theory at AFIT, the Air Force Institute of Technology in Ohio. So for the next few minutes we talked about eigenvalues and Jordan Canonical Forms while everybody else in the room rolled their eyes and looked at the ceiling.

Preview of part 2

In my next post, part 2, I will describe how this machine, the iPSC/1, influenced both MATLAB and the broader technical computing community.
