Optimization of HRTF Models with Deep Learning

A crash course in Spatial Audio

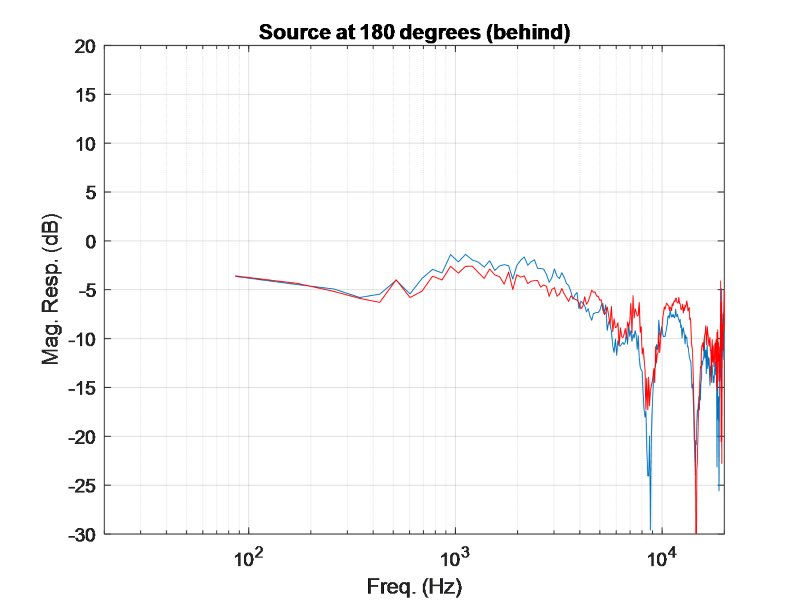

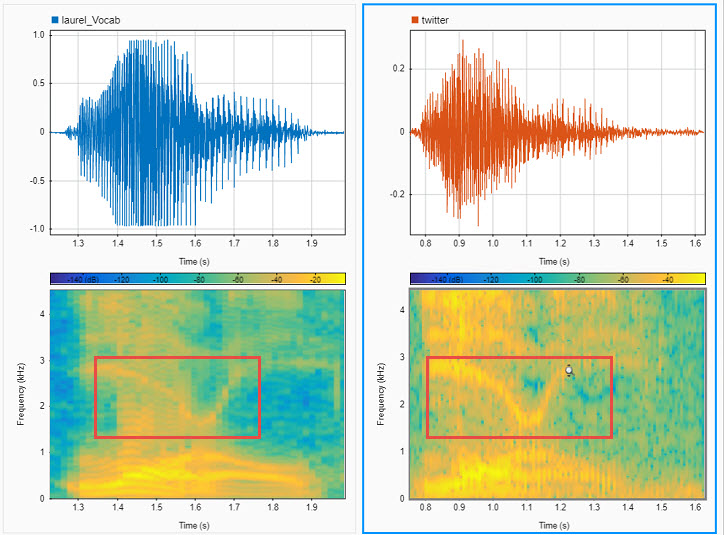

One important aspect of this research is the localization of sound. This topic is studied broadly for audio applications and concerns how we as humans hear and understand where sound is coming from. The details vary from person to person, but localization generally depends on the time delay between the two ears (below ~800 Hz) and on spectral details at each ear (above ~800 Hz). These are the primary cues we use to localize sound on a daily basis (see, for example, the cocktail party effect). The figures show the head-related impulse response (HRIR) measured in an anechoic chamber at the entrance of both ear canals of a human subject (left figure) and its Fourier-domain representation, the head-related transfer function (HRTF) (right figure), which shows how a human hears sound at both ears from a given location (e.g., 45 degrees to the left and front, at 0 degrees elevation) across the audible frequencies.


- Humans are very good at spotting discrepancies in sound, which will appear fake and lead to a less than genuine experience for the user.

- Head-related transfer functions differ from person to person at every angle.

- Each HRTF is direction dependent and varies with angle for any given person.
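To make the relationship between the HRIR and the HRTF concrete, here is a minimal Python sketch that takes the discrete Fourier transform of a toy impulse response for each ear and converts the result to a magnitude in dB. The impulse responses, the helper names `hrtf_from_hrir` and `magnitude_db`, and the 16-sample length are all made up for illustration; they are not measured data and not the code used in this research.

```python
import cmath
import math


def hrtf_from_hrir(hrir):
    """Return the DFT of an impulse response (naive O(N^2) DFT for clarity)."""
    n = len(hrir)
    return [
        sum(hrir[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def magnitude_db(spectrum, floor=1e-12):
    """Magnitude in dB, with a small floor to avoid log10(0)."""
    return [20.0 * math.log10(max(abs(h), floor)) for h in spectrum]


# Toy impulse responses (hypothetical, for illustration only): the right ear
# receives the same pulse as the left ear, delayed by 3 samples and halved.
hrir_left = [1.0] + [0.0] * 15
hrir_right = [0.0] * 3 + [0.5] + [0.0] * 12

hrtf_left = hrtf_from_hrir(hrir_left)
hrtf_right = hrtf_from_hrir(hrir_right)

left_db = magnitude_db(hrtf_left)    # flat spectrum for a unit pulse
right_db = magnitude_db(hrtf_right)  # same shape, lower level

print("left  ear, first bins (dB):", [round(v, 2) for v in left_db[:4]])
print("right ear, first bins (dB):", [round(v, 2) for v in right_db[:4]])
```

In this toy case the delay shows up only in the phase of the right-ear spectrum, while the attenuation shows up in its magnitude. For real 1024-bin data you would use an FFT routine rather than the naive loop above.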

Current state of the art vs. our new approach

The question you might be asking is “well, if everyone is different, why not just take the average of all the plots and create an average HRTF?” To that, I say “if you take the average, you’ll just have an average result.” Can deep learning help us improve on the average?

Prior to our research, the primary method for this analysis was principal component analysis (PCA) for HRTF modeling over a set of people. In the past, researchers have found that 5 or 6 components generalize as well as they can for a small test set of approximately 20 subjects ([1], [2], [3]), but we want to generalize over a larger dataset and a larger number of angles.

We are going to show a new approach using deep learning. We use an autoencoder to learn a lower-dimensional (latent) representation of HRTFs using nonlinear functions, and then use another network (a generalized regression neural network, or GRNN, in this case) to map angles to the latent representation. We start with an autoencoder with 1 hidden layer, and then optimize the number of hidden layers and the spread of the Gaussian RBF in the GRNN by Bayesian optimization against a validation metric (the log-spectral distortion metric). The next section shows the details of this new approach.

New approach

For our approach we use the IRCAM dataset, which consists of 49 subjects with 115 directions of sound per subject. We use an autoencoder model and compare it against the principal component analysis model (which is a linearly optimal solution conditioned on the number of PCs). We compare the results objectively using the log-spectral distortion metric.

Data setup

As I mentioned, the dataset has 49 subjects and 115 angles, and each HRTF is created by computing the FFT over 1024 frequency bins. Problem statement: can we find an HRTF representation for each angle that best fits all subjects for that angle? We’re essentially looking for the best possible generalization, over all subjects, for each of the 115 angles.

- We also used hyperparameter tuning (bayesopt) for the deep learning model.

- We take the entire HRTF dataset (1024 × 5635, i.e., 1024 frequency bins by 49 subjects × 115 angles) and train the autoencoder. The output of the hidden layer gives a compact representation of the input data. We extract that representation from the autoencoder and then use a generalized regression neural network (GRNN) to map the angles to it. We also add jitter (noise) for each angle and each subject. This helps the network generalize rather than overfit, since we aren’t looking for the perfect answer (this doesn’t exist!) but rather the generalization that best fits all test subjects.

- Bayesian optimization was used for:

  - The size of the autoencoder network (the number of layers)

  - The jitter/noise variance added to each angle

  - The RBF spread for the GRNN
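To illustrate what the RBF spread controls, here is a minimal Python sketch of GRNN-style prediction, which amounts to a Gaussian-kernel-weighted average of the training targets. Several things here are assumptions for illustration rather than details from this research: the function name `grnn_predict`, the kernel form exp(-d² / (2·spread²)), the use of plain Euclidean distance on (azimuth, elevation) in degrees (which ignores angle wrap-around), and the toy two-dimensional "latent" vectors.

```python
import math


def grnn_predict(query, train_inputs, train_targets, spread):
    """Gaussian-kernel-weighted average of training targets (GRNN-style)."""
    weights = []
    for x in train_inputs:
        sq_dist = sum((q - xi) ** 2 for q, xi in zip(query, x))
        weights.append(math.exp(-sq_dist / (2.0 * spread ** 2)))
    total = sum(weights)
    if total == 0.0:
        raise ValueError("all kernel weights underflowed; increase the spread")
    dim = len(train_targets[0])
    return [
        sum(w * t[d] for w, t in zip(weights, train_targets)) / total
        for d in range(dim)
    ]


# Hypothetical training set: (azimuth, elevation) in degrees mapped to a
# made-up 2-D latent code. Real latent codes would come from the autoencoder.
train_angles = [(0.0, 0.0), (45.0, 0.0), (90.0, 0.0)]
train_latents = [[0.0, 1.0], [1.0, 0.0], [2.0, -1.0]]

# A narrow spread reproduces the nearest training latent almost exactly;
# a wide spread blends all training latents toward their mean.
narrow = grnn_predict((0.0, 0.0), train_angles, train_latents, spread=5.0)
wide = grnn_predict((0.0, 0.0), train_angles, train_latents, spread=500.0)

print("narrow spread:", [round(v, 3) for v in narrow])
print("wide spread:  ", [round(v, 3) for v in wide])
```

This is why the spread is worth tuning: too small and the mapping memorizes individual training angles, too large and every angle collapses toward the same averaged latent code.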

Results

We optimized the Log-spectral distortion to determine the results:

Selection of PCA order (abscissa: number of PCs; ordinate: explained variance): (a) left ear, (b) right ear.

Example of the left-ear HRTF reconstruction comparison using AE-model (green) compared to true HRTF (blue). The PCA model is used as an anchor and is shown in red.
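For readers unfamiliar with the metric, here is a minimal Python sketch of one commonly used definition of log-spectral distortion: the root-mean-square, over frequency bins, of the dB difference between the true and reconstructed magnitude responses. The post does not spell out the exact formula used in this research, so treat this definition, the function name `log_spectral_distortion`, and the toy spectra as assumptions.

```python
import math


def log_spectral_distortion(h_true, h_est, floor=1e-12):
    """RMS over frequency bins of the dB magnitude difference (in dB)."""
    if len(h_true) != len(h_est) or not h_true:
        raise ValueError("spectra must be non-empty and of equal length")
    total = 0.0
    for a, b in zip(h_true, h_est):
        # Floor the magnitudes so that zeros do not break the logarithm.
        ratio = max(abs(a), floor) / max(abs(b), floor)
        total += (20.0 * math.log10(ratio)) ** 2
    return math.sqrt(total / len(h_true))


# Toy magnitude spectra (hypothetical values, for illustration only).
true_hrtf = [1.0, 0.8, 0.5, 0.25]
perfect = list(true_hrtf)
doubled = [2.0 * v for v in true_hrtf]  # every bin is 6.02 dB too high

lsd_perfect = log_spectral_distortion(true_hrtf, perfect)
lsd_doubled = log_spectral_distortion(true_hrtf, doubled)

print("LSD, perfect reconstruction:", lsd_perfect)
print("LSD, doubled magnitude (dB):", round(lsd_doubled, 2))
```

A lower value means the reconstruction is closer to the measured HRTF, with 0 dB meaning the magnitudes match in every bin.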

References

[1] D. Kistler and F. Wightman, “A model of head-related transfer functions based on principal components analysis and minimum-phase reconstruction,” J. Acoust. Soc. Amer., vol. 91(3), 1992, pp. 1637-1647.

[2] W. Martens, “Principal components analysis and resynthesis of spectral cues to perceived direction,” Proc. Intl. Comp. Mus. Conf., 1987, pp. 274-281.

[3] J. Sodnik, A. Umek, R. Susnik, G. Bobojevic, and S. Tomazic, “Representation of Head-related Transfer Functions with Principal Component Analysis,” Acoustics, Nov. 2004.
