Bias in Deep Learning Systems

A discussion of the film, Coded Bias

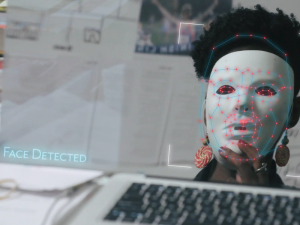

This post is from Heather Gorr (@heather.codes), recapping her experience watching Coded Bias, with a call to action for everyone reading. Coded Bias was recently nominated for the Critics' Choice Award for Best Science Documentary and is coming to Netflix on April 5, 2021.

AI can be wrong. We know this, some of us probably too well! But there are times when a misclassification can actually affect someone's life. We're not talking about a cat/dog misunderstanding or an unfortunate Netflix recommendation. An applicant can be wrongly overlooked for a job, or an innocent person can be accused of a crime. These are some of the examples and themes throughout the film Coded Bias, an acclaimed documentary highlighting Joy Buolamwini's discovery of bias in AI systems, including facial recognition and text models, and the implications of that bias. In her case, the facial recognition system she was working on did not recognize her… until she put on a white mask.

MathWorks recently hosted a public screening and a Q&A with the filmmaker, Shalini Kantayya, as part of our NeurIPS meetups (yes, a software company hosted a Sundance film screening!). Several hundred people from around the world joined us to learn about this important topic and engage in discussions. In this post, we'll summarize points from the film, discuss bias, and look at its impact on the deep learning community (and society). We'll also point to resources on how to help and learn more.

Viewing of the film “Coded Bias” (from Heather's desk) during our virtual NeurIPS meetup

What is "Bias"?

Maybe you've heard the term bias used both mathematically and in a social or general sense. The definitions are very similar. For example, the terms "signal bias" and "floating point bias" describe adding an offset (such as a constant value). In other words, mathematical bias is a displacement from a neutral position, just like the general meaning.


So, which meaning applies in the film? Both. Joy found that the data set used to train the facial recognition model was made up primarily of white males. When she broadened the study to investigate more commercial systems, she found that the data sets consisted of 80% lighter-skinned people. The algorithms misclassified the faces of darker-skinned women with an error rate of up to 37%. Lighter-skinned men, however, had error rates of no more than 1%. [1]


Let's think this through: the imbalanced data biased the algorithm. With far more examples of white faces to learn from, the model was trained to classify them much more accurately than darker faces. Furthermore, these algorithms are then used in law enforcement and other systems that are already biased against people with darker skin, so a biased algorithm compounds the existing bias.
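One way to surface this kind of disparity is to report the error rate per subgroup instead of a single overall number. The snippet below is a minimal sketch of that idea in Python; the labels, predictions, and group names are made up for illustration and are not data from the study, and `error_rate_by_group` is a hypothetical helper, not part of any library.

```python
from collections import defaultdict

def error_rate_by_group(y_true, y_pred, groups):
    """Return {group: fraction of misclassified samples} for each subgroup."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        if truth != pred:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}

# Toy, made-up data (an assumption for illustration only):
# 8 samples from group "A" and only 2 from group "B".
y_true = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]
y_pred = [1, 0, 1, 1, 0, 1, 0, 1, 0, 0]
groups = ["A"] * 8 + ["B"] * 2

# The aggregate error looks small...
overall_error = sum(t != p for t, p in zip(y_true, y_pred)) / len(y_true)
# ...but the breakdown shows that every error falls on the smaller group.
per_group = error_rate_by_group(y_true, y_pred, groups)

print(overall_error)  # 0.1
print(per_group)      # {'A': 0.0, 'B': 0.5}
```

In this toy case, a 10% overall error rate hides a 50% error rate for the underrepresented group, which is why an aggregate accuracy figure alone can be misleading.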

It's not just images; other types of data can also lead to bias. For example, many text and NLP models use large data sets that have been found to exhibit similar biases, reflecting the population from which the data are collected. [2]


Coded Bias highlighted many examples of harmful consequences, especially for civil rights. AI systems are used prominently by police departments, job application sites, and financial systems. At least half of U.S. states allow police to search their databases of driver's license photos for matches. As a result, innocent people have been arrested and their lives forever changed. The film discussed many examples of wrongful stops and arrests. One that sticks out is a 14-year-old boy who was stopped and searched by police due to a false match.

We could go on, but know that there's hope! As a result of Joy's research and advocacy, including her founding of the Algorithmic Justice League, several big tech companies stopped selling their tools to law enforcement and a number of U.S. states discontinued facial recognition programs.

What did we learn?

There are many lessons from the film, especially for those of us working in the deep learning field. One important point is to think about the training data! It's very popular to use transfer learning, adapting a model pretrained by researchers. But we should investigate the data a pretrained model was trained on before using it, especially depending on the type of system we're building.
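As a starting point for that kind of investigation, a quick tally of how each group is represented in the data can reveal obvious imbalance. The following is a minimal sketch, assuming per-sample group labels are available as metadata (often they are not, which is itself worth noting). The helper names, the group labels, and the 30% threshold are all hypothetical choices for illustration.

```python
from collections import Counter

def group_shares(labels):
    """Return {group: share of the data set} from per-sample group labels."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}

def underrepresented(shares, min_share):
    """List the groups whose share falls below `min_share`."""
    return sorted(g for g, s in shares.items() if s < min_share)

# Made-up metadata for 100 training images (an assumption, not real data).
metadata = ["lighter"] * 80 + ["darker"] * 20

shares = group_shares(metadata)
flagged = underrepresented(shares, min_share=0.3)  # threshold is arbitrary

print(shares)   # {'lighter': 0.8, 'darker': 0.2}
print(flagged)  # ['darker']
```

A check like this only catches imbalance along the attributes that were recorded, so it complements, rather than replaces, reading the data set's documentation and evaluating the model on each group.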

In addition, there is research in the community on interpretability and greater transparency in model building. Of course, these algorithms often do not take human or societal bias into account, but they may be helpful in identifying issues, patterns, and trends in the data and results. Algorithms such as LIME, Grad-CAM, and Occlusion Sensitivity can give you insight into a deep learning network and why the network chose a particular option. For example, the following images (from the recent post on deep learning visualizations) show results from Grad-CAM (gradient-weighted class activation mapping), highlighting the portions of images that influence the classification most strongly.

Results from Grad-CAM identifying portions of images which influence the classification.
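To give a feel for how such methods work, here is a simplified sketch of occlusion sensitivity in plain Python: mask one patch of the image at a time and record how much the model's score drops. This is an illustration under stated assumptions, not the implementation used to produce the images above. `toy_score` is a hypothetical stand-in for a real network's class score, and the patches do not overlap, whereas practical implementations usually use overlapping patches and a configurable stride.

```python
def occlusion_sensitivity(image, score_fn, patch=2, fill=0.0):
    """Mask each patch of `image` (a list of rows) in turn and record the
    resulting drop in score at every pixel of that patch."""
    height, width = len(image), len(image[0])
    baseline = score_fn(image)
    heatmap = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))
            occluded = [row[:] for row in image]  # copy so the input is untouched
            for r in rows:
                for c in cols:
                    occluded[r][c] = fill
            drop = baseline - score_fn(occluded)  # large drop = influential region
            for r in rows:
                for c in cols:
                    heatmap[r][c] = drop
    return heatmap

def toy_score(img):
    """Hypothetical 'model': mean brightness of the top-left 2x2 corner."""
    return (img[0][0] + img[0][1] + img[1][0] + img[1][1]) / 4.0

image = [[1.0] * 4 for _ in range(4)]
heatmap = occlusion_sensitivity(image, toy_score, patch=2)

print(heatmap[0][0])  # 1.0 (masking the top-left patch removes the whole score)
print(heatmap[3][3])  # 0.0 (the toy model ignores this region)
```

With a real classifier, `score_fn` would return the predicted probability for the class of interest, and the heatmap would show which regions of the image the prediction depends on.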

But we must also step back and think like human beings (keep the machines accountable!). We can be more aware of how models were trained and which data sets were used, and communicate these things in our own research. We can also be more aware of how the algorithms are being used IRL (in real life) and of the implications of our work.

What can we do?

The film concluded with a hopeful callout to what we can all do as individuals to help. Essentially, the themes are awareness, education, and action. Education is an important one for us, as so many of you are just getting started with deep learning and AI. And since you made it to the end of this post, you are now aware and have some background knowledge to take back into your life. That's the first step!


To do more, we can learn and take action through the links below.

References

[1] Joy Buolamwini and Timnit Gebru. 2018. “Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification.” Proceedings of Machine Learning Research, February 2018. http://proceedings.mlr.press/v81/buolamwini18a/buolamwini18a.pdf

[2] Emily M. Bender, Timnit Gebru, Angelina McMillan-Major, and Shmargaret Shmitchell. 2021. “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?” In Conference on Fairness, Accountability, and Transparency (FAccT '21), March 3–10, 2021, Virtual Event, Canada. ACM, New York, NY, USA, 14 pages. https://doi.org/10.1145/3442188.3445922 https://faculty.washington.edu/ebender/papers/Stochastic_Parrots.pdf

[3] Coded Bias Educational Discussion Guide. https://static1.squarespace.com/static/5eb23eee707c5356dea97eaa/t/5ffe4ff872238a5c80e4020b/1610502147093/CODED_Educational_Guide_Final.pdf

[4] https://mathworks.com/discovery/interpretability.html

[5] https://blogs.mathworks.com/deep-learning/2019/06/20/explainable-ai/
