Painting Music with Artificial Intelligence

Introduction

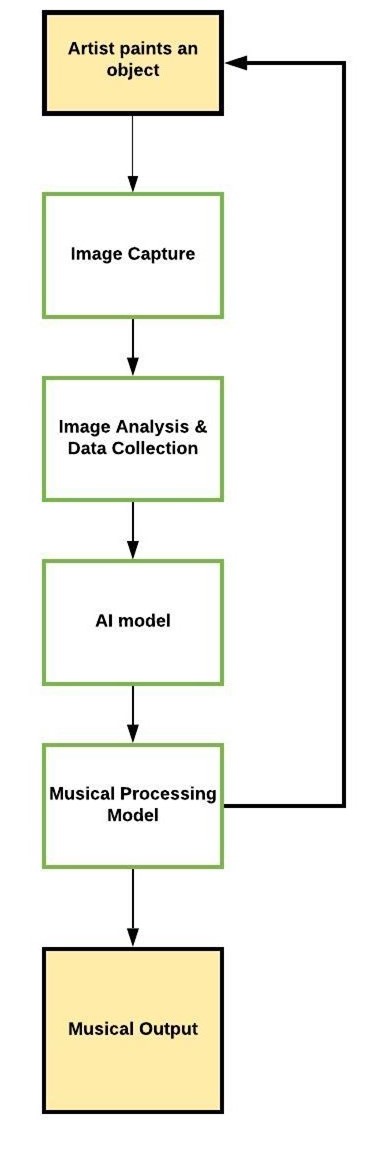

Painting Music is a project we are co-developing with visual artist Kate Steenhauer. Using image processing and Artificial Intelligence, we have developed a system that converts a live painted drawing, in real time, into a musical score that is unique to each performance (Figure 1 shows a photo taken during a live performance). The work began as part of an undergraduate Honours project focused on the question "Is AI good or bad?". Since then, the prototype system has been used in a performance, has been the subject of a 20-minute film, and has been published as a journal paper; we have also been invited to take part in several webinars (links are provided below). In this blog post, we give an insight into the processes within the system, the challenges we encountered during development, and how we translate visual inputs into audio outputs.

Figure 1: Photograph of Kate Steenhauer during Painting Music live performance - Jack Caven is seated behind the lighting.

Photo credit - Aberdeen University May Festival

Resources

| Painting Music on YouTube | Painting Music Blog | Webinar | Journal Paper |
| --- | --- | --- | --- |
|  | https://katesteenhauer.com/painting-music/ | Scroll to Episode 12 | Drawing: Research, Theory, Practice |

Overview

Image Capture and Practical Set up

In addition to developing the software, we had to overcome several practical challenges. Image capture was handled by a GoPro camera attached to a rig above the canvas (see images below).

Figure 3: Photograph of Kate Steenhauer and Jack Caven during live performance of Painting Music.

Photo credit - Aberdeen University May Festival

We communicated wirelessly with the GoPro camera from MATLAB, using libraries developed by the MATLAB community for this purpose. This allowed Jack to control the system at Kate's command as she painted each element. The lights on either side of the canvas were added after a rehearsal showed that shadows had an undesired effect on the captured images and on the resulting musical output.

Image Analysis and Dataset Collection

We acquired over 1300 images of individual elements from art pieces that Kate had previously painted. This gave us a sufficient dataset for training the Self-Organising Map (SOM). An example of an element and the artwork it comes from can be seen below.


Figure 4: Completed painting and example image element extraction. Image © Kate Steenhauer

The next step was to identify which features we could extract from the various objects and how we could correlate them with a musical output. The table below gives some examples of the correlations we made. Note that these attributes do not imply that art and music are actually related in this way; they are simply the mappings we defined between the two domains so that music could be created. There are also many aspects that we would like to include in the future, such as the symmetry of the painted object or the self-similarity of the painting itself. In principle it would also be possible to go from music to painting (i.e. to create a painting from musical elements), although this is an area for future research.

The code snippet below is from a feature extraction function that we developed to extract data from the images and build our training and test sets. A similar script was used during the real-time performance to extract data from the images the GoPro captured. First, the images are read using imread and cropped with imcrop to remove anything outside the canvas, before being passed to a further function for feature extraction. That function takes an options variable containing binary flags, each of which indicates whether a particular part of the image analysis should be carried out.

```matlab
if options.PixelUsedCount
    % Fraction of black and white pixels in the image.
    % imbinarize returns true (1) for bright pixels, so true means white.
    BW = imbinarize(thisImage);
    thisFeatureAnalysis.WhitePixels = sum(BW(:)) / numel(BW);
    thisFeatureAnalysis.BlackPixels = 1 - thisFeatureAnalysis.WhitePixels;
end

if options.FrequencyAnalysis
    % Undertake binned frequency analysis on the image.
    [thisFeatureAnalysis.FrequencyBins, thisFeatureAnalysis.RawFFT2] = FrequencyAnalysis(thisImage, NumberOfBins);
end

if options.BinGreyscale
    % Undertake binned greyscale analysis on the image.
    thisFeatureAnalysis.GreyLevels = imhist(thisImage(:), NumberOfBins);
    % Dividing by the sum makes each bin a fraction of the total pixel count.
    thisFeatureAnalysis.GreyLevels = thisFeatureAnalysis.GreyLevels / sum(thisFeatureAnalysis.GreyLevels);
end
```
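The FrequencyAnalysis helper called in the snippet is not shown in this post. As a rough illustration of what a binned frequency analysis can look like, the sketch below takes the 2-D FFT of a greyscale image and sums the magnitude spectrum into equal-width radial bins. This is only one possible approach under our own assumptions: the synthetic striped test image, the radial binning scheme and the variable names rawFFT2, binIndex and frequencyBins are all hypothetical and are not taken from the project code.

```matlab
% Sketch only: one possible binned frequency analysis (not the project's FrequencyAnalysis).
% thisImage and NumberOfBins mirror names used in the post; the image itself is synthetic.
NumberOfBins = 8;
[cols, rows] = meshgrid(1:64, 1:64);
thisImage = 0.5 + 0.5 * sin(2 * pi * cols / 8);   % vertical stripes, period of 8 pixels

% Magnitude spectrum with the zero-frequency term moved to the centre.
% The mean is removed first so the constant background does not dominate.
rawFFT2 = fftshift(abs(fft2(thisImage - mean(thisImage(:)))));

% Distance of every spectrum sample from the centre (radial spatial frequency).
[nRows, nCols] = size(rawFFT2);
centreRow = floor(nRows / 2) + 1;
centreCol = floor(nCols / 2) + 1;
radius = sqrt((rows - centreRow).^2 + (cols - centreCol).^2);

% Assign each sample to one of NumberOfBins equal-width radial bins.
maxRadius = max(radius(:));
binIndex = min(floor(radius / maxRadius * NumberOfBins) + 1, NumberOfBins);

% Sum the magnitude in each bin and normalise so the bins sum to 1.
frequencyBins = accumarray(binIndex(:), rawFFT2(:), [NumberOfBins 1]);
frequencyBins = frequencyBins / sum(frequencyBins);
```

Radial bins discard the orientation of the pattern and keep only how coarse or fine it is, which suits a feature meant to separate broad strokes from fine detail. Other binning schemes would be equally valid.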

AI Model

We used a Self-Organising Map (SOM) to analyse the input art attribute data and drive the music output. With Deep Learning Toolbox, the SOM can be trained, visualised and tested easily. The code snippet below shows the training process once the training data has been prepared. For the Painting Music application, we chose a 36-node SOM. A map of this size discriminates sufficiently between the painted elements while keeping the nodes from becoming too specialised in what they learn for each element. This helps when using the output of the SOM, because each node then corresponds to a range of equivalent musical elements. That range gives the process some choice when running in real time and prevents exactly the same notes being generated for similar objects.

```matlab
SOM = selforgmap([6 6]);
SOM = train(SOM, ImageFeatures);
view(SOM)
```

Using several of the interpretation tools provided in the toolbox, we could see the types of attributes that triggered each node, which allowed us to assign music attributes to each node. The figure below shows the sample hits for the training dataset, and therefore the nodes that can fire during a performance.

Figure 5: Sample hits for trained SOM on training dataset
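For readers unfamiliar with sample hits: each sample is assigned to the node whose weight vector is closest to it, and a node's hit count is the number of samples it wins. The sketch below computes this by hand from a weight matrix. It assumes one weight row per node (for a selforgmap network the trained weights are stored in SOM.IW{1}) and one sample per column, which is the layout the toolbox uses. The random weights, the random samples and the feature count of 5 are placeholders and not the project's data.

```matlab
% Sketch only: counting sample hits from a weight matrix.
% W stands in for trained weights (SOM.IW{1} for a selforgmap network).
% W and ImageFeatures are random placeholders, not the project's data.
rng(0);
numNodes = 36;                              % 6-by-6 map, as in the post
numFeatures = 5;                            % hypothetical number of features
W = rand(numNodes, numFeatures);            % one row of weights per node
ImageFeatures = rand(numFeatures, 200);     % one column per sample

numSamples = size(ImageFeatures, 2);
winningNode = zeros(1, numSamples);
for k = 1:numSamples
    % Squared Euclidean distance from this sample to every node's weights.
    difference = W - repmat(ImageFeatures(:, k)', numNodes, 1);
    distance = sum(difference.^2, 2);
    % The closest node wins the sample.
    [~, winningNode(k)] = min(distance);
end

% Number of samples won by each node.
hits = accumarray(winningNode(:), 1, [numNodes 1]);
```

With a trained network the same winning indices can be obtained more directly, for example with vec2ind(SOM(ImageFeatures)). The manual version is shown only to make the nearest-node rule explicit.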

To study further how the SOM differentiates painted objects, we passed two randomly selected painted objects into the system and observed the output of the SOM (see Table 1 below).

Table 1 - SOM Test with randomly selected objects


The results in Table 1 indicated to us that the SOM is able to differentiate the painted objects. We drew this conclusion from examining the SOM hits plots (shown in the bottom row of Table 1). Further in-depth analysis of the SOM weights showed that the painted object in the left column of Table 1 had a higher variance in frequency, but covered less white space, than the painted object in the right column.

Music Processing Model

Using the correlations established and the output node of the SOM, we were able to deliver an audible output through MIDI. The weights of the SOM node that fired were used to define a range of values for each attribute, which was then mapped to the equivalent musical attribute given in the table above. As an example, if an element painted by Kate is mapped to a node that has low-frequency features, these features drive the selection of notes that are also low in pitch. When creating a chord or harmony, a note that is lower in pitch is used for the same reason.
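A minimal sketch of this kind of mapping is shown below: a single normalised weight is scaled linearly onto a band of MIDI note numbers, and a note is then picked at random from that band. The weight value, the note limits and the width of the band are hypothetical choices made for illustration and are not the values used in the performance. Note numbers follow the common convention in which 60 is middle C (C4).

```matlab
% Sketch only: turning one node weight into a range of MIDI note numbers.
% The weight value, note limits and range width are hypothetical choices.
rng(1);
frequencyWeight = 0.2;     % assumed normalised to [0, 1]; low means low-frequency features
lowestNote = 36;           % C2 when 60 is taken as middle C (C4)
highestNote = 84;          % C6 under the same convention
rangeWidth = 12;           % one octave of choice around the centre note

% The centre of the range scales linearly with the weight.
centreNote = round(lowestNote + frequencyWeight * (highestNote - lowestNote));

% Clamp the candidate range to the allowed notes, then pick one at random.
candidateNotes = max(lowestNote, centreNote - rangeWidth / 2) : ...
                 min(highestNote, centreNote + rangeWidth / 2);
chosenNote = candidateNotes(randi(numel(candidateNotes)));
```

Selecting from a band of notes instead of a single value reflects the point made earlier that similar objects should not always produce exactly the same notes.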

MIDI allowed us to choose different instruments for melody generation, and to select the notes to play using numbers that relate to the attributes described in the table above and are defined by the winning node in the SOM. Initial simulations of the music production suggested that some additional structure was needed to guide the system towards more pleasant music. We decided to use a mathematical model known as a Markov chain for this purpose. By downloading musical scores in the form of MIDI files from sites such as Musescore.com, we were able to calculate a number of Markov chain models from music by The Beatles, Bach, and Beethoven, which we used to generate chords and harmonies.
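To illustrate the idea, the sketch below builds a first-order Markov chain from a short sequence of MIDI note numbers and uses it to generate a new sequence. It is a simplified illustration under our own assumptions: the training melody is made up, and the chain order and all variable names are hypothetical. Models for chords and harmonies would need a richer state than a single note.

```matlab
% Sketch only: a first-order Markov chain over MIDI note numbers.
% The training melody is a made-up placeholder, not data from the project.
rng(2);
melody = [60 62 64 62 60 64 65 67 65 64 62 60];

% Map each distinct note to a state index.
[states, ~, stateIndex] = unique(melody);
stateIndex = stateIndex(:);
numStates = numel(states);

% Count transitions between consecutive notes.
counts = accumarray([stateIndex(1:end-1), stateIndex(2:end)], 1, [numStates numStates]);

% Convert counts to row-wise probabilities; rows with no data become uniform.
rowTotals = sum(counts, 2);
transition = zeros(numStates);
for s = 1:numStates
    if rowTotals(s) > 0
        transition(s, :) = counts(s, :) / rowTotals(s);
    else
        transition(s, :) = 1 / numStates;
    end
end

% Generate a new sequence by sampling each next note from the current row.
numNotes = 16;
generated = zeros(1, numNotes);
current = stateIndex(1);
for n = 1:numNotes
    generated(n) = states(current);
    cumulative = cumsum(transition(current, :));
    cumulative(end) = 1;    % guard against rounding error in the final entry
    current = find(rand <= cumulative, 1, 'first');
end
```

Because each next note is drawn only from transitions seen in the training material, the generated sequence keeps the local flavour of the source while still varying from run to run.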

Recent Development

An issue we encountered during the performance was the effect that drying paint had on the feature extraction phase of the process. Drying causes the paint to change colour slightly, so when new images were compared with older images (within the element removal process), the system interpreted these colour changes as a new element. To prevent this, we recorded the areas in which elements had already been painted. When a new element is painted, we can then remove any shapes that are picked up only because of the paint drying. The code snippet below shows how we were able to do this.
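As an illustration of the masking idea only (this is not the code used in the performance), the sketch below compares two captures, flags the pixels that changed, and discards any change that falls inside a region already recorded as painted. The tiny synthetic images, the 0.5 paint threshold and the 0.1 change threshold are hypothetical values chosen to keep the example readable.

```matlab
% Sketch only: ignoring changes inside regions that were already painted.
% All arrays are small synthetic placeholders; both thresholds are hypothetical.
previousImage = ones(6, 6);             % white canvas: 1 = white, 0 = black
previousImage(2:3, 2:3) = 0.1;          % an element painted earlier (still wet)
paintedMask = previousImage < 0.5;      % record of where paint already exists

currentImage = previousImage;
currentImage(2:3, 2:3) = 0.25;          % the same element after drying, slightly lighter
currentImage(5, 4:6) = 0.1;             % a genuinely new stroke

% Pixels that changed noticeably between the two captures.
changeThreshold = 0.1;
changed = abs(currentImage - previousImage) > changeThreshold;

% Keep only the changes outside previously painted regions.
newElement = changed & ~paintedMask;

% Update the record so the new stroke is protected in later captures.
paintedMask = paintedMask | newElement;
```

One limitation of this simple version is that a new stroke painted on top of an existing element would also be discarded, so a real system would need some way to tell overpainting apart from drying.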


Conclusion and Future Development

The original aim of this project was to exhibit the power of AI. The performance encourages individuals to question their own stance towards AI (is AI good or bad?). We are now building a bespoke, transportable system so that Kate can carry out performances with the Painting Music system independently. Following this, we will look to enhance the musical output created by the system, to generate music in different genres (such as dance music or blues), and to develop solutions to problems we faced during the original performance. For example, one problem we are looking to solve is re-alignment of the canvas if the camera has accidentally been moved out of its original position. We strongly believe Painting Music has many applications, from the arts and entertainment (interactive personal music generation) to healthcare (arts therapy), and we will continue to develop the system and explore these applications.
