Explainable AI (XAI): Implement explainability in your work

This is the third post in a 3-post series on Explainable AI (XAI). In the first post, we showed examples and offered practical advice on how and when to use XAI techniques for computer vision tasks. In the second post, we offered words of caution and discussed their limitations. In this post, we conclude the series with a practical guide for getting started with explainability, including tips and examples. We focus on image classification tasks and offer four practical tips to help those of you who are ready to implement explainability in your work make the most of Explainable AI techniques.

TIP 1: Why is explainability important?

Before you dive into the numerous practical details related to using XAI techniques in your work, you should start by examining your reasons for using explainability. Explainability can help you better understand your model’s predictions and reveal inaccuracies in your model and bias in your data. In the second blog post of this series, we commented on the use of post-hoc XAI techniques to assist in diagnosing potential blunders that the deep learning model might be making; that is, producing results that are seemingly correct but reveal that the model was “looking at the wrong places.” A classic example in the literature demonstrated that a husky vs. wolf image classification algorithm was, in fact, a “snow detector.” (Fig. 1). Figure 1: Example of misclassification in a “husky vs. wolf” image classifier due to a spurious correlation between images of wolves and the presence of snow. The image on the right, which shows the result of the LIME post-hoc XAI technique, captures the classifier blunder. [Source]

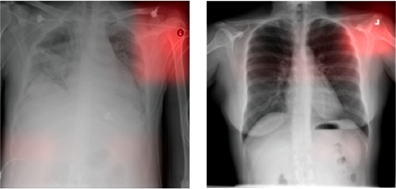

These are examples where there is not much at stake. But what about high-stakes areas (such as healthcare) and sensitive topics in AI (such as bias and fairness)? In the field of radiology, there is a famous example where models designed to identify pneumonia in chest X-rays learned to recognize a metallic marker placed by radiology technicians in the corner of the image (Fig. 2). This marker is typically used to indicate the source hospital where the image was taken. As a result, the models performed effectively when analyzing images from the hospital they were trained on, but struggled when presented with images from other hospitals that had different markers. And most importantly, explainable AI revealed that the models were not diagnosing pneumonia but classifying the presence of metallic markers.

Figure 1: Example of misclassification in a “husky vs. wolf” image classifier due to a spurious correlation between images of wolves and the presence of snow. The image on the right, which shows the result of the LIME post-hoc XAI technique, captures the classifier blunder. [Source]

These are examples where there is not much at stake. But what about high-stakes areas (such as healthcare) and sensitive topics in AI (such as bias and fairness)? In the field of radiology, there is a famous example where models designed to identify pneumonia in chest X-rays learned to recognize a metallic marker placed by radiology technicians in the corner of the image (Fig. 2). This marker is typically used to indicate the source hospital where the image was taken. As a result, the models performed effectively when analyzing images from the hospital they were trained on, but struggled when presented with images from other hospitals that had different markers. And most importantly, explainable AI revealed that the models were not diagnosing pneumonia but classifying the presence of metallic markers.

Figure 2: A deep learning model for detecting pneumonia: the CNN has learned to detect a metal token that radiology technicians place on the patient in the corner of the image field of view at the time they capture the image. When these strong features are correlated with disease prevalence, models can leverage them to indirectly predict disease. [Source]
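
The marker example suggests a simple sanity check you could run on any saliency map: measure how much of its total mass falls inside the region where the evidence should be (for a chest X-ray, roughly the lung fields) rather than elsewhere, such as a corner marker. The plain-Python sketch below is a heuristic for illustration, not a standard metric: the helper name, the tiny 4-by-4 map, and the region mask are all hypothetical, and in practice the map would come from a technique such as Grad-CAM or LIME.

```python
def saliency_fraction_in_region(heatmap, region_mask):
    """Share of total positive saliency that falls inside the expected region.

    heatmap:     2-D list of floats, e.g. a Grad-CAM or LIME map (hypothetical input).
    region_mask: 2-D list of booleans with the same shape; True marks pixels where
                 the evidence *should* be (e.g. the lung fields).
    """
    if len(heatmap) != len(region_mask) or any(
        len(h_row) != len(m_row) for h_row, m_row in zip(heatmap, region_mask)
    ):
        raise ValueError("heatmap and region_mask must have the same shape")
    total = inside = 0.0
    for h_row, m_row in zip(heatmap, region_mask):
        for value, in_region in zip(h_row, m_row):
            value = max(value, 0.0)  # only positive evidence counts
            total += value
            if in_region:
                inside += value
    return inside / total if total > 0 else 0.0


# Hypothetical 4x4 map: most of the heat sits in the top-right corner (a "marker").
heatmap = [
    [0.0, 0.0, 0.0, 0.9],
    [0.0, 0.1, 0.1, 0.0],
    [0.0, 0.1, 0.1, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
# Hypothetical region of interest: the central 2x2 block.
region = [[1 <= i <= 2 and 1 <= j <= 2 for j in range(4)] for i in range(4)]

share = saliency_fraction_in_region(heatmap, region)
print(f"Saliency inside region of interest: {share:.0%}")
```

A low share does not prove the model is wrong, but it flags images worth inspecting by eye; where to set an alarm threshold is a judgment call for your application.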

Example

This example shows MATLAB code to produce post-hoc explanations (using two popular post-hoc XAI techniques, Grad-CAM and image LIME) for a medical image classification task.
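
The MATLAB example relies on built-in functions for Grad-CAM. To show what Grad-CAM computes once the network has done the heavy lifting, here is a framework-free Python sketch of its final step. It assumes you have already extracted the activations A^k of a chosen convolutional layer and the gradients of the target class score y^c with respect to them; obtaining those from a real network is outside the scope of the sketch. Each channel k gets a weight alpha_k equal to its spatially averaged gradient, the weighted maps are summed, and a ReLU keeps only regions that increase the class score.

```python
def grad_cam(activations, gradients):
    """Combine a layer's feature maps into a Grad-CAM heat map.

    activations: K feature maps A^k from one convolutional layer, each H x W.
    gradients:   K maps of d(y^c)/d(A^k), same shapes, where y^c is the score
                 of the target class c.
    Returns an H x W heat map scaled to [0, 1].
    """
    height, width = len(activations[0]), len(activations[0][0])

    # Step 1: channel weight alpha_k = spatial average of that channel's gradient.
    alphas = [sum(sum(row) for row in grad_map) / (height * width) for grad_map in gradients]

    # Step 2: weighted sum of the feature maps.
    cam = [[0.0] * width for _ in range(height)]
    for alpha, fmap in zip(alphas, activations):
        for i in range(height):
            for j in range(width):
                cam[i][j] += alpha * fmap[i][j]

    # Step 3: ReLU keeps only regions that push the class score up.
    cam = [[max(v, 0.0) for v in row] for row in cam]

    # Step 4: normalize to [0, 1] for display (an all-zero map is left as is).
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


# Toy example: two 2x2 feature maps from a hypothetical layer.
activations = [
    [[1.0, 0.0], [0.0, 0.0]],  # channel 0 fires in the top-left corner
    [[0.0, 0.0], [0.0, 1.0]],  # channel 1 fires in the bottom-right corner
]
gradients = [
    [[1.0, 1.0], [1.0, 1.0]],      # channel 0 pushes the class score up
    [[-1.0, -1.0], [-1.0, -1.0]],  # channel 1 pushes it down
]
heat_map = grad_cam(activations, gradients)
print(heat_map)  # [[1.0, 0.0], [0.0, 0.0]]
```

In a real network the map is much coarser than the input image (it has the spatial size of the chosen layer), so it is upsampled before being overlaid on the image.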

TIP 2: Can you use an inherently explainable model?

Deep learning models are often the first choice to consider, but should they be? For problems involving (alphanumerical) tabular data, there are numerous interpretable ML techniques to choose from, including decision trees, linear regression, logistic regression, Generalized Linear Models (GLMs), and Generalized Additive Models (GAMs). In computer vision, however, the prevalence of deep learning architectures such as convolutional neural networks (CNNs) and, more recently, vision transformers makes it necessary to implement mechanisms for visualizing network predictions after the fact. In a landmark paper, Duke University researcher and professor Cynthia Rudin made a strong claim in favor of interpretable models (rather than post-hoc XAI techniques applied to an opaque model). Alas, prescribing the use of interpretable models and successfully building them are two very different things; for example, ProtoPNet, an interpretable model from Rudin’s research group, has achieved relatively modest success and popularity. In summary, from a pragmatic standpoint, you are better off using pretrained models such as the ones available here and dealing with their opaqueness through judicious use of post-hoc XAI techniques than embarking on a time-consuming research project.

Example

This MATLAB page provides a brief overview of interpretability and explainability, with links to many code examples.

TIP 3: How to choose the right explainability technique?

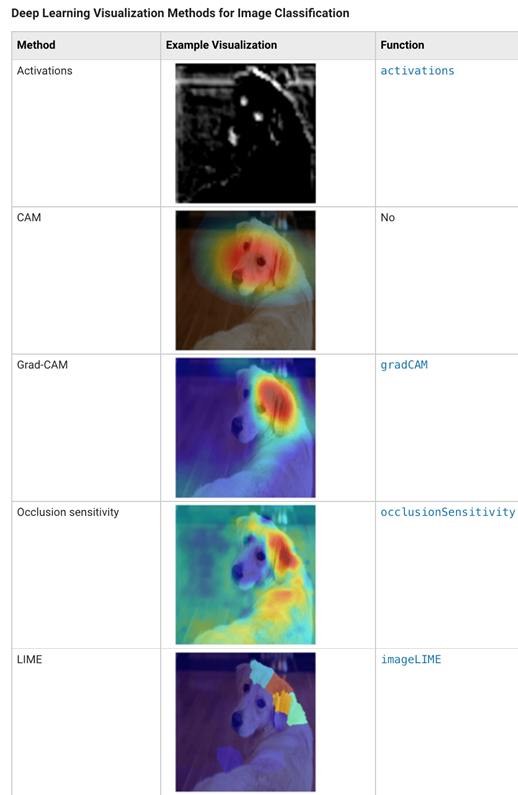

There are many post-hoc XAI techniques to choose from, and several of them, including Grad-CAM and LIME, have become available as MATLAB library functions. These are two of the most popular methods in an ever-growing field that offered more than 30 techniques as of December 2022. Consequently, selecting the best method can be intimidating at first. As with many other decisions in AI, I advise starting with the most popular, broadly available methods. Later, if you collect enough evidence (for example, by running experiments with users of the AI solution) that certain techniques work best in certain contexts, you can test and adopt other methods. In the case of image classification, the perceived added value of an XAI technique can also depend on how its results are displayed. Fig. 3 provides five examples of XAI results visualized with different techniques. The visual results differ significantly, which might lead different users to prefer different methods.

Figure 3: Examples of different post-hoc XAI techniques and associated visualization options. [Source]

Example

The GUI-based UNPIC app allows you to explore the predictions of an image classification model using several deep learning visualization and XAI techniques.
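
One practical criterion when choosing between the two popular methods is access: Grad-CAM needs the network's internal gradients, whereas LIME only queries the model's predictions. MATLAB's imageLIME handles segmentation, sampling, and fitting for you; to make the idea concrete, here is a small stdlib-only Python sketch under simplifying assumptions. The image is assumed to be already split into a few segments, every keep/mask pattern is enumerated instead of randomly sampled, and the proximity weight is an exponential kernel on the fraction of masked segments (a simplification of LIME's kernel). The `predict` callable, the toy model, and the kernel width are hypothetical.

```python
import itertools
import math


def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def lime_segment_importance(predict, num_segments, kernel_width=0.25):
    """LIME-style importance of each image segment for one class score.

    predict: callable that takes a tuple of 0/1 flags (1 = segment kept,
             0 = segment masked out) and returns the model's class score.
    Returns one coefficient per segment from a weighted linear surrogate.
    """
    rows, targets, weights = [], [], []
    # Enumerate every keep/mask pattern; real LIME samples patterns at random,
    # which is necessary once there are more than a handful of segments.
    for mask in itertools.product((0, 1), repeat=num_segments):
        removed = (num_segments - sum(mask)) / num_segments
        rows.append([1.0] + [float(z) for z in mask])  # leading 1.0 = intercept
        targets.append(predict(mask))
        # Proximity weight: patterns closer to the original image count more.
        weights.append(math.exp(-(removed ** 2) / kernel_width ** 2))

    # Weighted least squares via the normal equations (X^T W X) beta = X^T W y.
    p = num_segments + 1
    xtwx = [
        [sum(w * row[i] * row[j] for row, w in zip(rows, weights)) for j in range(p)]
        for i in range(p)
    ]
    xtwy = [
        sum(w * row[i] * y for row, y, w in zip(rows, targets, weights))
        for i in range(p)
    ]
    beta = solve_linear_system(xtwx, xtwy)
    return beta[1:]  # drop the intercept; the rest are per-segment importances


# Hypothetical "model" over 3 segments: segment 0 matters most, segment 1 not at all.
def toy_predict(mask):
    return 0.1 + 0.7 * mask[0] + 0.0 * mask[1] + 0.2 * mask[2]


print([round(c, 3) for c in lime_segment_importance(toy_predict, 3)])
```

Because the toy model is exactly linear in the segment flags, the surrogate recovers its weights; with a real network the surrogate is only a local approximation, which is one reason LIME explanations can vary between runs.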

TIP 4: Can you improve XAI results and make them more user-centric?

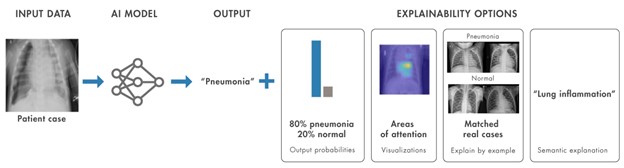

You can view explainable AI techniques as one option for interpreting the model’s decisions, alongside a range of other options (Fig. 4). For example, in medical image classification, an AI solution that predicts a medical condition from a patient’s chest X-ray might offer gradually increasing degrees of explainability: (1) no explainability information, just the outcome/prediction; (2) adding output probabilities for the most likely predictions, giving a measure of confidence associated with them; (3) adding visual saliency information describing the areas of the image driving the prediction; (4) combining predictions with results from a medical case retrieval (MCR) system and indicating matched real cases that could have influenced the prediction; and (5) adding computer-generated semantic explanations.

Figure 4: XAI as a gradual approach: in addition to the model’s prediction, different types of supporting information can be added to explain the decision. [Source]

Example

This example shows MATLAB code to produce post-hoc explanations (heat maps) and output probabilities for a food image classification task and demonstrates their usefulness in the evaluation of misclassification results.
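
Step (2) of the gradual approach, like the output probabilities in the food example, amounts to turning the network's raw class scores into probabilities and reporting the most likely classes. Many classification networks end in a softmax layer that does this for you; the plain-Python sketch below shows the same computation, assuming you have a list of raw scores (logits). The class names and scores are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw class scores (logits) into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_predictions(scores, class_names, k=3):
    """Return the k most likely (class name, probability) pairs, best first."""
    probs = softmax(scores)
    ranked = sorted(zip(class_names, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical logits from a food classifier for one image.
class_names = ["pizza", "sushi", "caesar_salad", "french_fries"]
scores = [2.0, 1.0, 0.1, -1.0]
for name, prob in top_k_predictions(scores, class_names, k=3):
    print(f"{name}: {prob:.1%}")
```

A large gap between the first and second probabilities suggests a confident prediction, while close values are a cue to inspect the heat map as well. Keep in mind that softmax outputs are not necessarily well calibrated, so treat them as relative rather than absolute confidence.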

A cheat sheet with practical suggestions, tips, and tricks

Using post-hoc XAI can help, but it shouldn’t be seen as a panacea. We hope the discussions, ideas, and suggestions in this blog series are useful for your professional needs. To conclude, we present a cheat sheet with some key suggestions for those who want to employ explainable AI in their work:

| # | Suggestion |
|---|---|
| 1 | Start with a clear understanding of the problem you are trying to solve and the specific reasons why you might want to use explainable AI models. |
| 2 | Whenever possible, use an inherently explainable model, such as a decision tree or a rule-based model. If you use a CNN instead, keep in mind that CNNs are amenable to post-hoc XAI techniques based on gradients and weight values. |
| 3 | Visualize and assess the model outputs. This can help you understand how the model makes decisions and identify any issues that may arise. |
| 4 | Consider providing end users with additional context around the decision-making process, such as feature importance or sensitivity analysis. This can help build trust in the model and increase transparency. |
| 5 | Finally, document the entire process, including the data used, the model architecture, and the methods used to evaluate the model's performance. This will ensure reproducibility and allow others to verify your results. |
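
Cheat-sheet items 2 and 4 meet in inherently explainable models. For logistic regression, one of the interpretable techniques listed in TIP 2, feature importance for a single prediction can be read straight off the model, because the log-odds are a sum of per-feature terms. The plain-Python sketch below assumes a model that has already been trained; the weights, bias, and feature names are hypothetical.

```python
import math


def explain_logistic_prediction(weights, bias, features, feature_names):
    """Score one example with a trained logistic regression and itemize the log-odds.

    Because the log-odds are bias + sum(weight * value), each weight * value term
    is that feature's exact additive contribution for this particular example.
    """
    contributions = {
        name: w * x for name, w, x in zip(feature_names, weights, features)
    }
    log_odds = bias + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))  # logistic (sigmoid) function
    # List the features with the largest absolute effect first.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return probability, ranked


# Hypothetical trained model with three tabular features.
prob, ranked = explain_logistic_prediction(
    weights=[0.8, -1.5, 0.05],
    bias=-0.5,
    features=[2.0, 1.0, 10.0],
    feature_names=["feature_a", "feature_b", "feature_c"],
)
print(f"P(positive class) = {prob:.3f}")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.2f} to the log-odds")
```

Comparing raw weights across features is only meaningful when the inputs share a scale; the per-example contributions sidestep that pitfall because they are all expressed in log-odds units.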

Read more about it:

- Christoph Molnar’s book “Interpretable Machine Learning” (available here) is an excellent reference to the vast topic of interpretable/explainable AI.

- This 2022 paper by Soltani, Kaufman, and Pazzani provides an example of ongoing research on shifting the focus of XAI explanations toward user-centric (rather than developer-centric) explanations.

- The 2021 blog post “A Visual History of Interpretation for Image Recognition,” by Ali Abdalla, provides a richly illustrated introduction to the most popular post-hoc XAI techniques, along with historical context for their development.
