Tips on Accelerating Deep Learning Training

Transfer Learning

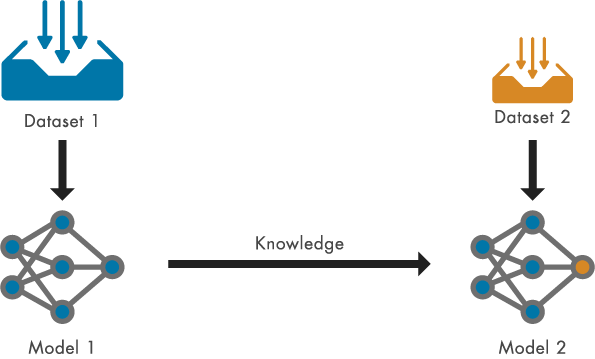

Transfer learning is a deep learning approach in which a model that has been trained for one task is used as a starting point for a model that performs a similar task. Transfer learning is useful for tasks for which a variety of pretrained models exist, such as computer vision and natural language processing. For computer vision, many popular convolutional neural networks (CNNs) are pretrained on a subset of the ImageNet dataset that spans a thousand classes of images; the full dataset contains over 14 million images. Updating and retraining a network with transfer learning is usually much faster and easier than training a network from scratch.

Figure: Transfer knowledge from a pretrained model to another model that can be trained faster with less training data.

To get a pretrained model, you can explore MATLAB Deep Learning Model Hub to access models by category and get tips on choosing a model. You can also get pretrained networks from external platforms. You can convert a model from TensorFlow™, PyTorch®, or ONNX™ to a MATLAB model by using an import function, such as the importNetworkFromTensorFlow function.
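The import route described above can be sketched as follows. This is a minimal illustration, not a definitive recipe: the file and folder names are hypothetical placeholders, and each import function typically requires its corresponding converter support package to be installed.

```matlab
% Minimal sketch: import a model from an external platform.
% ASSUMPTION: "myModel.onnx" is a hypothetical file name; replace it with
% the path to your own exported model.
modelFile = "myModel.onnx";

if isfile(modelFile)
    % Returns a dlnetwork object that can be used with trainnet.
    net = importNetworkFromONNX(modelFile);
    summary(net)   % Print a short summary of the imported network
else
    fprintf("Model file %s not found; nothing imported.\n", modelFile);
end

% The other import functions follow the same pattern (hypothetical paths):
% net = importNetworkFromTensorFlow("mySavedModelFolder");
% net = importNetworkFromPyTorch("myTracedModel.pt");
```

Depending on the source model, the imported network might need extra steps (for example, adding an input layer) before it is ready for training.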

In R2024a, the imagePretrainedNetwork function was introduced and you can use it to load most pretrained image models. This function loads a pretrained neural network and optionally adapts the network architecture for transfer learning and fine-tuning workflows. For an example, see Retrain Neural Network to Classify New Images.

net = imagePretrainedNetwork("squeezenet",NumClasses=5);
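To show where this call fits in a complete workflow, here is a hedged end-to-end sketch of retraining the adapted network. It assumes Deep Learning Toolbox R2024a or later, that the SqueezeNet weights are available in your installation, and that the small five-class MerchData.zip sample image set used in MathWorks examples is on the MATLAB path. The hyperparameter values are illustrative, not tuned recommendations.

```matlab
% Minimal transfer learning sketch (illustrative settings only).
% ASSUMPTION: MerchData.zip (a small 5-class sample image set) is on the path.
unzip("MerchData.zip");
imds = imageDatastore("MerchData", ...
    IncludeSubfolders=true, ...
    LabelSource="foldernames");
[imdsTrain, imdsVal] = splitEachLabel(imds, 0.7, "randomized");

% Load the pretrained network and adapt its head to the new classes.
numClasses = numel(categories(imds.Labels));
net = imagePretrainedNetwork("squeezenet", NumClasses=numClasses);

% Resize images on the fly to the input size the network expects.
inputSize = net.Layers(1).InputSize;
augTrain = augmentedImageDatastore(inputSize(1:2), imdsTrain);
augVal = augmentedImageDatastore(inputSize(1:2), imdsVal);

% A small learning rate keeps the pretrained weights from changing too fast.
options = trainingOptions("adam", ...
    InitialLearnRate=1e-4, ...
    MaxEpochs=5, ...
    MiniBatchSize=16, ...
    ValidationData=augVal, ...
    ValidationFrequency=5, ...
    Metrics="accuracy", ...
    Verbose=true);

% trainnet uses a GPU automatically if one is available.
net = trainnet(augTrain, net, "crossentropy", options);
```

Because only a few epochs on a small dataset are needed, this kind of retraining usually finishes far sooner than training the same architecture from scratch.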

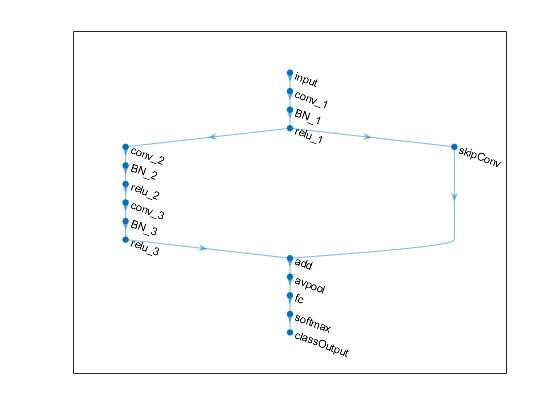

You can also prepare networks for transfer learning interactively using the Deep Network Designer app. Load a pretrained built-in model or import a model from PyTorch or TensorFlow, and then modify the last learnable layer of the model with the app. This workflow is shown in the following animated figure and described in detail in the example Prepare Network for Transfer Learning Using Deep Network Designer.

Animated Figure: Interactive transfer learning using the Deep Network Designer app.

GPUs and Parallel Computing

High-performance GPUs have a parallel architecture that is efficient for deep learning, as deep learning algorithms are inherently parallel. When GPUs are combined with clusters or cloud computing, training time for deep neural networks can be significantly reduced. Use the trainnet function to train networks; it uses a GPU if one is available. For more information on how to take advantage of GPUs for training, see Scale Up Deep Learning in Parallel, on GPUs, and in the Cloud.

The following tips will help you time your code when working with GPUs:

- Consider “warming up” your GPUs. Your code will run slower in the first 3-4 runs. So, measure the training time (see gputimeit) after the initial runs.

- Don’t forget synchronization when working with GPUs. See the wait(gpuDevice) function to learn how to synchronize GPUs.
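The two timing tips above can be sketched as follows. This is a minimal illustration that assumes Parallel Computing Toolbox and a supported GPU; the matrix multiplication is only a stand-in workload, and the matrix size and number of warm-up runs are arbitrary choices.

```matlab
% Minimal GPU timing sketch: warm up, synchronize, then measure.
if canUseGPU
    gpu = gpuDevice;                        % Handle to the selected GPU
    A = rand(4000, "single", "gpuArray");   % Stand-in workload data
    matmul = @() A * A;

    % Warm-up runs: the first few executions include one-time overhead.
    for k = 1:4
        C = matmul();
    end
    wait(gpu);                              % Make sure warm-up has finished

    % Manual timing: GPU calls are asynchronous, so synchronize before toc.
    tic
    C = matmul();
    wait(gpu);
    tManual = toc;

    % gputimeit runs the function several times and synchronizes for you.
    tAuto = gputimeit(matmul);

    fprintf("tic/toc with wait: %.4f s, gputimeit: %.4f s\n", tManual, tAuto);
else
    disp("No supported GPU found; skipping the GPU timing sketch.");
end
```

Without the wait call, toc can return before the GPU has finished, which makes the code look faster than it is.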

Code Profiling

Before trying to speed up training by editing your code, use code profiling to uncover the slowest parts of your code. Profiling is a way to measure the time it takes to run the code and identify which functions and lines of code are consuming the most time. You can profile your code interactively with the MATLAB Profiler or programmatically with the profile function. Here, we have profiled the training in the example Train Network on Image and Feature Data by using the profile function.

profile on -historysize 9000000
net = trainnet(dsTrain,net,"crossentropy",options);
profile viewer

The profiling results are shown in the following figure. Note that this specific example trains a very small network and training is very fast. But we can still observe that a portion of the training time is taken by reading the training data from a datastore. When training much more complex networks with larger datasets, profiling can reveal more opportunities for code optimization. For example, you might observe that preprocessing the input data before training (rather than repeatedly preprocessing data at runtime) can save time, or that too much time is spent on validation.

Figure: Code profiler results for training a small neural network.
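If you prefer to inspect profiling results in code rather than in the Profiler window, a sketch like the following might help. The loop is only a stand-in workload so that the snippet runs on its own; in practice you would replace it with your trainnet call. Printing the five most time-consuming functions is an arbitrary choice.

```matlab
% Minimal sketch: collect profiling data and list the slowest functions.
profile clear
profile on

% Stand-in workload (replace with your training call), for example:
% net = trainnet(dsTrain,net,"crossentropy",options);
for k = 1:200
    s = std(rand(1e5, 1));
end

profile off

% Retrieve the collected statistics as a structure.
p = profile("info");
ft = p.FunctionTable;

% Sort functions by total time and print the top entries.
[~, order] = sort([ft.TotalTime], "descend");
topN = min(5, numel(order));
for k = 1:topN
    entry = ft(order(k));
    fprintf("%-50s %8.3f s\n", entry.FunctionName, entry.TotalTime);
end
```

A summary like this makes it easy to compare runs, for example before and after moving preprocessing out of the training loop.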

Monitoring Overhead

When you train a deep neural network, you can visualize the training progress. This is very helpful for assessing the performance of your network and whether it meets your requirements. However, training visualizations add processing overhead and increase training time. If you have completed your assessment, for example by plotting the training progress for several epochs, consider turning off the visualizations and inspecting the plots after training completes.

Figure: Training progress of a CNN for regression.

Another way to monitor the training progress is by using the verbose output of the trainnet function. Displaying the training progress at the command line has less negative impact on the training time than the visualization of the training progress. However, to decrease training time you can turn off the verbose output or decrease the frequency with which the progress is displayed.
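The monitoring choices discussed in this section map onto a few training options. The sketch below contrasts three configurations; the solver, epoch counts, and verbose frequency are illustrative values chosen for this example, not recommendations.

```matlab
% Minimal sketch: trade monitoring detail for training speed.

% Short exploratory run: live plot plus command-line output.
optsExplore = trainingOptions("adam", ...
    MaxEpochs=3, ...
    Plots="training-progress", ...
    Verbose=true);

% Longer run: no live plot, and print progress less often.
optsLong = trainingOptions("adam", ...
    MaxEpochs=30, ...
    Plots="none", ...
    Verbose=true, ...
    VerboseFrequency=500);

% Fastest option: no plot and no command-line output.
optsQuiet = trainingOptions("adam", ...
    MaxEpochs=30, ...
    Plots="none", ...
    Verbose=false);

% To inspect the plot after training, request the second output of
% trainnet and display it afterward (data, net, and lossFcn are
% placeholders for your own variables):
% [net, info] = trainnet(data, net, lossFcn, optsLong);
% show(info)
```

A reasonable pattern is to start with the exploratory options, confirm that training behaves as expected, and then switch to one of the quieter configurations for the full run.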

Conclusion

In this blog post, we have provided a few tips on how to accelerate training time for deep neural networks. The Deep Learning Toolbox documentation provides many more resources. If you are not sure how to get started with training acceleration or need specific advice, comment below. Or leave a comment to share with the AI blog community your tips and tricks for accelerating deep learning training in MATLAB.