The solvers function from Optimization toolbox is one of my favourite enhancements of R2022b because it helps improve my knowledge of which algorithms can solve my problems. Solving Rosenbrock with the default algorithm

$ f(x)=100\left ( x_2 - x_1^2\right )^2 + (1-x_1)^2 $

The minimum is at (1,1) which is inside a long, narrow and flat valley. Many Optimization solvers find the valley rather easily but struggle with finding the global mimimum within it.

rosen = @(x,y) 100*(y - x.^2).^2 + (1 - x).^2;

fcontour(rosen,[-0.5,2.0],LevelStep=10)

title("Contour plot of Rosenbrock's function. Global minimum shown in red")

% Set up a couple of Optimization variables and apply boundaries

x = optimvar("x",LowerBound=-3,UpperBound=3);

y = optimvar("y",LowerBound = 0,UpperBound=9);

%Define the objective function

obj = 100*(y - x^2)^2 + (1 - x)^2;

%Set up the Optimization problem

prob = optimproblem(Objective=obj);

[sol,fval,exitflag,solution] = solve(prob,x0)

Solving problem using lsqnonlin.

Local minimum possible.

lsqnonlin stopped because the final change in the sum of squares relative to

its initial value is less than the value of the function tolerance.

<stopping criteria details>

sol =

x: 1.0000

y: 1.0000

fval = 4.9503e-16

exitflag =

FunctionChangeBelowTolerance

MATLAB analysed the problem and decided to use lsqnonlin as the solver. This turned out rather well for us as it went on to find the minimum without any difficulty. There were only 27 function evaluations. What other algorithms could solve this problem?

You might ask yourself, however, out of all of the Optimization solvers in both Optimization Toolbox and Global Optimization Toolbox, which of them are capable of solving this problem as well? This is what the solvers function does for us. Give it an Optimization problem and it will return the default solver that MATLAB would choose for that problem. [autosolver,allsolvers] = solvers(prob);

fprintf("The default solver for this problem is %s\n",autosolver)

The default solver for this problem is lsqnonlin

It also returns all other solvers that could tackle the input problem.

allsolvers'

"lsqnonlin"

"lsqcurvefit"

"fmincon"

"ga"

"patternsea…

"surrogateopt"

"particlesw…

"simulannea…

"gamultiobj"

"paretosearch"

Let's explicity instruct MATLAB to use one of these: fmincon [sol,fval,exitflag,solution] = solve(prob,x0,Solver="fmincon")

Solving problem using fmincon.

Local minimum found that satisfies the constraints.

Optimization completed because the objective function is non-decreasing in

feasible directions, to within the value of the optimality tolerance,

and constraints are satisfied to within the value of the constraint tolerance.

<stopping criteria details>

sol =

x: 1.0000

y: 1.0000

fval = 1.9355e-13

exitflag =

OptimalSolution

So fmincon can and did solve the problem but it had to work much harder with 71 function evaluations. Just because the solvers function determines that an algorithm is capable of tackling a problem doesn't necessarily mean its a good fit. An example of a really bad fit for this problem is Simulated Annealing. [sol,fval,exitflag,solution]= solve(prob,x0,Solver="simulannealbnd")

Solving problem using simulannealbnd.

Optimization terminated: change in best function value less than options.FunctionTolerance.

sol =

x: 2.8612

y: 8.1900

fval = 3.4653

exitflag =

SolverConvergedSuccessfully

solution =

iterations: 2247

funccount: 2262

message: 'Optimization terminated: change in best function value less than options.FunctionTolerance.'

rngstate: [1×1 struct]

problemtype: 'boundconstraints'

temperature: [2×1 double]

totaltime: 0.2231

solver: 'simulannealbnd'

Yikes! That's rather far from the actual solution and we've already burned though over a thousand function evaluations. Perhaps simulated annealing isn't such a good idea for this function after all.

Let's try every solver that's valid for this problem using default settings for each one

variableNames = ["x solution","y solution","objective value","function evaluations"];

results = array2table(NaN(numel(allsolvers), numel(variableNames)));

results.Properties.VariableNames = variableNames;

results.Properties.RowNames = allsolvers;

for solverNum = 1:numel(allsolvers)

solver = allsolvers(solverNum);

% I want each solver to be quiet while it works.

options=optimoptions(solver,Display="None");

if solver=="surrogateopt"

options=optimoptions(options,PlotFcn=[]);

[sol,fval,exitflag,solution] = solve(prob,x0,Solver=solver,Options=options);

%Some solvers have solution.funcCount, others have solution.funccount

if isfield(solution,'funcCount')

funcount = solution.funcCount;

funcount = solution.funccount;

results.("x solution")(solverNum) = sol.x;

results.("y solution")(solverNum) = sol.y;

results.("objective value")(solverNum) = fval;

results.("function evaluations")(solverNum) = funcount;

results

results = 10×4 table

| | x solution | y solution | objective value | function evaluations |

|---|

| 1 lsqnonlin | 1 | 1 | 0 | 27 |

|---|

| 2 lsqcurvefit | 1 | 1 | 0 | 27 |

|---|

| 3 fmincon | 1 | 1 | 0 | 71 |

|---|

| 4 ga | 0.9443 | 0.8904 | 0.0033 | 9453 |

|---|

| 5 patternsearch | 1.8658 | 3.4852 | 0.7511 | 734 |

|---|

| 6 surrogateopt | 0.9885 | 0.9768 | 0.0001 | 200 |

|---|

| 7 particleswarm | 0.9996 | 0.9993 | 0 | 5200 |

|---|

| 8 simulannealbnd | 2.3423 | 5.4883 | 1.8021 | 2151 |

|---|

| 9 gamultiobj | 1.0121 | 1.0244 | 0.0001 | 5100 |

|---|

| 10 paretosearch | 0.9497 | 0.9088 | 0.0074 | 9000 |

|---|

It seems that MATLAB's default choice for this problem, lsqnonlin, was a good one. Indeed it was the best one. That's not always the case though.

Which algorithm is best for Rastigrin's function?

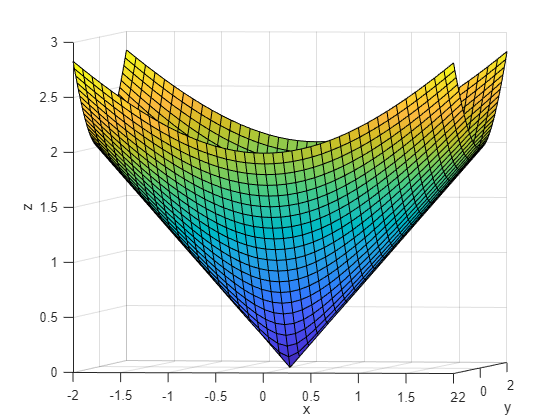

Consider Rastigrin's function. This is another classic test function in numerical optimization with many local minima and a global minimum at (0,0): ras = @(x, y) 20 + x.^2 + y.^2 - 10*(cos(2*pi*x) + cos(2*pi*y));

Plotting it shows just how many local minima there are

fsurf(ras,[-3 3],"ShowContours","on")

title("rastriginsfcn(x,y)")

Usually you don't know the location of the global minimum of your objective function. To show how the solvers look for a global solution, this example starts all the solvers around the point [2,3], which is far from the global minimum. It also uses finite bounds of -70 to 130 for each variable

x = optimvar("x","LowerBound",-70,"UpperBound",130);

y = optimvar("y","LowerBound",-70,"UpperBound",130);

prob = optimproblem("Objective",ras(x,y));

This time, MATLAB chooses fmincon as the default solver which gets stuck in one of the local minima solve(prob,x0)

Solving problem using fmincon.

Local minimum found that satisfies the constraints.

Optimization completed because the objective function is non-decreasing in

feasible directions, to within the value of the optimality tolerance,

and constraints are satisfied to within the value of the constraint tolerance.

<stopping criteria details>

ans =

x: -1.9899

y: -2.9849

As before, we use solvers to get the list of everything that could tackle this problem [autosolver,allsolvers] = solvers(prob)

"fmincon" "ga" "patternse… "surrogate… "particles… "simulannea… "gamultiobj" "paretosea…

variableNames = ["x","y","objective value","function evaluations"];

results = array2table(NaN(numel(allsolvers), numel(variableNames)));

results.Properties.VariableNames = variableNames;

results.Properties.RowNames = allsolvers;

for solverNum = 1:numel(allsolvers)

solver = allsolvers(solverNum);

% I want each solver to be quiet while it works.

options=optimoptions(solver,Display="None");

if solver=="surrogateopt"

options=optimoptions(options,PlotFcn=[]);

[sol,fval,exitflag,solution] = solve(prob,x0,Solver=solver,Options=options);

%Some solvers have solution.funcCount, others have solution.funccount

if isfield(solution,'funcCount')

funcount = solution.funcCount;

funcount = solution.funccount;

results.("x")(solverNum) = sol.x;

results.("y")(solverNum) = sol.y;

results.("objective value")(solverNum) = fval;

results.("function evaluations")(solverNum) = funcount;

results

results = 8×4 table

| | x | y | objective value | function evaluations |

|---|

| 1 fmincon | -1.9899 | -2.9849 | 12.9344 | 39 |

|---|

| 2 ga | 1.9899 | 2.9849 | 12.9344 | 3343 |

|---|

| 3 patternsearch | 0 | 0 | 0 | 99 |

|---|

| 4 surrogateopt | -0.9982 | 0.0094 | 1.0146 | 200 |

|---|

| 5 particleswarm | 0.9950 | 0 | 0.9950 | 1500 |

|---|

| 6 simulannealbnd | -0 | 0 | 0 | 2864 |

|---|

| 7 gamultiobj | -0 | 0 | 0 | 5100 |

|---|

| 8 paretosearch | -0.9950 | 0 | 0.9950 | 7153 |

|---|

It's worth bearing in mind that these 'solver sweeps' only consider the default settings for each solver. Each solver has a range of options that can improve performance and solution quality that haven't been considered here but we have made a good start in exploring the algorithms we can bring to bear on our problem.

To learn more about the Optimization algorithms available in MATLAB, take a look at the documentation.

- This page characteristics of the Global Optimization Toolbox solvers, and provides some recommendations of what to try in different scenarios.

- This page is a decision table for the Optimization Toolbox solvers.

Cleve’s Corner: Cleve Moler on Mathematics and Computing

Cleve’s Corner: Cleve Moler on Mathematics and Computing The MATLAB Blog

The MATLAB Blog Guy on Simulink

Guy on Simulink MATLAB Community

MATLAB Community Artificial Intelligence

Artificial Intelligence Developer Zone

Developer Zone Stuart’s MATLAB Videos

Stuart’s MATLAB Videos Behind the Headlines

Behind the Headlines File Exchange Pick of the Week

File Exchange Pick of the Week Hans on IoT

Hans on IoT Student Lounge

Student Lounge MATLAB ユーザーコミュニティー

MATLAB ユーザーコミュニティー Startups, Accelerators, & Entrepreneurs

Startups, Accelerators, & Entrepreneurs Autonomous Systems

Autonomous Systems Quantitative Finance

Quantitative Finance MATLAB Graphics and App Building

MATLAB Graphics and App Building

댓글

댓글을 남기려면 링크 를 클릭하여 MathWorks 계정에 로그인하거나 계정을 새로 만드십시오.