Paged Matrix Functions in MATLAB (2024 edition)

Back in 2021, Loren Shure posted an article that introduced the first page-wise matrix functions in MATLAB: A page-wise matrix multiply pagemtimes, along with page-wise transpose pagetranspose, and complex conjugate transpose pagectranspose; all of which were added to MATLAB R2020b.

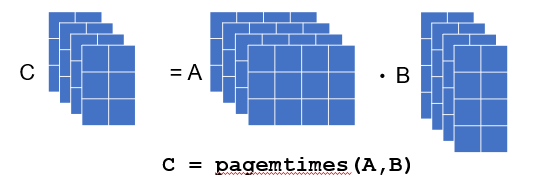

The following diagram is helpful to visualize what's going on in the 3D case. Each 3D array is considered as a set of matrices (or pages)

A demo I like to show is this one. Image that A and B are stacks of 100,000 matrices, each of size 3x3. We want to multiply each pair together to form the stack C. Here's the old way of doing this using a loop.

A = rand(3,3,100000);

B = rand(3,3,100000);

C = zeros(3,3,100000); % preallocate the results

tic

for i=1:100000

C(:,:,i) = A(:,:,i) * B(:,:,i);

end

loopTime = toc

and this is how I'd do it using pagemtimes.

tic

C1 = pagemtimes(A,B);

pagedTime = toc

The result is a lot faster.

fprintf("The pagemtimes version is %f times faster than the loop\n",loopTime/pagedTime)

That's a lot of speedup! Indeed, this demo has been carefully selected to show about the most amount of speed-up you can expect on your machine for pagemtimes for reasons we'll get into later.

The idea of page-wise matrix functions turned out to be both a popular and useful idea! Here at MathWorks, we found uses for them all over our products and the user community made requests for several more variants. Page-wise matrix-multiply was a great start but you also wanted page-wise SVD, backslash, eig and more. MathWork's development team delivered!

So, let's take a closer look at the state of play for paged matrix functions as of R2024a.

The full list of page-wise matrix functions in MATLAB

Here's a list of all of the page-wise matrix functions we have in MATLAB, along with when they were introduced.

- pagetranspose (R2020b) Page-wise transpose

- pagectranspose (R2020b) Page-wise complex conjugate transpose

- pageeig (R2023a) Page-wise eigenvalues and eigenvectors

- pagelsqminnorm (R2024a) Page-wise minimum-norm least-squares solution to linear equation

- pagemtimes (R2020b) Page-wise matrix multiplication

- pagemldivide (R2022a) Page-wise left matrix divide

- pagemrdivide (R2022a) Page-wise right matrix divide

- pagenorm (R2022b) Page-wise matrix or vector norm

- pagepinv (R2024a) Page-wise Moore-Penrose pseudoinverse

- pagesvd (R2021b) Page-wise singular value decomposition

Why have separate functions?

From the very beginning, a natural question to ask was "Instead of having a bunch of different page functions, why not have just one called, say, pagefun that takes the required function as an argument". Indeed, MATLAB has such a function in the parallel computing toolbox that works on distributed and GPU arrays.

We did consider that but one reason we decided against it was option handling. Some options might only apply to certain functions. The options documentation would be long with most of it being not relevant to what someone wanted to do. Another reason is performance; the current design allows us to go directly to the underlying function.

Where does the speed-up come from?

Much of the original blog post on page-wise matrix functions focused on how much faster they could be than using loops. My demo above showed that in certain cases, this speed-up could be significant. So where does this speed-up come from?

Argument checking: The first optimization is rather mundane: Argument checking. In the page-wise case, you only need to do it once whereas if you loop over 100,000 pages, you do argument checking 100,000 times. For large matrices, this overhead is insignificant but when you are dealing with thousands of very small matrices, the time to check arguments is on-par with the computation time.

More parallelism: The second reason for the speed-up is parallelism. We can choose to run the internal for-loop over all of the pages in parallel if we wish. This is where things get a little more complicated. Most of MATLAB's linear algebra functions already run in parallel. Multiply two large matrices together while monitoring your CPU workload and you'll see this in action. This leaves us with a decision to make, we need to decide to either let the underlying BLAS/LAPACK routine run multi-threaded and not thread the loop over the pages, or to run BLAS/LAPACK single-threaded and thread the outer loop.

With very small matrices, this decision is easy. There is probably no benefit at all from parallelism when multiplying two very small matrices together. When you have 100,000 pairs of them, however, the route to parallelism is clear.

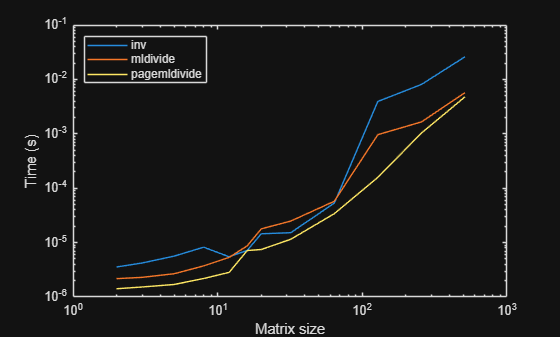

Small matrix math tricks: Since the main speedup of using paged functions happens for many small matrices, this made us focus on the performance for such small matrices (e.g. 3x3) using various mathematical tricks. These speed-ups also found their way into the standard MATLAB functions such as inv and mldivide. Head over to the documentation for mldivide, for example, and you'll see this

As a result of all of this, the most impressive results come from huge numbers of small matrices and hence the structure of my initial demo in this blog post.

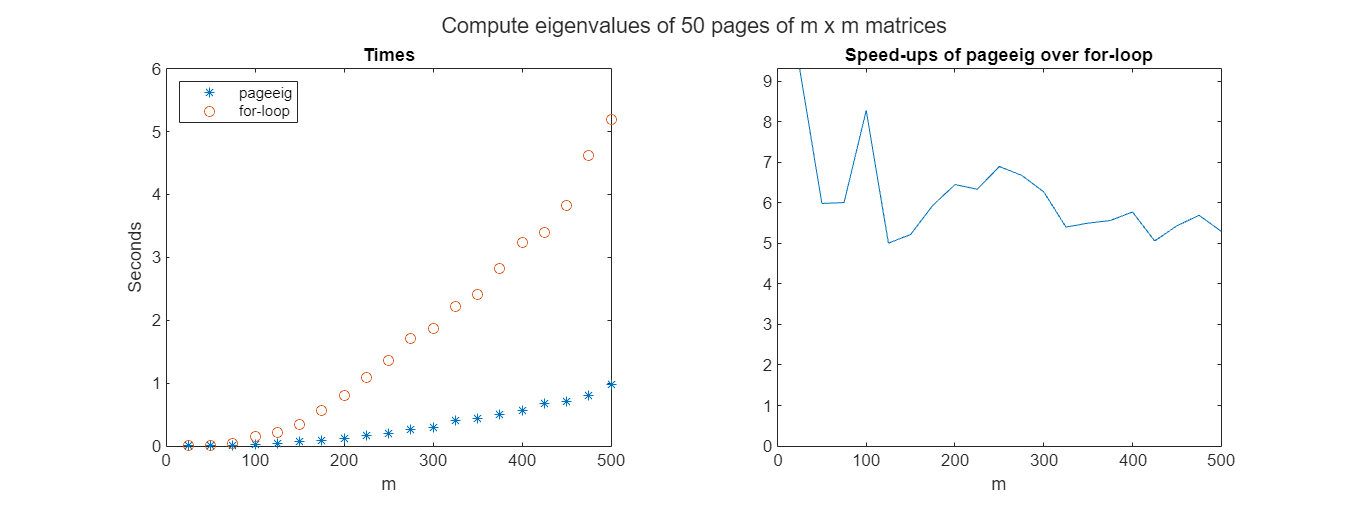

With all of that said, however, there is still useful speed-up to be found in with smaller numbers of larger matrix sizes as this demo of paigeeig shows.

mset = 25:25:500;

msetSize = numel(mset);

numPages = 50;

tLoop = zeros(msetSize,1);

tPage = zeros(msetSize,1);

for i=1:msetSize

A = rand(mset(i),mset(i),numPages);

CPage = pageeig(A);

f = @() pageeig(A);

tPage(i) = timeit(f);

CLoop = eigLoop(A);

f = @() eigLoop(A);

tLoop(i) = timeit(f);

end

function C = eigLoop(A)

[rows,~,pages] = size(A);

C = zeros(rows,1,pages);

for i=1:pages

C(:,1,i) = eig(A(:,:,i));

end

end

figure('Position',[100,100,1200,450])

t = tiledlayout(1,2);

nexttile

plot(mset,tPage,'*');

hold on

plot(mset,tLoop,'o');

legend({'pageeig','for-loop'},'Location','northwest');

title('Times')

xlabel('m')

ylabel('Seconds')

hold off

nexttile

plot(mset,tLoop./tPage)

title('Speed-ups of pageeig over for-loop')

xlabel('m')

ylim([0,inf])

titleStr = sprintf('Compute eigenvalues of %d pages of m x m matrices',numPages);

title(t,titleStr)

Where are page-wise matrix functions used?

One aspect of my job is to work with MATLAB users around the world on making their code go faster and I've used paged functions several times since they were introduced. I usually can't discuss user's code, however, so I asked around internally to see where MathWorks uses them. The answer was "Almost everywhere!"

For example, it turns out that over 100 shipping functions across the various toolboxes make use of pagemtimes. Its used in 5G Toolbox, Aerospace Toolbox, Antenna Toolbox, Audio Toolbox, Communications Toolbox, System Identification Toolbox, Image Processing Toolbox, Lidar Toolbox, Medical Imaging Toolbox, Navigation Toolbox, Deep Learning Toolbox, Partial Differential Equation Toolbox, Phased Array System Toolbox, Radar Toolbox, RF Toolbox, Text Analytics Toolbox, Computer Vision Toolbox, WLAN Toolbox and the MATLAB Support Package for Quantum Computing. That's a big list and shows that the concept of page-wise matrix functions is useful in many diverse areas of technical computing.

One of our developers reached out to tell me that modern communications system use a technique called OFDM where data is sent over the air in parallel on sets of adjacent frequencies. At each frequency, there is an equalization process where the receiver tries to undo distortion from the channel and to do this we solve a least squares problem at each frequency (solve Ax = b or Ax + n = b where n represents additive Gaussian noise).

These least squares problems can be solved in parallel using routines like pagesvd across all relevant frequencies. This led to substantial speedups in toolbox functions like ofdmEqualize (Communications Toolbox) and nrEqualizeMMSE (5G Toolbox) and wlanHEEqualize (WLAN Toolbox). This speedup in turn led to significant speedups in popular shipping examples such as NR PDSCH Throughput - MATLAB & Simulink (mathworks.com).

Page-wise matrix functions have become extremely useful additions to the toolkit of many MathWorkers and I encourage you to look through your own code to see where they might be applied. Also, if you have found them useful in your own code, please do let me know in the comments

How are we choosing which page-wise functions to add?

As you know, it all started with pagemtimes. From there, internal and external users of MATLAB made requests for the other functions and we've been steadily adding them in. The primary driver for which functions we've actually implemented is a discussion of applications. A page-wise function that could be used in several, well-defined application areas gets much higher priority than "foo is a matrix function so why not implement pagefoo?"

So, if this article has got your interest and you have an application that could benefit from an as-yet-unimplemented page-wise function, do let us know the details.

Comments

To leave a comment, please click here to sign in to your MathWorks Account or create a new one.