Preparing for the RoboCup Major Leagues

The Racing Lounge is back from Christmas break. I wish you all a successful and healthy 2018!

In today’s post, Sebastian Castro discusses his experiences with a robotics workshop he helped deliver at the RoboCup Asia-Pacific (RCAP) event in Bangkok, Thailand.

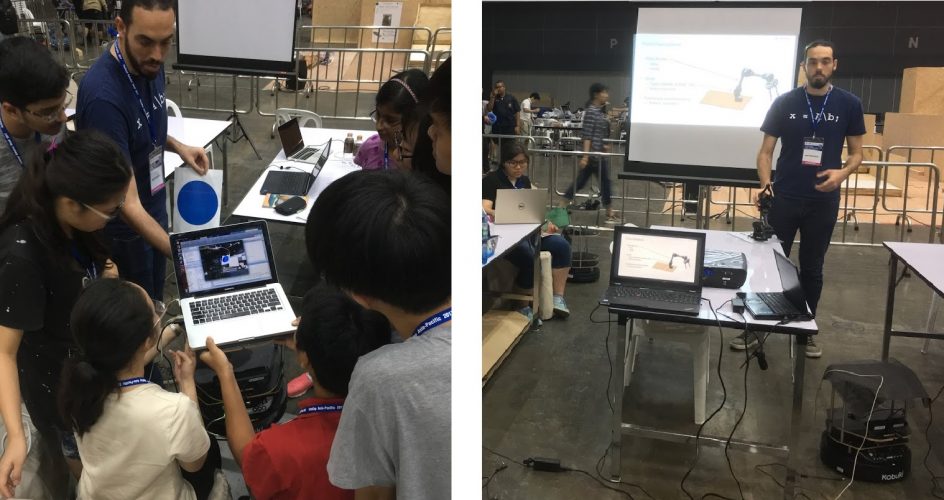

As a reminder, MathWorks is a global sponsor of RoboCup, which comes with many benefits for student teams. At RCAP, we had a booth with information, giveaways, software demonstrations, and more. We were even joined by our distributors in Southeast Asia, TechSource. Next time you’re at a RoboCup event, please stop by and say “hi”.

MathWorks and TechSource at our booth


Background

One of the biggest challenges in RoboCup is the jump from the Junior (pre-university) leagues to the Major (university-level) leagues. Typically, there is a significant learning curve that stops even the most successful Junior teams from continuing in RoboCup once they move from high school to university.

Many RoboCup organizers are aware of this issue, which has led them to create intermediate challenges targeted at overcoming this learning curve. Unlike the Major league competitions, which are primarily research platforms for students pursuing advanced degrees, these challenges are more suited for educating undergraduate students. Some examples are the RoboCupRescue Rapidly Manufactured Robot and the RoboCup Asia-Pacific CoSpace challenges.

What made RCAP extra special for us was being invited by Dr. Jeffrey Tan to help with another challenge of this type. Dr. Tan has been an organizer with the RoboCup@Home league for over 4 years, as well as an advisor for the KameRider team. We decided to deliver a joint workshop for his RoboCup@Home Education league. This was a great opportunity for us because it allowed us to introduce MATLAB into various aspects of robot design and programming, and served as a good benchmark of our tools in a real, world-class competition.

There were 4 teams participating:

- Tamagawa Science Club — Tamagawa Academy, Japan — high school

- Nalanda — Genesis Global School, India — high school

- KameRider EDU — Nankai University, China/Universiti Teknologi Malaysia — undergraduate

- Skuba JR — Kasetsart University, Thailand — undergraduate

RoboCup@Home Education Crew — Courtesy of Dr. Kanjanapan Sukvichai (Skuba JR advisor)

Days 1-2: The Workshop

For the first 2 days, we came up with an ambitious curriculum of topics that got the students up and running with a TurtleBot2 from scratch — including an RGB + depth sensor and a robot arm.

All we asked participants to bring was a laptop running Ubuntu 14.04. We then installed the Robot Operating System (ROS) and MATLAB, using a complimentary license from our website.

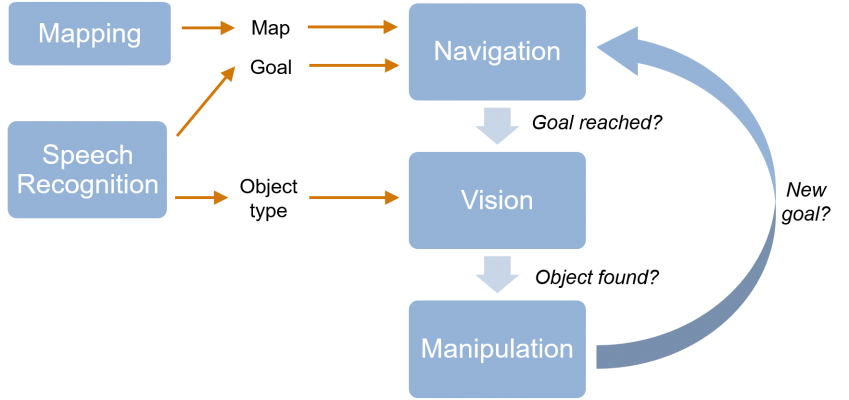

The goal was to develop the pieces of a typical RoboCup@Home algorithm. If the robot has a map of its environment and receives a spoken command — for example, “bring me the water bottle from the kitchen” — the diagram below is an example of the components needed to complete that task.

A. Speech Recognition and Synthesis

Speech recognition was done with CMU PocketSphinx, while speech input and synthesis were done with the audio_common stack. In the workshop, we showed how to detect speech, look for key words in a dictionary, and take actions based on those key words. This was all done outside of MATLAB.
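The key word step can be tried without a microphone or ROS. Below is a minimal Python sketch, not the workshop code: it assumes the recognizer has already produced a text string, and the dictionary contents and action names (`KEYWORD_ACTIONS`, `actions_from_text`) are hypothetical.

```python
# Hypothetical key word dictionary: spoken word -> robot action name.
KEYWORD_ACTIONS = {
    "forward": "move_forward",
    "back": "move_backward",
    "left": "turn_left",
    "right": "turn_right",
    "stop": "stop",
}


def actions_from_text(text):
    """Return the action for every known key word, in the order spoken."""
    actions = []
    for word in text.lower().split():
        word = word.strip(".,!?\"'")  # drop surrounding punctuation
        if word in KEYWORD_ACTIONS:
            actions.append(KEYWORD_ACTIONS[word])
    return actions


# Example: text as it might arrive from a speech recognizer.
print(actions_from_text("Please turn left, then stop!"))
```

In a real system, the returned action names would be mapped to velocity commands or to higher-level behaviors, and words outside the dictionary are simply ignored.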

Several students asked about MATLAB’s capabilities for speech recognition. Right now, there are two ways to get this working:

- Use the ROS tools above to publish the detected text on a ROS topic and subscribe to it in MATLAB.

- Call a user-defined Python speech module from MATLAB.

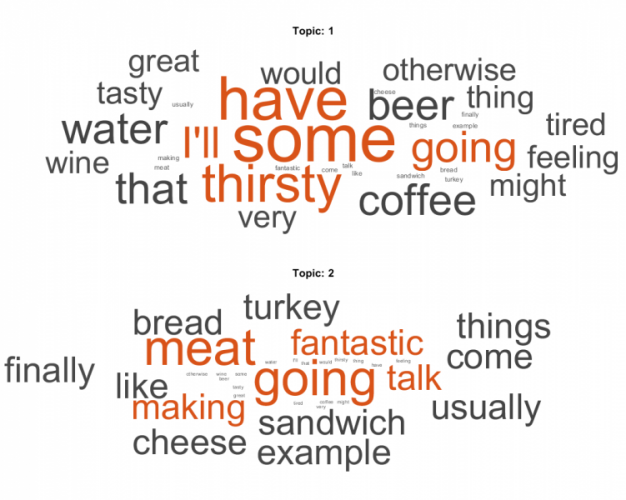

Once the text is in MATLAB, you can take advantage of its capabilities for characters and strings, or even the new Text Analytics Toolbox.

I have personally gotten the second approach (calling Python from MATLAB) working with the Python SpeechRecognition package, in particular with Google Cloud Speech and CMU PocketSphinx. The image below shows a simple example I ran, which used Text Analytics Toolbox to cluster my speech into two categories: food and drink. Words like “going”, “have”, and “some” give us no extra information. Luckily, the toolbox has preprocessing capabilities to address such issues.

B. Mapping and Navigation

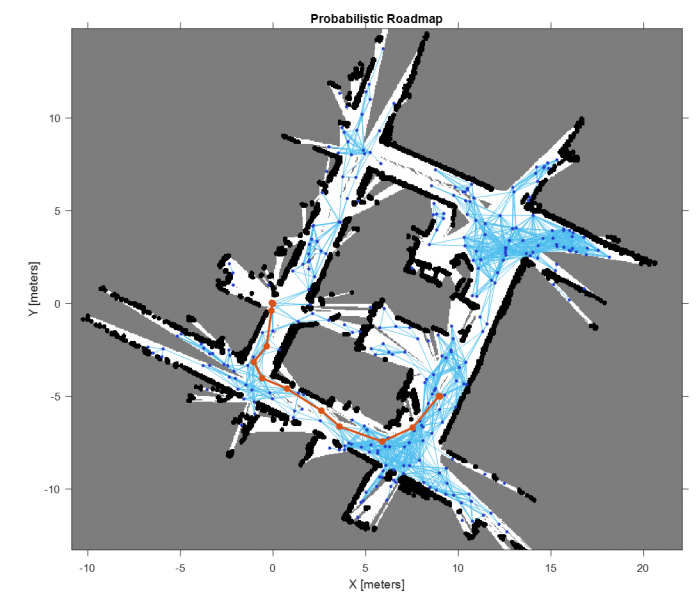

To perform mapping and navigation tasks, we followed this workflow:

- Generate a map of the environment using the existing TurtleBot gmapping example and driving the robot around.

- In the example above, the latest map is published on a ROS topic (/map). So, we can read the map into MATLAB as an occupancy grid and save it to a file.

- Once the map is in MATLAB, we can sample a probabilistic roadmap (PRM) and use it to find a path between two points.

- Then, we can program a robot to follow this path with the Pure Pursuit algorithm.

Below you can see an example map and path I generated near my office. Assuming the map is static, the last two steps (path planning and path following) can be repeated for different start and goal points as needed.

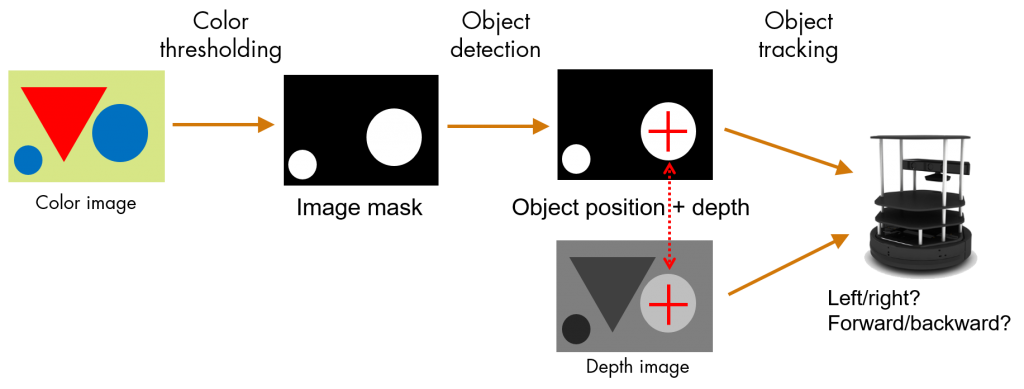
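To give a feel for the path-following step, here is a simplified Python sketch of the pure pursuit idea. It is not the Robotics System Toolbox implementation: it assumes a differential-drive robot, picks an existing waypoint as the look-ahead target instead of interpolating along path segments, and the function names and default values are hypothetical.

```python
import math


def lookahead_point(path, position, lookahead):
    """Return the first waypoint at least `lookahead` away from the robot,
    searching from the waypoint closest to it; fall back to the final goal."""
    dists = [math.hypot(px - position[0], py - position[1]) for px, py in path]
    nearest = dists.index(min(dists))
    for i in range(nearest, len(path)):
        if dists[i] >= lookahead:
            return path[i]
    return path[-1]


def pure_pursuit_step(pose, path, lookahead=0.5, linear_speed=0.2):
    """Compute (linear, angular) velocity commands.

    pose is (x, y, heading in radians); path is a list of (x, y) waypoints.
    """
    x, y, theta = pose
    gx, gy = lookahead_point(path, (x, y), lookahead)
    dx, dy = gx - x, gy - y
    dist_sq = dx * dx + dy * dy
    if dist_sq < 1e-9:
        return 0.0, 0.0  # already at the target
    # Lateral offset of the target in the robot frame (positive = left).
    local_y = -math.sin(theta) * dx + math.cos(theta) * dy
    # Curvature of the arc that passes through the target point.
    curvature = 2.0 * local_y / dist_sq
    return linear_speed, linear_speed * curvature
```

Calling `pure_pursuit_step` once per control cycle with the latest pose estimate yields a constant forward speed and a turn rate that steers the robot onto an arc through the look-ahead point. A larger look-ahead distance gives smoother but less precise tracking.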

C. Computer Vision and Control

For this task, we operated exclusively in the MATLAB world. Many students commented that prototyping image processing algorithms was easier in MATLAB than with OpenCV, mostly because the latter required more challenging languages (Python or C++).

Our vision and control workflow was:

- Using the Color Thresholder app to define thresholds for tracking an object of interest

- Performing blob analysis to find the object position

- Estimating the distance to the object using the depth image at the detected object position

- Moving the robot depending on the object position and depth. We started with simple on-off controllers with deadband on both linear and angular velocity.
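The four steps above can be sketched in a few lines of plain Python. This is an illustration only, not the workshop's MATLAB reference code: images are nested lists of RGB tuples, the "blob analysis" is reduced to the centroid of all thresholded pixels (no connected-component labeling), and every name, threshold, and gain below is an assumed value.

```python
def threshold_mask(image, lower, upper):
    """Binary mask: True where every RGB channel lies within [lower, upper]."""
    return [[all(lo <= c <= hi for c, lo, hi in zip(pixel, lower, upper))
             for pixel in row] for row in image]


def centroid(mask):
    """Centroid (col, row) of all True pixels, or None if nothing is detected.
    Simplification: every detected pixel is treated as part of one blob."""
    pts = [(c, r) for r, row in enumerate(mask) for c, on in enumerate(row) if on]
    if not pts:
        return None
    n = len(pts)
    return sum(p[0] for p in pts) / n, sum(p[1] for p in pts) / n


def on_off(error, deadband, magnitude):
    """On-off control with deadband: zero inside the band, otherwise a
    fixed magnitude carrying the sign of the error."""
    if abs(error) <= deadband:
        return 0.0
    return magnitude if error > 0 else -magnitude


def track_step(image, depth, lower, upper, target_depth=1.0):
    """Return (linear, angular) velocity commands for one camera frame."""
    found = centroid(threshold_mask(image, lower, upper))
    if found is None:
        return 0.0, 0.0  # no object in view: stay still
    col, row = found
    center_col = (len(image[0]) - 1) / 2
    # Object left of the image center -> positive (counterclockwise) turn.
    angular = on_off(center_col - col, deadband=1.0, magnitude=0.5)
    # Depth is read at the detected object position.
    distance = depth[round(row)][round(col)]
    linear = on_off(distance - target_depth, deadband=0.1, magnitude=0.2)
    return linear, angular
```

The deadband keeps the robot from chattering back and forth when the object is nearly centered or nearly at the target distance. Replacing `on_off` with a proportional term is a natural next step toward smoother tracking.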

At this point, students had reference MATLAB code for a closed-loop vision-based controller with ROS. Over the next few days, they were encouraged to modify this code to make their robots track objects more robustly. Let’s remember that most of the students had never been exposed to MATLAB before!

D. Manipulation

The robots were fitted with TurtleBot arms. There were two sides to getting these arms working: hardware and software.

On the hardware side, we pointed students to the ROBOTIS Dynamixel servo ROS tutorials. The goal here was to make sure there was a ROS interface to joint position controllers for each of the motors in the robot arm. This would allow us to control the arms with controllers in MATLAB.

On the software side, the steps were:

- Import the robot arm description (URDF) file into MATLAB as a rigid body tree representation

- Become familiar with the Inverse Kinematics (IK) functionality in the Robotics System Toolbox

- Follow a path by using IK at several points — first in simulation and then on the real robot arm

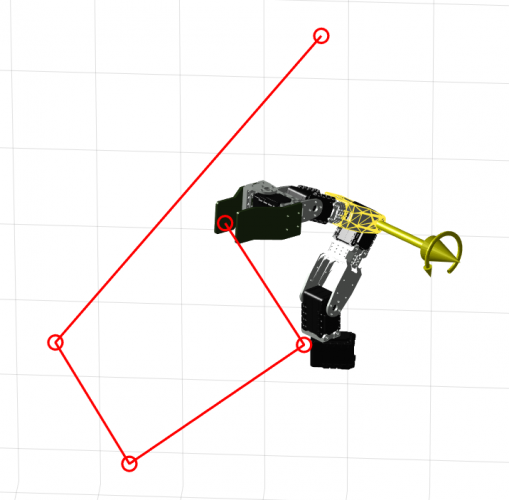

The figure below shows a path that linearly interpolates between points. However, smoother trajectories are also possible with a little more math, or with tools like the Curve Fitting Toolbox.
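As a rough illustration of following a path with IK, the Python sketch below interpolates linearly between two Cartesian points and solves IK at each waypoint. It is a stand-in, not the toolbox workflow: it assumes a planar two-link arm with a closed-form solution, whereas the TurtleBot arm has more joints and would be solved numerically from its URDF model. Link lengths and function names are hypothetical.

```python
import math


def interpolate(start, goal, n_points):
    """Linearly interpolate n_points (>= 2) Cartesian waypoints from start to goal."""
    return [tuple(s + (g - s) * i / (n_points - 1) for s, g in zip(start, goal))
            for i in range(n_points)]


def ik_two_link(x, y, l1=0.1, l2=0.1):
    """Closed-form IK for a planar two-link arm (one of the two elbow solutions).
    Returns joint angles (q1, q2) in radians, or None if (x, y) is out of reach."""
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if abs(c2) > 1.0:
        return None
    q2 = math.acos(c2)
    q1 = math.atan2(y, x) - math.atan2(l2 * math.sin(q2), l1 + l2 * math.cos(q2))
    return q1, q2


def fk_two_link(q1, q2, l1=0.1, l2=0.1):
    """Forward kinematics, used here to check the IK solutions."""
    return (l1 * math.cos(q1) + l2 * math.cos(q1 + q2),
            l1 * math.sin(q1) + l2 * math.sin(q1 + q2))


def joint_path(start, goal, n_points=5):
    """IK solution at each interpolated waypoint between start and goal."""
    return [ik_two_link(x, y) for x, y in interpolate(start, goal, n_points)]
```

Checking each solution with `fk_two_link` in simulation before sending joint angles to the motors mirrors the "first in simulation, then on the real robot arm" order described above.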

Days 3-5: The Competition

The workshop days were meant to raise awareness of software tools needed to succeed in the competition. With a stack of ROS tutorials, example MATLAB files, and other useful links, the students were now tasked with taking our reference applications and using them to compete in the same challenges as the major leagues.

These challenges were as follows. Credit to Dr. Tan’s KameRider team for the sample YouTube videos.

- Speech and Person Recognition: Demonstrating basic speech and vision functionality. An example would be asking the robot “how many people are in front of you?” and getting a correct answer. [Person Video] [Speech Video]

- Help-me-carry: Following a person and assisting them by carrying an object. [Help-me-carry Video]

- Restaurant: Identifying a person ready to place an order, correctly listening to the order, and retrieving the object that has been ordered. [Restaurant Video] [Manipulation Video]

- Finals: Teams can choose freely what to demonstrate, and are evaluated on criteria such as novelty, scientific contribution, presentation, and performance.

During this time, students were spending hours digesting workshop material, testing their code, and slowly building up algorithms to clear the challenges.

Tamagawa Science Club robot during the competition — Courtesy of Dr. Jeffrey Tan

Some highlights:

- Teams experienced firsthand how to take pieces of code that performed different tasks and integrate them into one working system.

- All teams had complete computer vision and control algorithms with MATLAB, but not all teams had functioning speech detection/synthesis and manipulator control. They found the MATLAB code easier to set up and modify than some of the other ROS-based packages, which required knowledge of Python, C++, and/or the Catkin build system to use and modify.

- The two high school teams successfully generated a map of the environment and implemented a navigation algorithm.

- The Nalanda team was able to add obstacle avoidance to their robot using the Vector Field Histogram functionality in Robotics System Toolbox.

- A handful of teams were able to tune some of the pretrained cascade object detector and people detector examples for their person recognition and final challenges.

KameRider EDU during the Person Recognition challenge — Courtesy of Dr. Jeffrey Tan

Conclusion

This event was a lot of fun, and as a bonus I enjoyed escaping the cold Boston winter for a few weeks. It was satisfying to see how much the students were able to achieve, and how our conversations evolved, in such a short time frame.

- At the beginning, it was mostly questions about installation, error messages, basic MATLAB and ROS questions, and “how am I going to get all of this done?”.

- Near the end, students had a pretty good understanding of basic ROS constructs (topics, messages, launch files, Catkin, etc.), general programming tools (conditional statements, loops, functions, breakpoints, etc.) and — most importantly — were already asking “what’s next?”

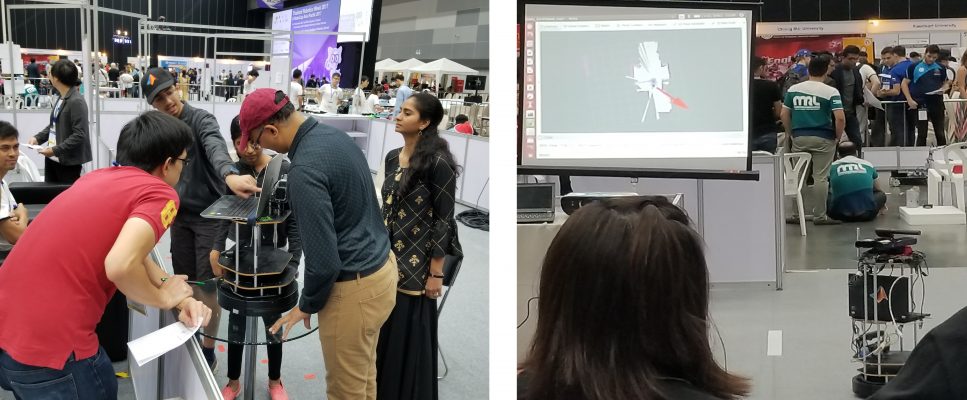

Workshop in action — Courtesy of Team Nalanda

The general consensus was that both MATLAB and ROS were needed to make this workshop happen. MATLAB made implementing and testing algorithms accessible, whereas installing existing ROS packages provided some of the necessary low-level sensing, actuation, and mapping capabilities.

- Many ROS packages were easy to set up and could deliver powerful results right away. However, understanding the underlying code and build system to modify or extend these packages was nontrivial for beginners. This is likely because ROS is designed for users comfortable with a rigorous software development process.

- On the other hand, MATLAB needed a single installation at the beginning and required no recompilation, and students found the sample code (both our workshop files and documentation examples) easy to follow, debug, and modify.

Heramb Modugula (Team Nalanda) remarked that “plenty of time was available to tinker with the robot and example code, and eventually, coding on our own”. His coach and father, Srikant Modugula, highlighted the integration of software components as the most critical task. “While MATLAB provides a powerful framework to do robotic vision, motion, and arm related tasks, we look forward to an easier way to connect it with ROS enabled TurtleBot and seamlessly compile/run multiple programs.”

Computer vision and manipulation at the workshop — Courtesy of Dr. Jeffrey Tan

To summarize, MATLAB and its associated toolboxes are a complete design tool. This includes a programming language, an integrated development environment (IDE), graphical programming environments like Simulink and Stateflow, apps to help with algorithm design and tuning, and standalone code generation tools.

Our recommended approach is to use MATLAB and Simulink for prototyping algorithms that may be a subset of the entire system, and then deploying these algorithms as standalone ROS nodes using automatic code generation. This way, the robot does not rely on the MATLAB environment (and its associated overhead) at competition time. For more information, please refer to our Getting Started with MATLAB, Simulink, and ROS blog post or reach out to us.

Find the MathWorks logos!

Our goal is that challenges like these will lower the entry barrier for new teams from around the world to join the RoboCup major leagues and perform competitively in their first year. This will create opportunities for newcomers to become comfortable with robot programming and eventually transition to being “true” major league teams — that is, bringing state-of-the-art algorithms to RoboCup and pushing the boundaries of robotics research worldwide.

For this reason, we will work towards offering this workshop at future events, as well as open-sourcing our materials and posting them online. If you are interested in using this material to learn or teach, or have any thoughts to share, please leave us a comment. We hope to see more of you sign up for future RoboCup@Home Education challenges. Until next time!
