YOLOv2 Object Detection: Deploy Trained Neural Networks to NVIDIA Embedded GPUs

Our previous blog post walked us through using MATLAB to label data, design deep neural networks, and import third-party pre-trained networks. We trained a YOLOv2 network to identify different competition elements from RoboSub, an autonomous underwater vehicle (AUV) competition. See our trained network identifying buoys and a navigation gate in a test dataset.

But what next? When building an autonomous, untethered system like an AUV for RoboSub, the challenge is transferring these algorithms from a desktop development environment onto an embedded computer, which draws little power but also has less memory and compute capability. Remember: whether it’s life or autonomous systems, everything is a compromise. Let’s dive into using MATLAB to deploy this network to an NVIDIA Jetson. NVIDIA Jetson is a power-efficient System-on-Module (SOM) with CPU, GPU, PMIC, DRAM, and flash storage for edge AI applications, and it comes in a variety of configurations. While this workflow targets the NVIDIA Jetson TX2, the same steps apply to other NVIDIA embedded products as well.

I. Set Up the Jetson and the Host Computer

First, let’s look at some setup steps needed on the MATLAB Host and Jetson.

MATLAB Host

GPU Coder helps convert MATLAB code into CUDA code for the NVIDIA Jetson. Here is a list of the required and recommended products for this example.

MathWorks Products

- MATLAB® (required)

- MATLAB Coder™ (required)

- GPU Coder™ (required)

- Parallel Computing Toolbox™ (required)

- Deep Learning Toolbox™ (required for deep learning)

- GPU Coder Interface for Deep Learning Libraries (required for deep learning)

- GPU Coder Support Package for NVIDIA GPUs (required to deploy code to NVIDIA GPUs)

- Image Processing Toolbox™ (recommended)

- Computer Vision Toolbox™ (recommended)

- Embedded Coder® (recommended)

- Simulink® (recommended)

Third-Party Libraries

For reference, we are using MATLAB R2020a; refer to the documentation for the library versions required by other releases.

- C/C++ Compiler: Microsoft Visual Studio 2013 to 2019 can be used on Windows. On Linux, use the GCC C/C++ compiler 6.3.x. To check that the compiler has been set up correctly, use the following command:

mex -setup C++

- CUDA Toolkit and driver: GPU Coder has been tested with CUDA Toolkit v10.1. The default installation comes with the nvcc compiler and the cuFFT, cuBLAS, cuSOLVER, and Thrust libraries.

- CUDA Deep Neural Network library (cuDNN): The NVIDIA CUDA® Deep Neural Network library (cuDNN) is a GPU-accelerated library of primitives for deep neural networks. GPU Coder has been tested with v7.5.x. Download the files and run the installer.

- NVIDIA TensorRT: A high-performance deep learning inference optimizer and runtime library. GPU Coder has been tested with v5.1.x. CUDA code generation with TensorRT from a Windows machine is supported in R2019b and later.

- OpenCV Libraries: The Open Source Computer Vision Library (OpenCV) v3.1.0 is required. The OpenCV library that ships with Computer Vision Toolbox does not include all the required libraries, and the OpenCV installer does not install them. Therefore, you must download the OpenCV source, build the libraries, and add them to the path.

- CUDA toolkit for ARM® and Linaro GCC 4.9: Use the gcc-linaro-4.9-2016.02-x86_64_aarch64-linux-gnu release tarball.
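Besides running mex -setup C++ interactively, you can query which C++ compiler MATLAB has selected for MEX builds from a script. The sketch below uses mex.getCompilerConfigurations with the 'Selected' option; it only reports the selection and does not verify that the compiler version is one GPU Coder supports.

```matlab
% Report the C++ compiler MATLAB currently uses for MEX builds.
cc = mex.getCompilerConfigurations('C++', 'Selected');
if isempty(cc)
    disp('No C++ compiler selected; run "mex -setup C++" first.');
else
    % Name and Version are char properties of the configuration object.
    fprintf('Selected C++ compiler: %s (version %s)\n', cc(1).Name, cc(1).Version);
end
```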

Environment Variables

Once all the libraries are installed, set environment variables that point MATLAB to them. These variables are cleared when the MATLAB session ends, so add this code to a startup script (for example, startup.m) to ensure they are set whenever MATLAB launches.

setenv('CUDA_PATH','C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.0');
setenv('NVIDIA_CUDNN','C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\cuDNN');
setenv('NVIDIA_TENSORRT','C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\TensorRT');
setenv('OPENCV_DIR','C:\Program Files\opencv\build');
% Prepend the library bin folders to PATH (note the trailing ';' separator)
setenv('PATH', ...
    ['C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.0\bin;C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\cuDNN\bin;C:\Program Files\opencv\build\x64\vc15\bin;' ...
    getenv('PATH')]);
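Because these variables vanish when MATLAB closes, a quick check after startup can catch a mistyped path early. The following is a minimal sketch, not a MathWorks utility; it assumes the four variable names CUDA_PATH, NVIDIA_CUDNN, NVIDIA_TENSORRT, and OPENCV_DIR, and it only confirms that each one is set and names an existing folder. It does not validate library versions.

```matlab
% Hypothetical sanity check: is each variable set, and does it name a folder?
varNames = {'CUDA_PATH', 'NVIDIA_CUDNN', 'NVIDIA_TENSORRT', 'OPENCV_DIR'};
ok = false(size(varNames));
for k = 1:numel(varNames)
    value = getenv(varNames{k});              % '' when the variable is unset
    ok(k) = ~isempty(value) && isfolder(value);
    if ~ok(k)
        fprintf('%-16s missing or invalid: "%s"\n', varNames{k}, value);
    end
end
if all(ok)
    disp('All GPU Coder environment variables point to existing folders.');
end
```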

NVIDIA Jetson

After installing JetPack on the Jetson, install the Simple DirectMedia Layer (SDL v1.2) library, which this example requires, by running the following commands in a terminal on the Jetson.

$ sudo apt-get install libsdl1.2debian

$ sudo apt-get install libsdl1.2-dev

Next, set environment variables on the Jetson by adding the following commands to the $HOME/.bashrc file (for bash shells). For example, open the file with gedit $HOME/.bashrc:

export PATH=/usr/local/cuda/bin:$PATH

export LD_LIBRARY_PATH=/usr/local/cuda/lib64:$LD_LIBRARY_PATH
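If you prefer to confirm the board setup from the host, the jetson object's system method runs a Linux command on the board and returns its output. The sketch below is an assumption-laden example: the host name, user name, and password are hypothetical placeholders, and it checks only that the SDL development package is installed and that the CUDA folders exist. It does not prove that .bashrc is sourced, since non-interactive sessions may skip it.

```matlab
% Hypothetical connection details; replace with your board's values.
hwobj = jetson('jetson-hostname', 'ubuntu', 'ubuntu');
% Is the SDL 1.2 development package installed? (the command fails if it is not)
try
    disp(system(hwobj, 'dpkg -s libsdl1.2-dev | grep Status'));
catch err
    disp(['SDL check failed: ' err.message]);
end
% Do the CUDA folders referenced in .bashrc exist on the board?
disp(system(hwobj, 'ls -d /usr/local/cuda/bin /usr/local/cuda/lib64'));
```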

II. Establish a Connection with the Jetson and Verify the Setup

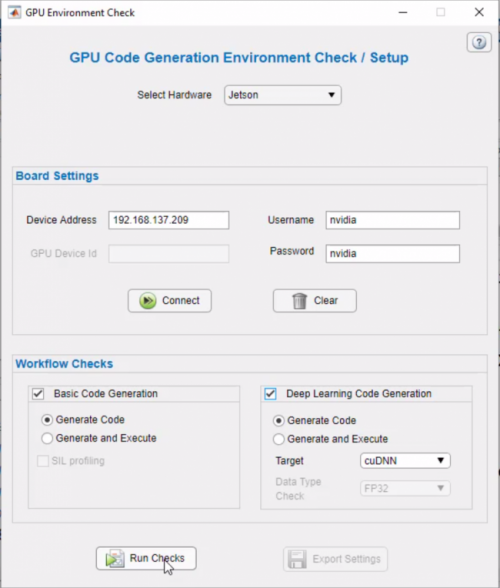

Once all the required libraries are installed, use coder.checkGpuInstallApp to interactively test whether the system variables are set up correctly and whether the MATLAB host is ready to generate and deploy code for the Jetson.

coder.checkGpuInstallApp;

Enter the appropriate device parameters and choose the checks to run. Based on the combination of checks selected, MATLAB will:

- Verify if the environment variables have been set up correctly

- Generate code for an example function with a deep learning network

- Compile and deploy it to the Jetson

- Produce an HTML report with the results of the check

Check out this documentation page to learn more.
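The same checks can also be scripted, which is handy when re-verifying a setup after a driver or JetPack update. This is a sketch based on our understanding of the coder.gpuEnvConfig and coder.checkGpuInstall API in recent releases; the board credentials are hypothetical placeholders, and you should confirm the available properties in the documentation for your release.

```matlab
% Hypothetical connection details; replace with your board's values.
hwobj = jetson('jetson-hostname', 'ubuntu', 'ubuntu');
envCfg = coder.gpuEnvConfig('jetson');   % check the Jetson rather than a host GPU
envCfg.BasicCodegen = 1;                 % generate code for a basic CUDA example
envCfg.DeepCodegen = 1;                  % generate code for an example deep learning network
envCfg.DeepLibTarget = 'cudnn';          % library to check: 'cudnn' or 'tensorrt'
envCfg.HardwareObject = hwobj;           % board to run the checks against
envCfg.Quiet = 1;                        % suppress detailed messages
results = coder.checkGpuInstall(envCfg); % struct summarizing each check
```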

III. Prepare MATLAB Code for Code Generation

MATLAB code and Simulink models can be converted into low-level code such as C/C++, CUDA, and HDL. This gives you the best of both worlds: develop code in a high-level language, then implement it as efficient low-level code optimized for embedded devices. Never used code generation before? Check out this tutorial series on the basics of code generation and hardware support.

Our AUV relies on a camera to see its surroundings, so our object detection system consists of a camera feeding a video stream into the Jetson. The network processes the image stream and returns the pixel location, confidence score, and classification label of each object of interest. When converting MATLAB code to C/C++ or, in this case, CUDA, wrap the functionality you want to generate code for in a function, as shown below. GPU Coder converts this MATLAB function into a CUDA function and generates any other files needed to execute it in a CUDA environment. Add the %#codegen directive so that the Code Analyzer checks the function for code generation compatibility:

function roboSubPredict() %#codegen
% Create a Jetson object to access the board and its peripherals
hwobj = jetson;
w = webcam(hwobj,1);
d = imageDisplay(hwobj);
% Load the trained network from a MAT-file only once
persistent mynet
if isempty(mynet)
    mynet = coder.loadDeepLearningNetwork('detectorYoloV2.mat');
end
% Process loop
for i = 1:1e5
    img = snapshot(w);
    [bboxes,scores,labels] = mynet.detect(img,'Threshold',0.6);
    [~,idx] = max(scores);
    % Annotate the highest-scoring detection (bboxes is M-by-4, so take the whole row)
    if ~isempty(bboxes)
        outImg = insertObjectAnnotation(img,'Rectangle', bboxes(idx,:), cellstr(labels(idx)));
    else
        outImg = img;
    end
    image(d,outImg);
end
end

This function loads a trained detector object, mynet, from a MAT-file with the coder.loadDeepLearningNetwork function. Declaring mynet as persistent keeps it in memory, so the object does not have to be reconstructed on every function call. The detect method of the object returns the bounding boxes, confidence scores, and classification labels of the detected objects. At student competitions, teams often train new deep learning detectors during the event to cope with natural lighting and weather conditions. Because the network is loaded at compile time, you can swap out the MAT-file and rebuild the code within minutes to use the most appropriate network for the given conditions.
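The swap-and-rebuild step might look like the following sketch. It assumes that detector is a newly trained yolov2ObjectDetector in the workspace (for example, the output of trainYOLOv2ObjectDetector) and that cfg is the coder.gpuConfig object created in Section IV; both are assumptions, not part of the original example. We save only the detector variable so the MAT-file holds just the object that coder.loadDeepLearningNetwork should load.

```matlab
% Assumes 'detector' (new yolov2ObjectDetector) and 'cfg' (from Section IV) exist.
assert(isa(detector, 'yolov2ObjectDetector'), 'Expected a YOLO v2 detector.');
% Overwrite the MAT-file that roboSubPredict loads, saving only the detector.
save('detectorYoloV2.mat', 'detector');
% Rebuild: the network is read at compile time, so the new weights are compiled in.
codegen('-config', cfg, 'roboSubPredict', '-report');
```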

The next step is to acquire the image stream from a camera peripheral connected to the Jetson board. The GPU Coder Support Package for NVIDIA GPUs helps you do this. For this demonstration we use an NVIDIA Jetson TX2, but as mentioned above, the support package can generate code for other NVIDIA GPUs as well.

GPU Coder Support Package for NVIDIA GPUs

GPU Coder Support Package for NVIDIA GPUs automates the deployment of MATLAB algorithms on embedded NVIDIA GPUs by building and deploying the generated CUDA code onto the GPU and CPU of the target hardware board. It enables you to remotely communicate with the NVIDIA target and control the peripheral devices for prototyping. Examine the code below:

Create a Jetson object to access the board and its peripherals.

hwobj = jetson;
w = webcam(hwobj,1);
d = imageDisplay(hwobj);

Calling the jetson function creates a Jetson object that represents a connection to the Jetson board. Use this object and its methods to create objects for peripherals connected to the board, such as the webcam and imageDisplay objects shown above. For more information on the Jetson object, check out its documentation page. Use the webcam object to stream in video data and the imageDisplay object to show the output on a display device.

img = snapshot(w);   % w is the webcam object created earlier
image(d, img);       % d is the imageDisplay object
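Before generating any code, it can help to confirm from MATLAB that the camera works. The sketch below assumes a connection to the board has already succeeded (so jetson with no arguments reuses those settings) and uses imshow from Image Processing Toolbox to show frames on the host. Frames travel over the network to MATLAB in this mode, so expect a lower frame rate than the deployed application; the frame count of 50 is arbitrary.

```matlab
% Preview the Jetson camera on the host before deploying.
hwobj = jetson;            % reuse the most recent successful connection settings
w = webcam(hwobj, 1);      % first camera attached to the board
for k = 1:50               % arbitrary number of preview frames
    img = snapshot(w);     % frame is transferred to the host
    imshow(img);           % requires Image Processing Toolbox
    drawnow;
end
clear w                    % release the camera
```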

IV. Generate Code and Deploy Application

Finally, we are ready to deploy our application to the Jetson. Use a GPU Coder configuration object to configure the build as follows:

cfg = coder.gpuConfig('exe');

cfg.Hardware = coder.hardware('NVIDIA Jetson');

cfg.Hardware.BuildDir = '~/remoteBuildTest';

cfg.GenerateExampleMain = 'GenerateCodeAndCompile';

In this configuration, we instruct GPU Coder to automatically generate a main function and compile the code into an executable. You can adjust this for your application: if you need a library to integrate into another codebase, choose the appropriate build type as shown here.
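For example, a library build differs mainly in the argument to coder.gpuConfig. The sketch below is an illustration, not the configuration used in this post: it builds a static library ('dll' would give a shared library) and sets the deep learning target library explicitly with coder.DeepLearningConfig, choosing 'cudnn' as an assumption ('tensorrt' is the other option).

```matlab
% Build a static library for integration into another codebase.
cfg = coder.gpuConfig('lib');                  % 'dll' would produce a shared library
cfg.Hardware = coder.hardware('NVIDIA Jetson');
cfg.Hardware.BuildDir = '~/remoteBuildTest';
% Explicitly choose the deep learning library ('cudnn' or 'tensorrt').
cfg.DeepLearningConfig = coder.DeepLearningConfig('cudnn');
codegen('-config', cfg, 'roboSubPredict', '-report');
```

No main function is generated here, so the calling application provides its own entry point.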

Call the codegen command and generate a report to view the generated code.

codegen('-config', cfg, 'roboSubPredict', '-report');

This builds a roboSubPredict.elf executable and deploys it to the build directory on the Jetson. Navigate to that directory on the Jetson to see all the generated files.

Use the Jetson object to launch and kill the application on the Jetson from MATLAB.

hwobj.runApplication('roboSubPredict');

hwobj.killApplication('roboSubPredict');

Here is a video of our trained detector running as CUDA code on the Jetson, identifying buoys and navigation gates from a video playing on a computer screen.

To learn more and download the code used in this example visit this GitHub repository and watch this video.

Key Takeaways

I want to end on some key takeaways:

- Code generation and deployment help you get production-ready code in minutes without the hassle of debugging low-level code

- The hardware support package helps automate the build and deployment onto the Jetson

- Swap out the detector MAT-files and rebuild to generate and deploy code for a new network within minutes

- Change the build type to generate readable, editable code that can be integrated into other codebases
