Complete Pivoting and Hadamard Matrices

For several years we thought Hadamard matrices showed maximum element growth for Gaussian elimination with complete pivoting. We were wrong.

Contents

Complete Pivoting Growth Factor

I want to continue the discussion from my previous blog post of element growth during Gaussian elimination. Suppose we are solving a system of linear equations involving a matrix $A$ of order $n$. Let $a_{i,j}^{(k)}$ denote the elements in the matrix after the $k$ th step of the elimination. Recall that the growth factor for the pivoting process we are using is the quantity $$ \rho_n = { \max_{i,j,k} |a_{i,j}^{(k)}| \over \max_{i,j} |a_{i,j}| } $$ Complete pivoting is intended to control the growth factor by using both row and column interchanges at each step in the reduction to bring the largest element in the entire unreduced matrix into the pivot position. In his 1962 analysis of roundoff error in Gaussian elimination, J. H. Wilkinson proved that the growth factor for complete pivoting satisfies $$ \rho_n \le g(n) $$ where the growth function is $$ g(n) = (n \ 2 \ 3^{1/2} 4^{1/3} \cdots n^{1/(n-1)})^{1/2} \approx 1.8 \ n^{1/2} n^{1/4 \log{n}} $$ Wilkinson's analysis makes it clear that the growth can never actually be anywhere near this large. We saw that the growth factor for partial pivoting is $2^{n-1}$. For complete pivoting, it's much, much less. To get a feeling for now much, express $g(n)$ as a MATLAB one-linerg = @(n) sqrt(n*prod((2:n).^(1./(1:(n-1)))))

g =

function_handle with value:

@(n)sqrt(n*prod((2:n).^(1./(1:(n-1)))))

Check out $n = 100$

g(100)

ans = 3.5703e+03So here $g(n) \approx 35n$.

Hadamard Matrices

Jacques Hadamard was a French mathematician who lived from 1865 until 1963. He made contributions in many fields, ranging from number theory to partial differential equations. The matrices named after him have entries +1 and -1 and mutually orthogonal rows and columns. The $n$ -dimensional parallelotope spanned by the rows of a Hadamard matrix has the largest possible volume among all parallelotopes spanned by matrices with entries bounded by one. We are interested in Hadamard matrices in the MATLAB world because they are the basis for Hadamard transforms, which are closely related to Fourier transforms. They are also applicable to error correcting codes and in statistics to estimation of variance. So MATLAB already has a hadamard function. Here is the 8-by-8 Hadamard matrix.H = hadamard(8)

H =

1 1 1 1 1 1 1 1

1 -1 1 -1 1 -1 1 -1

1 1 -1 -1 1 1 -1 -1

1 -1 -1 1 1 -1 -1 1

1 1 1 1 -1 -1 -1 -1

1 -1 1 -1 -1 1 -1 1

1 1 -1 -1 -1 -1 1 1

1 -1 -1 1 -1 1 1 -1

Check that the rows are perpendicular to each other.

H'*H

ans =

8 0 0 0 0 0 0 0

0 8 0 0 0 0 0 0

0 0 8 0 0 0 0 0

0 0 0 8 0 0 0 0

0 0 0 0 8 0 0 0

0 0 0 0 0 8 0 0

0 0 0 0 0 0 8 0

0 0 0 0 0 0 0 8

The orthogonality of the rows leads to element growth during Gaussian elimination. If we call for the LU factorization of H, no pivoting actually takes places, but the same result would be produced by complete pivoting that settles ties in favor of the incumbent.

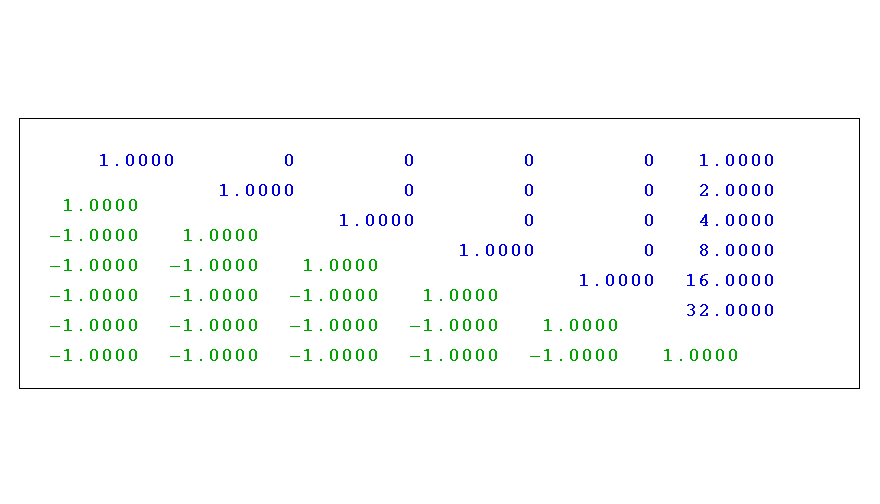

[L,U] = lu(H)

L =

1 0 0 0 0 0 0 0

1 1 0 0 0 0 0 0

1 0 1 0 0 0 0 0

1 1 1 1 0 0 0 0

1 0 0 0 1 0 0 0

1 1 0 0 1 1 0 0

1 0 1 0 1 0 1 0

1 1 1 1 1 1 1 1

U =

1 1 1 1 1 1 1 1

0 -2 0 -2 0 -2 0 -2

0 0 -2 -2 0 0 -2 -2

0 0 0 4 0 0 0 4

0 0 0 0 -2 -2 -2 -2

0 0 0 0 0 4 0 4

0 0 0 0 0 0 4 4

0 0 0 0 0 0 0 -8

This, then, is one matrix at $n = 8$ where

$$ \rho_n = n $$

Is rho(n) equal to n ?

For almost 30 years everybody believed that Hadamard matrices were the extreme cases and that the growth factor $\rho_n$ for complete pivoting with real matrices was equal to $n$. At various times in the 1960s and '70s I personally did numerical experiments looking for matrices with large pivot growth. The computers I had at the time limited the order of my matrices to a few dozen. I never found any cases of $\rho_n > n$. One of the people who worked on the problem was Leonard Tornheim at Chevron Research in California. He also did a lot of numerical experiments. He found a complex 3-by-3 matrix with $\rho_3 > 3$. He also proved that for real matrices, $\rho_3 = 2 \ 1/4$. In 1968, Colin Cryer made an official conjecture that $\rho_n$ was equal to $n$ for real matrices. A 1988 paper by Jane Day and Brian Peterson provides a good survey of what was known up to that time about element growth with complete pivoting.Nick Gould's Surprise

Then, in 1991, Nick Gould, from Oxford, surprised us all by announcing the discovery of real matrices for which the complete pivoting $\rho_n$ is greater than $n$. For an $n$ -by- $n$ matrix, with $n$ between 13 and 16, Nick set up a large, sparse, nonlinear optimization problem involving roughly $n^3/3$ variables, and tackled it with the LANCELOT package that he and his colleagues Andy Conn and Philippe Toint had developed. Most of his paper is about a 13-by-13 matrix with $$ \rho_{13} = 13.0205 $$ This just barely exceeds the $\rho_n = n$ conjecture. But he also found some slightly larger matrice that do a better job. $$ \rho_{14} = 14.5949 $$ $$ \rho_{15} = 16.1078 $$ $$ \rho_{16} = 18.0596 $$ The 16-by-16 is particularly dramatic because it is not a Hadamard matrix. So, as far as I know, this is where the matter still stands today. Nobody is interested in running larger experiments. I have no idea what $\rho_{100}$ or $\rho_{1000}$ might be. Of course, we don't really need to know because we don't use complete pivoting in practice.P^8

This reminds me of a story that I like to tell. I'm not sure it's actually true. Peter Businger was a grad student at Stanford in Math and Computer Science at the same time I was in the early '60s. He worked with Gene Golub to write the first published Algol procedure for the QR matrix factorization using Householder reflections. Peter left Stanford to join Joe Traub's group at Bell Labs that was producing what they called the Port library of mathematical software. They were importing programs from everybody else, running the routines on Bell Labs' machines, thoroughly testing the software, deciding which codes were best, and producing a consolidated library. Peter was in charge of matrix computation. He checked out all the imported linear equation solvers, including mine. He decided none of them was satisfactory, because of this pivoting growth question. So he wrote a program of his own that did partial pivoting, and monitor the growth. If the growth was too large, the program switched to complete pivoting. At the time, Bell Labs was trying to patent software, so Peter named his new subroutine "P^8". This stood for "Peter's Pseudo Perfect Partial Pivot Picker, Patent Pending". In my opinion, that is one of the great all time subroutine names. The experience inspired Peter to go to law school at night and change jobs. He switched to the Bell Labs patent department. I've lost contact with Peter, so I can't ask him if he really did name the routine P^8. If he didn't, he should have.Rook Pivoting

Les Foster, who I mentioned in the last post for finding examples of exponential growth with partial pivoting, promotes what he calls "Rook Pivoting". Search down the column for the largest element, as in partial pivoting, but then also search along that row for the last element. This is intermediate between partial and complete pivoting. Les is able to prove some results about pivot growth. But the algorithm does not generalize well to a block form.Hadamard of Order 92.

I was present at a milestone in the history of Hadamard matrices. The orthogonality conditions readily imply that the order $n$ of a Hadamard matrix must be 1, 2, or a multiple of 4. But the question remains, does a Hadamard matrix of order $n = 4k$ exist for every $k$? This is an open question in number theory. It is fairly easy to create Hadamard matrices of some sizes. A nifty way to generate a Hadamard matrix of order a power of two is the recursion.H = 1; for n = 2, 4, 8, ... H = [H H H -H]; endIf A and B are Hadamard matrices, then so is

kron(A,B)By 1961, with these and other similar constructions, it turned out that it was known how to construct Hadamard matrices for all orders $n \le 100$ that are multiples of four except $n = 92$. In 1961 I had a summer job at JPL, Caltech's Jet Propulsion Lab. My office was in a temporary trailer and my trailer mate was Len Baumert. Len proudly showed me a color graphic that he had just made and that he was proposing for the cover of Scientific American. It was a 92-by-92 matrix made up from 23-by-23 blocks of alternating light and dark cells representing +1 and -1. The graphic didn't make the cover of Scientific American, but I kept my copy for a long time. Len had done a computation on JPL's machines that filled in the last value of $n$ less than 100. His paper with his colleagues Sol Golomb, a professor at USC, and Marshall Hall, Jr., a professor at Caltech, was published in the prestigious Bulletin of the AMS. It turns out that I had taken a course on difference sets, the mathematics involved in generating the matrix, from Hall at Caltech. Here is a MATLAB picture of Baumert92. You can get the function from this link.

H = Baumert92; pcolor92(H);Let's check that we get the expected pivot growth.

[L,U] = lu(H); unn = U(end,end)

unn = 92.0000

评论

要发表评论,请点击 此处 登录到您的 MathWorks 帐户或创建一个新帐户。