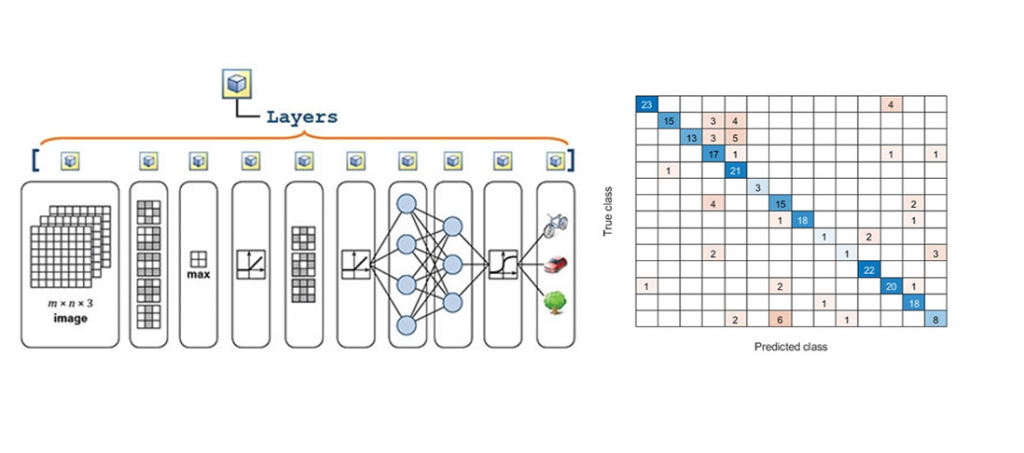

3 Trends in Deep Learning

This void between starting and finishing is where we see many engineers spend a large share of their time, on tasks such as:

- Increasing the accuracy of a model with parameter tuning.

- Converting models to C or CUDA to take advantage of speed and hardware.

- Experimenting with new network architectures for transfer learning.

Trend #1: Cloud Computing

We all know training complicated networks takes time. On top of that, techniques like Bayesian optimization, which train your network multiple times with different training parameters, can provide powerful results at a cost: more time. One way to alleviate some of this pain is to move from local resources to clusters (HPC) or the cloud. The cloud is emerging as a great resource: it provides the latest hardware and multiple GPUs at one time, and you pay for resources only when you need them.

How MATLAB helps you with this trend:

- Check out the MATLAB cloud computing page.

- See also the MATLAB-specific NVIDIA GPU Cloud (NGC) support in our documentation.

- There's also a walk-through video on how to set up MATLAB and NGC here.

- MATLAB has ONNX import and export capabilities through this support package.

- GPU Coder is the product to watch. It was named Embedded Vision’s 2018 product of the year.

- MATLAB has coder tools and support packages for various devices, including iOS, Android, and FPGAs, to name a few.

- Though not deep learning specific, I've heard App Designer now supports Web App deployment.

*Introductions: As Steve mentioned in his last post, I’ll be taking over the blog, and I’m very excited for this new challenge! For those interested, I want to introduce myself and talk about my vision for this blog. As you may have seen, I’ve been warming up for this role as a guest blogger writing about "deep learning in action" (part 1, part 2 and part 3). Prior to that, I took over the ‘pick of the week’ blog and wrote about our deep learning tutorial series. I maintain the MATLAB for Deep Learning content, and I appear in a few videos on our site from time to time.

Background: I've been at MathWorks 5 years. I started as an Application Engineer, which meant I got to travel to customer sites and present to customers, specializing in image processing and computer vision. My theory is most of you reading this have never experienced a MathWorks seminar, and I’d like that to change. I now work in marketing**: My current job is making sure everyone knows about the capabilities of the tools and how to solve their problems, and this blog fits well within this job description.

**Some people shudder when they hear the word marketing. It’s still a technical role, I just happen to also like spending time on better wording, formatting and visualizations. It’s a win-win for you. You’ll see!

Blog Vision: My vision for this blog in one word is access. I have access to insider information because I work here. My goal for this blog is to be the source of that information. I want to talk about the behind-the-scenes deep learning things that you may not see by reading the documentation. While I can’t share future plans, I can give insight, demos, developer Q&A time, and code you won’t find in the product. That’s the vision. I hope you’ll join me on this journey!

- Category:

- Deep Learning
