Using AI for Reduced-Order Modeling

Figure: The MathWorks team at our booth at NeurIPS 2022

Founded in 1987, the Conference on Neural Information Processing Systems (abbreviated as NeurIPS) is one of the most prestigious and competitive international conferences in machine learning. Last week, the MathWorks team was at NeurIPS 2022 in New Orleans for the in-person portion of the conference.

During the Expo Day at NeurIPS, I presented a talk about ‘Using AI for Reduced Order Modeling’. This blog post provides an overview of this presentation. If you are interested in learning more, check out the Slides: Using AI for Reduced-Order Modeling.

Figure: Presentation on Using AI for Reduced-Order Modeling at NeurIPS 2022

Additionally, we had many interesting interactions at the booth on deep learning, reinforcement learning, and interoperability between MATLAB and Python®. My colleagues Drew and Naren developed an excellent reinforcement learning demo that showcases learning directly from hardware by using a Quanser QUBE™-Servo 2 and Reinforcement Learning Toolbox.

What is ROM and why use it

If you are an engineer or have ever worked on an engineering problem, you have probably tried to explain the behavior of a system using first principles. In such situations, you must understand the system’s physics to derive a mathematical representation. The real value of a first-principles model is that its results typically have a clear, explainable physical meaning. In addition, behaviors can often be parameterized.

However, high-fidelity nonlinear models can take hours or even days to simulate, and system analysis and design might require thousands or even hundreds of thousands of simulations to obtain meaningful results. This poses a significant computational challenge for many engineering teams. Moreover, linearizing complex models can produce high-fidelity models that include detail that does not contribute to the dynamics of interest in your application. In these situations, AI-based reduced-order models can significantly speed up simulation and analysis of higher-order, large-scale systems.

Reduced-order modeling (ROM) is a technique for reducing the computational complexity or storage requirements of a computer model while preserving the expected fidelity within a controlled error. Engineers and scientists use ROM techniques to:

- Speed up system-level desktop simulation

- Perform hardware-in-the-loop testing

- Enable system-level simulation

- Develop virtual sensors and digital twins

- Perform control design

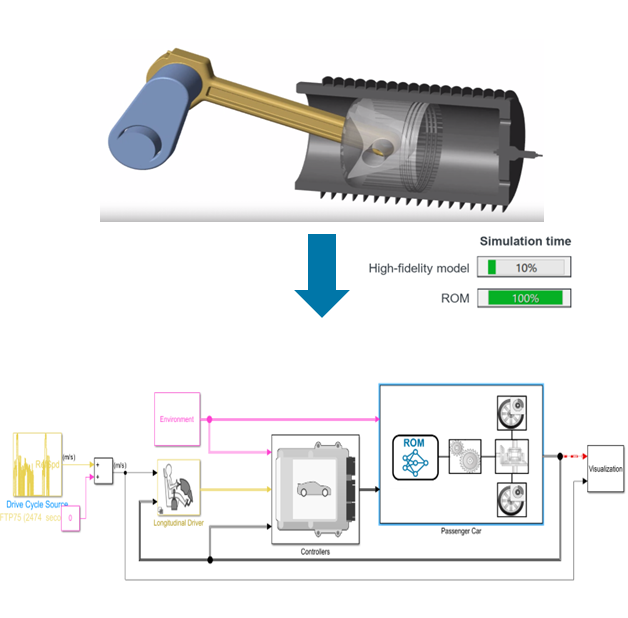

Figure: The simulation of a reduced-order model is significantly faster than the simulation of a high-fidelity model.
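To make the speed-versus-fidelity trade-off concrete, here is a minimal Python sketch. It is not the model from the talk: the two-state system, its time constants, and the function names are assumptions chosen purely for illustration. The "full" model contains a fast state that forces a tiny solver step, while the reduced model replaces that state with its steady-state value and can therefore take much larger steps.

```python
# Toy illustration only: time constants and model structure are assumed,
# not taken from the talk.
TAU_SLOW = 1.0    # slow time constant (s), dominates the response
TAU_FAST = 0.01   # fast time constant (s), forces a tiny solver step


def simulate_full(u, t_end, dt):
    """Two-state stand-in for a high-fidelity model (explicit Euler)."""
    x_slow, x_fast = 0.0, 0.0
    steps = int(round(t_end / dt))
    for _ in range(steps):
        x_slow += dt * (u - x_slow) / TAU_SLOW
        x_fast += dt * (u - x_fast) / TAU_FAST
    return 0.9 * x_slow + 0.1 * x_fast, steps


def simulate_reduced(u, t_end, dt):
    """One-state reduced model: the fast state is replaced by its
    steady-state value (x_fast = u), so a larger step is stable."""
    x_slow = 0.0
    steps = int(round(t_end / dt))
    for _ in range(steps):
        x_slow += dt * (u - x_slow) / TAU_SLOW
    return 0.9 * x_slow + 0.1 * u, steps


# Same constant input and time span; only the model and step size differ.
y_full, n_full = simulate_full(u=1.0, t_end=2.0, dt=0.001)
y_red, n_red = simulate_reduced(u=1.0, t_end=2.0, dt=0.05)
error = abs(y_full - y_red)

print(f"full model:    y = {y_full:.4f} after {n_full} steps")
print(f"reduced model: y = {y_red:.4f} after {n_red} steps")
print(f"absolute error at t_end: {error:.4f}")
```

In this sketch the reduced model needs far fewer solver steps, and its output at the end of the interval stays close to the full model. The price is that it ignores the fast transient, so its error is largest right after the input changes. Whether that is acceptable depends on the dynamics of interest in your application.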

AI-based reduced-order modeling

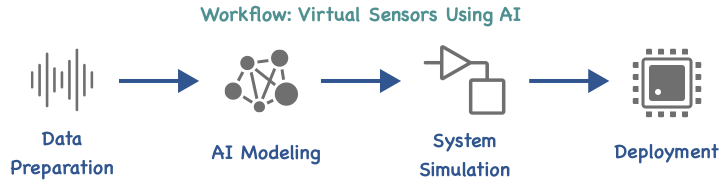

AI enables you to create a model from measured data of your component, so you can get accurate, dynamic answers at low cost. We can’t always build good analytical models of things in the real world. Sometimes the theory or the technology isn’t there. For example, estimating a motor’s internal temperature is challenging because no cost-efficient sensor can measure it, and methods like FEA and lumped thermal models are either very slow or require domain expertise to set up.

Even if you already have a high-fidelity first-principles model, you can use data-driven techniques to create a surrogate model that is potentially simpler and simulates faster. A faster but comparably accurate model can help you make progress as you design, test, and deploy your system.

For this talk, I focused on replacing an existing high-fidelity first-principles model with an AI-based reduced-order model. To create such a reduced-order model, you can follow the steps of an AI-driven system design workflow: data preparation, AI modeling, system simulation, and deployment.

- Virtual XCU Calibration with Neural Networks

- Building Better Engines with AI

- Using Deep Learning Networks to Estimate NOX Emissions
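The first three workflow steps described in this section (data preparation, modeling, and simulation) can be sketched in a few lines of Python. This is a toy under stated assumptions, not the vehicle engine example from the talk. The "high-fidelity" model is a made-up two-state system, and a two-parameter least-squares fit stands in for the neural networks discussed here. All function names and constants are hypothetical.

```python
import math
import random

# Assumed constants for a toy system (not from the talk).
TAU_FAST, TAU_SLOW = 0.01, 1.0   # time constants (s)
DT_FINE = 0.001                  # small step forced by the fast state
SUBSTEPS = 50                    # fine steps per surrogate sample (0.05 s)


def high_fidelity(u_samples):
    """Stand-in first-principles model: a fast lag feeding a slow plant."""
    x_fast = x_slow = 0.0
    y = [x_slow]
    for u in u_samples:
        for _ in range(SUBSTEPS):
            x_fast += DT_FINE * (u - x_fast) / TAU_FAST
            x_slow += DT_FINE * (x_fast - x_slow) / TAU_SLOW
        y.append(x_slow)
    return y  # one more output sample than inputs


def make_input(n_levels, hold, seed):
    """Data preparation: piecewise-constant excitation with random levels."""
    rng = random.Random(seed)
    u = []
    for _ in range(n_levels):
        u.extend([rng.random()] * hold)
    return u


def fit_surrogate(u, y):
    """Modeling: least-squares fit of y[k+1] = a*y[k] + b*u[k],
    solved through the 2x2 normal equations."""
    syy = sum(yk * yk for yk in y[:-1])
    syu = sum(yk * uk for yk, uk in zip(y, u))
    suu = sum(uk * uk for uk in u)
    sy1y = sum(y1 * yk for y1, yk in zip(y[1:], y))
    sy1u = sum(y1 * uk for y1, uk in zip(y[1:], u))
    det = syy * suu - syu * syu
    a = (sy1y * suu - sy1u * syu) / det
    b = (syy * sy1u - syu * sy1y) / det
    return a, b


def simulate_surrogate(a, b, u_samples):
    """Simulation: one cheap update per sample, no inner fine-step loop."""
    y = [0.0]
    for u in u_samples:
        y.append(a * y[-1] + b * u)
    return y


# Train on synthetic data generated by the high-fidelity model.
u_train = make_input(n_levels=40, hold=20, seed=0)
a, b = fit_surrogate(u_train, high_fidelity(u_train))

# Validate on an input sequence the surrogate has not seen.
u_val = make_input(n_levels=20, hold=20, seed=1)
y_ref = high_fidelity(u_val)
y_hat = simulate_surrogate(a, b, u_val)
rmse = math.sqrt(sum((r - h) ** 2 for r, h in zip(y_ref, y_hat)) / len(y_ref))

print(f"a = {a:.4f}, b = {b:.4f}, validation RMSE = {rmse:.4f}")
```

The surrogate has one state instead of two and performs one update per sample instead of fifty, which is the kind of saving a reduced-order model targets. A linear fit works here only because the toy system is linear. For nonlinear dynamics such as an engine, you would swap in a more expressive model such as an LSTM or a neural state-space model, and validate it in the same way on held-out data.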

Conclusion

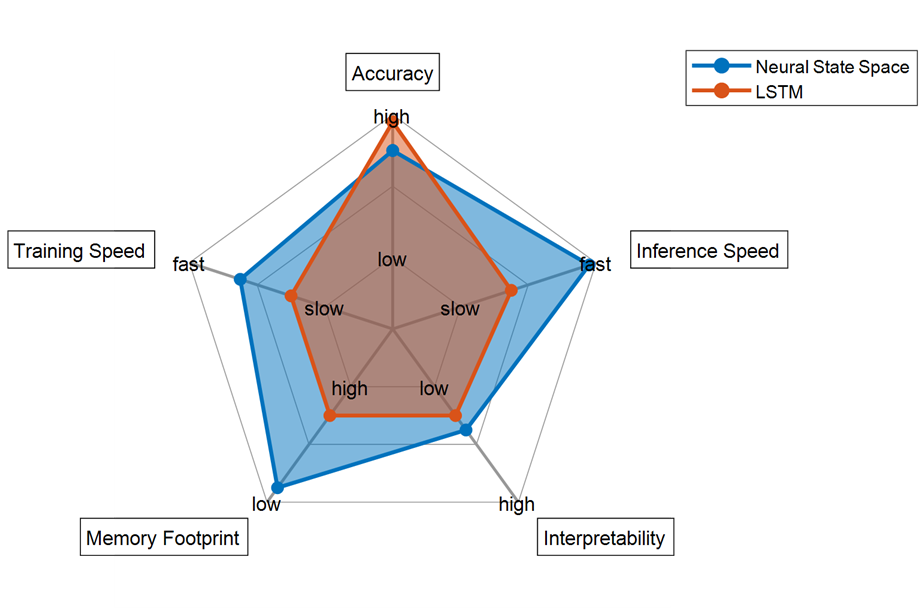

It was truly exciting to attend NeurIPS 2022 and have the opportunity to share with the deep learning community how AI can be used for reduced-order modeling.

Before wrapping up the session, I went back to the AI-based reduced-order models I had trained and compared them across several attributes. A comparison like this lets you evaluate design tradeoffs against your specific requirements. As the radar plot shows, the LSTM model provides slightly better accuracy, but the neural state-space model outperforms the LSTM in every other attribute.

Figure: Radar plot highlighting different attributes of the trained LSTM and Neural State Space models. Note that the results shown in this plot are specific to this vehicle engine example.

In summary, you can use AI to create a reduced-order model that replaces part of the complex dynamics of a vehicle engine. Using data synthetically generated from the original first-principles model, you can train AI models using various techniques (LSTMs, Neural ODEs, NLARX models, etc.) to mimic the behavior of the vehicle engine. You can then integrate such an AI model into Simulink for system-level simulation (together with the rest of the first-principles components), generate C/C++ code, and perform hardware-in-the-loop (HIL) testing.

Leave a comment with anything you’d like to chat about related to AI for Reduced Order Modeling. And don’t forget to look at the Slides: Using AI for Reduced-Order Modeling, which provide details on how to use AI for ROM at every stage of a complete system!
