Adaptive VR adjusts to your vision, even if you wear glasses

Virtual reality (VR) is a hot topic among tech enthusiasts. With initial success in the gaming and entertainment markets, VR is now finding new applications in everything from real estate to healthcare. Some estimates predict VR will be a $160M market by 2020.

But VR can be hard on the eyes and has been linked to eyestrain and headaches. Because it takes a “one-size-fits-all” approach, it can be especially hard on people who wear corrective lenses. Based on data from the Vision Council of America, that’s approximately 4 billion adults who could find VR extra challenging.

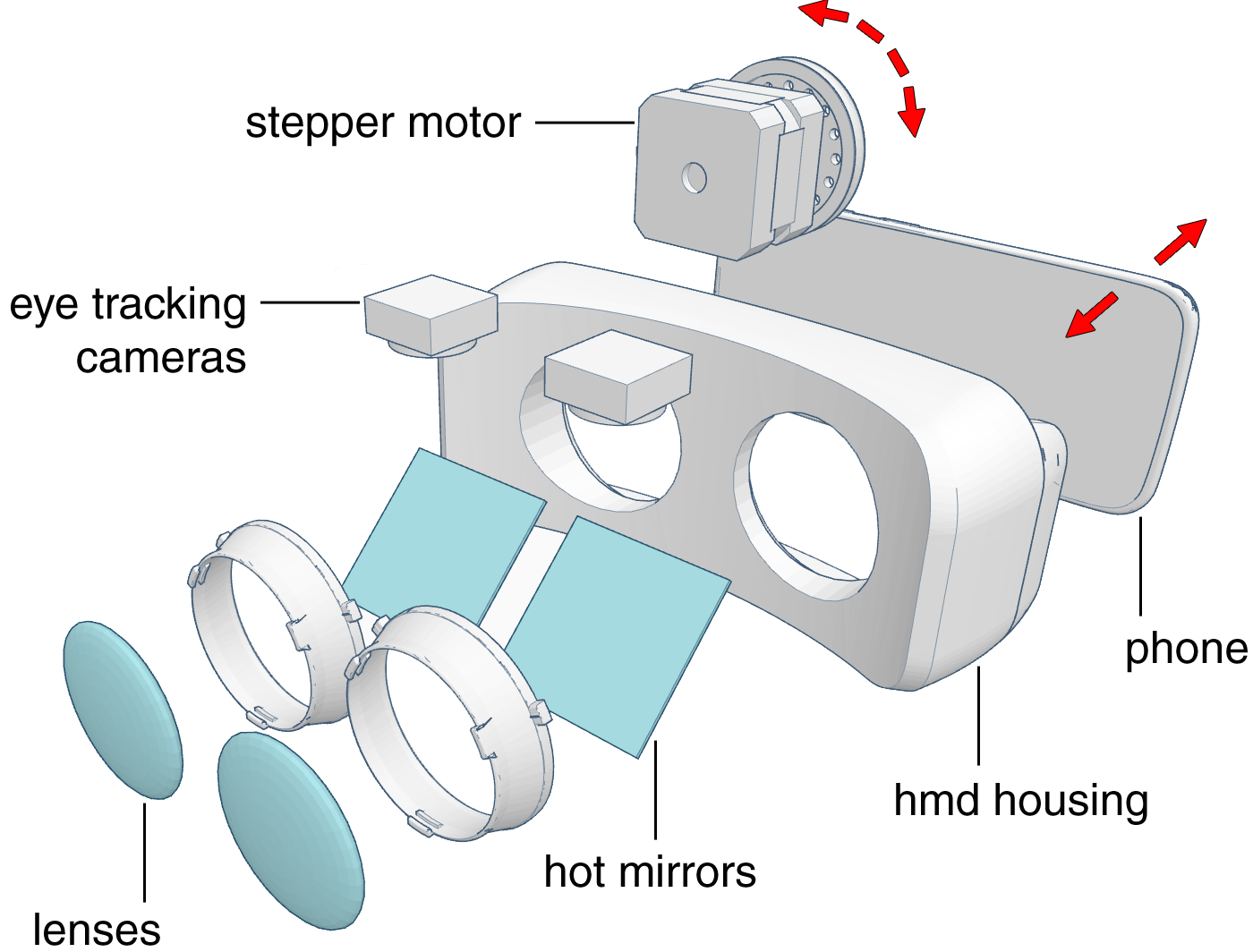

A conventional near-eye display (Samsung Gear VR) is augmented by a gaze tracker and a motor that is capable of adjusting the physical distance between screen and lenses. Image credit: Stanford University.

The problem for many VR consumers is that headset displays are a fixed distance from your eyes and are designed to work best for people with perfect vision. To address this, researchers at Stanford’s Computational Imaging Lab, working with a scientist from the Department of Psychological and Brain Sciences at Dartmouth College, published research that shows how VR headsets can be designed to adapt to the differences in eyesight.

“If you wear glasses, or know someone who does, this technology could make virtual reality far easier for them to use,” stated Digital Trends.

Adapting the VR experience

The research paper, “Optimizing virtual reality for all users through gaze-contingent and adaptive focus displays,” was published in the Proceedings of the National Academy of Sciences (PNAS). It details how the researchers created optocomputational technology that adapts the focal plane for VR users based on their unique vision requirements.

“Every person needs a different optical mode to get the best possible experience in VR,” said Gordon Wetzstein, assistant professor of electrical engineering and senior author of the paper.

The researchers developed and tested two distinct hardware approaches. The software then adapted the hardware to provide the best viewing environment.

Liquid-filled tunable lenses

First, they developed a hardware system that utilizes focus-tunable lenses. The tunable lenses were liquid-filled lenses that change in thickness to vary their focal power. This happens in real time while the user is viewing the VR content.

“The lenses are driven by the same computer that controls the displayed images, allowing for precise temporal synchronization between the virtual image distance and the onscreen content,” per the paper in PNAS.

This tunable lens approach used a special table-mounted system, shown in the image below. The team used an autorefractor to measure each user’s vision prescription. The liquid-filled lenses were then adjusted in real time to obtain the optimum VR viewing experience. The study found that VR-corrected vision provided sharpness comparable to what the users achieved when wearing their glasses, showing they could make full use of VR without their corrective lenses.

The benchtop setup was designed to incorporate adaptive focus via tunable, liquid-filled lenses. Image credit: Stanford University in PNAS.
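The prescription-based adjustment can be illustrated with a thin-lens calculation. This is a minimal sketch, not the researchers’ implementation: it assumes an ideal thin lens, ignores the distance between lens and eye, handles only the spherical part of a prescription, and uses hypothetical function names and example values.

```python
import math


def vergence(distance_m):
    """Return the dioptric equivalent (1/m) of a distance; infinity maps to 0."""
    return 0.0 if math.isinf(distance_m) else 1.0 / distance_m


def tunable_lens_power(display_distance_m, virtual_image_distance_m,
                       spherical_rx_diopters=0.0):
    """Lens power (diopters) that puts the display's virtual image where the
    user would see it through glasses of the given spherical prescription.

    Thin-lens assumption: light leaves the lens with vergence
    -1/display_distance + power. Adding the prescription to the lens power
    stands in for the corrective lens the user is not wearing.
    """
    return (vergence(display_distance_m)
            - vergence(virtual_image_distance_m)
            + spherical_rx_diopters)


# Illustrative values only: display 5 cm from the lens, content rendered at 1 m.
no_correction = tunable_lens_power(0.05, 1.0)          # about 19 D
nearsighted = tunable_lens_power(0.05, 1.0, -2.0)      # about 17 D
print(no_correction, nearsighted)
```

In this sketch a nearsighted user with a -2 diopter prescription simply gets 2 diopters less lens power, which moves the virtual image closer, into the range that user can focus on without glasses.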

Dynamic focus for moving objects in VR

When an object moves in depth – closer to or farther from us – our eyes naturally adjust to focus on the object. With VR headsets, the focus plane is a set distance from our eyes. The difference between the object’s distance, near or far, and the set focal plane can cause eye strain.
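The size of that mismatch is commonly expressed in diopters, the reciprocal of distance in meters. The short sketch below shows the arithmetic; the function name and the 2-meter focal plane are illustrative assumptions, not values from the paper.

```python
def focus_mismatch_diopters(object_distance_m, focal_plane_distance_m):
    """Absolute difference, in diopters, between where a virtual object
    appears to be and where the headset's fixed focal plane sits."""
    return abs(1.0 / object_distance_m - 1.0 / focal_plane_distance_m)


# Assumed fixed focal plane at 2 m; a virtual object approaches from 2 m to 0.5 m.
for distance_m in (2.0, 1.0, 0.5):
    print(distance_m, focus_mismatch_diopters(distance_m, 2.0))
```

The mismatch grows quickly as objects come close: nothing at 2 m, 0.5 diopters at 1 m, and 1.5 diopters at 0.5 m.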

The researchers’ second hardware approach used a mechanically actuated display to account for objects’ movement relative to the focal plane. Instead of fixing the focus at the predetermined distance between the lenses and the VR screen, this approach moved the VR screen to change that distance. It also used eye-tracking cameras to monitor where the user was looking.

A schematic showing the basic components of a gaze-contingent prototype. The stepper motor mounted on top rotates based on position reported from the integrated eye tracker and software, moving the phone back and forth (red arrows). Image credit: Stanford University.

This approach did not require the benchtop setup, but rather made use of a commercially available VR headset. The team modified a Samsung Gear VR by adding a stereoscopic eye tracker and a motor that mechanically adjusts the distance between the screen and the magnifying lenses in real time.
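The gaze-contingent loop can be sketched in two steps: estimate how far away the user is looking from the angle between the two eyes’ lines of sight, then solve the thin-lens equation for the screen position that places the virtual image at that distance. This is a simplified sketch under stated assumptions (symmetric fixation straight ahead, an ideal thin lens, and a hypothetical 50 mm focal length); the function names are illustrative and are not taken from the prototype’s software.

```python
import math


def fixation_distance_m(ipd_m, vergence_angle_rad):
    """Estimate fixation distance from the angle between the two gaze rays.

    Assumes the user looks straight ahead, so each eye rotates inward by
    half the vergence angle. Parallel gaze rays mean optical infinity.
    """
    if vergence_angle_rad <= 0.0:
        return math.inf
    return (ipd_m / 2.0) / math.tan(vergence_angle_rad / 2.0)


def screen_distance_m(lens_focal_length_m, virtual_image_distance_m):
    """Screen-to-lens distance that places the virtual image at the target.

    Thin-lens relation for a magnifier: 1/screen = 1/focal + 1/image.
    """
    image_vergence = (0.0 if math.isinf(virtual_image_distance_m)
                      else 1.0 / virtual_image_distance_m)
    return 1.0 / (1.0 / lens_focal_length_m + image_vergence)


# Illustrative values: 64 mm interpupillary distance, 50 mm lens (assumed).
angle = 2.0 * math.atan(0.032 / 0.5)        # eyes converged on a point 0.5 m away
target = fixation_distance_m(0.064, angle)  # about 0.5 m
print(target, screen_distance_m(0.05, target))
```

With these assumed values the screen sits at the focal length (50 mm) for distant content and moves roughly 4.5 mm closer to the lens when the user fixates at 0.5 m, which suggests why a small motor can cover the useful range.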

The “computational” in optocomputational

The systems rely on software to adjust the hardware’s operation in real time. Much of the software running the experiment was written in C++, using OpenGL and hardware libraries. The data analysis and plotting were done in MATLAB. This included reading the CSV files output by the autorefractor plus the files output by the study-running software.

“The exact nature of the data analysis we did was Fourier gain analysis of the measured user data, with some thresholding and error rejection,” stated Nitish Padmanaban, the paper’s lead author and a Ph.D. student at Stanford. “We then combined it with demographic data to produce a set of struct arrays, which we variously slice and filter to plot data for only the desired demographic. From there we run statistics on the differences in populations using, depending on the nature of the data, the ranksum() and signrank() Wilcoxon tests, corr() for Pearson correlation, or a binomial test.”
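To give a sense of what a Fourier gain analysis involves, the sketch below compares the amplitude of a measured response with the amplitude of the stimulus at the stimulus frequency. It is an illustration in Python rather than the team’s MATLAB code, it omits the thresholding and error rejection the quote mentions (their details are not given), and the signal values and function names are made up for the example.

```python
import cmath
import math


def dft_coefficient(samples, freq_hz, sample_rate_hz):
    """Single-frequency DFT coefficient of a mean-removed signal."""
    n = len(samples)
    mean = sum(samples) / n
    return sum(
        (x - mean) * cmath.exp(-2j * math.pi * freq_hz * k / sample_rate_hz)
        for k, x in enumerate(samples)
    ) / n


def fourier_gain(stimulus, response, freq_hz, sample_rate_hz):
    """Ratio of response amplitude to stimulus amplitude at freq_hz.

    A gain of 1 means the response followed the stimulus fully;
    a gain near 0 means it barely responded.
    """
    stim = dft_coefficient(stimulus, freq_hz, sample_rate_hz)
    resp = dft_coefficient(response, freq_hz, sample_rate_hz)
    if abs(stim) == 0.0:
        raise ValueError("stimulus has no energy at the requested frequency")
    return abs(resp) / abs(stim)


# Synthetic example: a 0.1 Hz stimulus sampled at 10 Hz for two full cycles,
# and a lagging response with 60 percent of the stimulus amplitude.
rate_hz, freq_hz = 10.0, 0.1
times = [k / rate_hz for k in range(200)]
stimulus = [2.0 + 1.0 * math.sin(2 * math.pi * freq_hz * t) for t in times]
response = [1.8 + 0.6 * math.sin(2 * math.pi * freq_hz * t - 0.5) for t in times]
print(fourier_gain(stimulus, response, freq_hz, rate_hz))  # approximately 0.6
```

Because the example covers a whole number of stimulus cycles, the single-frequency estimate recovers the amplitude ratio regardless of the phase lag; real recordings would need windowing or trimming to whole cycles before the gain is meaningful.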

Making the virtual world a little easier on the eyes

These VR display prototypes adapt to the eyes of each user. This can make VR more comfortable for everyone, regardless of age or eyesight.

“It’s important because people who are nearsighted, farsighted or presbyopic – these three groups alone – they account for more than 50 percent of the U.S. population,” said Robert Konrad, one of the paper’s authors and a Ph.D. candidate at Stanford. “The point is that we can essentially try to tune this into every individual person to give each person the best experience.”

This research has caught the attention of commercial VR providers, giving hope that someday we could all enjoy a personalized VR experience. VR will no longer be a “one-size-fits-all” technology. Imagine, it could be possible to have better vision in the virtual world than in the real one.
