What’s New in Automated Driving in MATLAB and Simulink?

MATLAB and Simulink Release 2019b is a major release for automotive features. This article focuses on the automated driving highlights, namely the 3D simulation features. These tools can be a great help when designing perception systems and control algorithms for automated driving or active safety. For a more complete overview of the latest features, I recommend checking this list of R2019b features, or jumping into this section if you are only interested in automotive applications.

3D Simulation Environment

Developing and tuning control algorithms for active safety or automated driving applications requires either a massive amount of logged sensor data or a virtual development environment. Today, both are required as one approach can’t fully replace the other.

In the first method, sensor data is gathered while driving on real roads. Simply put, this data is then replayed into the control algorithm. The amount of data needed grows with the demands on algorithm robustness and safety, so you want to cover a sufficient number of roads under varying conditions. Alternatively, you may rely on a virtualized workflow, where conditions can be varied in software, typically with a lot less effort.

The basic ingredients for developing perception systems virtually are these:

- A virtual environment infrastructure

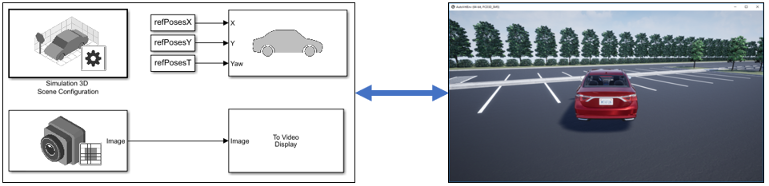

Simulink integrates and co-simulates with Unreal Engine 4 by ©Epic Games and allows you to conduct simulations in a photorealistic 3D environment.

- Virtual sensor models

Consider these sensors as pieces of software that generate signals equivalent to those of actual sensors, but from a virtual scene. With R2019b, a complete set of virtual sensors is now available, including camera, lidar and radar.

- Virtual scenes

Automated Driving Toolbox provides straight and curved roads, parking lots, a typical US city block and a highway. Mcity, a proving ground operated by the University of Michigan, is also among the scenes. It goes without saying that you may create your own custom scene with the Unreal Editor using a support package.

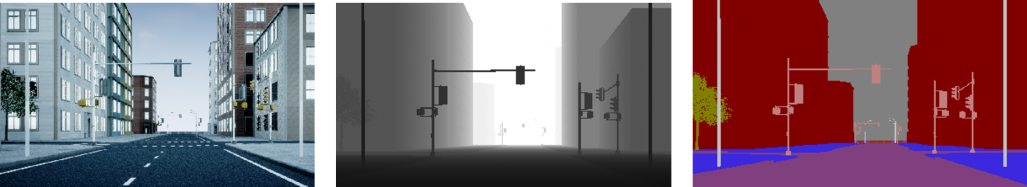

Camera Sensor

The Simulation 3D Camera sensor provides not only RGB image data of the world but also a depth map and labelled information. Possible outputs are shown below, namely an RGB image (left), a depth map whose grayscales represent distance (center) and the output of semantic segmentation (right). There are two types of cameras available, one with a standard focal length and one with a fisheye lens. Both come with a distortion model which you can calibrate to represent custom cameras.

The depth map can be compared to what a stereo camera would output after post-processing. You may have used a depth-sensing camera before, such as a Kinect in conjunction with your Xbox. Stereo vision as a concept aims to recover depth information from camera images by comparing two or more views of the same scene.

The technique of semantic segmentation associates each pixel of an image with a class label, such as road, sky, traffic sign, car or pedestrian. To be able to assign labels, a semantic segmentation network needs to be trained with example data, e.g. using deep learning.

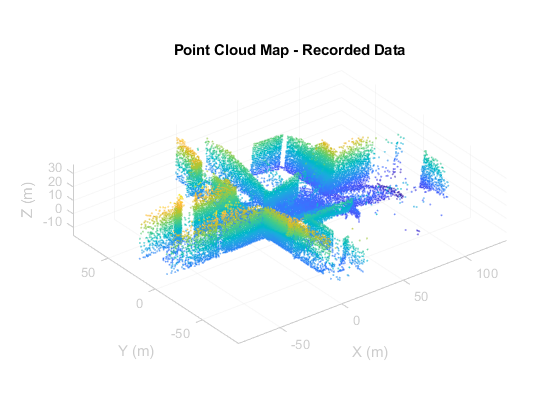
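To make the stereo idea more tangible, here is a minimal sketch of how depth follows from disparity in a rectified stereo pair, using the standard relation depth = focal length × baseline / disparity. It is a plain Python illustration and not the Simulation 3D Camera implementation; the function names, the 800-pixel focal length and the 0.5 m baseline are assumptions made up for this example.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Return the depth in meters for one pixel of a rectified stereo pair."""
    if disparity_px <= 0:
        # No measurable shift between the two views: treat the point as infinitely far away.
        return float("inf")
    return focal_length_px * baseline_m / disparity_px


def depth_map(disparity_rows, focal_length_px, baseline_m):
    """Convert a 2D list of disparities (pixels) into a 2D list of depths (meters)."""
    return [
        [depth_from_disparity(d, focal_length_px, baseline_m) for d in row]
        for row in disparity_rows
    ]


# Hypothetical camera parameters, chosen only for illustration.
FOCAL_LENGTH_PX = 800.0
BASELINE_M = 0.5

disparities = [
    [40.0, 20.0],
    [10.0, 0.0],
]
depths = depth_map(disparities, FOCAL_LENGTH_PX, BASELINE_M)

for row in depths:
    print(row)
```

Large disparities map to nearby objects and small disparities to distant ones, which is why a depth map is usually rendered in grayscale as in the center image.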

Lidar Sensor

Lidar sensors, which are sometimes called laser scanners, allow you to measure the distance to a target by illuminating the target with laser light and measuring the reflected light with a sensor. The output of a 3D lidar is a point cloud, namely a set of data points in space, that gets updated based on the horizontal resolution of the device. (There are 2D lidars as well, but automated driving applications mainly use 3D lidars.) Find an example point cloud in the illustration below.

This example is a good starting point to explore the concept of developing a perception algorithm based on virtual lidar sensor data. As a side note, allow me to link two more examples showing what you can do with lidar in terms of tracking and map building: Track Vehicles Using Lidar and Build a Map from Lidar Data.
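If you are new to point clouds, the following sketch shows how individual lidar returns (range plus beam angles) turn into Cartesian points, and how the horizontal resolution determines the number of beams per revolution. It is plain Python for illustration, not the Simulation 3D Lidar block; the axis convention (x forward, y left, z up) and all function names are assumptions.

```python
import math


def lidar_returns_to_points(returns):
    """Convert (range_m, azimuth_deg, elevation_deg) returns into (x, y, z) points.

    Assumed convention: x forward, y left, z up, angles in degrees.
    """
    points = []
    for range_m, azimuth_deg, elevation_deg in returns:
        az = math.radians(azimuth_deg)
        el = math.radians(elevation_deg)
        horizontal = range_m * math.cos(el)  # projection onto the ground plane
        points.append((
            horizontal * math.cos(az),
            horizontal * math.sin(az),
            range_m * math.sin(el),
        ))
    return points


def azimuth_steps(horizontal_resolution_deg):
    """Number of azimuth samples in one full 360-degree sweep."""
    return round(360.0 / horizontal_resolution_deg)


# Three made-up returns: straight ahead, to the left, and straight up.
returns = [(10.0, 0.0, 0.0), (10.0, 90.0, 0.0), (10.0, 0.0, 90.0)]
cloud = lidar_returns_to_points(returns)
steps = azimuth_steps(0.5)

for point in cloud:
    print(tuple(round(c, 3) for c in point))
print(steps)
```

A finer horizontal resolution means more azimuth steps per sweep and therefore a denser point cloud.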

Application Example

To conclude this section about 3D virtual environments and sensor models, I recommend checking out this example of a closed-loop control model called Lane-Following Control with Monocular Camera Perception, where virtual sensor data is used to control a car in a realistic driving situation.

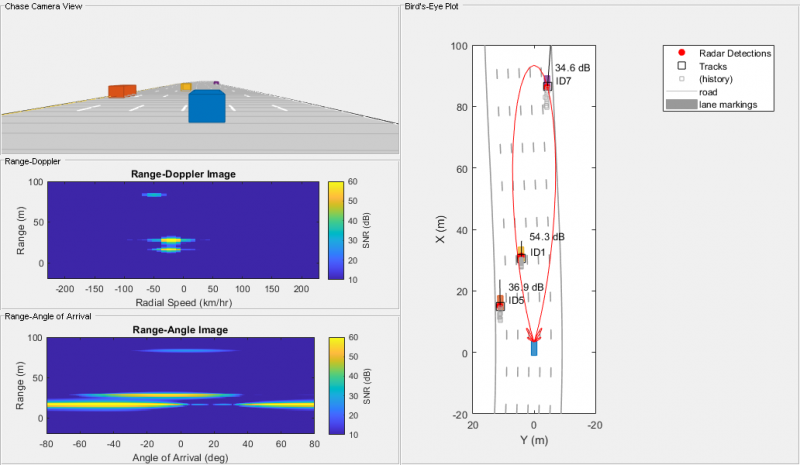

Driving Scenario Designer

The above examples use Unreal Engine 4, which by itself is quite demanding in terms of compute resources. If you are in the early stages of automated driving development, you may prefer a simpler and faster environment. Typical use cases would be evaluating and comparing sensor configurations or algorithmic concepts. This is where the Driving Scenario Designer comes into play. Among MathWorks staff, the tool is typically called ‘cuboid world’ because actors are represented in a simplified manner as cuboids. Find below an example that shows how to model a radar’s hardware, signal processing, and propagation environment, and the cuboids of course (see top left area).

The beauty of the tool comes from its simplicity. You may create road and actor models using a drag-and-drop interface. You may also import OpenDRIVE® data if it is available for your desired scenarios. For safety-critical applications, such as emergency braking, emergency lane keeping, and lane keep assist systems, a library of prebuilt scenarios representing European New Car Assessment Programme (Euro NCAP®) test protocols is available.
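To illustrate why a cuboid representation is often enough for comparing sensor configurations, here is a small conceptual sketch. It is plain Python and does not use the Driving Scenario Designer or any Automated Driving Toolbox API; the `Cuboid` and `Sensor` classes, the actor positions and the sensor parameters are all hypothetical, and detection is reduced to a range and field-of-view check on the actor's center.

```python
import math
from dataclasses import dataclass


@dataclass
class Cuboid:
    name: str
    x: float       # center position in meters, ego frame, x forward
    y: float       # center position in meters, ego frame, y left
    length: float  # meters
    width: float   # meters
    height: float  # meters


@dataclass
class Sensor:
    name: str
    max_range_m: float
    fov_deg: float  # total horizontal field of view, centered on the ego x-axis


def detects(sensor, actor):
    """True if the actor's center lies within the sensor's range and field of view."""
    distance = math.hypot(actor.x, actor.y)
    bearing_deg = math.degrees(math.atan2(actor.y, actor.x))
    return distance <= sensor.max_range_m and abs(bearing_deg) <= sensor.fov_deg / 2


# Made-up scenario: three actors around the ego vehicle at the origin.
actors = [
    Cuboid("lead car", 40.0, 0.0, 4.7, 1.8, 1.4),
    Cuboid("cut-in car", 10.0, 3.5, 4.7, 1.8, 1.4),
    Cuboid("far truck", 120.0, 0.0, 8.2, 2.5, 3.5),
]

# Two made-up sensor configurations to compare.
sensors = [
    Sensor("long-range radar", 150.0, 20.0),
    Sensor("wide camera", 60.0, 90.0),
]

coverage = {
    sensor.name: [actor.name for actor in actors if detects(sensor, actor)]
    for sensor in sensors
}

for sensor_name, seen in coverage.items():
    print(sensor_name, "->", seen)
```

Even this crude model shows the trade-off between a narrow long-range sensor and a wide short-range one, which is the kind of question you would explore in much more detail with the actual tool.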

Simulink Integration

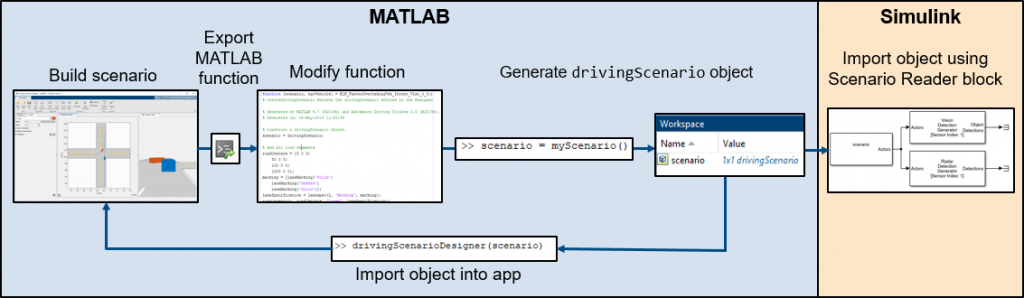

Find below a flowchart of how you would use Driving Scenario Designer in conjunction with Simulink.

Application Example

In keeping with the spirit of this blog post, I am also linking an example here where you can try out the functionality. It is called “Test Closed-Loop ADAS Algorithm Using Driving Scenario”.

Conclusion

Overall, I hope you found this blog post interesting and relevant for your work. Since automated driving is a huge topic, we welcome your comments and guidance on what we should cover in the future.

Thanks, and Best,

Christoph

